30. Occupational Hygiene

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Goals, Definitions and General Information

Berenice I. Ferrari Goelzer

Recognition of Hazards

Linnéa Lillienberg

Evaluation of the Work Environment

Lori A. Todd

Occupational Hygiene: Control of Exposures Through Intervention

James Stewart

The Biological Basis for Exposure Assessment

Dick Heederik

Occupational Exposure Limits

Dennis J. Paustenbach

Tables

1. Hazards of chemical, biological and physical agents

2. Occupational exposure limits (OELs) - various countries

Figures

Goals, Definitions and General Information

Work is essential for life, development and personal fulfilment. Unfortunately, indispensable activities such as food production, extraction of raw materials, manufacturing of goods, energy production and services involve processes, operations and materials which can, to a greater or lesser extent, create hazards to the health of workers and those in nearby communities, as well as to the general environment.

However, the generation and release of harmful agents in the work environment can be prevented through adequate hazard control interventions, which not only protect workers’ health but also limit the damage to the environment often associated with industrialization. If a harmful chemical is eliminated from a work process, it will neither affect the workers nor escape to pollute the environment.

The profession that aims specifically at the prevention and control of hazards arising from work processes is occupational hygiene. The goals of occupational hygiene include the protection and promotion of workers’ health, the protection of the environment and contribution to a safe and sustainable development.

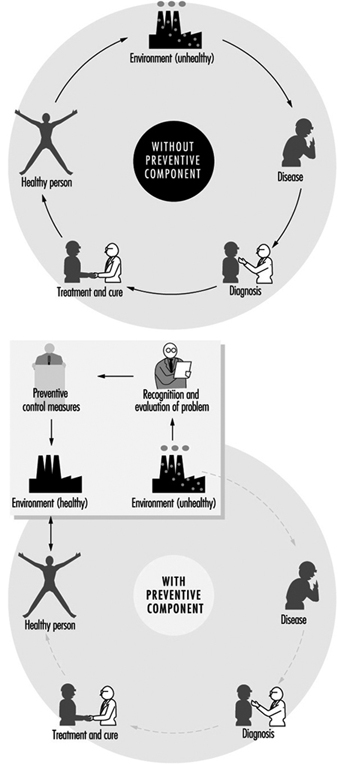

The need for occupational hygiene in the protection of workers’ health cannot be overemphasized. Even when feasible, the diagnosis and the cure of an occupational disease will not prevent further occurrences, if exposure to the aetiological agent does not cease. So long as the unhealthy work environment remains unchanged, its potential to impair health remains. Only the control of health hazards can break the vicious circle illustrated in figure 1.

Figure 1. Interactions between people and the environment

However, preventive action should start much earlier, not only before the manifestation of any health impairment but even before exposure actually occurs. The work environment should be under continuous surveillance so that hazardous agents and factors can be detected and removed, or controlled, before they cause any ill effects; this is the role of occupational hygiene.

Furthermore, occupational hygiene may also contribute to a safe and sustainable development, that is, “to ensure that (development) meets the needs of the present without compromising the ability of the future generations to meet their own needs” (World Commission on Environment and Development 1987). Meeting the needs of the present world population without depleting or damaging the global resource base, and without causing adverse health and environmental consequences, requires knowledge and means to influence action (WHO 1992a); where work processes are concerned, this is closely linked to occupational hygiene practice.

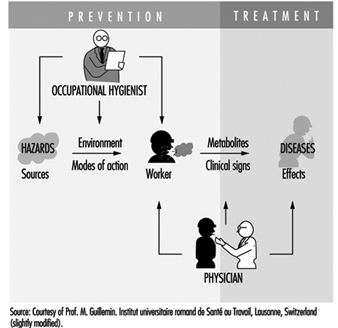

Occupational health requires a multidisciplinary approach and involves fundamental disciplines, one of which is occupational hygiene, along with others which include occupational medicine and nursing, ergonomics and work psychology. A schematic representation of the scopes of action for occupational physicians and occupational hygienists is presented in figure 2.

Figure 2. Scopes of action for occupational physicians and occupational hygienists.

It is important that decision makers, managers and workers themselves, as well as all occupational health professionals, understand the essential role that occupational hygiene plays in the protection of workers’ health and of the environment, as well as the need for specialized professionals in this field. The close link between occupational and environmental health should also be kept in mind, since the prevention of pollution from industrial sources, through the adequate handling and disposal of hazardous effluents and waste, should be started at the workplace level. (See “Evaluation of the work environment”).

Concepts and Definitions

Occupational hygiene

Occupational hygiene is the science of the anticipation, recognition, evaluation and control of hazards arising in or from the workplace, and which could impair the health and well-being of workers, also taking into account the possible impact on the surrounding communities and the general environment.

Definitions of occupational hygiene may be presented in different ways; however, they all have essentially the same meaning and aim at the same fundamental goal of protecting and promoting the health and well-being of workers, as well as protecting the general environment, through preventive actions in the workplace.

Occupational hygiene is not yet universally recognized as a profession; however, in many countries, framework legislation is emerging that will lead to its establishment.

Occupational hygienist

An occupational hygienist is a professional able to:

- anticipate the health hazards that may result from work processes, operations and equipment, and accordingly advise on their planning and design

- recognize and understand, in the work environment, the occurrence (real or potential) of chemical, physical and biological agents and other stresses, and their interactions with other factors, which may affect the health and well-being of workers

- understand the possible routes of agent entry into the human body, and the effects that such agents and other factors may have on health

- assess workers’ exposure to potentially harmful agents and factors, and evaluate the results

- evaluate work processes and methods, from the point of view of the possible generation and release/propagation of potentially harmful agents and other factors, with a view to eliminating exposures, or reducing them to acceptable levels

- design, recommend for adoption, and evaluate the effectiveness of control strategies, alone or in collaboration with other professionals to ensure effective and economical control

- participate in overall risk analysis and management of an agent, process or workplace, and contribute to the establishment of priorities for risk management

- understand the legal framework for occupational hygiene practice in their own country

- educate, train, inform and advise persons at all levels, in all aspects of hazard communication

- work effectively in a multidisciplinary team involving other professionals

- recognize agents and factors that may have environmental impact, and understand the need to integrate occupational hygiene practice with environmental protection.

It should be kept in mind that a profession consists not only of a body of knowledge, but also of a Code of Ethics; national occupational hygiene associations, as well as the International Occupational Hygiene Association (IOHA), have their own Codes of Ethics (WHO 1992b).

Occupational hygiene technician

An occupational hygiene technician is “a person competent to carry out measurements of the work environment” but not “to make the interpretations, judgements, and recommendations required from an occupational hygienist”. The necessary level of competence may be obtained in a comprehensive or limited field (WHO 1992b).

International Occupational Hygiene Association (IOHA)

IOHA was formally established during a meeting in Montreal on June 2, 1987. At present IOHA has the participation of 19 national occupational hygiene associations, with over 19,000 members from 17 countries.

The primary objective of IOHA is to promote and develop occupational hygiene throughout the world, at a high level of professional competence, through means that include the exchange of information among organizations and individuals, the further development of human resources and the promotion of a high standard of ethical practice. IOHA activities include scientific meetings and publication of a newsletter. Members of affiliated associations are automatically members of IOHA; it is also possible to join as an individual member, for those in countries where there is not yet a national association.

Certification

In addition to an accepted definition of occupational hygiene and of the role of the occupational hygienist, there is a need for the establishment of certification schemes to ensure acceptable standards of occupational hygiene competence and practice. Certification refers to a formal scheme based on procedures for establishing and maintaining knowledge, skills and competence of professionals (Burdorf 1995).

IOHA has promoted a survey of existing national certification schemes (Burdorf 1995), together with recommendations for the promotion of international cooperation in assuring the quality of professional occupational hygienists, which include the following:

- “the harmonization of standards on the competence and practice of professional occupational hygienists”

- “the establishment of an international body of peers to review the quality of existing certification schemes”.

Other suggestions in this report include items such as: “reciprocity” and “cross-acceptance of national designations, ultimately aiming at an umbrella scheme with one internationally accepted designation”.

The Practice of Occupational Hygiene

The classical steps in occupational hygiene practice are:

- the recognition of the possible health hazards in the work environment

- the evaluation of hazards, which is the process of assessing exposure and reaching conclusions as to the level of risk to human health

- prevention and control of hazards, which is the process of developing and implementing strategies to eliminate, or reduce to acceptable levels, the occurrence of harmful agents and factors in the workplace, while also accounting for environmental protection.

The ideal approach to hazard prevention is “anticipated and integrated preventive action”, which should include:

- occupational health and environmental impact assessments, prior to the design and installation of any new workplace

- selection of the safest, least hazardous and least polluting technology (“cleaner production”)

- environmentally appropriate location

- proper design, with adequate layout and appropriate control technology, including for the safe handling and disposal of the resulting effluents and waste

- elaboration of guidelines and regulations for training on the correct operation of processes, including on safe work practices, maintenance and emergency procedures.

The importance of anticipating and preventing all types of environmental pollution cannot be overemphasized. There is, fortunately, an increasing tendency to consider new technologies from the point of view of the possible negative impacts and their prevention, from the design and installation of the process to the handling of the resulting effluents and waste, in the so-called cradle-to-grave approach. Environmental disasters, which have occurred in both developed and developing countries, could have been avoided by the application of appropriate control strategies and emergency procedures in the workplace.

Economic aspects should be viewed in broader terms than the usual initial cost consideration; more expensive options that offer good health and environmental protection may prove to be more economical in the long run. The protection of workers’ health and of the environment must start much earlier than it usually does. Technical information and advice on occupational and environmental hygiene should always be available to those designing new processes, machinery, equipment and workplaces. Unfortunately such information is often made available much too late, when the only solution is costly and difficult retrofitting, or worse, when consequences have already been disastrous.

Recognition of hazards

Recognition of hazards is a fundamental step in the practice of occupational hygiene, indispensable for the adequate planning of hazard evaluation and control strategies, as well as for the establishment of priorities for action. For the adequate design of control measures, it is also necessary to physically characterize contaminant sources and contaminant propagation paths.

The recognition of hazards leads to the determination of:

- which agents may be present and under which circumstances

- the nature and possible extent of associated adverse effects on health and well-being.

The identification of hazardous agents, their sources and the conditions of exposure requires extensive knowledge and careful study of work processes and operations, raw materials and chemicals used or generated, final products and eventual by-products, as well as of possibilities for the accidental formation of chemicals, decomposition of materials, combustion of fuels or the presence of impurities. The recognition of the nature and potential magnitude of the biological effects that such agents may cause if overexposure occurs requires knowledge of, and access to, toxicological information. International sources of information in this respect include the International Programme on Chemical Safety (IPCS), the International Agency for Research on Cancer (IARC) and the International Register of Potentially Toxic Chemicals, United Nations Environment Programme (UNEP-IRPTC).

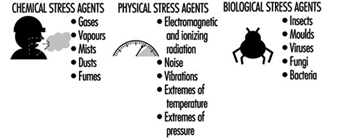

Agents which pose health hazards in the work environment include airborne contaminants; non-airborne chemicals; physical agents, such as heat and noise; biological agents; ergonomic factors, such as inadequate lifting procedures and working postures; and psychosocial stresses.

Occupational hygiene evaluations

Occupational hygiene evaluations are carried out to assess workers’ exposure, as well as to provide information for the design, or to test the efficiency, of control measures.

Evaluation of workers’ exposure to occupational hazards, such as airborne contaminants, physical and biological agents, is covered elsewhere in this chapter. Nevertheless, some general considerations are provided here for a better understanding of the field of occupational hygiene.

It is important to keep in mind that hazard evaluation is not an end in itself, but must be considered as part of a much broader procedure that starts with the realization that a certain agent, capable of causing health impairment, may be present in the work environment, and concludes with the control of this agent so that it will be prevented from causing harm. Hazard evaluation paves the way to, but does not replace, hazard prevention.

Exposure assessment

Exposure assessment aims at determining how much of an agent workers have been exposed to, how often and for how long. Guidelines in this respect have been established both at the national and international level—for example, EN 689, prepared by the Comité Européen de Normalisation (European Committee for Standardization) (CEN 1994).

In the evaluation of exposure to airborne contaminants, the most usual procedure is the assessment of inhalation exposure, which requires the determination of the air concentration of the agent to which workers are exposed (or, in the case of airborne particles, the air concentration of the relevant fraction, e.g., the “respirable fraction”) and the duration of the exposure. However, if routes other than inhalation contribute appreciably to the uptake of a chemical, an erroneous judgement may be made by looking only at the inhalation exposure. In such cases, total exposure has to be assessed, and a very useful tool for this is biological monitoring.
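
As an illustration of how air concentration and duration combine into a single inhalation exposure figure, the sketch below computes a time-weighted average. The 8-hour reference period, the function name and the treatment of unsampled time as zero exposure are assumptions made for this example only; they are not prescribed by the text above.

```python
from typing import Sequence, Tuple


def time_weighted_average(
    samples: Sequence[Tuple[float, float]],
    reference_hours: float = 8.0,  # assumed reference period
) -> float:
    """Return the time-weighted average concentration.

    samples: (concentration, duration_in_hours) pairs for consecutive
    periods of the shift. Time not covered by any sample is treated as
    zero exposure, which is an assumption of this sketch.
    """
    if reference_hours <= 0:
        raise ValueError("reference_hours must be positive")
    total_duration = sum(duration for _, duration in samples)
    if total_duration > reference_hours:
        raise ValueError("sampled time exceeds the reference period")
    # Weight each concentration by the time spent at that concentration.
    return sum(conc * duration for conc, duration in samples) / reference_hours


# Hypothetical shift: 2 h at 10 mg/m3, 4 h at 4 mg/m3, 2 h unexposed.
twa = time_weighted_average([(10.0, 2.0), (4.0, 4.0), (0.0, 2.0)])
```

A figure of this kind reflects inhalation only; where skin absorption or ingestion contributes appreciably, it understates total uptake.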

The practice of occupational hygiene is concerned with three kinds of situations:

- initial studies to assess workers’ exposure

- follow-up monitoring/surveillance

- exposure assessment for epidemiological studies.

A primary reason for determining whether there is overexposure to a hazardous agent in the work environment is to decide whether interventions are required. This often, but not necessarily, means establishing whether there is compliance with an adopted standard, which is usually expressed in terms of an occupational exposure limit. The determination of the “worst exposure” situation may be enough to fulfil this purpose. Indeed, if exposures are expected to be either very high or very low in relation to accepted limit values, the accuracy and precision of quantitative evaluations can be lower than when the exposures are expected to be closer to the limit values. In fact, when hazards are obvious, it may be wiser to invest resources initially on controls and to carry out more precise environmental evaluations after controls have been implemented.

Follow-up evaluations are often necessary, particularly if the need existed to install or improve control measures or if changes in the processes or materials utilized were foreseen. In these cases, quantitative evaluations have an important surveillance role in:

- evaluating the adequacy, testing the efficiency or disclosing possible failures in the control systems

- detecting whether alterations in the processes, such as operating temperature, or in the raw materials, have altered the exposure situation.

Whenever an occupational hygiene survey is carried out in connection with an epidemiological study in order to obtain quantitative data on relationships between exposure and health effects, the exposure must be characterized with a high level of accuracy and precision. In this case, all exposure levels must be adequately characterized, since it would not be enough, for example, to characterize only the worst case exposure situation. It would be ideal, although difficult in practice, to always keep precise and accurate exposure assessment records since there may be a future need to have historical exposure data.

In order to ensure that evaluation data is representative of workers’ exposure, and that resources are not wasted, an adequate sampling strategy, accounting for all possible sources of variability, must be designed and followed. Sampling strategies, as well as measurement techniques, are covered in “Evaluation of the work environment”.

Interpretation of results

The degree of uncertainty in the estimation of an exposure parameter, for example, the true average concentration of an airborne contaminant, is determined through statistical treatment of the results from measurements (e.g., sampling and analysis). The level of confidence in the results will depend on the coefficient of variation of the “measuring system” and on the number of measurements. Once there is an acceptable confidence, the next step is to consider the health implications of the exposure: what does it mean for the health of the exposed workers now, in the near future and over their working life, and will there be an impact on future generations?
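
The dependence of confidence on the coefficient of variation and on the number of measurements can be sketched as follows. This is a minimal illustration, not a recommended statistical procedure: it assumes independent measurements and uses a normal approximation for the interval, which tends to be too narrow for small samples. The function name is hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev
from typing import Sequence, Tuple


def mean_with_interval(
    measurements: Sequence[float], confidence: float = 0.95
) -> Tuple[float, float, Tuple[float, float]]:
    """Return (mean, coefficient of variation, approximate confidence interval).

    Assumes independent measurements and a normal approximation; both are
    simplifications made for this sketch.
    """
    n = len(measurements)
    if n < 2:
        raise ValueError("at least two measurements are needed")
    m = mean(measurements)
    s = stdev(measurements)  # sample standard deviation
    cv = s / m               # coefficient of variation
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    half_width = z * s / math.sqrt(n)  # shrinks as n grows
    return m, cv, (m - half_width, m + half_width)
```

For a fixed coefficient of variation, the half-width falls in proportion to the square root of the number of measurements, so quadrupling the number of samples roughly halves the uncertainty in the estimated mean.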

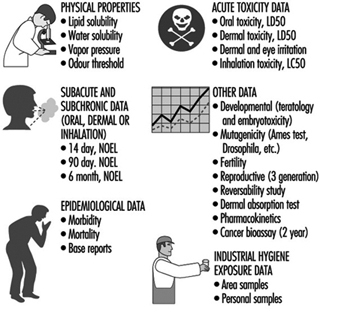

The evaluation process is only completed when results from measurements are interpreted in view of data (sometimes referred to as “risk assessment data”) derived from experimental toxicology, epidemiological and clinical studies and, in certain cases, clinical trials. It should be clarified that the term risk assessment has been used in connection with two types of assessments—the assessment of the nature and extent of risk resulting from exposure to chemicals or other agents, in general, and the assessment of risk for a particular worker or group of workers, in a specific workplace situation.

In the practice of occupational hygiene, exposure assessment results are often compared with adopted occupational exposure limits which are intended to provide guidance for hazard evaluation and for setting target levels for control. Exposure in excess of these limits requires immediate remedial action by the improvement of existing control measures or implementation of new ones. In fact, preventive interventions should be made at the “action level”, which varies with the country (e.g., one-half or one-fifth of the occupational exposure limit). A low action level is the best assurance of avoiding future problems.
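
The comparison described above can be written as a small decision rule. The sketch below is illustrative only: the function name, the returned labels and the default action fraction of one-half are assumptions, and the applicable action level depends on the country.

```python
def classify_exposure(
    concentration: float,
    oel: float,
    action_fraction: float = 0.5,  # e.g. 0.5 or 0.2, depending on the country
) -> str:
    """Compare a measured exposure with an occupational exposure limit (OEL).

    Returns one of three labels (hypothetical names for this sketch):
    "exceeds_limit", "action_level" or "below_action_level".
    """
    if oel <= 0:
        raise ValueError("oel must be positive")
    if not 0 < action_fraction <= 1:
        raise ValueError("action_fraction must be in (0, 1]")
    if concentration > oel:
        return "exceeds_limit"       # immediate remedial action
    if concentration >= action_fraction * oel:
        return "action_level"        # preventive intervention
    return "below_action_level"
```

With a limit of 100 units, a measured exposure of 30 would be classed as below the action level under a one-half rule but at the action level under a one-fifth rule, which shows why a lower action level offers a wider safety margin.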

Comparison of exposure assessment results with occupational exposure limits is a simplification, since, among other limitations, many factors which influence the uptake of chemicals (e.g., individual susceptibilities, physical activity and body build) are not accounted for by this procedure. Furthermore, in most workplaces there is simultaneous exposure to many agents; hence a very important issue is that of combined exposures and agent interactions, because the health consequences of exposure to a certain agent alone may differ considerably from the consequences of exposure to this same agent in combination with others, particularly if there is synergism or potentiation of effects.
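
One widely used screening convention for simultaneous exposures, not described in the text above and introduced here only as an assumption, treats agents with similar health effects as additive and sums the ratios of each concentration to its own limit. The sketch below shows that calculation; it cannot capture the synergism or potentiation just mentioned, so a result below 1 is not evidence of safety.

```python
from typing import Iterable, Tuple


def additive_mixture_index(exposures: Iterable[Tuple[float, float]]) -> float:
    """Sum of concentration/limit ratios for agents assumed to act additively.

    exposures: (concentration, exposure_limit) pairs, each pair in the
    same units. Additivity is an assumption of this sketch; synergistic
    or potentiating interactions are not represented.
    """
    total = 0.0
    for concentration, limit in exposures:
        if limit <= 0:
            raise ValueError("exposure limits must be positive")
        total += concentration / limit
    return total


# Two hypothetical agents, each below its own limit.
index = additive_mixture_index([(50.0, 100.0), (30.0, 50.0)])
```

In this hypothetical case each agent is individually below its limit, yet the combined index exceeds 1, which illustrates why agent-by-agent comparison can be misleading.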

Measurements for control

Measurements with the purpose of investigating the presence of agents and the patterns of exposure parameters in the work environment can be extremely useful for the planning and design of control measures and work practices. The objectives of such measurements include:

- source identification and characterization

- spotting of critical points in closed systems or enclosures (e.g., leaks)

- determination of propagation paths in the work environment

- comparison of different control interventions

- verification that respirable dust has settled together with the coarse visible dust, when using water sprays

- checking that contaminated air is not coming from an adjacent area.

Direct-reading instruments are extremely useful for control purposes, particularly those which can be used for continuous sampling and reflect what is happening in real time, thus disclosing exposure situations which might not otherwise be detected and which need to be controlled. Examples of such instruments include: photo-ionization detectors, infrared analysers, aerosol meters and detector tubes. When sampling to obtain a picture of the behaviour of contaminants, from the source throughout the work environment, accuracy and precision are not as critical as they would be for exposure assessment.

Recent developments in this type of measurement for control purposes include visualization techniques, one of which is the Picture Mix Exposure—PIMEX (Rosen 1993). This method combines a video image of the worker with a scale showing airborne contaminant concentrations, which are continuously measured, at the breathing zone, with a real-time monitoring instrument, thus making it possible to visualize how the concentration varies while the task is performed. This provides an excellent tool for comparing the relative efficacy of different control measures, such as ventilation and work practices, thus contributing to better design.

Measurements are also needed to assess the efficiency of control measures. In this case, source sampling or area sampling are convenient, alone or in addition to personal sampling, for the assessment of workers’ exposure. In order to assure validity, the locations for “before” and “after” sampling (or measurements) and the techniques used should be the same, or equivalent, in sensitivity, accuracy and precision.
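
A simple way to express the result of such “before” and “after” measurements is the percentage reduction in mean concentration. The sketch below assumes that both sets of measurements were taken at the same locations with equivalent techniques, as required above; the function name and the use of arithmetic means are choices made for this example.

```python
from statistics import mean
from typing import Sequence


def percent_reduction(before: Sequence[float], after: Sequence[float]) -> float:
    """Percentage reduction in mean concentration after a control measure.

    Assumes 'before' and 'after' were measured at the same locations with
    equivalent sensitivity, accuracy and precision.
    """
    if not before or not after:
        raise ValueError("both measurement sets must be non-empty")
    mean_before = mean(before)
    if mean_before <= 0:
        raise ValueError("mean 'before' concentration must be positive")
    return 100.0 * (mean_before - mean(after)) / mean_before


# Hypothetical area samples around a source, before and after installing
# local exhaust ventilation.
reduction = percent_reduction([8.0, 10.0, 6.0], [2.0, 1.0, 3.0])
```

A negative result would indicate that concentrations rose after the intervention, which is itself a finding worth investigating.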

Hazard prevention and control

The primary goal of occupational hygiene is the implementation of appropriate hazard prevention and control measures in the work environment. Standards and regulations, if not enforced, are meaningless for the protection of workers’ health, and enforcement usually requires both monitoring and control strategies. The absence of legally established standards should not be an obstacle to the implementation of the necessary measures to prevent harmful exposures or control them to the lowest level feasible. When serious hazards are obvious, control should be recommended, even before quantitative evaluations are carried out. It may sometimes be necessary to change the classical concept of “recognition-evaluation-control” to “recognition-control-evaluation”, or even to “recognition-control”, if capabilities for evaluation of hazards do not exist. Some examples of hazards in obvious need of action without the necessity of prior environmental sampling are electroplating carried out in an unventilated, small room, or using a jackhammer or sand-blasting equipment with no environmental controls or protective equipment. For such recognized health hazards, the immediate need is control, not quantitative evaluation.

Preventive action should in some way interrupt the chain by which the hazardous agent—a chemical, dust, a source of energy—is transmitted from the source to the worker. There are three major groups of control measures: engineering controls, work practices and personal measures.

The most efficient hazard prevention approach is the application of engineering control measures which prevent occupational exposures by managing the work environment, thus decreasing the need for initiatives on the part of workers or potentially exposed persons. Engineering measures usually require some process modifications or mechanical structures, and involve technical measures that eliminate or reduce the use, generation or release of hazardous agents at their source. When source elimination is not possible, engineering measures should be designed to prevent or reduce the spread of hazardous agents into the work environment by:

- containing them

- removing them immediately beyond the source

- interfering with their propagation

- reducing their concentration or intensity.

Control interventions which involve some modification of the source are the best approach because the harmful agent can be eliminated or reduced in concentration or intensity. Source reduction measures include substitution of materials, substitution/modification of processes or equipment and better maintenance of equipment.

When source modifications are not feasible, or are not sufficient to attain the desired level of control, then the release and dissemination of hazardous agents in the work environment should be prevented by interrupting their transmission path through measures such as isolation (e.g., closed systems, enclosures), local exhaust ventilation, barriers and shields, and isolation of workers.

Other measures aiming at reducing exposures in the work environment include adequate workplace design, dilution or displacement ventilation, good housekeeping and adequate storage. Labelling and warning signs can assist workers in safe work practices. Monitoring and alarm systems may be required in a control programme. Monitors for carbon monoxide around furnaces, for hydrogen sulphide in sewage work, and for oxygen deficiency in closed spaces are some examples.

Work practices are an important part of control. In some jobs, for example, the worker’s posture can affect exposure, such as when a worker bends over his or her work. The position of the worker may affect the conditions of exposure (e.g., breathing zone in relation to contaminant source, possibility of skin absorption).

Lastly, occupational exposure can be avoided or reduced by placing a protective barrier on the worker, at the critical entry point for the harmful agent in question (mouth, nose, skin, ear)—that is, the use of personal protective devices. It should be pointed out that all other possibilities of control should be explored before considering the use of personal protective equipment, as this is the least satisfactory means for routine control of exposures, particularly to airborne contaminants.

Other personal preventive measures include education and training, personal hygiene and limitation of exposure time.

Continuous evaluations, through environmental monitoring and health surveillance, should be part of any hazard prevention and control strategy.

Appropriate control technology for the work environment must also encompass measures for the prevention of environmental pollution (air, water, soil), including adequate management of hazardous waste.

Although most of the control principles hereby mentioned apply to airborne contaminants, many are also applicable to other types of hazards. For example, a process can be modified to produce fewer air contaminants or to produce less noise or less heat. An isolating barrier can isolate workers from a source of noise, heat or radiation.

Far too often prevention dwells on the most widely known measures, such as local exhaust ventilation and personal protective equipment, without proper consideration of other valuable control options, such as alternative cleaner technologies, substitution of materials, modification of processes, and good work practices. It often happens that work processes are regarded as unchangeable when, in reality, changes can be made which effectively prevent or at least reduce the associated hazards.

Hazard prevention and control in the work environment requires knowledge and ingenuity. Effective control does not necessarily require very costly and complicated measures. In many cases, hazard control can be achieved through appropriate technology, which can be as simple as a piece of impervious material between the naked shoulder of a dock worker and a bag of toxic material that can be absorbed through the skin. It can also consist of simple improvements such as placing a movable barrier between an ultraviolet source and a worker, or training workers in safe work practices.

Aspects to be considered when selecting appropriate control strategies and technology include the type of hazardous agent (nature, physical state, health effects, routes of entry into the body), type of source(s), magnitude and conditions of exposure, characteristics of the workplace and relative location of workstations.

The required skills and resources for the correct design, implementation, operation, evaluation and maintenance of control systems must be ensured. Systems such as local exhaust ventilation must be evaluated after installation and routinely checked thereafter. Only regular monitoring and maintenance can ensure continued efficiency, since even well-designed systems may lose their initial performance if neglected.

Control measures should be integrated into hazard prevention and control programmes, with clear objectives and efficient management, involving multidisciplinary teams made up of occupational hygienists and other occupational health and safety staff, production engineers, management and workers. Programmes must also include aspects such as hazard communication, education and training covering safe work practices and emergency procedures.

Health promotion aspects should also be included, since the workplace is an ideal setting for promoting healthy life-styles in general and for warning of the dangers of hazardous non-occupational exposures caused, for example, by shooting without adequate protection, or by smoking.

The Links among Occupational Hygiene, Risk Assessment and Risk Management

Risk assessment

Risk assessment is a methodology that aims at characterizing the types of health effects expected as a result of a certain exposure to a given agent, as well as providing estimates on the probability of occurrence of these health effects, at different levels of exposure. It is also used to characterize specific risk situations. It involves hazard identification, the establishment of exposure-effect relationships, and exposure assessment, leading to risk characterization.

The first step refers to the identification of an agent—for example, a chemical—as causing a harmful health effect (e.g., cancer or systemic poisoning). The second step establishes how much exposure causes how much of a given effect in how many of the exposed persons. This knowledge is essential for the interpretation of exposure assessment data.

Exposure assessment is part of risk assessment, both when obtaining data to characterize a risk situation and when obtaining data for the establishment of exposure-effect relationships from epidemiological studies. In the latter case, the exposure that led to a certain occupational or environmentally caused effect has to be accurately characterized to ensure the validity of the correlation.

Although risk assessment is fundamental to many decisions which are taken in the practice of occupational hygiene, it has limited effect in protecting workers’ health, unless translated into actual preventive action in the workplace.

Risk assessment is a dynamic process, as new knowledge often discloses harmful effects of substances until then considered relatively harmless; therefore the occupational hygienist must have, at all times, access to up-to-date toxicological information. Another implication is that exposures should always be controlled to the lowest feasible level.

Figure 3 is presented as an illustration of different elements of risk assessment.

Figure 3. Elements of risk assessment.

Risk management in the work environment

It is not always feasible to eliminate all agents that pose occupational health risks because some are inherent to work processes that are indispensable or desirable; however, risks can and must be managed.

Risk assessment provides a basis for risk management. However, while risk assessment is a scientific procedure, risk management is more pragmatic, involving decisions and actions that aim at preventing, or reducing to acceptable levels, the occurrence of agents which may pose hazards to the health of workers, surrounding communities and the environment, also accounting for the socio-economic and public health context.

Risk management takes place at different levels; decisions and actions taken at the national level pave the way for the practice of risk management at the workplace level.

Risk management at the workplace level requires information and knowledge on:

- health hazards and their magnitude, identified and rated according to risk assessment findings

- legal requirements and standards

- technological feasibility, in terms of the available and applicable control technology

- economic aspects, such as the costs to design, implement, operate and maintain control systems, and cost-benefit analysis (control costs versus the financial benefits gained by controlling occupational and environmental hazards)

- human resources (available and required)

- socio-economic and public health context

to serve as a basis for decisions which include:

- establishment of a target for control

- selection of adequate control strategies and technologies

- establishment of priorities for action in view of the risk situation, as well as of the existing socio-economic and public health context (particularly important in developing countries)

and which should lead to actions such as:

- identification of, or search for, financial and human resources (if not yet available)

- design of specific control measures, which should be appropriate for the protection of workers’ health and of the environment, as well as safeguarding as much as possible the natural resource base

- implementation of control measures, including provisions for adequate operation, maintenance and emergency procedures

- establishment of a hazard prevention and control programme with adequate management and including routine surveillance.

Traditionally, the profession responsible for most of these decisions and actions in the workplace is occupational hygiene.
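The step of rating hazards and setting priorities for action can be sketched as a simple ranking. This is only a minimal illustration: the scoring rule (severity multiplied by exposure, each on a 1 to 4 scale), the class name `HazardRating` and the example hazards and ratings are all hypothetical, and real rating schemes differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardRating:
    """One hazard, rated on two hypothetical 1-4 scales."""
    name: str
    severity: int  # 1 = minor effect ... 4 = life-threatening (assumed scale)
    exposure: int  # 1 = negligible ... 4 = heavy, uncontrolled (assumed scale)

    def __post_init__(self):
        # Reject ratings outside the assumed scale.
        for value in (self.severity, self.exposure):
            if not 1 <= value <= 4:
                raise ValueError("ratings must be between 1 and 4")

    @property
    def score(self) -> int:
        # Hypothetical scoring rule: severity times exposure.
        return self.severity * self.exposure


def prioritize(hazards):
    """Return hazards ordered from highest to lowest priority.

    Ties on score are broken in favour of the more severe effect.
    """
    return sorted(hazards, key=lambda h: (h.score, h.severity), reverse=True)


# Made-up survey findings, for illustration only.
hazards = [
    HazardRating("solvent degreasing", severity=3, exposure=2),
    HazardRating("silica dust, sandblasting", severity=4, exposure=4),
    HazardRating("office lighting", severity=1, exposure=2),
    HazardRating("noise, press shop", severity=3, exposure=3),
]

ranked = prioritize(hazards)
for h in ranked:
    print(f"{h.score:>2}  {h.name}")
```

A ranking of this kind is only an aid to judgement; legal requirements, feasibility and the socio-economic context listed above still have to be weighed by the people taking the decision.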

One key decision in risk management, that of acceptable risk (what effect can be accepted, in what percentage of the working population, if any at all?), is usually, but not always, taken at the national policy-making level and followed by the adoption of occupational exposure limits and the promulgation of occupational health regulations and standards. This leads to the establishment of targets for control, usually at the workplace level by the occupational hygienist, who should have knowledge of the legal requirements. However, it may happen that decisions on acceptable risk have to be taken by the occupational hygienist at the workplace level—for example, in situations when standards are not available or do not cover all potential exposures.

All these decisions and actions must be integrated into a realistic plan, which requires multidisciplinary and multisectorial coordination and collaboration. Although risk management involves pragmatic approaches, its efficiency should be scientifically evaluated. Unfortunately, risk management actions are, in most cases, a compromise between what should be done to avoid any risk and the best that can be done in practice, in view of financial and other limitations.

Risk management concerning the work environment and the general environment should be well coordinated; not only are there overlapping areas, but, in most situations, the success of one is interlinked with the success of the other.

Occupational Hygiene Programmes and Services

Political will and decision making at the national level will, directly or indirectly, influence the establishment of occupational hygiene programmes or services, either at the governmental or private level. It is beyond the scope of this article to provide detailed models for all types of occupational hygiene programmes and services; however, there are general principles that are applicable to many situations and may contribute to their efficient implementation and operation.

A comprehensive occupational hygiene service should have the capability to carry out adequate preliminary surveys, sampling, measurements and analysis for hazard evaluation and for control purposes, and to recommend control measures, if not to design them.

Key elements of a comprehensive occupational hygiene programme or service are human and financial resources, facilities, equipment and information systems, well organized and coordinated through careful planning, under efficient management, and also involving quality assurance and continuous programme evaluation. Successful occupational hygiene programmes require a policy basis and commitment from top management. The procurement of financial resources is beyond the scope of this article.

Human resources

Adequate human resources constitute the main asset of any programme and should be ensured as a priority. All staff should have clear job descriptions and responsibilities. If needed, provisions for training and education should be made. The basic requirements for occupational hygiene programmes include:

- occupational hygienists—in addition to general knowledge on the recognition, evaluation and control of occupational hazards, occupational hygienists may be specialized in specific areas, such as analytical chemistry or industrial ventilation; the ideal situation is to have a team of well-trained professionals in the comprehensive practice of occupational hygiene and in all required areas of expertise

- laboratory personnel, chemists (depending on the extent of analytical work)

- technicians and assistants, for field surveys and for laboratories, as well as for instrument maintenance and repairs

- information specialists and administrative support.

One important aspect is professional competence, which must not only be achieved but also maintained. Continuous education, in or outside the programme or service, should cover, for example, legislation updates, new advances and techniques, and gaps in knowledge. Participation in conferences, symposia and workshops also contributes to the maintenance of competence.

Health and safety for staff

Health and safety should be ensured for all staff in field surveys, laboratories and offices. Occupational hygienists may be exposed to serious hazards and should wear the required personal protective equipment. Depending on the type of work, immunization may be required. If rural work is involved, depending on the region, provisions such as an antidote for snake bites should be made. Laboratory safety is a specialized field discussed elsewhere in this Encyclopaedia.

Occupational hazards in offices should not be overlooked—for example, work with visual display units and sources of indoor pollution such as laser printers, photocopying machines and air-conditioning systems. Ergonomic and psychosocial factors should also be considered.

Facilities

These include offices and meeting room(s), laboratories and equipment, information systems and a library. Facilities should be well designed, accounting for future needs, as later moves and adaptations are usually more costly and time consuming.

Occupational hygiene laboratories and equipment

Occupational hygiene laboratories should have in principle the capability to carry out qualitative and quantitative assessment of exposure to airborne contaminants (chemicals and dusts), physical agents (noise, heat stress, radiation, illumination) and biological agents. In the case of most biological agents, qualitative assessments are enough to recommend controls, thus eliminating the need for the usually difficult quantitative evaluations.

Although some direct-reading instruments for airborne contaminants may have limitations for exposure assessment purposes, these are extremely useful for the recognition of hazards and identification of their sources, the determination of peaks in concentration, the gathering of data for control measures, and for checking on controls such as ventilation systems. In connection with the latter, instruments to check air velocity and static pressure are also needed.

One of the possible structures would comprise the following units:

- field equipment (sampling, direct-reading)

- analytical laboratory

- particles laboratory

- physical agents (noise, thermal environment, illumination and radiation)

- workshop for maintenance and repairs of instrumentation.

Whenever selecting occupational hygiene equipment, in addition to performance characteristics, practical aspects have to be considered in view of the expected conditions of use—for example, available infrastructure, climate, location. These aspects include portability, required source of energy, calibration and maintenance requirements, and availability of the required expendable supplies.

Equipment should be purchased only if and when:

- there is a real need

- skills for the adequate operation, maintenance and repairs are available

- the complete procedure has been developed, since it is of no use, for example, to purchase sampling pumps without a laboratory to analyse the samples (or an agreement with an outside laboratory).

Calibration of all types of occupational hygiene measuring and sampling as well as analytical equipment should be an integral part of any procedure, and the required equipment should be available.

Maintenance and repairs are essential to prevent equipment from staying idle for long periods of time, and should be ensured by manufacturers, either by direct assistance or by providing training of staff.

If a completely new programme is being developed, only basic equipment should be initially purchased, more items being added as the needs are established and operational capabilities ensured. However, even before equipment and laboratories are available and operational, much can be achieved by inspecting workplaces to qualitatively assess health hazards, and by recommending control measures for recognized hazards. Lack of capability to carry out quantitative exposure assessments should never justify inaction concerning obviously hazardous exposures. This is particularly true for situations where workplace hazards are uncontrolled and heavy exposures are common.

Information

This includes a library (books, periodicals and other publications), databases (e.g., on CD-ROM) and communications.

Whenever possible, personal computers and CD-ROM readers should be provided, as well as connections to the Internet. There are ever-increasing possibilities for on-line networked public information servers (World Wide Web and Gopher sites), which provide access to a wealth of information sources relevant to workers’ health, therefore fully justifying investment in computers and communications. Such systems should include e-mail, which opens new horizons for communications and discussions, either individually or as groups, thus facilitating and promoting exchange of information throughout the world.

Planning

Timely and careful planning for the implementation, management and periodic evaluation of a programme is essential to ensure that the objectives and goals are achieved, while making the best use of the available resources.

Initially, the following information should be obtained and analysed:

- nature and magnitude of prevailing hazards, in order to establish priorities

- legal requirements (legislation, standards)

- available resources

- infrastructure and support services.

The planning and organization processes include:

- establishment of the purpose of the programme or service, definition of objectives and the scope of the activities, in view of the expected demand and the available resources

- allocation of resources

- definition of the organizational structure

- profile of the required human resources and plans for their development (if needed)

- clear assignment of responsibilities to units, teams and individuals

- design/adaptation of the facilities

- selection of equipment

- operational requirements

- establishment of mechanisms for communication within and outside the service

- timetable.

Operational costs should not be underestimated, since lack of resources may seriously hinder the continuity of a programme. Requirements which cannot be overlooked include:

- purchase of expendable supplies (including items such as filters, detector tubes, charcoal tubes, reagents), spare parts for equipment, etc.

- maintenance and repairs of equipment

- transportation (vehicles, fuel, maintenance) and travel

- information update.

Resources must be optimized through careful study of all elements which should be considered as integral parts of a comprehensive service. A well-balanced allocation of resources to the different units (field measurements, sampling, analytical laboratories, etc.) and all the components (facilities and equipment, personnel, operational aspects) is essential for a successful programme. Moreover, allocation of resources should allow for flexibility, because occupational hygiene services may have to undergo adaptations in order to respond to the real needs, which should be periodically assessed.

Communication, sharing and collaboration are key words for successful teamwork and enhanced individual capabilities. Effective mechanisms for communication, within and outside the programme, are needed to ensure the required multidisciplinary approach for the protection and promotion of workers’ health. There should be close interaction with other occupational health professionals, particularly occupational physicians and nurses, ergonomists and work psychologists, as well as safety professionals. At the workplace level, this should include workers, production personnel and managers.

The implementation of successful programmes is a gradual process. Therefore, at the planning stage, a realistic timetable should be prepared, according to well-established priorities and in view of the available resources.

Management

Management involves decision-making as to the goals to be achieved and actions required to efficiently achieve these goals, with participation of all concerned, as well as foreseeing and avoiding, or recognizing and solving, the problems which may create obstacles to the completion of the required tasks. It should be kept in mind that scientific knowledge is no assurance of the managerial competence required to run an efficient programme.

The importance of implementing and enforcing correct procedures and quality assurance cannot be overemphasized, since there is much difference between work done and work well done. Moreover, the real objectives, not the intermediate steps, should serve as a yardstick; the efficiency of an occupational hygiene programme should be measured not by the number of surveys carried out, but rather by the number of surveys that led to actual action to protect workers’ health.

Good management should be able to distinguish between what is impressive and what is important; very detailed surveys involving sampling and analysis, yielding very accurate and precise results, may be very impressive, but what is really important are the decisions and actions that will be taken afterwards.

Quality assurance

The concept of quality assurance, involving quality control and proficiency testing, refers primarily to activities which involve measurements. Although these concepts have been more often considered in connection with analytical laboratories, their scope has to be extended to also encompass sampling and measurements.

Whenever sampling and analysis are required, the complete procedure should be considered as one, from the point of view of quality. Since no chain is stronger than the weakest link, it is a waste of resources to use, for the different steps of the same evaluation procedure, instruments and techniques of unequal levels of quality. The accuracy and precision of a very good analytical balance cannot compensate for a pump sampling at a wrong flow rate.
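The weakest-link point can be illustrated with a short calculation. The sketch below computes an airborne concentration as collected mass divided by sampled air volume, and compares the value reported with the nominal flow rate against the value obtained with the flow rate the pump actually delivered. All numbers and function names are hypothetical and chosen only for illustration.

```python
def sampled_volume_m3(flow_l_per_min: float, minutes: float) -> float:
    """Air volume drawn through the sampler, in cubic metres."""
    return flow_l_per_min * minutes / 1000.0  # 1000 litres per cubic metre


def concentration_mg_m3(mass_mg: float, flow_l_per_min: float, minutes: float) -> float:
    """Time-averaged concentration = collected mass / sampled air volume."""
    return mass_mg / sampled_volume_m3(flow_l_per_min, minutes)


def relative_error(reported: float, true: float) -> float:
    """Signed relative error of the reported value with respect to the true one."""
    return (reported - true) / true


# Hypothetical sample: the balance weighs the filter deposit very accurately,
# but the pump ran below the flow rate assumed in the calculation.
mass_mg = 0.480        # mass on the filter
minutes = 240.0        # sampling duration
nominal_flow = 2.0     # L/min, value used in the report
actual_flow = 1.6      # L/min, what the pump really delivered

reported = concentration_mg_m3(mass_mg, nominal_flow, minutes)
true = concentration_mg_m3(mass_mg, actual_flow, minutes)
error = relative_error(reported, true)

print(f"reported: {reported:.2f} mg/m3, true: {true:.2f} mg/m3, error: {error:.0%}")
```

In this made-up case the reported concentration is 20% too low, however precisely the filter was weighed.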

The performance of laboratories has to be checked so that the sources of errors can be identified and corrected. There is need for a systematic approach in order to keep the numerous details involved under control. It is important to establish quality assurance programmes for occupational hygiene laboratories, and this refers both to internal quality control and to external quality assessments (often called “proficiency testing”).
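Results from external quality assessment are commonly summarized as z-scores. The sketch below shows the arithmetic, assuming the conventional interpretation bands used in many proficiency schemes (|z| up to 2 satisfactory, between 2 and 3 questionable, 3 or more unsatisfactory). A particular scheme may define its own criteria, and the assigned value, standard deviation and laboratory results here are hypothetical.

```python
def z_score(result: float, assigned_value: float, sigma_pt: float) -> float:
    """z = (laboratory result - assigned value) / standard deviation for assessment."""
    if sigma_pt <= 0:
        raise ValueError("sigma_pt must be positive")
    return (result - assigned_value) / sigma_pt


def classify(z: float) -> str:
    """Conventional interpretation bands (an assumption, not a universal rule)."""
    magnitude = abs(z)
    if magnitude <= 2.0:
        return "satisfactory"
    if magnitude < 3.0:
        return "questionable"
    return "unsatisfactory"


# Hypothetical proficiency round: one reference sample, three laboratories.
assigned_value = 0.50   # mg per sample
sigma_pt = 0.05         # mg per sample
lab_results = {"lab A": 0.56, "lab B": 0.62, "lab C": 0.30}

outcomes = {
    lab: classify(z_score(value, assigned_value, sigma_pt))
    for lab, value in lab_results.items()
}
for lab, outcome in outcomes.items():
    print(lab, outcome)
```

An unsatisfactory or questionable score is a prompt to look for the source of error, which is the purpose of the performance checks described above.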

Concerning sampling, or measurements with direct-reading instruments (including for measurement of physical agents), quality involves adequate and correct:

- preliminary studies including the identification of possible hazards and the factors required for the design of the strategy

- design of the sampling (or measurement) strategy

- selection and utilization of methodologies and equipment for sampling or measurements, accounting both for the purpose of the investigation and for quality requirements

- performance of the procedures, including time monitoring

- handling, transport and storage of samples (if applicable).

Concerning the analytical laboratory, quality involves adequate and correct:

- design and installation of the facilities

- selection and utilization of validated analytical methods (or, if necessary, validation of analytical methods)

- selection and installation of instrumentation

- supplies (reagents, reference samples, etc.).

For both, it is indispensable to have:

- clear protocols, procedures and written instructions

- routine calibration and maintenance of the equipment

- training and motivation of the staff to adequately perform the required procedures

- adequate management

- internal quality control

- external quality assessment or proficiency testing (if applicable).

Furthermore, it is essential to have a correct treatment of the obtained data and interpretation of results, as well as accurate reporting and record keeping.

Laboratory accreditation, defined by CEN (EN 45001) as “formal recognition that a testing laboratory is competent to carry out specific tests or specific types of tests”, is a very important control tool and should be promoted. It should cover both the sampling and the analytical procedures.

Programme evaluation

The concept of quality must be applied to all steps of occupational hygiene practice, from the recognition of hazards to the implementation of hazard prevention and control programmes. With this in mind, occupational hygiene programmes and services must be periodically and critically evaluated, aiming at continuous improvement.

Concluding Remarks

Occupational hygiene is essential for the protection of workers’ health and the environment. Its practice involves many steps, which are interlinked and which have no meaning by themselves but must be integrated into a comprehensive approach.

Recognition of Hazards

A workplace hazard can be defined as any condition that may adversely affect the well-being or health of exposed persons. Recognition of hazards in any occupational activity involves characterization of the workplace by identifying hazardous agents and groups of workers potentially exposed to these hazards. The hazards might be of chemical, biological or physical origin (see table 1). Some hazards in the work environment are easy to recognize—for example, irritants, which have an immediate irritating effect after skin exposure or inhalation. Others are not so easy to recognize—for example, chemicals which are accidentally formed and have no warning properties. Some agents like metals (e.g., lead, mercury, cadmium, manganese), which may cause injury after several years of exposure, might be easy to identify if you are aware of the risk. A toxic agent may not constitute a hazard at low concentrations or if no one is exposed. Basic to the recognition of hazards are identification of possible agents at the workplace, knowledge about health risks of these agents and awareness of possible exposure situations.

Table 1. Hazards of chemical, biological and physical agents.

| Type of hazard | Description | Examples |
| --- | --- | --- |
| CHEMICAL HAZARDS | Chemicals enter the body principally through inhalation, skin absorption or ingestion. The toxic effect might be acute, chronic or both. | |
| Corrosion | Corrosive chemicals actually cause tissue destruction at the site of contact. Skin, eyes and digestive system are the most commonly affected parts of the body. | Concentrated acids and alkalis, phosphorus |
| Irritation | Irritants cause inflammation of tissues where they are deposited. Skin irritants may cause reactions like eczema or dermatitis. Severe respiratory irritants might cause shortness of breath, inflammatory responses and oedema. | Skin: acids, alkalis, solvents, oils. Respiratory: aldehydes, alkaline dusts, ammonia, nitrogen dioxide, phosgene, chlorine, bromine, ozone |
| Allergic reactions | Chemical allergens or sensitizers can cause skin or respiratory allergic reactions. | Skin: colophony (rosin), formaldehyde, metals like chromium or nickel, some organic dyes, epoxy hardeners, turpentine. Respiratory: isocyanates, fibre-reactive dyes, formaldehyde, many tropical wood dusts, nickel |
| Asphyxiation | Asphyxiants exert their effects by interfering with the oxygenation of the tissues. Simple asphyxiants are inert gases that dilute the available atmospheric oxygen below the level required to support life. Oxygen-deficient atmospheres may occur in tanks, holds of ships, silos or mines. Oxygen concentration in air should never be below 19.5% by volume. Chemical asphyxiants prevent oxygen transport and the normal oxygenation of blood or prevent normal oxygenation of tissues. | Simple asphyxiants: methane, ethane, hydrogen, helium. Chemical asphyxiants: carbon monoxide, nitrobenzene, hydrogen cyanide, hydrogen sulphide |
| Cancer | Known human carcinogens are chemicals that have been clearly demonstrated to cause cancer in humans. Probable human carcinogens are chemicals that have been clearly demonstrated to cause cancer in animals or the evidence is not definite in humans. Soot and coal tars were the first chemicals suspected to cause cancer. | Known: benzene (leukaemia); vinyl chloride (liver angio-sarcoma); 2-naphthylamine, benzidine (bladder cancer); asbestos (lung cancer, mesothelioma); hardwood dust (nasal or nasal sinus adenocarcinoma). Probable: formaldehyde, carbon tetrachloride, dichromates, beryllium |
| Reproductive effects | Reproductive toxicants interfere with reproductive or sexual functioning of an individual. | Manganese, carbon disulphide, monomethyl and ethyl ethers of ethylene glycol, mercury |
| | Developmental toxicants are agents that may cause an adverse effect in offspring of exposed persons; for example, birth defects. Embryotoxic or foetotoxic chemicals can cause spontaneous abortions or miscarriages. | Organic mercury compounds, carbon monoxide, lead, thalidomide, solvents |
| Systemic poisons | Systemic poisons are agents that cause injury to particular organs or body systems. | Brain: solvents, lead, mercury, manganese. Peripheral nervous system: n-hexane, lead, arsenic, carbon disulphide. Blood-forming system: benzene, ethylene glycol ethers. Kidneys: cadmium, lead, mercury, chlorinated hydrocarbons. Lungs: silica, asbestos, coal dust (pneumoconiosis) |
| BIOLOGICAL HAZARDS | Biological hazards can be defined as organic dusts originating from different sources of biological origin such as virus, bacteria, fungi, proteins from animals or substances from plants such as degradation products of natural fibres. The aetiological agent might be derived from a viable organism or from contaminants or constitute a specific component in the dust. Biological hazards are grouped into infectious and non-infectious agents. Non-infectious hazards can be further divided into viable organisms, biogenic toxins and biogenic allergens. | |
| Infectious hazards | Occupational diseases from infectious agents are relatively uncommon. Workers at risk include employees at hospitals, laboratory workers, farmers, slaughterhouse workers, veterinarians, zoo keepers and cooks. Susceptibility is very variable (e.g., persons treated with immunodepressing drugs will have a high sensitivity). | Hepatitis B, tuberculosis, anthrax, brucella, tetanus, chlamydia psittaci, salmonella |
| Viable organisms and biogenic toxins | Viable organisms include fungi, spores and mycotoxins; biogenic toxins include endotoxins, aflatoxin and bacteria. The products of bacterial and fungal metabolism are complex and numerous and affected by temperature, humidity and kind of substrate on which they grow. Chemically they might consist of proteins, lipoproteins or mucopolysaccharides. Examples are Gram positive and Gram negative bacteria and moulds. Workers at risk include cotton mill workers, hemp and flax workers, sewage and sludge treatment workers, grain silo workers. | Byssinosis, “grain fever”, Legionnaire’s disease |
| Biogenic allergens | Biogenic allergens include fungi, animal-derived proteins, terpenes, storage mites and enzymes. A considerable part of the biogenic allergens in agriculture comes from proteins from animal skin, hair from furs and protein from the faecal material and urine. Allergens might be found in many industrial environments, such as fermentation processes, drug production, bakeries, paper production, wood processing (saw mills, production, manufacturing) as well as in bio-technology (enzyme and vaccine production, tissue culture) and spice production. In sensitized persons, exposure to the allergic agents may induce allergic symptoms such as allergic rhinitis, conjunctivitis or asthma. Allergic alveolitis is characterized by acute respiratory symptoms like cough, chills, fever, headache and pain in the muscles, which might lead to chronic lung fibrosis. | Occupational asthma: wool, furs, wheat grain, flour, red cedar, garlic powder. Allergic alveolitis: farmer’s disease, bagassosis, “bird fancier’s disease”, humidifier fever, sequoiosis |
| PHYSICAL HAZARDS | | |
| Noise | Noise is considered as any unwanted sound that may adversely affect the health and well-being of individuals or populations. Aspects of noise hazards include total energy of the sound, frequency distribution, duration of exposure and impulsive noise. Hearing acuity is generally affected first with a loss or dip at 4000 Hz followed by losses in the frequency range from 2000 to 6000 Hz. Noise might result in acute effects like communication problems, decreased concentration, sleepiness and as a consequence interference with job performance. Exposure to high levels of noise (usually above 85 dBA) or impulsive noise (about 140 dBC) over a significant period of time may cause both temporary and chronic hearing loss. Permanent hearing loss is the most common occupational disease in compensation claims. | Foundries, woodworking, textile mills, metalworking |
| Vibration | Vibration has several parameters in common with noise: frequency, amplitude, duration of exposure and whether it is continuous or intermittent. Method of operation and skilfulness of the operator seem to play an important role in the development of harmful effects of vibration. Manual work using powered tools is associated with symptoms of peripheral circulatory disturbance known as “Raynaud’s phenomenon” or “vibration-induced white fingers” (VWF). Vibrating tools may also affect the peripheral nervous system and the musculo-skeletal system with reduced grip strength, low back pain and degenerative back disorders. | Contract machines, mining loaders, fork-lift trucks, pneumatic tools, chain saws |
| Ionizing radiation | The most important chronic effect of ionizing radiation is cancer, including leukaemia. Overexposure from comparatively low levels of radiation has been associated with dermatitis of the hand and effects on the haematological system. Processes or activities which might give excessive exposure to ionizing radiation are very restricted and regulated. | Nuclear reactors, medical and dental x-ray tubes, particle accelerators, radioisotopes |
| Non-ionizing radiation | Non-ionizing radiation consists of ultraviolet radiation, visible radiation, infrared, lasers, electromagnetic fields (microwaves and radio frequency) and extremely low frequency radiation. Infrared radiation might cause cataracts. High-powered lasers may cause eye and skin damage. There is an increasing concern about exposure to low levels of electromagnetic fields as a cause of cancer and as a potential cause of adverse reproductive outcomes among women, especially from exposure to video display units. The question about a causal link to cancer is not yet answered. Recent reviews of available scientific knowledge generally conclude that there is no association between use of VDUs and adverse reproductive outcome. | Ultraviolet radiation: arc welding and cutting; UV curing of inks, glues, paints, etc.; disinfection; product control. Infrared radiation: furnaces, glassblowing. Lasers: communications, surgery, construction |
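The 19.5% oxygen floor given for simple asphyxiants in table 1 can be related to the amount of inert gas released into a space. The following is a minimal sketch, assuming ideal mixing and that normal air contains about 20.9% oxygen by volume (a value not stated in the table); the function names are hypothetical.

```python
NORMAL_O2_PERCENT = 20.9  # approximate oxygen content of normal air (assumption)
MIN_O2_PERCENT = 19.5     # minimum oxygen concentration given in table 1


def oxygen_percent(inert_gas_fraction: float) -> float:
    """Oxygen concentration (% by volume) after an inert gas displaces part of the air.

    inert_gas_fraction is the volume fraction of the atmosphere occupied by
    the added inert gas, between 0 and 1. Ideal mixing is assumed.
    """
    if not 0.0 <= inert_gas_fraction <= 1.0:
        raise ValueError("inert_gas_fraction must be between 0 and 1")
    return NORMAL_O2_PERCENT * (1.0 - inert_gas_fraction)


def is_oxygen_deficient(inert_gas_fraction: float) -> bool:
    """True if the diluted atmosphere falls below the 19.5% floor."""
    return oxygen_percent(inert_gas_fraction) < MIN_O2_PERCENT


def max_inert_fraction() -> float:
    """Largest inert gas fraction that still leaves 19.5% oxygen."""
    return 1.0 - MIN_O2_PERCENT / NORMAL_O2_PERCENT


print(f"5% inert gas:  {oxygen_percent(0.05):.2f}% O2")
print(f"10% inert gas: {oxygen_percent(0.10):.2f}% O2")
print(f"limit reached at about {max_inert_fraction():.1%} inert gas")
```

Under these assumptions, displacing a little under 7% of the air is enough to reach the floor, which is why confined spaces such as tanks and silos call for particular caution.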

Identification and Classification of Hazards

Before any occupational hygiene investigation is performed the purpose must be clearly defined. The purpose of an occupational hygiene investigation might be to identify possible hazards, to evaluate existing risks at the workplace, to prove compliance with regulatory requirements, to evaluate control measures or to assess exposure with regard to an epidemiological survey. This article is restricted to programmes aimed at identification and classification of hazards at the workplace. Many models or techniques have been developed to identify and evaluate hazards in the working environment. They differ in complexity, from simple checklists, preliminary industrial hygiene surveys, job-exposure matrices and hazard and operability studies to job exposure profiles and work surveillance programmes (Renes 1978; Gressel and Gideon 1991; Holzner, Hirsh and Perper 1993; Goldberg et al. 1993; Bouyer and Hémon 1993; Panett, Coggon and Acheson 1985; Tait 1992). No single technique is a clear choice for everyone, but all techniques have parts which are useful in any investigation. The usefulness of the models also depends on the purpose of the investigation, size of workplace, type of production and activity as well as complexity of operations.

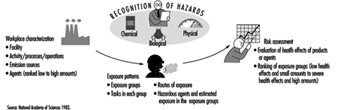

Identification and classification of hazards can be divided into three basic elements: workplace characterization, exposure pattern and hazard evaluation.

Workplace characterization

A workplace might have anything from a few employees to several thousand, and workplaces differ in their activities (e.g., production plants, construction sites, office buildings, hospitals or farms). Within a workplace, different activities may be confined to particular areas such as departments or sections. In an industrial process, different stages and operations can be identified as production is followed from raw materials to finished products.

Detailed information should be obtained about processes, operations or other activities of interest, in order to identify the agents utilized, including raw materials, materials handled or added in the process, primary products, intermediates, final products, reaction products and by-products. It may also be of interest to identify additives and catalysts in a process. Raw material or added material which has been identified only by trade name must be evaluated on the basis of its chemical composition. Information or safety data sheets should be available from the manufacturer or supplier.

Some stages in a process might take place in a closed system without anyone being exposed, except during maintenance work or process failure. These events should be recognized and precautions taken to prevent exposure to hazardous agents. Other processes take place in open systems, with or without local exhaust ventilation. A general description of the ventilation system should be provided, including any local exhaust system.

When possible, hazards should be identified in the planning or design of new plants or processes, when changes can be made at an early stage and hazards might be anticipated and avoided. Conditions and procedures that may deviate from the intended design must be identified and evaluated in the process state. Recognition of hazards should also include emissions to the external environment and waste materials. Facility locations, operations, emission sources and agents should be grouped together in a systematic way to form recognizable units in the further analysis of potential exposure. In each unit, operations and agents should be grouped according to health effects of the agents and estimation of emitted amounts to the work environment.

Exposure patterns

The main exposure routes for chemical and biological agents are inhalation and dermal uptake and, incidentally, ingestion. The exposure pattern depends on frequency of contact with the hazards, intensity of exposure and time of exposure. Working tasks have to be systematically examined. It is important not only to study work manuals but also to look at what actually happens at the workplace. Workers might be directly exposed as a result of actually performing tasks, or be indirectly exposed because they are located in the same general area or location as the source of exposure. It might be necessary to start by focusing on working tasks with high potential to cause harm even if the exposure is of short duration. Non-routine and intermittent operations (e.g., maintenance, cleaning and changes in production cycles) have to be considered. Working tasks and situations might also vary throughout the year.
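The interplay of intensity and time of exposure can be illustrated with a time-weighted average (TWA) over a shift. The following is a minimal sketch, not a method prescribed by this article: the function name, the 8-hour shift and the concentrations are hypothetical, and any time not covered by a listed task is assumed to be unexposed.

```python
def time_weighted_average(tasks, shift_hours=8.0):
    """Return the shift TWA concentration for one worker.

    tasks: list of (concentration, duration_hours) pairs.
    Assumption: time not covered by any task counts as zero exposure.
    """
    total_time = sum(hours for _, hours in tasks)
    if total_time > shift_hours:
        raise ValueError("task durations exceed the shift length")
    # Dose is the sum of concentration x time over all tasks.
    dose = sum(conc * hours for conc, hours in tasks)
    return dose / shift_hours


# Hypothetical figures: a short, intense cleaning task plus routine operation.
tasks = [(200.0, 0.5), (10.0, 6.0)]  # (mg/m3, hours)
twa = time_weighted_average(tasks)
peak = max(conc for conc, _ in tasks)
print(f"TWA = {twa:.1f} mg/m3, highest task concentration = {peak:.1f} mg/m3")
```

In this example the half-hour task contributes more to the shift dose than six hours of routine work, which is why short tasks with high potential for harm deserve early attention.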

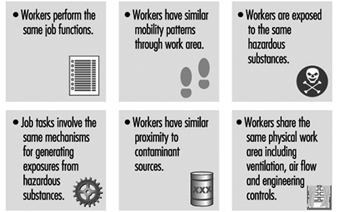

Within the same job title, exposure or uptake might differ because some workers wear protective equipment and others do not. In large plants, recognition of hazards or a qualitative hazard evaluation can very seldom be performed for every single worker. Workers with similar working tasks therefore have to be classified in the same exposure group. Differences in working tasks, work techniques and work time will result in considerably different exposure and have to be considered. Persons working outdoors and those working without local exhaust ventilation have been shown to have a larger day-to-day variability than groups working indoors with local exhaust ventilation (Kromhout, Symanski and Rappaport 1993). Work processes, agents applied for that process or job, or different tasks within a job title might be used, instead of the job title, to characterize groups with similar exposure. Within the groups, workers potentially exposed must be identified and classified according to hazardous agents, routes of exposure, health effects of the agents, frequency of contact with the hazards, intensity and time of exposure. Different exposure groups should be ranked according to hazardous agents and estimated exposure in order to determine workers at greatest risk.
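Forming exposure groups from process and task rather than from job title can be sketched as follows. This is only an illustration under stated assumptions: the record layout, the worker labels and the choice of grouping key (process, task, use of protective equipment) are hypothetical and would be adapted to the workplace in question.

```python
from collections import defaultdict

# Hypothetical records: (worker, job_title, process, task, wears_protection)
workers = [
    ("A", "operator", "degreasing", "tank loading", True),
    ("B", "operator", "degreasing", "tank loading", False),
    ("C", "operator", "packing", "bag filling", False),
    ("D", "fitter", "degreasing", "tank maintenance", False),
]


def exposure_groups(records):
    """Group workers by process, task and protective equipment use.

    The job title is deliberately ignored when forming the groups.
    """
    groups = defaultdict(list)
    for worker, _job_title, process, task, protected in records:
        groups[(process, task, protected)].append(worker)
    return dict(groups)


groups = exposure_groups(workers)
for key, members in groups.items():
    print(key, members)
```

The three workers who share the job title "operator" end up in three different groups, which is the situation the paragraph above warns about.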

Qualitative hazard evaluation

The assessment of possible health effects of chemical, biological and physical agents present at the workplace should be based on an evaluation of available epidemiological, toxicological, clinical and environmental research. Up-to-date information about health hazards for products or agents used at the workplace should be obtained from health and safety journals, databases on toxicity and health effects, and relevant scientific and technical literature.

Material Safety Data Sheets (MSDSs) should be updated if necessary. Data sheets document percentages of hazardous ingredients together with the Chemical Abstracts Service chemical identifier (the CAS number) and the threshold limit value (TLV), if any. They also contain information about health hazards, protective equipment, preventive actions, manufacturer or supplier, and so on. Sometimes the information reported on ingredients is rather rudimentary and has to be supplemented with more detailed information.

Monitored data and records of measurements should be studied. For agents that have them, TLVs provide general guidance in deciding whether the situation is acceptable or not, although there must be allowance for possible interactions when workers are exposed to several chemicals. Within and between different exposure groups, workers should be ranked according to health effects of agents present and estimated exposure (e.g., from slight health effects and low exposure to severe health effects and estimated high exposure). Those with the highest ranks deserve highest priority. Before any prevention activities start, it might be necessary to perform an exposure monitoring programme. All results should be documented and readily accessible. A working scheme is illustrated in figure 1.
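Two of the ideas above can be sketched in a few lines: an allowance for combined exposure and a ranking of exposure groups. Both parts rest on assumptions that this article does not state. The additive index (sum of concentration-to-limit ratios) is a widely used convention for agents with similar effects, not a general rule for interactions, and the severity and exposure scores (1 to 4) with their product as a priority score are hypothetical.

```python
def additive_mixture_index(exposures):
    """Sum of concentration/limit ratios for agents assumed to act additively.

    exposures: list of (concentration, limit) pairs in the same units.
    A value above 1 suggests the combined exposure is not acceptable.
    """
    return sum(conc / limit for conc, limit in exposures)


def rank_groups(groups):
    """Sort exposure groups by severity score x exposure score, highest first."""
    return sorted(groups, key=lambda g: g["severity"] * g["exposure"], reverse=True)


# Hypothetical concentrations and limits (ppm) for three solvents.
index = additive_mixture_index([(50.0, 100.0), (20.0, 50.0), (5.0, 25.0)])

# Hypothetical scores: 1 = slight effect / low exposure, 4 = severe / high.
groups = [
    {"name": "tank loading", "severity": 3, "exposure": 4},
    {"name": "bag filling", "severity": 1, "exposure": 2},
    {"name": "tank maintenance", "severity": 4, "exposure": 2},
]
ranked = [g["name"] for g in rank_groups(groups)]
print(f"mixture index = {index:.2f}", ranked)
```

Here each solvent is below its own limit, yet the index exceeds 1, so the combined situation would call for closer evaluation.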

Figure 1. Elements of risk assessment

In occupational hygiene investigations the hazards to the outdoor environment (e.g., pollution and greenhouse effects as well as effects on the ozone layer) might also be considered.

Chemical, Biological and Physical Agents

Hazards might be of chemical, biological or physical origin. In this section and in table 1 a brief description of the various hazards will be given together with examples of environments or activities where they will be found (Casarett 1980; International Congress on Occupational Health 1985; Jacobs 1992; Leidel, Busch and Lynch 1977; Olishifski 1988; Rylander 1994). More detailed information will be found elsewhere in this Encyclopaedia.

Chemical agents

Chemicals can be grouped into gases, vapours, liquids and aerosols (dusts, fumes, mists).

Gases

Gases are substances that can be changed to liquid or solid state only by the combined effects of increased pressure and decreased temperature. Handling gases always implies risk of exposure unless they are processed in closed systems. Gases in containers or distribution pipes might accidentally leak. In processes with high temperatures (e.g., welding operations and exhaust from engines) gases will be formed.

Vapours

Vapours are the gaseous form of substances that normally are in the liquid or solid state at room temperature and normal pressure. When a liquid evaporates it changes to a gas and mixes with the surrounding air. A vapour can be regarded as a gas whose maximal concentration depends on the temperature and the saturation pressure of the substance. Any process involving combustion will generate vapours or gases. Degreasing operations might be performed by vapour phase degreasing or soak cleaning with solvents. Work activities like charging and mixing liquids, painting, spraying, cleaning and dry cleaning might generate harmful vapours.
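The dependence of the maximal vapour concentration on the saturation pressure can be made concrete with a short calculation. This is a sketch assuming ideal gas behaviour at 25 °C and 101.325 kPa; the vapour pressure (3.8 kPa) and molar mass (92.14 g/mol) are illustrative inputs, roughly those of toluene, and the function names are hypothetical.

```python
MOLAR_VOLUME_L = 24.45  # litres per mole of ideal gas at 25 degC and 101.325 kPa


def saturation_ppm(vapour_pressure_kpa, total_pressure_kpa=101.325):
    """Maximal (saturated) vapour concentration in parts per million by volume."""
    return vapour_pressure_kpa / total_pressure_kpa * 1e6


def ppm_to_mg_per_m3(ppm, molar_mass_g_mol):
    """Convert ppm (v/v) to mg/m3 at 25 degC and 101.325 kPa."""
    return ppm * molar_mass_g_mol / MOLAR_VOLUME_L


c_sat_ppm = saturation_ppm(3.8)
c_sat_mg = ppm_to_mg_per_m3(c_sat_ppm, 92.14)
print(f"saturation: {c_sat_ppm:.0f} ppm, {c_sat_mg:.0f} mg/m3")
```

Because the saturation pressure rises with temperature, the same calculation at a higher temperature would use a larger vapour pressure and give a higher ceiling concentration.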

Liquids

Liquids may consist of a pure substance or a solution of two or more substances (e.g., solvents, acids, alkalis). A liquid stored in an open container will partially evaporate into the gas phase. The concentration in the vapour phase at equilibrium depends on the vapour pressure of the substance, its concentration in the liquid phase, and the temperature. Operations or activities with liquids might give rise to splashes or other skin contact, besides harmful vapours.
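For a solution, the way the equilibrium vapour phase depends on both vapour pressure and liquid-phase concentration can be sketched with Raoult's law (partial pressure = mole fraction × saturation pressure). The sketch assumes an ideal solution, which real solvent mixtures only approximate; the component names and all numbers are hypothetical.

```python
def mole_fractions(moles):
    """Return the mole fraction of each component in the liquid phase."""
    total = sum(moles.values())
    return {name: n / total for name, n in moles.items()}


def partial_pressures_kpa(moles, saturation_kpa):
    """Raoult's law for an ideal solution: p_i = x_i * p_sat_i."""
    x = mole_fractions(moles)
    return {name: x[name] * saturation_kpa[name] for name in moles}


# Hypothetical two-component solvent mixture at a fixed temperature.
moles = {"solvent_a": 1.0, "solvent_b": 4.0}
saturation_kpa = {"solvent_a": 3.8, "solvent_b": 0.5}
p = partial_pressures_kpa(moles, saturation_kpa)
print(p)
```

Although solvent_a makes up only one-fifth of the liquid, its higher vapour pressure means it dominates the vapour phase in this example.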

Dusts

Dusts consist of inorganic and organic particles, which can be classified as inhalable, thoracic or respirable, depending on particle size. Most organic dusts have a biological origin. Inorganic dusts will be generated in mechanical processes like grinding, sawing, cutting, crushing, screening or sieving. Dusts may be dispersed when dusty material is handled or whirled up by air movements from traffic. Handling dry materials or powder by weighing, filling, charging, transporting and packing will generate dust, as will activities like insulation and cleaning work.
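The size dependence of the inhalable, thoracic and respirable fractions can be illustrated numerically. The article does not say which definition it has in mind; the sketch below assumes the sampling conventions of ISO 7708 (also used by CEN and ACGIH), with particle size expressed as aerodynamic diameter in micrometres. The function names are hypothetical.

```python
import math


def _lognormal_cdf(d_um, median_um, gsd):
    """Cumulative lognormal distribution evaluated at diameter d_um."""
    z = math.log(d_um / median_um) / math.log(gsd)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def inhalable(d_um):
    """Inhalable fraction of total airborne particles (0 < d <= 100 um)."""
    return 0.5 * (1.0 + math.exp(-0.06 * d_um))


def thoracic(d_um):
    """Thoracic fraction: inhalable fraction passing beyond the larynx."""
    return inhalable(d_um) * (1.0 - _lognormal_cdf(d_um, 11.64, 1.5))


def respirable(d_um):
    """Respirable fraction: inhalable fraction reaching the unciliated airways."""
    return inhalable(d_um) * (1.0 - _lognormal_cdf(d_um, 4.25, 1.5))


for d in (1.0, 4.0, 10.0, 50.0):
    print(f"{d:5.1f} um  inhalable={inhalable(d):.2f}  "
          f"thoracic={thoracic(d):.2f}  respirable={respirable(d):.2f}")
```

Under these conventions about half of all airborne particles of 10 μm count as thoracic and about half of those of 4 μm count as respirable.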

Fumes

Fumes are solid particles formed when material vaporized at high temperature condenses into small particles. The vaporization is often accompanied by a chemical reaction such as oxidation. The single particles that make up a fume are extremely fine, usually less than 0.1 μm, and often aggregate into larger units. Examples are fumes from welding, plasma cutting and similar operations.

Mists

Mists are suspended liquid droplets generated by condensation from the gaseous state to the liquid state or by breaking up a liquid into a dispersed state by splashing, foaming or atomizing. Examples are oil mists from cutting and grinding operations, acid mists from electroplating, acid or alkali mists from pickling operations or paint spray mists from spraying operations.

Evaluation of the Work Environment

Hazard Surveillance and Survey Methods

Occupational surveillance involves active programmes to anticipate, observe, measure, evaluate and control exposures to potential health hazards in the workplace. Surveillance often involves a team of people that includes an occupational hygienist, occupational physician, occupational health nurse, safety officer, toxicologist and engineer. Depending upon the occupational environment and problem, three surveillance methods can be employed: medical, environmental and biological. Medical surveillance is used to detect the presence or absence of adverse health effects for an individual from occupational exposure to contaminants, by performing medical examinations and appropriate biological tests. Environmental surveillance is used to document potential exposure to contaminants for a group of employees, by measuring the concentration of contaminants in the air, in bulk samples of materials, and on surfaces. Biological surveillance is used to document the absorption of contaminants into the body and to correlate it with environmental contaminant levels, by measuring the concentration of hazardous substances or their metabolites in the blood, urine or exhaled breath of workers.

Medical Surveillance

Medical surveillance is performed because diseases can be caused or exacerbated by exposure to hazardous substances. It requires an active programme with professionals who are knowledgeable about occupational diseases, diagnoses and treatment. Medical surveillance programmes provide steps to protect, educate, monitor and, in some cases, compensate the employee. They can include pre-employment screening programmes, periodic medical examinations, specialized tests to detect early changes and impairment caused by hazardous substances, medical treatment and extensive record keeping. Pre-employment screening involves the evaluation of occupational and medical history questionnaires and the results of physical examinations. Questionnaires provide information concerning past illnesses and chronic diseases (especially asthma, skin, lung and heart diseases) and past occupational exposures. There are ethical and legal implications of pre-employment screening programmes if they are used to determine employment eligibility. However, they are fundamentally important when used to (1) provide a record of previous employment and associated exposures, (2) establish a baseline of health for an employee and (3) test for hypersusceptibility. Medical examinations can include audiometric tests for hearing loss, vision tests, tests of organ function, evaluation of fitness for wearing respiratory protection equipment, and baseline urine and blood tests. Periodic medical examinations are essential for evaluating and detecting trends in the onset of adverse health effects and may include biological monitoring for specific contaminants and the use of other biomarkers.

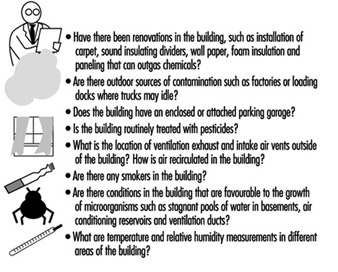

Environmental and Biological Surveillance

Environmental and biological surveillance starts with an occupational hygiene survey of the work environment to identify potential hazards and contaminant sources, and determine the need for monitoring. For chemical agents, monitoring could involve air, bulk, surface and biological sampling. For physical agents, monitoring could include noise, temperature and radiation measurements. If monitoring is indicated, the occupational hygienist must develop a sampling strategy that includes which employees, processes, equipment or areas to sample, the number of samples, how long to sample, how often to sample, and the sampling method. Industrial hygiene surveys vary in complexity and focus depending upon the purpose of the investigation, type and size of establishment, and nature of the problem.