11. Sensory Systems

Chapter Editor: Heikki Savolainen

Table of Contents

Tables and Figures

The Ear

Marcel-André Boillat

Chemically-Induced Hearing Disorders

Peter Jacobsen

Physically-Induced Hearing Disorders

Peter L. Pelmear

Equilibrium

Lucy Yardley

Vision and Work

Paule Rey and Jean-Jacques Meyer

Taste

April E. Mott and Norman Mann

Smell

April E. Mott

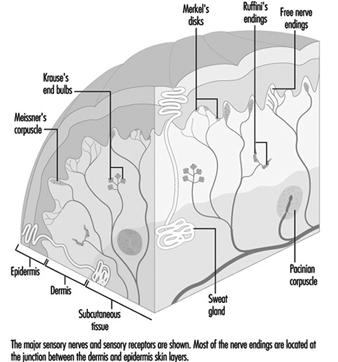

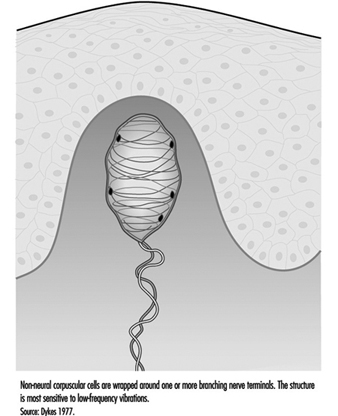

Cutaneous Receptors

Robert Dykes and Daniel McBain

Tables


1. Typical calculation of functional loss from an audiogram

2. Visual requirements for different activities

3. Recommended illuminance values for the lighting design

4. Visual requirements for a driving licence in France

5. Agents/processes reported to alter the taste system

6. Agents/processes associated with olfactory abnormalities

Figures


The Ear

Anatomy

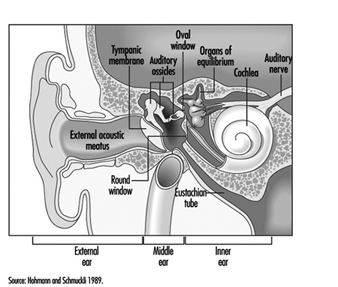

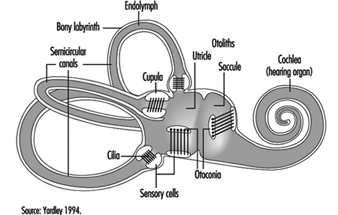

The ear is the sensory organ responsible for hearing and the maintenance of equilibrium, via the detection of body position and of head movement. It is composed of three parts: the outer, middle, and inner ear; the outer ear lies outside the skull, while the other two parts are embedded in the temporal bone (figure 1).

Figure 1. Diagram of the ear.

The outer ear consists of the auricle, a cartilaginous, skin-covered structure, and the external auditory canal, an irregularly shaped cylinder approximately 25 mm long lined with wax-secreting glands.

The middle ear consists of the tympanic cavity, an air-filled cavity whose outer wall is formed by the tympanic membrane (eardrum). It communicates with the nasopharynx via the Eustachian tube, which maintains pressure equilibrium on either side of the tympanic membrane. This communication explains why swallowing equalizes pressure and restores the hearing acuity lost during rapid changes in barometric pressure (e.g., landing aircraft, fast elevators). The tympanic cavity also contains the ossicles (the malleus, incus and stapes), which are controlled by the stapedius and tensor tympani muscles. The tympanic membrane is linked to the inner ear by the ossicles, specifically by the mobile foot of the stapes, which lies against the oval window.

The inner ear contains the sensory apparatus per se. It consists of a bony shell (the bony labyrinth) within which is found the membranous labyrinth—a series of cavities forming a closed system filled with endolymph, a potassium-rich liquid. The membranous labyrinth is separated from the bony labyrinth by the perilymph, a sodium-rich liquid.

The bony labyrinth itself is composed of two parts. The anterior portion is known as the cochlea and is the actual organ of hearing. It has a spiral shape reminiscent of a snail shell, and is pointed in the anterior direction. The posterior portion of the bony labyrinth contains the vestibule and the semicircular canals, and is responsible for equilibrium. The neurosensory structures involved in hearing and equilibrium are located in the membranous labyrinth: the organ of Corti is located in the cochlear canal, while the maculae of the utricle and the saccule and the ampullae of the semicircular canals are located in the posterior section.

Hearing organs

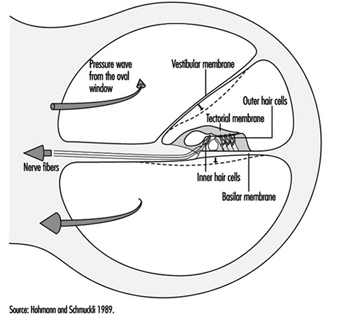

The cochlear canal is a spiral tube, triangular in cross-section and comprising two and one-half turns, which separates the scala vestibuli from the scala tympani. One edge is attached to the osseous spiral lamina, a process of the cochlea’s central column, while the other is connected to the bony wall of the cochlea by the spiral ligament.

The scala vestibuli and scala tympani end at the oval window (closed by the foot of the stapes) and the round window, respectively. The two chambers communicate through the helicotrema, at the tip of the cochlea. The basilar membrane forms the inferior surface of the cochlear canal and supports the organ of Corti, which is responsible for the transduction of acoustic stimuli. All auditory information is transduced by only 15,000 hair cells in the organ of Corti. Of these, the 3,500 so-called inner hair cells are critically important, since they form synapses with approximately 90% of the 30,000 primary auditory neurons (figure 2). The inner and outer hair cells are separated from each other by an abundant layer of support cells. Projecting through an extraordinarily thin membrane, the cilia of the hair cells are embedded in the tectorial membrane, whose free edge lies above the cells. The superior surface of the cochlear canal is formed by Reissner’s membrane.

Figure 2. Cross-section of one loop of the cochlea. Diameter: approximately 1.5 mm.

The bodies of the cochlear sensory cells resting on the basilar membrane are surrounded by nerve terminals, whose approximately 30,000 axons form the cochlear nerve. The cochlear nerve passes through the internal auditory canal and extends to the central structures of the brainstem, the oldest part of the brain. The auditory fibres end their tortuous path in the temporal lobe, the part of the cerebral cortex responsible for the perception of acoustic stimuli.

Organs of Equilibrium

The sensory cells are located in the ampullae of the semicircular canals and the maculae of the utricle and saccule, and are stimulated by pressure transmitted through the endolymph as a result of head or body movements. The cells connect with bipolar cells whose peripheral processes form two tracts, one from the anterior and external semicircular canals, the other from the posterior semicircular canal. These two tracts enter the internal auditory canal and unite to form the vestibular nerve, which extends to the vestibular nuclei in the brainstem. Fibres from the vestibular nuclei, in turn, extend to cerebellar centres controlling eye movements, and to the spinal cord.

The union of the vestibular and cochlear nerves forms the 8th cranial nerve, also known as the vestibulocochlear nerve.

Physiology of Hearing

Sound conduction through air

The ear is composed of a sound conductor (the outer and middle ear) and a sound receptor (the inner ear).

Sound waves passing through the external auditory canal strike the tympanic membrane, causing it to vibrate. This vibration is transmitted to the stapes through the malleus and incus. The surface area of the tympanic membrane is almost 16 times that of the foot of the stapes (55 mm²/3.5 mm²), and this, in combination with the lever mechanism of the ossicles, results in a 22-fold amplification of the sound pressure. Because of the middle ear’s resonant frequency, the transmission ratio is optimal between 1,000 and 2,000 Hz. As the foot of the stapes moves, it causes waves to form in the liquid within the vestibular canal. Since the liquid is incompressible, each inward movement of the foot of the stapes causes an equivalent outward movement of the round window, towards the middle ear.
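The amplification figure can be checked with simple arithmetic. In this minimal sketch the areas come from the text, but the lever factor of 1.4 is an assumption: the text does not state it, and the value used here is simply the one implied by its own figures (22 ÷ 15.7 ≈ 1.4). The model is idealized, ignoring losses and ossicular flexibility.

```python
import math

# Areas quoted in the text (mm^2).
TYMPANIC_MEMBRANE_MM2 = 55.0
STAPES_FOOTPLATE_MM2 = 3.5
# Assumed lever factor, back-calculated from the text's 22-fold figure;
# the text itself does not state it.
OSSICULAR_LEVER = 1.4


def middle_ear_pressure_gain(area_tm_mm2, area_fp_mm2, lever):
    """Idealized pressure gain of the middle ear (a plain ratio).

    Pressure is force per unit area: force collected over the large
    eardrum and delivered to the small footplate raises the pressure by
    the area ratio, and the ossicular lever multiplies the force again.
    """
    return (area_tm_mm2 / area_fp_mm2) * lever


def pressure_ratio_to_db(ratio):
    """Express a sound-pressure ratio in decibels (20 log10)."""
    return 20 * math.log10(ratio)


area_ratio = TYMPANIC_MEMBRANE_MM2 / STAPES_FOOTPLATE_MM2
gain = middle_ear_pressure_gain(TYMPANIC_MEMBRANE_MM2, STAPES_FOOTPLATE_MM2, OSSICULAR_LEVER)
print(f"area ratio: {area_ratio:.1f}")                       # 15.7
print(f"total gain: {gain:.1f}-fold")                        # 22.0-fold
print(f"in decibels: {pressure_ratio_to_db(gain):.1f} dB")   # 26.8 dB
```

Expressed on the decibel scale used later in this article, a 22-fold pressure gain corresponds to roughly 27 dB.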

When the ear is exposed to high sound levels, the stapedius muscle contracts, protecting the inner ear (the attenuation reflex). In addition to this function, the muscles of the middle ear also extend the dynamic range of the ear, improve sound localization, reduce resonance in the middle ear, and control air pressure in the middle ear and liquid pressure in the inner ear.

Between 250 and 4,000 Hz, the threshold of the attenuation reflex is approximately 80 decibels (dB) above the hearing threshold, and the reflex response grows by approximately 0.6 dB for every 1 dB increase in stimulus intensity. Its latency is 150 ms at threshold, and 24-35 ms in the presence of intense stimuli. At frequencies below the natural resonance of the middle ear, contraction of the middle ear muscles attenuates sound transmission by approximately 10 dB. Because of its latency, the attenuation reflex provides adequate protection against noise repeated at rates above two to three per second, but not against isolated impulse noise.

The speed with which sound waves propagate along the cochlea depends on the elasticity of the basilar membrane. The elasticity increases, and the wave velocity thus decreases, from the base of the cochlea to the tip. The transfer of vibration energy to Reissner’s membrane and the basilar membrane is frequency-dependent: at high frequencies the wave amplitude is greatest at the base, while at lower frequencies it is greatest at the tip. Thus, the point of greatest mechanical excitation in the cochlea depends on frequency, and this phenomenon underlies the ability to detect frequency differences. Movement of the basilar membrane induces shear forces in the stereocilia of the hair cells and triggers a series of mechanical, electrical and biochemical events responsible for mechanosensory transduction and initial acoustic signal processing. The shear forces on the stereocilia open ionic channels in the cell membranes, modifying membrane permeability and allowing potassium ions to enter the cells. This influx of potassium ions depolarizes the hair cell, generating a receptor potential.
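The text describes the frequency-to-place relationship only qualitatively. To make it concrete, the sketch below swaps in the Greenwood place-frequency function, a widely used empirical fit that is not part of this article; its human constants (165.4 Hz, 2.1, 0.88) are assumptions taken from that model. Position is expressed as a fraction of cochlear length, from 0 at the tip (apex) to 1 at the base.

```python
import math

# Greenwood place-frequency constants for the human cochlea (assumed;
# the text gives no formula). x runs from 0 at the apex to 1 at the base.
GREENWOOD_A_HZ = 165.4
GREENWOOD_ALPHA = 2.1
GREENWOOD_K = 0.88


def place_to_frequency(x):
    """Characteristic frequency (Hz) at relative position x along the cochlea."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return GREENWOOD_A_HZ * (10 ** (GREENWOOD_ALPHA * x) - GREENWOOD_K)


def frequency_to_place(freq_hz):
    """Relative position (0 = apex, 1 = base) of maximal excitation for a tone."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return math.log10(freq_hz / GREENWOOD_A_HZ + GREENWOOD_K) / GREENWOOD_ALPHA


for f in (250, 1_000, 4_000, 16_000):
    print(f"{f:>6} Hz peaks at x = {frequency_to_place(f):.2f}")
```

Consistent with the text, higher frequencies map closer to the base: under this model a 4,000 Hz tone peaks about two thirds of the way from apex to base.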

Neurotransmitters released at the synaptic junction of the inner hair cells as a result of depolarization trigger neuronal impulses, which travel along the afferent fibres of the auditory nerve toward higher centres. The intensity of the stimulus is encoded by the number of action potentials per unit time and the number of cells stimulated, while the perceived frequency of the sound depends on the specific nerve fibre populations activated. There is a specific spatial mapping between the frequency of the sound stimulus and the area of the cerebral cortex stimulated.

The inner hair cells are mechanoreceptors which transform signals generated in response to acoustic vibration into electric messages sent to the central nervous system. They are not, however, responsible for the ear’s threshold sensitivity and its extraordinary frequency selectivity.

The outer hair cells, on the other hand, send no auditory signals to the brain. Rather, their function is to selectively amplify mechano-acoustic vibration at near-threshold levels by a factor of approximately 100 (i.e., 40 dB), and so facilitate stimulation of inner hair cells. This amplification is believed to function through micromechanical coupling involving the tectorial membrane. The outer hair cells can produce more energy than they receive from external stimuli and, by contracting actively at very high frequencies, can function as cochlear amplifiers.

In the inner ear, interaction between outer and inner hair cells creates a feedback loop which permits control of auditory reception, particularly of threshold sensitivity and frequency selectivity. Efferent cochlear fibres may thus help reduce cochlear damage caused by exposure to intense acoustic stimuli. Outer hair cells may also undergo reflex contraction in the presence of intense stimuli. The attenuation reflex of the middle ear, active primarily at low frequencies, and the reflex contraction in the inner ear, active at high frequencies, are thus complementary.

Bone conduction of sound

Sound waves may also be transmitted through the skull. Two mechanisms are possible:

In the first, compression waves impacting the skull cause the incompressible perilymph to deform the round or oval window. As the two windows have differing elasticities, movement of the endolymph results in movement of the basilar membrane.

The second mechanism is based on the fact that movement of the ossicles induces movement in the scala vestibuli only. In this mechanism, movement of the basilar membrane results from the translational movement produced by inertia.

Bone conduction is normally 30-50 dB lower than air conduction—as is readily apparent when both ears are blocked. This is only true, however, for air-mediated stimuli, direct bone stimulation being attenuated to a different degree.

Sensitivity range

Mechanical vibration induces potential changes in the cells of the inner ear, the conduction pathways and the higher centres. Only frequencies of 16 Hz–25,000 Hz and sound pressures (expressed in pascals, Pa) of 20 μPa to 20 Pa can be perceived. The range of perceptible sound pressures is remarkable: it spans a factor of one million. The detection thresholds of sound pressure are frequency-dependent, lowest at 1,000-6,000 Hz and increasing at both higher and lower frequencies.

For practical purposes, the sound pressure level is expressed in decibels (dB), a logarithmic scale that expresses sound pressure relative to a reference pressure corresponding to the auditory threshold. Thus, 20 μPa is equivalent to 0 dB. Each tenfold increase in sound pressure raises the level by 20 dB, in accordance with the following formula:

$$L_x = 20 \log_{10} \frac{P_x}{P_0}$$

where:

$L_x$ = sound pressure level in dB

$P_x$ = sound pressure in pascals

$P_0$ = reference sound pressure ($2 \times 10^{-5}$ Pa, the auditory threshold)
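The formula translates directly into code. This sketch uses only the definitions given above (reference pressure of 20 μPa, 20 log10 of the pressure ratio); the function names are illustrative.

```python
import math

P0_PA = 2e-5  # reference sound pressure (20 µPa), as defined in the text


def spl_db(pressure_pa):
    """Sound pressure level Lx = 20 log10(Px / P0), in dB."""
    if pressure_pa <= 0:
        raise ValueError("sound pressure must be positive")
    return 20 * math.log10(pressure_pa / P0_PA)


def pressure_from_spl(level_db):
    """Inverse conversion: sound pressure in Pa for a level in dB."""
    return P0_PA * 10 ** (level_db / 20)


# Each tenfold step in pressure adds 20 dB.
for p in (20e-6, 2e-4, 2e-3, 0.02, 0.2, 2.0, 20.0):
    print(f"{p:>9.6f} Pa -> {spl_db(p):6.1f} dB")
```

The same arithmetic shows that the perceptible range of 20 μPa to 20 Pa spans 0 to 120 dB, and that the 100-fold amplification attributed to the outer hair cells corresponds to 20 log10(100) = 40 dB.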

The frequency-discrimination threshold, that is, the minimal detectable difference in frequency, is 1.5 Hz up to 500 Hz, and 0.3% of the stimulus frequency at higher frequencies. At sound pressures near the auditory threshold, the sound-pressure-discrimination threshold is approximately 20%, although differences of as little as 2% may be detected at high sound pressures.
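The two discrimination rules can be written as small functions. This is a sketch using only the figures in the text; note that the two frequency rules agree at 500 Hz (0.3% of 500 Hz is 1.5 Hz). Treating the 20% and 2% figures as relative differences in sound pressure, and converting them to decibels, is an interpretive assumption.

```python
import math


def frequency_jnd_hz(freq_hz):
    """Minimal detectable frequency difference, using the text's figures:
    1.5 Hz up to 500 Hz, 0.3 % of the stimulus frequency above 500 Hz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1.5 if freq_hz <= 500 else 0.003 * freq_hz


def pressure_jnd_db(relative_difference):
    """Convert a just-detectable relative pressure difference into dB."""
    return 20 * math.log10(1 + relative_difference)


for f in (250, 500, 1_000, 4_000):
    print(f"{f:>5} Hz: about {frequency_jnd_hz(f):.1f} Hz")
print(f"20 % near threshold = {pressure_jnd_db(0.20):.2f} dB")  # 1.58 dB
print(f" 2 % at high levels = {pressure_jnd_db(0.02):.2f} dB")  # 0.17 dB
```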

If two sounds differ in frequency by a sufficiently small amount, only one tone will be heard. The perceived frequency of this tone lies midway between the two source tones, but its sound pressure level fluctuates. If two acoustic stimuli have similar frequencies but differing intensities, a masking effect occurs. If the difference in sound pressure is large enough, masking will be complete, with only the louder sound perceived.
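The physical basis of the single, fluctuating tone is the trigonometric identity sin a + sin b = 2 sin((a + b)/2) cos((a − b)/2): the sum of two close tones equals one tone at their mean frequency whose amplitude rises and falls slowly. The sketch below checks this numerically for two hypothetical tones 4 Hz apart; it describes only the signal, not how the auditory system perceives it or how masking arises.

```python
import math


def two_tone_sum(t, f1, f2):
    """Sum of two equal-amplitude pure tones (frequencies in Hz) at time t (s)."""
    return math.sin(2 * math.pi * f1 * t) + math.sin(2 * math.pi * f2 * t)


def envelope(t, f1, f2):
    """Slowly varying amplitude term 2 cos(pi (f1 - f2) t)."""
    return 2 * math.cos(math.pi * (f1 - f2) * t)


def single_modulated_tone(t, f1, f2):
    """The same signal written as one tone at the mean frequency,
    scaled by the envelope (sin a + sin b = 2 sin((a+b)/2) cos((a-b)/2))."""
    mean_freq = (f1 + f2) / 2
    return envelope(t, f1, f2) * math.sin(2 * math.pi * mean_freq * t)


f1, f2 = 1_000.0, 1_004.0  # hypothetical tones 4 Hz apart
for t in (0.0, 0.0625, 0.125, 0.1875, 0.25):
    print(f"t = {t:.4f} s  |envelope| = {abs(envelope(t, f1, f2)):.2f}")
```

The envelope falls to zero and returns to full strength |f1 − f2| = 4 times per second, while the underlying oscillation sits at 1,002 Hz, midway between the two tones.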

Localization of acoustic stimuli depends on detecting the time lag between the arrival of the stimulus at each ear and, as such, requires intact bilateral hearing. The smallest detectable time lag is $3 \times 10^{-5}$ seconds. Localization is also facilitated by the head’s screening effect, which produces differences in stimulus intensity at each ear.
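To relate the 30 μs figure to a direction in space, the sketch below swaps in Woodworth’s spherical-head formula for the interaural time difference, ITD = (r/c)(θ + sin θ). That model is not from this article, and the head radius (8.75 cm) and speed of sound (343 m/s) are assumed typical values. Only the time cue is modelled; the intensity cue from the head’s screening effect is ignored.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average adult head radius
SPEED_OF_SOUND_M_S = 343.0   # assumed: air at about 20 °C
MIN_DETECTABLE_LAG_S = 3e-5  # smallest detectable time lag, from the text


def woodworth_itd(azimuth_rad, radius=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """Interaural time difference (s) for a distant source, spherical-head model.

    Valid for azimuths between 0 (straight ahead) and pi/2 (directly to one side).
    """
    return (radius / c) * (azimuth_rad + math.sin(azimuth_rad))


def azimuth_for_lag_deg(lag_s, tol=1e-12):
    """Bisect for the azimuth (degrees) whose ITD equals lag_s."""
    lo, hi = 0.0, math.pi / 2
    if not 0.0 <= lag_s <= woodworth_itd(hi):
        raise ValueError("lag outside the range the model can produce")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if woodworth_itd(mid) < lag_s:
            lo = mid  # ITD increases with azimuth on [0, pi/2]
        else:
            hi = mid
    return math.degrees((lo + hi) / 2)


print(f"largest ITD (source at 90 deg): {woodworth_itd(math.pi / 2) * 1e6:.0f} us")
print(f"azimuth giving a 30 us lag: {azimuth_for_lag_deg(MIN_DETECTABLE_LAG_S):.1f} deg")
```

Under these assumptions the smallest detectable lag corresponds to a shift of roughly 3 to 4 degrees away from straight ahead.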

The remarkable ability of human beings to resolve acoustic stimuli is a result of frequency decomposition by the inner ear and frequency analysis by the brain. These are the mechanisms that allow individual sound sources such as individual musical instruments to be detected and identified in the complex acoustic signals that make up the music of a full symphony orchestra.

Physiopathology

Ciliary damage

The ciliary motion induced by intense acoustic stimuli may exceed the mechanical resistance of the cilia and cause mechanical destruction of hair cells. As these cells are limited in number and incapable of regeneration, any cell loss is permanent, and if exposure to the harmful sound stimulus continues, progressive. In general, the ultimate effect of ciliary damage is the development of a hearing deficit.

Outer hair cells are the cells most sensitive to sound and to toxic agents such as anoxia, ototoxic medications and chemicals (e.g., quinine derivatives, streptomycin and some other antibiotics, some anti-tumour preparations), and are thus the first to be lost. Only passive hydromechanical phenomena remain operative in outer hair cells which are damaged or have damaged stereocilia. Under these conditions, only gross analysis of acoustic vibration is possible. In very rough terms, destruction of the cilia of outer hair cells results in a 40 dB increase in hearing threshold.

Cellular damage

Exposure to noise, especially if it is repetitive or prolonged, may also affect the metabolism of cells of the organ of Corti, and afferent synapses located beneath the inner hair cells. Reported extraciliary effects include modification of cell ultrastructure (reticulum, mitochondria, lysosomes) and, postsynaptically, swelling of afferent dendrites. Dendritic swelling is probably due to the toxic accumulation of neurotransmitters as a result of excessive activity by inner hair cells. Nevertheless, the extent of stereociliary damage appears to determine whether hearing loss is temporary or permanent.

Noise-induced Hearing Loss

Noise is a serious hazard to hearing in today’s increasingly complex industrial societies. For example, noise exposure accounts for approximately one-third of the 28 million cases of hearing loss in the United States, and NIOSH (the National Institute for Occupational Safety and Health) reports that 14% of American workers are exposed to potentially dangerous sound levels, that is, levels exceeding 90 dB. Noise exposure is the most widespread harmful occupational exposure and is the second leading cause of hearing loss, after age-related effects. Finally, the contribution of non-occupational noise exposure, such as home workshops, over-amplified music (especially through earphones) and the use of firearms, must not be forgotten.

Acute noise-induced damage. The immediate effects of exposure to high-intensity sound stimuli (for example, explosions) include elevation of the hearing threshold, rupture of the eardrum, and traumatic damage to the middle and inner ears (dislocation of ossicles, cochlear injury or fistulas).

Temporary threshold shift. Noise exposure results in a decrease in the sensitivity of auditory sensory cells that increases with the duration and intensity of exposure. In its early stages, this increase in auditory threshold, known as auditory fatigue or temporary threshold shift (TTS), is entirely reversible but persists for some time after exposure ends.

Studies of the recovery of auditory sensitivity have identified several types of auditory fatigue. Short-term fatigue dissipates in less than two minutes and results in a maximum threshold shift at the exposure frequency. Long-term fatigue is characterized by recovery in more than two minutes but less than 16 hours, an arbitrary limit derived from studies of industrial noise exposure. In general, auditory fatigue is a function of stimulus intensity, duration, frequency, and continuity. Thus, for a given dose of noise, obtained by integration of intensity and duration, intermittent exposure patterns are less harmful than continuous ones.

The severity of the TTS increases by approximately 6 dB for every doubling of stimulus intensity. Above a specific exposure intensity (the critical level), this rate increases, particularly if exposure is to impulse noise. The TTS increases asymptotically with exposure duration; the asymptote itself increases with stimulus intensity. Due to the characteristics of the outer and middle ears’ transfer function, low frequencies are tolerated the best.

Studies on exposure to pure tones indicate that as the stimulus intensity increases, the frequency at which the TTS is greatest progressively shifts towards frequencies above that of the stimulus. Subjects exposed to a pure tone of 2,000 Hz develop TTS which is maximal at approximately 3,000 Hz (a shift of about half an octave). The noise’s effect on the outer hair cells is believed to be responsible for this phenomenon.

A worker with TTS recovers to baseline hearing values within hours after removal from noise. However, repeated noise exposure results in progressively less recovery and, eventually, permanent hearing loss.

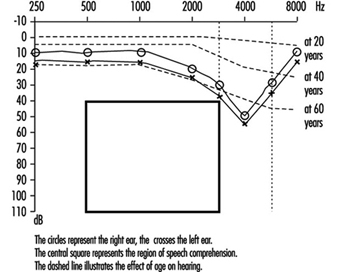

Permanent threshold shift. Exposure to high-intensity sound stimuli over several years may lead to permanent hearing loss. This is referred to as permanent threshold shift (PTS). Anatomically, PTS is characterized by degeneration of the hair cells, starting with slight histological modifications but eventually culminating in complete cell destruction. Hearing loss is most likely to involve frequencies to which the ear is most sensitive, as it is at these frequencies that the transmission of acoustic energy from the external environment to the inner ear is optimal. This explains why hearing loss at 4,000 Hz is the first sign of occupationally induced hearing loss (figure 3). Interaction has been observed between stimulus intensity and duration, and international standards assume the degree of hearing loss to be a function of the total acoustic energy received by the ear (dose of noise).

Figure 3. Audiogram showing bilateral noise-induced hearing loss.
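The “dose of noise” idea can be illustrated with the equal-energy calculation that standards such as ISO 1999 express as an 8-hour normalized exposure level. This is a minimal sketch, assuming A-weighted levels and an 8-hour reference day; the shift patterns are hypothetical examples.

```python
import math

REFERENCE_HOURS = 8.0


def daily_exposure_level(segments):
    """Equal-energy 8-hour equivalent level (dB(A)) for one working day.

    segments: iterable of (level_dBA, duration_hours) pairs. Each segment
    contributes energy proportional to duration * 10**(level / 10); the sum
    is re-expressed as the level delivering the same energy over 8 hours.
    """
    energy = 0.0
    for level_db, hours in segments:
        if hours < 0:
            raise ValueError("durations must be non-negative")
        energy += hours * 10 ** (level_db / 10)
    if energy == 0.0:
        raise ValueError("no exposure given")
    return 10 * math.log10(energy / REFERENCE_HOURS)


shifts = {  # hypothetical working days
    "8 h at 85 dB(A)": [(85.0, 8.0)],
    "4 h at 88 dB(A)": [(88.0, 4.0)],
    "2 h at 95 + 6 h at 80 dB(A)": [(95.0, 2.0), (80.0, 6.0)],
}
for label, segs in shifts.items():
    print(f"{label:<28} -> {daily_exposure_level(segs):.1f} dB(A)")
```

Halving the duration offsets a 3 dB increase in level, so the first two days carry the same dose. A pure energy sum does not capture the observation, noted above, that intermittent exposure is less harmful than continuous exposure of equal dose.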

The development of noise-induced hearing loss shows individual susceptibility. Various potentially important variables have been examined to explain this susceptibility, such as age, gender, race, cardiovascular disease, smoking, etc. The data were inconclusive.

An interesting question is whether the amount of TTS could be used to predict the risk of PTS. As noted above, there is a progressive shift of the TTS to frequencies above that of the stimulation frequency. On the other hand, most of the ciliary damage occurring at high stimulus intensities involves cells that are sensitive to the stimulus frequency. Should exposure persist, the difference between the frequency at which the PTS is maximal and the stimulation frequency progressively decreases. Ciliary damage and cell loss consequently occur in the cells most sensitive to the stimulus frequencies. It thus appears that TTS and PTS involve different mechanisms, and that it is impossible to predict an individual’s PTS on the basis of the observed TTS.

Individuals with PTS are usually asymptomatic initially. As the hearing loss progresses, they begin to have difficulty following conversations in noisy settings such as parties or restaurants. The progression, which usually affects the ability to perceive high-pitched sounds first, is usually painless and relatively slow.

Examination of individuals suffering from hearing loss

Clinical examination

In addition to the history of the date when the hearing loss was first detected (if any) and how it has evolved, including any asymmetry of hearing, the medical questionnaire should elicit information on the patient’s age, family history, use of ototoxic medications or exposure to other ototoxic chemicals, the presence of tinnitus (i.e., buzzing, whistling or ringing sounds in one or both ears), dizziness or any problems with balance, and any history of ear infections with pain or discharge from the outer ear canal. Of critical importance is a detailed life-long history of exposures to high sound levels (note that, to the layperson, not all sounds are “noise”) on the job, in previous jobs and off-the-job. A history of episodes of TTS would confirm prior toxic exposures to noise.

Physical examination should include evaluation of the function of the other cranial nerves, tests of balance, and ophthalmoscopy to detect any evidence of increased intracranial pressure. Visual examination of the external auditory canal will detect any impacted cerumen and, after it has been cautiously removed (no sharp object!), any evidence of scarring or perforation of the tympanic membrane. Hearing loss can be determined very crudely by testing the patient’s ability to repeat words and phrases spoken softly or whispered by the examiner when positioned behind and out of the sight of the patient. The Weber test (placing a vibrating tuning fork in the centre of the forehead to determine if this sound is “heard” in either or both ears) and the Rinne tuning-fork test (placing a vibrating tuning fork on the mastoid process until the patient can no longer hear the sound, then quickly placing the fork near the ear canal; normally the sound can be heard longer through air than through bone) will allow classification of the hearing loss as transmission or neurosensory.

The audiogram is the standard test to detect and evaluate hearing loss (see below). Specialized studies to complement the audiogram may be necessary in some patients. These include tympanometry, word discrimination tests, evaluation of the attenuation reflex, electrophysiological studies (electrocochleogram, auditory evoked potentials) and radiological studies (routine skull x rays complemented by CAT scan, MRI).

Audiometry

This crucial component of the medical evaluation uses a device known as an audiometer to determine the auditory threshold of individuals to pure tones of 250-8,000 Hz and sound levels between –10 dB (the hearing threshold of intact ears) and 110 dB (maximal damage). To eliminate the effects of TTS, patients should not have been exposed to noise during the previous 16 hours. Air conduction is measured by earphones placed on the ears, while bone conduction is measured by placing a vibrator in contact with the skull behind the ear. Each ear’s hearing is measured separately and test results are reported on a graph known as an audiogram (figure 3). The threshold of intelligibility, that is, the sound intensity at which speech becomes intelligible, is determined by a complementary test method known as vocal audiometry, based on the ability to understand words composed of two syllables of equal intensity (for instance, shepherd, dinner, stunning).

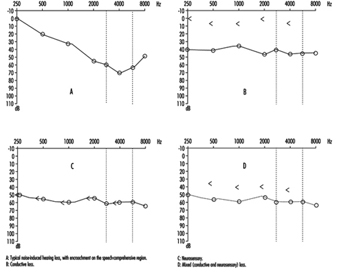

Comparison of air and bone conduction allows classification of hearing losses as transmission (involving the external auditory canal or middle ear) or neurosensory loss (involving the inner ear or auditory nerve) (figures 3 and 4). The audiogram observed in cases of noise-induced hearing loss is characterized by an onset of hearing loss at 4,000 Hz, visible as a dip in the audiogram (figure 3). As exposure to excessive noise levels continues, neighbouring frequencies are progressively affected and the dip broadens, encroaching, at approximately 3,000 Hz, on frequencies essential for the comprehension of conversation. Noise-induced hearing loss is usually bilateral and shows a similar pattern in both ears, that is, the difference between the two ears does not exceed 15 dB at 500, 1,000 and 2,000 Hz, or 30 dB at 3,000, 4,000 and 6,000 Hz. Asymmetric damage may, however, be present in cases of non-uniform exposure, for example, with marksmen, in whom hearing loss is greater on the side opposite to the trigger finger (the left side, in a right-handed person). In hearing loss unrelated to noise exposure, the audiogram does not exhibit the characteristic 4,000 Hz dip (figure 4).

Figure 4. Examples of right-ear audiograms. The circles represent air-conduction hearing loss; the other symbols represent bone conduction.
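The symmetry criterion described above can be applied mechanically to a pair of audiograms. In this sketch the limits come from the text and the first pair of thresholds comes from Table 1; the marksman audiogram is hypothetical. A flagged frequency only signals a pattern that departs from the typical noise-induced one; interpreting it remains a clinical judgement.

```python
# Largest inter-ear differences (dB) consistent with the symmetrical
# pattern of noise-induced hearing loss, as stated in the text.
SYMMETRY_LIMITS_DB = {500: 15, 1_000: 15, 2_000: 15, 3_000: 30, 4_000: 30, 6_000: 30}


def asymmetric_frequencies(right_db, left_db, limits=SYMMETRY_LIMITS_DB):
    """Return the test frequencies (Hz) at which |right - left| exceeds the limit.

    right_db, left_db: dicts mapping frequency in Hz to hearing threshold in dB.
    """
    return [f for f, limit in limits.items() if abs(right_db[f] - left_db[f]) > limit]


# Thresholds from Table 1 of this article.
right = {500: 25, 1_000: 35, 2_000: 35, 3_000: 45, 4_000: 50, 6_000: 60}
left = {500: 25, 1_000: 35, 2_000: 40, 3_000: 50, 4_000: 60, 6_000: 70}
print(asymmetric_frequencies(right, left))  # []

# Hypothetical marksman: much greater loss in the left ear at high frequencies.
left_marksman = {**left, 4_000: 90, 6_000: 95}
print(asymmetric_frequencies(right, left_marksman))  # [4000, 6000]
```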

There are two types of audiometric examinations: screening and diagnostic. Screening audiometry is used for the rapid examination of groups of individuals in the workplace, in schools or elsewhere in the community to identify those who appear to have some hearing loss. Often, electronic audiometers that permit self-testing are used and, as a rule, screening audiograms are obtained in a quiet area but not necessarily in a sound-proof, vibration-free chamber. The latter is considered to be a prerequisite for diagnostic audiometry which is intended to measure hearing loss with reproducible precision and accuracy. The diagnostic examination is properly performed by a trained audiologist (in some circumstances, formal certification of the competence of the audiologist is required). The accuracy of both types of audiometry depends on periodic testing and recalibration of the equipment being used.

In many jurisdictions, individuals with job-related, noise-induced hearing loss are eligible for workers’ compensation benefits. Accordingly, many employers are including audiometry in their preplacement medical examinations to detect any existing hearing loss that may be the responsibility of a previous employer or represent a non-occupational exposure.

Hearing thresholds progressively increase with age, with higher frequencies being more affected (figure 3). The characteristic 4,000 Hz dip observed in noise-induced hearing loss is not seen with this type of hearing loss.

Calculation of hearing loss

In the United States the most widely accepted formula for calculating functional limitation related to hearing loss is the one proposed in 1979 by the American Academy of Otolaryngology (AAO) and adopted by the American Medical Association. It is based on the average of the thresholds obtained at 500, 1,000, 2,000 and 3,000 Hz (table 1), with the lower limit for functional limitation set at 25 dB.

Table 1. Typical calculation of functional loss from an audiogram

| Frequency | 500 Hz | 1,000 Hz | 2,000 Hz | 3,000 Hz | 4,000 Hz | 6,000 Hz | 8,000 Hz |
|---|---|---|---|---|---|---|---|
| Right ear (dB) | 25 | 35 | 35 | 45 | 50 | 60 | 45 |
| Left ear (dB) | 25 | 35 | 40 | 50 | 60 | 70 | 50 |

Unilateral loss

Percentage unilateral loss = [(average at 500, 1,000, 2,000 and 3,000 Hz) – 25 dB (lower limit)] × 1.5

Example: Right ear: [(25 + 35 + 35 + 45)/4 – 25] × 1.5 = 15 (per cent); Left ear: [(25 + 35 + 40 + 50)/4 – 25] × 1.5 = 18.8 (per cent)

Bilateral loss

Percentage bilateral loss = [(percentage unilateral loss of the better ear × 5) + (percentage unilateral loss of the worse ear)]/6

Example: [(15 × 5) + 18.8]/6 = 15.6 (per cent)

Source: Rees and Duckert 1994.
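The Table 1 calculation can be reproduced in a few lines. This sketch follows the formula as given in the table; clamping each ear’s result to the range 0-100% is an assumption (no loss is counted below the 25 dB fence, and a percentage cannot exceed 100%).

```python
AVERAGING_FREQS_HZ = (500, 1_000, 2_000, 3_000)
LOW_FENCE_DB = 25.0   # lower limit for functional limitation
PERCENT_PER_DB = 1.5


def unilateral_loss_pct(thresholds_db):
    """Percentage loss for one ear from its thresholds (dict: Hz -> dB).

    Clamping to 0-100 % is an assumption, not part of the table.
    """
    average = sum(thresholds_db[f] for f in AVERAGING_FREQS_HZ) / len(AVERAGING_FREQS_HZ)
    pct = (average - LOW_FENCE_DB) * PERCENT_PER_DB
    return min(max(pct, 0.0), 100.0)


def bilateral_loss_pct(ear_a_pct, ear_b_pct):
    """Weight the better (smaller-loss) ear five times the worse ear."""
    better, worse = sorted((ear_a_pct, ear_b_pct))
    return (better * 5 + worse) / 6


right = {500: 25, 1_000: 35, 2_000: 35, 3_000: 45}
left = {500: 25, 1_000: 35, 2_000: 40, 3_000: 50}
r, l = unilateral_loss_pct(right), unilateral_loss_pct(left)
print(f"right {r:.1f} %, left {l:.1f} %, bilateral {bilateral_loss_pct(r, l):.1f} %")
```

Sorting the two unilateral values means the caller does not need to know in advance which ear is the better one.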

Presbycusis

Presbycusis or age-related hearing loss generally begins at about age 40 and progresses gradually with increasing age. It is usually bilateral. The characteristic 4,000 Hz dip observed in noise-induced hearing loss is not seen with presbycusis. However, it is possible to have the effects of ageing superimposed on noise-related hearing loss.

Treatment

The first essential of treatment is avoidance of any further exposure to potentially toxic levels of noise (see “Prevention” below). It is generally believed that no more subsequent hearing loss occurs after the removal from noise exposure than would be expected from the normal ageing process.

While conduction losses, for example, those related to acute traumatic noise-induced damage, are amenable to medical treatment or surgery, chronic noise-induced hearing loss cannot be corrected by treatment. The use of a hearing aid is the sole “remedy” possible, and is only indicated when hearing loss affects the frequencies critical for speech comprehension (500 to 3,000 Hz). Other types of support, for example lip-reading and sound amplifiers (on telephones, for example), may, however, be possible.

Prevention

Because noise-induced hearing loss is permanent, it is essential to apply any measure likely to reduce exposure. This includes reduction at the source (quieter machines and equipment or encasing them in sound-proof enclosures) or the use of individual protective devices such as ear plugs and/or ear muffs. If reliance is placed on the latter, it is imperative to verify that their manufacturers’ claims for effectiveness are valid and that exposed workers are using them properly at all times.

The 85 dB(A) limit was designated as the highest permissible occupational exposure level in order to protect the greatest number of people. However, since there is significant interpersonal variation, strenuous efforts to keep exposures well below that level are indicated. Periodic audiometry should be instituted as part of the medical surveillance programme to detect as early as possible any effects that may indicate noise toxicity.
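An exposure limit expressed as a level is usually applied as an allowed daily duration. The text gives only the 85 dB(A) figure; this sketch assumes it applies to an 8-hour day and that the allowed time halves for every 3 dB increase (an equal-energy exchange rate). Both the exchange rate and the example exposures are assumptions for illustration.

```python
CRITERION_DBA = 85.0    # exposure limit cited in the text, taken as 8 hours
CRITERION_HOURS = 8.0
EXCHANGE_RATE_DB = 3.0  # assumption: equal-energy exchange rate


def permissible_hours(level_dba, criterion=CRITERION_DBA, exchange=EXCHANGE_RATE_DB):
    """Allowed daily duration at a steady level: halved for every
    `exchange` dB above the criterion level."""
    return CRITERION_HOURS / 2 ** ((level_dba - criterion) / exchange)


def daily_dose_pct(segments, **kwargs):
    """Noise dose as a percentage of the daily allowance.

    segments: iterable of (level_dBA, duration_hours) pairs.
    """
    return 100 * sum(hours / permissible_hours(level, **kwargs) for level, hours in segments)


for level in (85, 88, 91, 94, 100):
    print(f"{level} dB(A): {permissible_hours(level):.2f} h")
print(f"dose for 2 h at 91 + 4 h at 85 dB(A): {daily_dose_pct([(91, 2), (85, 4)]):.0f} %")
```

A dose above 100% exceeds the limit. Some regulatory schemes use a different exchange rate; passing, for example, `exchange=5.0` shows how much longer the permitted durations become under that choice.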

Chemically-Induced Hearing Disorders

Hearing impairment due to the cochlear toxicity of several drugs is well documented (Ryback 1993). Until the last decade, however, little attention had been paid to the audiological effects of industrial chemicals. Recent research on chemically-induced hearing disorders has focused on solvents, heavy metals and chemicals inducing anoxia.

Solvents. In studies with rodents, a permanent decrease in auditory sensitivity to high-frequency tones has been demonstrated following weeks of high-level exposure to toluene. Histopathological and auditory brainstem response studies have indicated a major effect on the cochlea with damage to the outer hair cells. Similar effects have been found in exposure to styrene, xylenes or trichloroethylene. Carbon disulphide and n-hexane may also affect auditory functions while their major effect seems to be on more central pathways (Johnson and Nylén 1995).

Several human cases of damage to the auditory system together with severe neurological abnormalities have been reported following solvent sniffing. In case series of persons with occupational exposure to solvent mixtures, n-hexane or carbon disulphide, both cochlear and central effects on auditory function have been reported. Exposure to noise was prevalent in these groups, but the effect on hearing has been considered greater than would be expected from noise alone.

Only a few controlled studies have so far addressed the problem of hearing impairment in humans exposed to solvents without significant noise exposure. In a Danish study, a statistically significant elevated risk of self-reported hearing impairment of 1.4 (95% CI: 1.1-1.9) was found after exposure to solvents for five years or more. In a group exposed to both solvents and noise, no additional effect of solvent exposure was found. Good agreement between reported hearing problems and audiometric criteria for hearing impairment was found in a subsample of the study population (Jacobsen et al. 1993).

In a Dutch study of styrene-exposed workers a dose-dependent difference in hearing thresholds was found by audiometry (Muijser et al. 1988).

In another study, from Brazil, the audiological effects of exposure to noise, to toluene combined with noise, and to mixed solvents were examined in workers in the printing and paint manufacturing industries. Compared with an unexposed control group, significantly elevated risks of audiometric high-frequency hearing loss were found for all three exposure groups. For noise and mixed-solvent exposures the relative risks were 4 and 5 respectively. In the group with combined toluene and noise exposure a relative risk of 11 was found, suggesting interaction between the two exposures (Morata et al. 1993).
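One way to read “suggesting interaction” is on an additive scale, where the joint relative risk is compared with the sum of the separate excess risks. The study did not report a toluene-only group, so the sketch below makes the hypothetical assumption that the mixed-solvent relative risk (5) stands in for toluene alone. The function names are illustrative and are not taken from the study.

```python
def reri(rr_both, rr_a, rr_b):
    """Relative excess risk due to interaction (additive scale).

    RERI = RR_AB - RR_A - RR_B + 1; a value above 0 means the joint
    effect exceeds the sum of the separate excess risks.
    """
    return rr_both - rr_a - rr_b + 1


def multiplicative_ratio(rr_both, rr_a, rr_b):
    """Observed joint RR divided by the product of the separate RRs
    (1 means no interaction on the multiplicative scale)."""
    return rr_both / (rr_a * rr_b)


# Relative risks from the text. Using the mixed-solvent RR as a stand-in
# for toluene alone is a HYPOTHETICAL assumption, not a reported result.
rr_noise, rr_solvent_proxy, rr_toluene_noise = 4.0, 5.0, 11.0
print("RERI:", reri(rr_toluene_noise, rr_noise, rr_solvent_proxy))
print("Multiplicative ratio:",
      multiplicative_ratio(rr_toluene_noise, rr_noise, rr_solvent_proxy))
```

Under that assumption the excess risk due to interaction is 3 (11 - 4 - 5 + 1), while the joint relative risk is 0.55 times the product of the separate ones, so the conclusion depends on the scale chosen as well as on the proxy.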

Metals. The effect of lead on hearing has been studied in surveys of children and teenagers from the United States. A significant dose-response association between blood lead and hearing thresholds at frequencies from 0.5 to 4 kHz was found after controlling for several potential confounders. The effect of lead was present across the entire range of exposure and could be detected at blood lead levels below 10 μg/100ml. In children without clinical signs of lead toxicity, a linear relationship has been found between blood lead and the latencies of waves III and V of the brainstem auditory evoked potentials (BAEP), indicating a site of action central to the cochlear nucleus (Otto et al. 1985).
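Blood lead is reported in μg/100 ml (μg/dl) in much of the literature and in μmol/l elsewhere. The conversion below is a minimal sketch using the standard atomic weight of lead (207.2 g/mol); the function name is illustrative. It shows that the 10 μg/100 ml level mentioned above corresponds to roughly 0.48 μmol/l.

```python
PB_MOLAR_MASS_G_PER_MOL = 207.2  # standard atomic weight of lead


def ug_per_dl_to_umol_per_l(ug_per_dl):
    """Convert a blood lead concentration from μg/100 ml (μg/dl) to μmol/l."""
    ug_per_l = ug_per_dl * 10  # 1 l = 10 dl
    # micrograms divided by grams per mole gives micromoles
    return ug_per_l / PB_MOLAR_MASS_G_PER_MOL


print(f"10 μg/100 ml = {ug_per_dl_to_umol_per_l(10):.2f} μmol/l")
```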

Hearing loss is described as a common part of the clinical picture in acute and chronic methyl-mercury poisoning. Both cochlear and postcochlear lesions have been implicated (Oyanagi et al. 1989). Inorganic mercury may also affect the auditory system, probably through damage to cochlear structures.

Exposure to inorganic arsenic has been implicated in hearing disorders in children. A high frequency of severe hearing loss (>30 dB) has been observed in children fed with powdered milk contaminated with pentavalent inorganic arsenic (arsenic V). In a study from Czechoslovakia, environmental exposure to arsenic from a coal-burning power plant was associated with audiometric hearing loss in ten-year-old children. In animal experiments, inorganic arsenic compounds have produced extensive cochlear damage (WHO 1981).

In acute trimethyltin poisoning, hearing loss and tinnitus have been early symptoms. Audiometry has shown pancochlear hearing loss of between 15 and 30 dB at presentation. It is not clear whether the abnormalities were reversible (Besser et al. 1987). In animal experiments, trimethyltin and triethyltin compounds have produced partly reversible cochlear damage (Clerisi et al. 1991).

Asphyxiants. In reports on acute human poisoning by carbon monoxide or hydrogen sulphide, hearing disorders have often been noted along with central nervous system disease (Ryback 1992).

In experiments with rodents, exposure to carbon monoxide had a synergistic effect with noise on auditory thresholds and cochlear structures. No effect was observed after exposure to carbon monoxide alone (Fechter et al. 1988).

Summary

Experimental studies have documented that several solvents can produce hearing disorders under certain exposure circumstances. Studies in humans have indicated that the effect may be present following exposures that are common in the occupational environment. Synergistic effects between noise and chemicals have been observed in some human and experimental animal studies. Some heavy metals may affect hearing, most of them only at exposure levels that produce overt systemic toxicity. For lead, minor effects on hearing thresholds have been observed at exposures far below occupational exposure levels. A specific ototoxic effect from asphyxiants has not been documented at present although carbon monoxide may enhance the audiological effect of noise.

Physically-Induced Hearing Disorders

By virtue of its position within the skull, the auditory system is generally well protected against injuries from external physical forces. There are, however, a number of physical workplace hazards that may affect it. They include:

Barotrauma. Sudden variation in barometric pressure (due to rapid underwater descent or ascent, or sudden aircraft descent) associated with malfunction of the Eustachian tube (failure to equalize pressure) may lead to rupture of the tympanic membrane with pain and haemorrhage into the middle and external ears. In less severe cases stretching of the membrane will cause mild to severe pain. There will be a temporary impairment of hearing (conductive loss), but generally the trauma has a benign course with complete functional recovery.

Vibration. Simultaneous exposure to vibration and noise (continuous or impact) does not increase the risk or severity of sensorineural hearing loss; however, the rate of onset appears to be increased in workers with hand-arm vibration syndrome (HAVS). The cochlear circulation is presumed to be affected by reflex sympathetic spasm when such workers have bouts of vasospasm (Raynaud’s phenomenon) in their fingers or toes.

Infrasound and ultrasound. The acoustic energy of both is normally inaudible to humans. The common sources of ultrasound, for example jet engines, high-speed dental drills, and ultrasonic cleaners and mixers, all emit audible sound as well, so the effects of ultrasound on exposed subjects are not easily discernible. Ultrasound is presumed to be harmless below 120 dB and therefore unlikely to cause noise-induced hearing loss (NIHL). Likewise, infrasound (low-frequency noise) is relatively safe, but at high intensities (119 to 144 dB) hearing loss may occur.
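Because decibels are logarithmic, the levels quoted above span a wide range of physical pressures: each 20 dB corresponds to a tenfold increase in sound pressure. The sketch below assumes the levels are unweighted sound pressure levels referred to 20 μPa, the usual reference for airborne sound; the text does not state the weighting, and the function name is illustrative.

```python
P_REF_PA = 20e-6  # reference sound pressure for airborne sound, 20 μPa


def spl_to_pressure_pa(level_db):
    """RMS sound pressure in pascals for a sound pressure level in dB re 20 μPa."""
    return P_REF_PA * 10 ** (level_db / 20)


for level in (119, 120, 144):
    print(f"{level} dB -> {spl_to_pressure_pa(level):.1f} Pa")
```

On that assumption, 120 dB corresponds to about 20 Pa and 144 dB to about 317 Pa.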

“Welder’s ear”. Hot sparks may penetrate the external auditory canal to the level of the tympanic membrane, burning it. This causes acute ear pain and sometimes facial nerve paralysis. With minor burns, the condition requires no treatment, while in more severe cases, surgical repair of the membrane may be necessary. The risk may be avoided by correct positioning of the welder’s helmet or by wearing ear plugs.

Equilibrium

Balance System Function

Input

Perception and control of orientation and motion of the body in space is achieved by a system that involves simultaneous input from three sources: vision, the vestibular organ in the inner ear, and sensors in the muscles, joints and skin that provide somatosensory or “proprioceptive” information about movement of the body and physical contact with the environment (figure 1). The combined input is integrated in the central nervous system, which generates appropriate actions to restore and maintain balance, coordination and well-being. Failure to compensate in any part of the system may produce unease, dizziness and unsteadiness, and can result in falls.

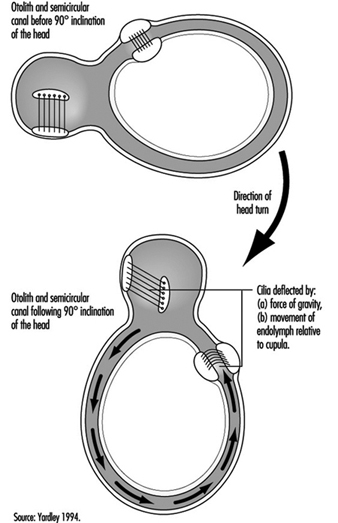

Figure 1. An outline of the principal elements of the balance system

The vestibular system directly registers the orientation and movement of the head. The vestibular labyrinth is a tiny bony structure located in the inner ear, and comprises the semicircular canals filled with fluid (endolymph) and the otoliths (Figure 6). The three semicircular canals are positioned at right angles so that acceleration can be detected in each of the three possible planes of angular motion. During head turns, the relative movement of the endolymph within the canals (caused by inertia) results in deflection of the cilia projecting from the sensory cells, inducing a change in the neural signal from these cells (figure 2). The otoliths contain heavy crystals (otoconia) which respond to changes in the position of the head relative to the force of gravity and to linear acceleration or deceleration, again bending the cilia and so altering the signal from the sensory cells to which they are attached.
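The benefit of placing the three canals at right angles can be illustrated with simple vector arithmetic: any angular acceleration of the head can be split into three perpendicular components, one sensed by each canal. The sketch below is an idealization with hypothetical, generic axis labels; real canals are only approximately orthogonal and are tilted relative to the usual head axes, and the dynamic response of the canals is ignored.

```python
import math

# Idealized, HYPOTHETICAL canal geometry: three mutually perpendicular unit
# vectors, each normal to the plane of one canal.
CANAL_AXES = {
    "canal_1": (1.0, 0.0, 0.0),
    "canal_2": (0.0, 1.0, 0.0),
    "canal_3": (0.0, 0.0, 1.0),
}


def canal_components(angular_accel):
    """Project a head angular-acceleration vector onto each canal axis."""
    return {name: sum(a * b for a, b in zip(axis, angular_accel))
            for name, axis in CANAL_AXES.items()}


accel = (30.0, -10.0, 50.0)  # arbitrary example, deg/s^2
parts = canal_components(accel)
print(parts)
# With orthonormal axes, the three components together recover the full
# magnitude, so no rotation can go undetected by all three canals.
print(math.isclose(math.sqrt(sum(v * v for v in parts.values())),
                   math.sqrt(sum(a * a for a in accel))))
```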

Figure 2. Schematic diagram of the vestibular labyrinth.

Figure 3. Schematic representation of the biomechanical effects of a ninety-degree (forward) inclination of the head.

Integration

The central interconnections within the balance system are extremely complex; information from the vestibular organs in both ears is combined with information derived from vision and the somatosensory system at various levels within the brainstem, cerebellum and cortex (Luxon 1984).

Output

This integrated information provides the basis not only for the conscious perception of orientation and self-motion, but also for the preconscious control of eye movements and posture, by means of what are known as the vestibulo-ocular and vestibulospinal reflexes. The purpose of the vestibulo-ocular reflex is to maintain a stable point of visual fixation during head movement by automatically compensating for the head movement with an equivalent eye movement in the opposite direction (Howard 1982). The vestibulospinal reflexes contribute to postural stability and balance (Pompeiano and Allum 1988).
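The compensation performed by the vestibulo-ocular reflex can be expressed as a gain, the ratio of eye velocity to head velocity, with the eye moving in the opposite direction. A minimal sketch, assuming a stationary target and a simple constant gain (latency, smooth pursuit and other mechanisms are ignored; the function names are illustrative), shows that a gain of 1 leaves no image motion on the retina, while a lower gain leaves a residual “retinal slip” that would blur the image.

```python
def eye_velocity(head_velocity, gain=1.0):
    """Compensatory eye velocity (deg/s) of an idealized reflex.

    The eye rotates opposite to the head; gain = |eye| / |head|.
    """
    return -gain * head_velocity


def retinal_slip(head_velocity, gain=1.0):
    """Gaze velocity in space: head velocity plus eye-in-head velocity.

    Zero slip means the point of fixation stays stable on the retina.
    """
    return head_velocity + eye_velocity(head_velocity, gain)


for g in (1.0, 0.5):
    print(f"gain {g}: slip = {retinal_slip(100.0, g):.1f} deg/s")
```

With the head turning at 100 degrees per second, a gain of 0.5 leaves the gaze drifting at 50 degrees per second.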

Balance System Dysfunction

In normal circumstances, the input from the vestibular, visual and somatosensory systems is congruent, but if an apparent mismatch occurs between the different sensory inputs to the balance system, the result is a subjective sensation of dizziness, disorientation, or illusory sense of movement. If the dizziness is prolonged or severe it will be accompanied by secondary symptoms such as nausea, cold sweating, pallor, fatigue, and even vomiting. Disruption of reflex control of eye movements and posture may result in a blurred or flickering visual image, a tendency to veer to one side when walking, or staggering and falling. The medical term for the disorientation caused by balance system dysfunction is “vertigo,” which can be caused by a disorder of any of the sensory systems contributing to balance or by faulty central integration. Only 1 or 2% of the population consult their doctor each year on account of vertigo, but the incidence of dizziness and imbalance rises steeply with age. “Motion sickness” is a form of disorientation induced by artificial environmental conditions with which our balance system has not been equipped by evolution to cope, such as passive transport by car or boat (Crampton 1990).

Vestibular causes of vertigo

The most common causes of vestibular dysfunction are infection (vestibular labyrinthitis or neuronitis) and benign paroxysmal positional vertigo (BPPV), which is triggered principally by lying on one side. Recurrent attacks of severe vertigo accompanied by loss of hearing and noises (tinnitus) in one ear are typical of a syndrome known as Menière’s disease. Vestibular damage can also result from disorders of the middle ear (including bacterial disease, trauma and cholesteatoma), ototoxic drugs (which should be used only in medical emergencies), and head injury.

Non-vestibular peripheral causes of vertigo

Disorders of the neck, which may alter the somatosensory information relating to head movement or interfere with the blood-supply to the vestibular system, are believed by many clinicians to be a cause of vertigo. Common aetiologies include whiplash injury and arthritis. Sometimes unsteadiness is related to a loss of feeling in the feet and legs, which may be caused by diabetes, alcohol abuse, vitamin deficiency, damage to the spinal cord, or a number of other disorders. Occasionally the origin of feelings of giddiness or illusory movement of the environment can be traced to some distortion of the visual input. An abnormal visual input may be caused by weakness of the eye muscles, or may be experienced when adjusting to powerful lenses or to bifocal glasses.

Central causes of vertigo

Although most cases of vertigo are attributable to peripheral (mainly vestibular) pathology, symptoms of disorientation can be caused by damage to the brainstem, cerebellum or cortex. Vertigo due to central dysfunction is almost always accompanied by some other symptom of central neurological disorder, such as sensations of pain, tingling or numbness in the face or limbs, difficulty speaking or swallowing, headache, visual disturbances, and loss of motor control or loss of consciousness. The more common central causes of vertigo include disorders of the blood supply to the brain (ranging from migraine to strokes), epilepsy, multiple sclerosis, alcoholism, and occasionally tumours. Temporary dizziness and imbalance are potential side-effects of a vast array of drugs, including widely-used analgesics, contraceptives, and drugs used in the control of cardiovascular disease, diabetes and Parkinson’s disease, and in particular the centrally-acting drugs such as stimulants, sedatives, anti-convulsants, anti-depressants and tranquillizers (Ballantyne and Ajodhia 1984).

Diagnosis and treatment

All cases of vertigo require medical attention in order to ensure that the (relatively uncommon) dangerous conditions which can cause vertigo are detected and appropriate treatment is given. Medication can be given to relieve symptoms of acute vertigo in the short term, and in rare cases surgery may be required. However, if the vertigo is caused by a vestibular disorder the symptoms will generally subside over time as the central integrators adapt to the altered pattern of vestibular input—in the same way that sailors continuously exposed to the motion of waves gradually acquire their “sea legs”. For this to occur, it is essential to continue to make vigorous movements which stimulate the balance system, even though these will at first cause dizziness and discomfort. Since the symptoms of vertigo are frightening and embarrassing, sufferers may need physiotherapy and psychological support to combat the natural tendency to restrict their activities (Beyts 1987; Yardley 1994).

Vertigo in the Workplace

Risk factors

Dizziness and disorientation, which may become chronic, are common symptoms in workers exposed to organic solvents; furthermore, long-term exposure can result in objective signs of balance system dysfunction (e.g., abnormal vestibulo-ocular reflex control) even in people who experience no subjective dizziness (Gyntelberg et al. 1986; Möller et al. 1990). Changes in pressure encountered when flying or diving can cause damage to the vestibular organ, which results in sudden vertigo and hearing loss requiring immediate treatment (Head 1984). There is some evidence that noise-induced hearing loss can be accompanied by damage to the vestibular organs (van Dijk 1986). People who work for long periods at computer screens sometimes complain of dizziness; the cause of this remains unclear, although it may be related to the combination of a stiff neck and moving visual input.

Occupational difficulties

Unexpected attacks of vertigo, such as occur in Menière’s disease, can cause problems for people whose work involves heights, driving, handling dangerous machinery, or responsibility for the safety of others. An increased susceptibility to motion sickness is a common effect of balance system dysfunction and may interfere with travel.

Conclusion

Equilibrium is maintained by a complex multisensory system, and so disorientation and imbalance can result from a wide variety of aetiologies, in particular any condition which affects the vestibular system or the central integration of perceptual information for orientation. In the absence of central neurological damage the plasticity of the balance system will normally enable the individual to adapt to peripheral causes of disorientation, whether these are disorders of the inner ear which alter vestibular function, or environments which provoke motion sickness. However, attacks of dizziness are often unpredictable, alarming and disabling, and rehabilitation may be necessary to restore confidence and assist the balance function.

Vision and Work

Anatomy of the Eye

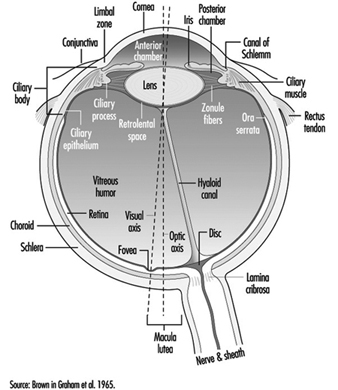

The eye is a sphere (Graham et al. 1965; Adler 1992), approximately 20 mm in diameter, that is set in the bony orbit, with the six extrinsic (ocular) muscles that move the eye attached to the sclera, its external wall (figure 1). In front, the sclera is replaced by the cornea, which is transparent. Behind the cornea, in the anterior chamber, is the iris, which regulates the diameter of the pupil, the opening through which the optic axis passes. The back of the anterior chamber is formed by the biconvex crystalline lens, whose curvature is determined by the ciliary muscles attached at the front to the sclera and behind to the choroidal membrane, which lines the posterior chamber. The posterior chamber is filled with the vitreous humour, a clear, gelatinous liquid. The choroid, which lines the posterior chamber, is black to prevent interference with visual acuity by internal light reflections.

Figure 1. Schematic representation of the eye.

The eyelids help to maintain a film of tears, produced by the lacrimal glands, which protects the anterior surface of the eye. Blinking facilitates the spread of tears and their drainage into the lacrimal canal, which empties into the nasal cavity. The frequency of blinking, which is used as a test in ergonomics, varies greatly depending on the activity being undertaken (for example, it is slower during reading) and also on the lighting conditions (the rate of blinking is lowered by an increase in illumination).

The iris contains two muscles: the sphincter, which contracts the pupil, and the dilator, which widens it. When a bright light is directed toward a normal eye, the pupil contracts (pupillary reflex). It also contracts when viewing a nearby object.

The retina has several inner layers of nerve cells and an outer layer containing two types of photoreceptor cells, the rods and cones. Light therefore passes through the nerve cells to reach the rods and cones where, in a manner not yet understood, it generates impulses in the nerve cells which pass along the optic nerve to the brain. The cones, numbering four to five million, are responsible for the perception of bright images and colour. They are concentrated in the central portion of the retina, most densely at the fovea, a small depression at the centre of the retina where there are no rods and where vision is most acute. With the help of spectrophotometry, three types of cones have been identified, whose absorption peaks lie in the yellow, green and blue regions of the spectrum, accounting for the sense of colour. The 80 to 100 million rods become more and more numerous toward the periphery of the retina and are sensitive to dim light (night vision). They also play a major role in black-and-white vision and in the detection of motion.

The nerve fibres, along with the blood vessels which nourish the retina, traverse the choroid, the middle of the three layers forming the wall of the posterior chamber, and leave the eye as the optic nerve at a point somewhat off-centre, which, because there are no photoreceptors there, is known as the “blind spot.”

The retinal vessels, the only arteries and veins in the body that can be viewed directly, can be visualized by directing a light through the pupil and using an ophthalmoscope to focus on their image (the images can also be photographed). Such ophthalmoscopic examinations, part of the routine medical examination, are important in evaluating the vascular components of diseases such as arteriosclerosis, hypertension and diabetes, which may cause retinal haemorrhages and/or exudates that may in turn cause defects in the field of vision.

Properties of the Eye that Are Important for Work

Mechanism of accommodation

In the emmetropic (normal) eye, as light rays pass through the cornea, the pupil and the lens, they are focused on the retina, producing an inverted image which is reversed by the visual centres in the brain.

When a distant object is viewed, the lens is flattened. When nearby objects are viewed, the lens accommodates (i.e., increases its power): contraction of the ciliary muscles allows it to assume a more rounded, convex shape. At the same time, the iris constricts the pupil, which improves the quality of the image by reducing the spherical and chromatic aberrations of the system and increasing the depth of field.

In binocular vision, accommodation is necessarily accompanied by proportional convergence of both eyes.

The visual field and the field of fixation

The visual field (the space covered by the eyes at rest) is limited by anatomical obstacles in the horizontal plane (more reduced on the side towards the nose) and in the vertical plane (limited by the upper edge of the orbit). In binocular vision, the horizontal field is about 180 degrees and the vertical field 120 to 130 degrees. In daytime vision, most visual functions are weakened at the periphery of the visual field; on the contrary, perception of movement is improved. In night vision there is a considerable loss of acuity at the centre of the visual field, where, as noted above, the rods are less numerous.

The field of fixation extends beyond the visual field thanks to the mobility of the eyes, head and body; in work activities it is the field of fixation that matters. The causes of reduction of the visual field, whether anatomical or physiological, are very numerous: narrowing of the pupil; opacity of the lens; pathological conditions of the retina, visual pathways or visual centres; the brightness of the target to be perceived; the frames of spectacles for correction or protection; the movement and speed of the target to be perceived; and others.

Visual acuity

“Visual acuity (VA) is the capacity to discriminate the fine details of objects in the field of view. It is specified in terms of the minimum dimension of some critical aspects of a test object that a subject can correctly identify” (Riggs, in Graham et al. 1965). A good visual acuity is the ability to distinguish fine details. Visual acuity defines the limit of spatial discrimination.

The retinal size of an object depends not only on its physical size but also on its distance from the eye; it is therefore expressed in terms of the visual angle (usually in minutes of arc). Visual acuity is the reciprocal of the smallest such angle that the eye can resolve.
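As a worked illustration of the visual angle and its reciprocal, the sketch below computes the angle subtended by a detail of given size at a given distance and converts it to a decimal acuity. The function names are illustrative; the exact arctangent form is used, although for such small angles the result is very nearly size divided by distance.

```python
import math


def visual_angle_arcmin(size, distance):
    """Visual angle, in minutes of arc, subtended by an object of `size`
    at `distance` (both in the same units)."""
    return math.degrees(2 * math.atan(size / (2 * distance))) * 60


def decimal_acuity(min_resolvable_arcmin):
    """Decimal visual acuity: the reciprocal of the smallest resolvable
    angle in minutes of arc (1.0 corresponds to 1 minute)."""
    return 1.0 / min_resolvable_arcmin


# A 1.75 mm detail viewed from 6 m (6000 mm)
angle = visual_angle_arcmin(1.75, 6000)
print(f"{angle:.2f} arcmin -> VA {decimal_acuity(angle):.2f}")
```

A detail of 1.75 mm viewed from 6 m subtends almost exactly 1 minute of arc, which corresponds to a decimal acuity of 1.0.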

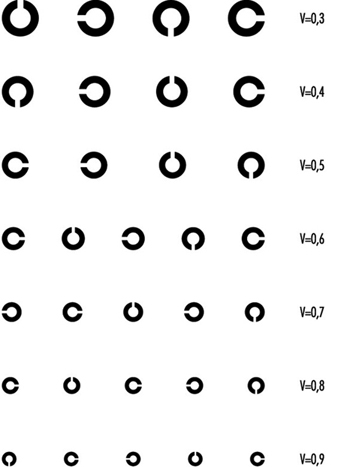

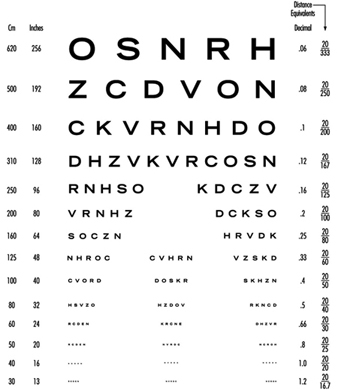

Riggs (1965) describes several types of “acuity task”. In clinical and occupational practice, the recognition task, in which the subject is required to name the test object and locate some details of it, is the most commonly applied. For convenience, in ophthalmology, visual acuity is measured relative to a value called “normal” using charts presenting a series of objects of different sizes; they have to be viewed at a standard distance.

In clinical practice Snellen charts are the most widely used tests for distant visual acuity. They present a series of characters whose critical details (stroke width and gaps) are designed to subtend an angle of 1 minute of arc, the whole character subtending 5 minutes, at a standard viewing distance which varies from country to country (20 feet between the chart and the tested individual in the United States; 6 metres in most European countries). The normal Snellen score is thus 20/20 (6/6 in metric notation). Larger test objects, whose details subtend 1 minute of arc at greater distances, are also provided.

The visual acuity of an individual is given by the relation VA = D′/D, where D′ is the standard viewing distance and D the distance at which the smallest test object correctly identified by the individual subtends an angle of 1 minute of arc. For example, a person’s VA is 20/30 if, at a viewing distance of 20 ft, he or she can just identify an object which subtends an angle of 1 minute at 30 feet.
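The relation VA = D′/D can be converted into a decimal acuity and into its reciprocal, the smallest resolvable angle in minutes of arc (often called the minimum angle of resolution, MAR). The sketch below uses illustrative function names; it also shows that 20 feet is close to 6 metres, which is why 20/20 and 6/6 denote the same normal score.

```python
FEET_TO_METRES = 0.3048


def snellen_decimal(test_distance, letter_distance):
    """VA = D'/D: D' is the viewing distance, D the distance at which the
    smallest object identified subtends 1 minute of arc."""
    return test_distance / letter_distance


def minimum_angle_arcmin(test_distance, letter_distance):
    """Reciprocal of the decimal acuity (D/D'), in minutes of arc."""
    return letter_distance / test_distance


print(f"20/30 -> decimal {snellen_decimal(20, 30):.2f}, "
      f"MAR {minimum_angle_arcmin(20, 30):.1f} arcmin")
print(f"20 ft = {20 * FEET_TO_METRES:.2f} m")
```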

In optometric practice, the objects are often letters of the alphabet (or familiar shapes, for illiterate people or children). However, when the test is repeated, charts should present unlearnable characters, the recognition of which involves no educational or cultural factors. This is one reason why the use of Landolt rings is nowadays internationally recommended, at least in scientific studies. Landolt rings are circles with a gap, the orientation of which has to be identified by the subject.

Except in ageing people and in individuals with accommodative defects (presbyopia), far and near visual acuity parallel each other. Most jobs require both good far vision (without accommodation) and good near vision. Snellen charts of different kinds are also available for near vision (figures 2 and 3). The near-vision chart in figure 3 should be held 40 cm (16 inches) from the eye; in Europe, similar charts exist for a reading distance of 30 cm (the appropriate distance for reading a newspaper).

Figure 2. Example of a Snellen chart: Landolt rings (acuity in decimal values; reading distance not specified).

Figure 3. Example of a Snellen chart: Sloan letters for measuring near vision (40 cm)(acuity in decimal values and in distance equivalents).

With the widespread use of visual display units (VDUs), however, there is increasing interest in occupational health in testing operators at a longer distance (60 to 70 cm, according to Krueger 1992) in order to correct the vision of VDU operators properly.
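The difference between these viewing distances matters because the accommodation required of the eye is the reciprocal of the viewing distance in metres, expressed in dioptres. The sketch below assumes an emmetropic (or fully corrected) eye focused at infinity when relaxed, and ignores the distance between spectacle lens and eye; the function name is illustrative.

```python
def accommodation_demand_dioptres(distance_m):
    """Accommodation (dioptres) needed to focus at `distance_m` metres,
    for an eye focused at infinity when relaxed."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


for label, d in [("newspaper, 30 cm", 0.30), ("near chart, 40 cm", 0.40),
                 ("VDU, 60 cm", 0.60), ("VDU, 70 cm", 0.70)]:
    print(f"{label}: {accommodation_demand_dioptres(d):.2f} D")
```

A newspaper at 30 cm requires about 3.3 dioptres, a 40 cm near chart 2.5 dioptres and a VDU at 60 to 70 cm only about 1.4 to 1.7 dioptres, so a correction chosen for reading distance may not suit screen work.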

Vision testers and visual screening

For occupational practice, several types of visual testers are available on the market which have similar features; they are named Orthorater, Visiotest, Ergovision, Titmus Optimal C Tester, C45 Glare Tester, Mesoptometer, Nyctometer and so on.

They are small; they are independent of the lighting of the testing room, having their own internal lighting; they provide several tests, such as far and near binocular and monocular visual acuity (most of the time with unlearnable characters), but also depth perception, rough colour discrimination, muscular balance and so on. Near visual acuity can be measured, sometimes for short and intermediate distance of the test object. The most recent of these devices makes extensive use of electronics to provide automatically written scores for different tests. Moreover, these instruments can be handled by non-medical personnel after some training.

Vision testers are designed for the pre-recruitment screening of workers, or sometimes for later testing, taking into account the visual requirements of their workplace. Table 1 indicates the level of visual acuity needed for activities ranging from unskilled to highly skilled, when using one particular testing device (Fox, in Verriest and Hermans 1976).

Table 1. Visual requirements for different activities when using Titmus Optimal C Tester, with correction

| Category | Far VA, each eye (binocular) | Near VA, each eye (binocular) |
|---|---|---|
| 1: Office work | 20/30 (20/25) | 20/25 (20/20) |
| 2: Inspection and other activities in fine mechanics | 20/35 (20/30) | 20/25 (20/20) |
| 3: Operators of mobile machinery | 20/25 (20/20) | 20/35 (20/30) |
| 4: Machine tool operations | 20/30 (20/25) | 20/30 (20/25) |
| 5: Unskilled workers | 20/30 (20/25) | 20/35 (20/30) |
| 6: Foremen | 20/30 (20/25) | 20/25 (20/20) |

Source: According to Fox in Verriest and Hermans 1975.
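For screening records, the limits of Table 1 can be encoded as data and checked mechanically. The sketch below is a hypothetical helper whose names are not part of any tester's software; it compares Snellen denominators (20/x), where a smaller denominator means better acuity, and a failed check is a prompt for further examination rather than a final decision.

```python
# Minimum Snellen denominators (20/x) transcribed from Table 1, in the order
# (far each eye, far binocular, near each eye, near binocular).
REQUIREMENTS = {
    "office work": (30, 25, 25, 20),
    "inspection / fine mechanics": (35, 30, 25, 20),
    "mobile machinery operators": (25, 20, 35, 30),
    "machine tool operations": (30, 25, 30, 25),
    "unskilled workers": (30, 25, 35, 30),
    "foremen": (30, 25, 25, 20),
}


def meets_requirements(category, far_each, far_bino, near_each, near_bino):
    """True if every measured 20/x denominator is at or below the table's
    limit. Pass the worse eye's value for `far_each` and `near_each`."""
    limits = REQUIREMENTS[category]
    measured = (far_each, far_bino, near_each, near_bino)
    return all(m <= limit for m, limit in zip(measured, limits))


print(meets_requirements("office work", 25, 20, 25, 20))  # True
print(meets_requirements("mobile machinery operators", 30, 20, 20, 20))  # False
```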

Manufacturers recommend that employees be tested while wearing their corrective glasses. Fox (1965), however, stresses that such a procedure may lead to wrong results: for example, workers may be tested with glasses whose prescription is out of date, or with lenses worn out by exposure to dust or other noxious agents. It is also very often the case that people come to the testing room with the wrong glasses. Fox (1976) therefore suggests that, if “the corrected vision is not improved to 20/20 level for distance and near, referral should be made to an ophthalmologist for a proper evaluation and refraction for the current need of the employee on his job”. Other deficiencies of vision testers are referred to later in this article.

Factors influencing visual acuity

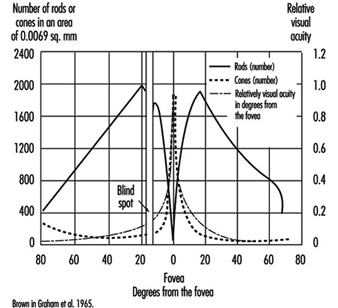

VA meets its first limitation in the structure of the retina. In daytime vision, it may exceed 10/10ths at the fovea and may rapidly decline as one moves a few degrees away from the centre of the retina. In night vision, acuity is very poor or nil at the centre but may reach one tenth at the periphery, because of the distribution of cones and rods (figure 4).

Figure 4. Density of cones and rods in the retina as compared with the relative visual acuity in the corresponding visual field.

The diameter of the pupil acts on visual performance in a complex manner. When dilated, the pupil allows more light to enter into the eye and stimulate the retina; the blur due to the diffraction of the light is minimized. A narrower pupil, however, reduces the negative effects of the aberrations of the lens mentioned above. In general, a pupil diameter of 3 to 6 mm favours clear vision.
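The light admitted by the pupil is proportional to its area, and therefore to the square of its diameter. A minimal sketch, treating the pupil as a circle and ignoring the lower efficiency of light entering near its edge (the function name is illustrative), shows that the 3 to 6 mm range mentioned above corresponds to a fourfold range in the amount of light entering the eye.

```python
import math


def pupil_area_mm2(diameter_mm):
    """Area of a circular pupil of the given diameter, in mm^2."""
    return math.pi * (diameter_mm / 2) ** 2


small, large = pupil_area_mm2(3.0), pupil_area_mm2(6.0)
print(f"3 mm: {small:.1f} mm^2, 6 mm: {large:.1f} mm^2, "
      f"ratio {large / small:.1f}")
```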

Thanks to the process of adaptation, it is possible for the human being to see as well by moonlight as by full sunshine, even though there is a difference in illumination of 1 to 10,000,000. The range of visual sensitivity is so wide that luminous intensity is usually plotted on a logarithmic scale.

On entering a dark room we are at first completely blind; then the objects around us become perceptible. As the light level is increased, we pass from rod-dominated vision to cone-dominated vision. The accompanying change in sensitivity is known as the Purkinje shift. The dark-adapted retina is mainly sensitive to low luminosity, but is characterized by the absence of colour vision and poor spatial resolution (low VA); the light-adapted retina is not very sensitive to low luminosity (objects have to be well illuminated in order to be perceived), but is characterized by a high degree of spatial and temporal resolution and by colour vision. After the desensitization induced by intense light stimulation, the eye recovers its sensitivity according to a typical progression: at first a rapid change involving cones and daylight or photopic adaptation, followed by a slower phase involving rods and night or scotopic adaptation; the intermediate zone involves dim light or mesopic adaptation.

In the work environment, night adaptation is hardly relevant except for activities in a dark room and for night driving (although the reflection on the road from headlights always brings some light). Simple daylight adaptation is the most common in industrial or office activities, provided either by natural or by artificial lighting. However, nowadays with emphasis on VDU work, many workers like to operate in dim light.

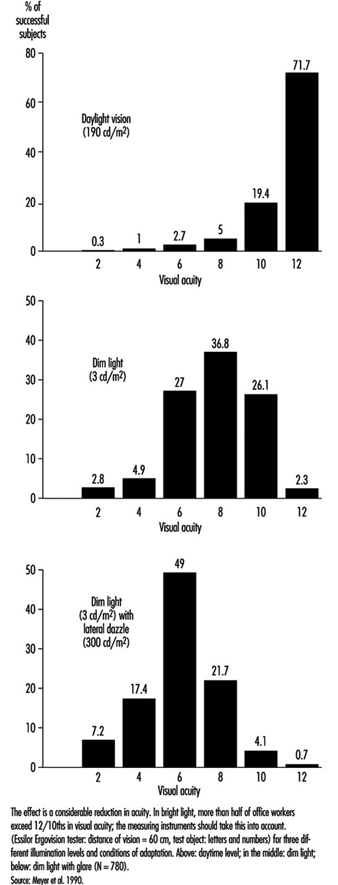

In occupational practice, the behaviour of groups of people (rather than individual evaluation) is particularly important when selecting the most appropriate workplace design. A study of 780 office workers in Geneva (Meyer et al. 1990) shows how the percentage distribution of acuity levels shifts when lighting conditions change. Once adapted to daylight, most of the tested workers (wearing their usual correction) reach a fairly high visual acuity; as soon as the surrounding illumination level is reduced, the mean VA decreases and the results also become more scattered, with some people performing very poorly; this tendency is aggravated when dim light is accompanied by a disturbing glare source (figure 5). In other words, it is very hard to predict a subject's behaviour in dim light from his or her score under optimal daylight conditions.

Figure 5. Percentage distribution of tested office workers’ visual acuity.

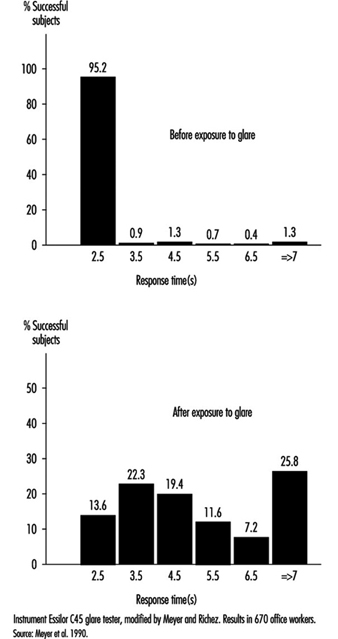

Glare. When the eyes move from a dark area to a lighted area and back again, or when the subject looks for a moment at a lamp or window (luminance ranging from 1,000 to 12,000 cd/m2), the changes in adaptation concern a limited area of the visual field (local adaptation). Recovery time after disabling glare may last several seconds, depending on the illumination level and contrast (Meyer et al. 1986) (figure 6).

Figure 6. Response time before and after exposure to glare for perceiving the gap of a Landolt ring: adaptation to dim light.

Afterimages. Local disadaptation is usually accompanied by the persistent image of a bright spot, coloured or not, which produces a veiling or masking effect (the consecutive image). Afterimages have been studied very extensively to better understand certain visual phenomena (Brown in Graham et al. 1965). After visual stimulation has ceased, the effect remains for some time; this persistence explains, for example, why continuous light may be perceived when facing a light that flickers at a high enough frequency (see below), and why we see a line of light when looking at moving cars at night. These afterimages are produced in the dark when viewing a lighted spot; they are also produced by coloured areas, which leave coloured images. This is why VDU operators may experience sharp afterimages after looking at the screen for a prolonged time and then moving their eyes towards another area of the room.

Afterimages are very complex. For example, in one experiment a blue spot appeared white during the first seconds of observation, then pink after 30 seconds, and then bright red after a minute or two. In another, an orange-red field appeared momentarily pink, then within 10 to 15 seconds passed through orange and yellow to a bright green appearance that persisted throughout the observation. When the point of fixation moves, the afterimage usually moves too (Brown in Graham et al. 1965). Such effects could be very disturbing to someone working at a VDU.

Diffuse light emitted by glare sources also reduces the object/background contrast (veiling effect) and thus visual acuity (disability glare). Ergophthalmologists also describe discomfort glare, which does not reduce visual acuity but causes an uncomfortable or even painful sensation (IESNA 1993).

The level of illumination at the workplace must be adapted to the level required by the task. If all that is required is to perceive shapes in an environment of stable luminosity, weak illumination may be adequate; but as soon as it is a question of seeing fine details that require increased acuity, or if the work involves colour discrimination, retinal illumination must be markedly increased.

Table 2 gives recommended illuminance values for the lighting design of a few workstations in different industries (IESNA 1993).

Table 2. Recommended illuminance values for the lighting design of a few workstations

| Area and task | Illuminance (lux) | Illuminance (footcandles) |
|---|---|---|
| Cleaning and pressing industry | | |
| Dry and wet cleaning and steaming | 500-1,000 | 50-100 |
| Inspection and spotting | 2,000-5,000 | 200-500 |
| Repair and alteration | 1,000-2,000 | 100-200 |
| Dairy products, fluid milk industry | | |
| Bottle storage | 200-500 | 20-50 |
| Bottle washers | 200-500 | 20-50 |
| Filling, inspection | 500-1,000 | 50-100 |
| Laboratories | 500-1,000 | 50-100 |
| Electrical equipment, manufacturing | | |
| Impregnating | 200-500 | 20-50 |
| Insulating coil winding | 500-1,000 | 50-100 |
| Electricity-generating stations | | |
| Air-conditioning equipment, air preheater | 50-100 | 5-10 |
| Auxiliaries, pumps, tanks, compressors | 100-200 | 10-20 |
| Clothing industry | | |
| Examining (perching) | 10,000-20,000 | 1,000-2,000 |
| Cutting | 2,000-5,000 | 200-500 |
| Pressing | 1,000-2,000 | 100-200 |
| Sewing | 2,000-5,000 | 200-500 |
| Piling up and marking | 500-1,000 | 50-100 |
| Sponging, decating, winding | 200-500 | 20-50 |
| Banks | | |
| General | 100-200 | 10-20 |
| Writing area | 200-500 | 20-50 |
| Tellers’ stations | 500-1,000 | 50-100 |
| Dairy farms | | |
| Haymow area | 20-50 | 2-5 |
| Washing area | 500-1,000 | 50-100 |
| Feeding area | 100-200 | 10-20 |
| Foundries | | |
| Core-making: fine | 1,000-2,000 | 100-200 |
| Core-making: medium | 500-1,000 | 50-100 |
| Moulding: medium | 1,000-2,000 | 100-200 |
| Moulding: large | 500-1,000 | 50-100 |
| Inspection: fine | 1,000-2,000 | 100-200 |
| Inspection: medium | 500-1,000 | 50-100 |

Source: IESNA 1993.
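In workplace surveys, Table 2 can serve as a simple lookup against a light-meter reading. The sketch below is hypothetical: it transcribes only four rows of Table 2 into a dictionary whose keys and function names are illustrative, and it converts with the exact factor 1 footcandle = 10.764 lux (the table itself rounds this factor to 10).

```python
# Exact conversion: 1 footcandle = 1 lumen per square foot = 10.764 lux
# (Table 2 rounds this factor to 10).
LUX_PER_FOOTCANDLE = 10.764

# Hypothetical excerpt of Table 2 (IESNA 1993): recommended ranges in lux,
# keyed by (area, task). Only a few rows are transcribed for illustration.
RECOMMENDED_LUX = {
    ("Banks", "Tellers' stations"): (500, 1_000),
    ("Clothing industry", "Sewing"): (2_000, 5_000),
    ("Dairy farms", "Haymow area"): (20, 50),
    ("Foundries", "Inspection: fine"): (1_000, 2_000),
}


def lux_to_footcandles(lux: float) -> float:
    """Convert an illuminance from lux to footcandles."""
    return lux / LUX_PER_FOOTCANDLE


def check_illuminance(area: str, task: str, measured_lux: float) -> str:
    """Compare a measured illuminance with the recommended range for a task."""
    low, high = RECOMMENDED_LUX[(area, task)]
    if measured_lux < low:
        return "below recommended range"
    if measured_lux > high:
        return "above recommended range"
    return "within recommended range"


# Example: a teller's station measured at 350 lux (about 33 footcandles).
reading = 350
print(check_illuminance("Banks", "Tellers' stations", reading),
      f"({lux_to_footcandles(reading):.0f} fc)")
```

A range check of this kind only flags the average illuminance; the contrast and luminance-distribution questions discussed below still have to be assessed separately.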

Brightness contrast and spatial distribution of luminances at the workplace. From the point of view of ergonomics, the ratio between luminances of the test object, its immediate background and the surrounding area has been widely studied, and recommendations on this subject are available for different requirements of the task (see Verriest and Hermans 1975; Grandjean 1987).

The object-background contrast is currently defined by the formula (Lf – Lo)/Lf, where Lo is the luminance of the object and Lf the luminance of the background. For a dark object on a lighter background (0 ≤ Lo ≤ Lf), it therefore varies from 0 to 1.
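The definition translates directly into a small function. This sketch assumes both luminances are given in the same unit (e.g. cd/m2) and that the object is darker than its background, the case in which the formula yields values between 0 and 1; the function name is illustrative.

```python
def object_background_contrast(l_object: float, l_background: float) -> float:
    """Contrast C = (Lf - Lo) / Lf for a dark object on a lighter background.

    l_object is Lo and l_background is Lf, both luminances in the same unit.
    """
    if l_background <= 0:
        raise ValueError("background luminance must be positive")
    if not 0 <= l_object <= l_background:
        raise ValueError("formula assumes 0 <= Lo <= Lf (dark object, light background)")
    return (l_background - l_object) / l_background


# Hypothetical luminances: ink at 10 cd/m2 on paper at 100 cd/m2.
print(object_background_contrast(10, 100))
```

With these hypothetical values the function returns 0.9, the order of contrast quoted below for black print on white paper; a perfectly black object (Lo = 0) gives 1, and an object as bright as its background gives 0.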

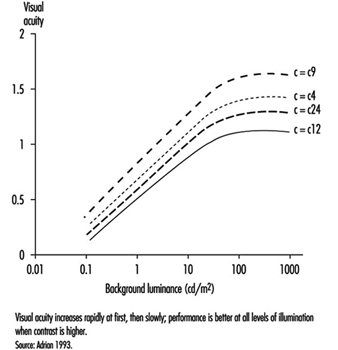

As shown in figure 7, visual acuity increases with the level of illumination (as noted above) and with object-background contrast (Adrian 1993). This effect is particularly marked in young people. A large light background with a dark object therefore provides the best efficiency. In real life, however, contrast never reaches unity: when a black letter is printed on a white sheet of paper, for example, the object-background contrast reaches only about 90%.

Figure 7. Visual acuity for a dark object perceived on a background receiving increasing illumination, for four contrast values.

In the most favourable situation, that is, positive presentation (dark letters on a light background), acuity and contrast are linked, so that visibility can be improved by acting on either factor, for example by increasing the size of the letters or their darkness, as in Fortuin’s table (in Verriest and Hermans 1975). When video display units first appeared on the market, letters or symbols were presented on the screen as light spots on a dark background. Later, screens were developed that displayed dark letters on a light background, and many studies were conducted to verify whether this presentation improved vision. Most experiments clearly showed that visual acuity is enhanced when reading dark letters on a light background; moreover, a dark screen favours reflections of glare sources.

The functional visual field is defined by the relationship between the luminance of the surfaces actually perceived by the eye at the workstation and that of the surrounding areas. Care must be taken not to create excessive luminance differences in the visual field; depending on the size of the surfaces involved, such differences cause changes in general or local adaptation that make the task uncomfortable to perform. Moreover, it is recognized that good performance requires the contrasts in the field to be arranged so that the task area is more brightly lit than its immediate surroundings, and the far areas are darker still.
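The qualitative rule above (task area brightest, immediate surroundings darker, far areas darker still) can be checked from three luminance readings. The sketch below assumes luminances in cd/m2 and uses hypothetical names; since no numerical limits are given here, it only reports the luminance ratios and whether the gradient runs in the right direction, leaving the choice of acceptable ratios to the recommendations cited earlier (Verriest and Hermans 1975; Grandjean 1987).

```python
from dataclasses import dataclass


@dataclass
class VisualField:
    """Luminances (same unit, e.g. cd/m2) of three zones of the visual field."""
    task: float       # task area
    immediate: float  # immediate surroundings of the task
    far: float        # far areas of the visual field


def assess(field: VisualField) -> dict:
    """Report luminance ratios and whether task > immediate > far holds."""
    if min(field.task, field.immediate, field.far) <= 0:
        raise ValueError("luminances must be positive")
    return {
        "task_to_immediate": field.task / field.immediate,
        "task_to_far": field.task / field.far,
        "gradient_ok": field.task > field.immediate > field.far,
    }


# Hypothetical readings: a well-arranged desk, then a screen placed
# in front of a bright window.
print(assess(VisualField(task=120, immediate=60, far=20)))
print(assess(VisualField(task=80, immediate=300, far=50)))
```

In the second case the immediate surroundings are brighter than the task itself, the kind of arrangement that, as described above, forces repeated changes in adaptation.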

Time of presentation of the object. The capacity to detect an object depends directly on the quantity of light entering the eye, which is linked to the luminous intensity of the object, its surface qualities and the time during which it appears (as studied in tests of tachistoscopic presentation). Acuity is reduced when the duration of presentation is less than 100 to 500 ms.

Movements of the eye or of the target. Performance is lost particularly during jerky eye movements; nevertheless, total stability of the image is not required to attain maximum resolution. However, vibrations such as those of construction-site machines or tractors have been shown to impair visual acuity.

Diplopia. Visual acuity is higher in binocular than in monocular vision. Binocular vision requires that both optical axes meet at the object being looked at, so that its image falls on corresponding areas of the retina in each eye. This is made possible by the activity of the external ocular muscles. If the coordination of these muscles fails, as in excessive visual fatigue, more or less transitory double images may appear and cause annoying sensations (Grandjean 1987).

In short, the discriminating power of the eye depends on the type of object to be perceived and the luminous environment in which it is measured. In the medical consulting room, conditions are optimal: high object-background contrast, direct daylight adaptation, characters with sharp edges, presentation of the object without a time limit, and a certain redundancy of signals (e.g., several letters of the same size on a Snellen chart). Moreover, visual acuity determined for diagnostic purposes is a single, maximal measurement made in the absence of accommodative fatigue. Clinical acuity is thus a poor reference for the visual performance attained on the job. What is more, good clinical acuity does not necessarily mean the absence of discomfort at work, where conditions of individual visual comfort are rarely attained. At most workplaces, as Krueger (1992) stresses, the objects to be perceived are blurred and of low contrast, and background luminances are unevenly distributed, with many glare sources producing veiling and local adaptation effects, and so on. According to our own calculations, clinical results have little predictive value for the amount and nature of the visual fatigue encountered, for example, in VDU work. A more realistic laboratory set-up, in which measurement conditions were closer to task requirements, did somewhat better (Rey and Bousquet 1990; Meyer et al. 1990).

Krueger (1992) rightly argues that ophthalmological examination is not really appropriate in occupational health and ergonomics, that new testing procedures should be developed or extended, and that existing laboratory set-ups should be made available to occupational practitioners.

Relief Vision, Stereoscopic Vision

Binocular vision allows a single image to be obtained by synthesizing the images received by the two eyes. The correspondence between these images gives rise to the active cooperation that constitutes the essential mechanism of the sense of depth and relief. Binocular vision also enlarges the visual field, improves visual performance generally, relieves fatigue and increases resistance to glare and dazzle.

When binocular fusion is insufficient, ocular fatigue may appear earlier.

Although less efficient than binocular vision in judging the relief of relatively near objects, monocular vision still allows a sensation of relief and a perception of depth by means of cues that do not require binocular disparity. We know that the real size of objects does not change, which is why apparent size plays a part in our judgement of distance: small retinal images give the impression of distant objects, and vice versa (apparent size). Near objects tend to hide more distant ones (interposition). Of two objects, the brighter one, or the one with the more saturated colour, seems nearer. The surroundings also play a part: more distant objects are lost in haze. Two parallel lines seem to meet at infinity (the perspective effect). Finally, if two targets are moving at the same speed, the one whose retinal image moves more slowly will appear farther from the eye.
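Two of these monocular cues, apparent size and the speed of retinal displacement, follow from simple geometry. The sketch below computes the visual angle subtended by an object and the angular speed of a target crossing the line of sight. It assumes straight-line optics and a target moving perpendicular to the line of sight, evaluated at the instant it passes straight ahead; the function names and example values are illustrative.

```python
import math


def visual_angle_deg(size_m: float, distance_m: float) -> float:
    """Visual angle subtended by an object of a given size at a given distance."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))


def angular_speed_deg_s(speed_m_s: float, distance_m: float) -> float:
    """Angular speed of a target moving perpendicular to the line of sight,
    at the instant it passes straight ahead of the eye (omega = v / d)."""
    return math.degrees(speed_m_s / distance_m)


# Apparent size: the same 1.8 m tall person at 10 m and at 20 m.
for d in (10, 20):
    print(f"1.8 m at {d} m subtends {visual_angle_deg(1.8, d):.1f} degrees")

# Retinal displacement: two targets both moving at 10 m/s.
for d in (10, 50):
    print(f"10 m/s at {d} m sweeps {angular_speed_deg_s(10, d):.1f} degrees per second")
```

Doubling the viewing distance roughly halves the visual angle, and a target moving at 10 m/s sweeps across the retina five times more slowly at 50 m than at 10 m, which is consistent with its appearing farther away.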

In fact, monocular vision is not a major obstacle in most work situations. The subject needs to get used to the narrower visual field and also to the rather exceptional possibility that the image of the object may fall on the blind spot. (In binocular vision, the same image never falls on the blind spot of both eyes at the same time.) It should also be noted that good binocular vision is not necessarily accompanied by relief (stereoscopic) vision, since this also depends on complex nervous system processes.

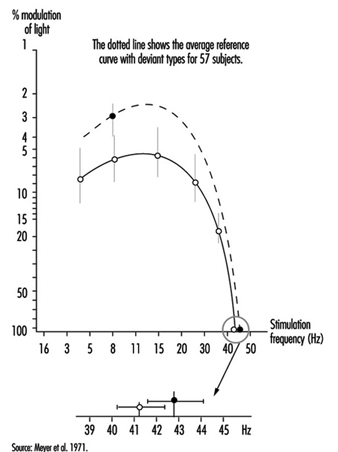

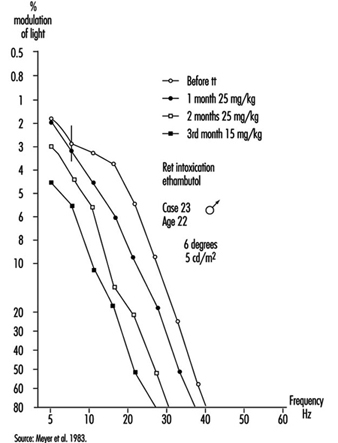

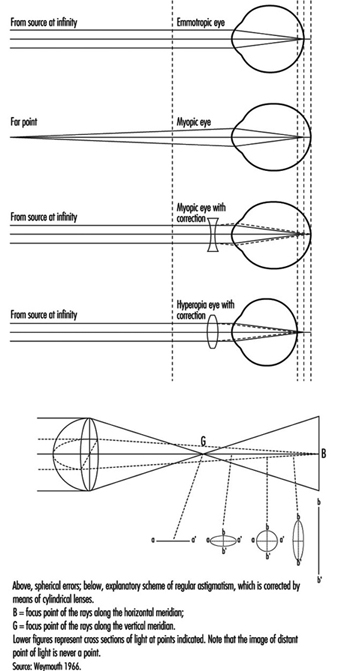

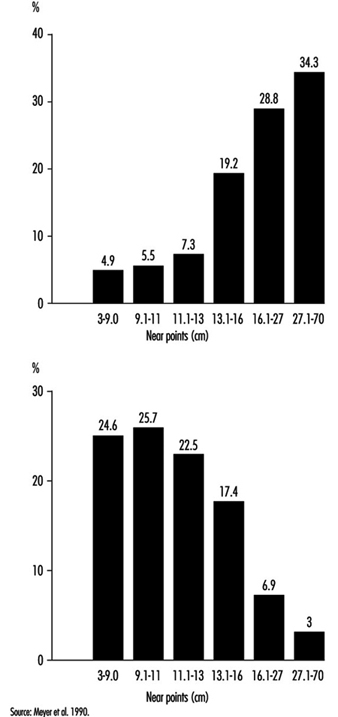

For all these reasons, regulations requiring stereoscopic vision at work should be abandoned and replaced by a thorough examination of each individual by an eye doctor. Such regulations or recommendations nevertheless exist, and stereoscopic vision is supposed to be necessary for tasks such as crane driving, jewellery work and cutting-out work. However, we should keep in mind that new technologies may profoundly modify the content of a task; for example, modern computerized machine tools are probably less demanding in stereoscopic vision than previously believed.