Validity Issues in Study Design

The Need for Validity

Epidemiology aims at providing an understanding of the disease experience in populations. In particular, it can be used to obtain insight into the occupational causes of ill health. This knowledge comes from studies conducted on groups of people having a disease by comparing them to people without that disease. Another approach is to examine what diseases people who work in certain jobs with particular exposures acquire and to compare these disease patterns to those of people not similarly exposed. These studies provide estimates of risk of disease for specific exposures. For information from such studies to be used for establishing prevention programmes, for the recognition of occupational diseases, and for those workers affected by exposures to be appropriately compensated, these studies must be valid.

Validity can be defined as the ability of a study to reflect the true state of affairs. A valid study is therefore one which measures correctly the association (either positive, negative or absent) between an exposure and a disease. It describes the direction and magnitude of a true risk. Two types of validity are distinguished: internal and external validity. Internal validity is a study’s ability to reflect what really happened among the study subjects; external validity reflects what could occur in the population.

Validity relates to the truthfulness of a measurement. Validity must be distinguished from precision of the measurement, which is a function of the size of the study and the efficiency of the study design.

Internal Validity

A study is said to be internally valid when it is free from biases and therefore truly reflects the association between exposure and disease which exists among the study participants. An observed risk of disease in association with an exposure may indeed result from a real association and therefore be valid, but it may also reflect the influence of biases. A bias will give a distorted image of reality.

Three major types of biases, also called systematic errors, are usually distinguished:

- selection bias

- information or observation bias

- confounding

They will be presented briefly below, using examples from the occupational health setting.

Selection bias

Selection bias will occur when entry into the study is influenced by knowledge of the exposure status of the potential study participant. This problem is therefore encountered only when the disease has already occurred before the person enters the study. Typically, in the epidemiological setting, this will happen in case-control studies or in retrospective cohort studies. In such studies, a person will be more likely to be considered a case if it is known that he or she has been exposed. Three sets of circumstances may lead to such an event, which will also depend on the severity of the disease.

Self-selection bias

This can occur when people who know they have been exposed to known or believed harmful products in the past and who are convinced their disease is the result of the exposure will consult a physician for symptoms which other people, not so exposed, might have ignored. This is particularly likely to happen for diseases which have few noticeable symptoms. An example may be early pregnancy loss or spontaneous abortion among female nurses handling drugs used for cancer treatment. These women are more aware than most of reproductive physiology and, by being concerned about their ability to have children, may be more likely to recognize or label as a spontaneous abortion what other women would only consider as a delay in the onset of menstruation. Another example from a retrospective cohort study, cited by Rothman (1986), involves a Centers for Disease Control study of leukaemia among troops who had been present during a US atomic test in Nevada. Of the troops present on the test site, 76% were traced and constituted the cohort. Of these, 82% were found by the investigators, but an additional 18% contacted the investigators themselves after hearing publicity about the study. Four cases of leukaemia were present among the 82% traced by CDC and four cases were present among the self-referred 18%. This strongly suggests that the investigators’ ability to identify exposed persons was linked to leukaemia risk.
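The arithmetic behind this inference can be sketched in a few lines. This is only an illustration: the size of the cohort is not given here, so the snippet works with cohort shares rather than head counts, and the variable names are invented for the example.

```python
# Shares of the traced cohort and leukaemia cases found in each part
# (figures quoted in the text; the cohort size itself is not needed).
share_traced_by_cdc = 0.82
share_self_referred = 0.18
cases_traced_by_cdc = 4
cases_self_referred = 4

# Cases per unit of cohort share are proportional to the case frequency
# in each subgroup, so their ratio does not depend on the cohort size.
relative_case_frequency = (cases_self_referred / share_self_referred) / (
    cases_traced_by_cdc / share_traced_by_cdc
)

print(f"Case frequency, self-referred vs traced: {relative_case_frequency:.1f}-fold")
```

With equal case counts in two subgroups of such unequal size, leukaemia was roughly four to five times as frequent among the self-referred troops.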

Diagnostic bias

This will occur when the doctors are more likely to diagnose a given disease once they know to what the patient has been previously exposed. For example, when most paints were lead-based, a symptom of disease of the peripheral nerves called peripheral neuritis with paralysis was also known as painters’ “wrist drop”. Knowing the occupation of the patient made it easier to diagnose the disease even in its early stages, whereas the identification of the causal agent would be much more difficult in research participants not known to be occupationally exposed to lead.

Bias resulting from refusal to participate in a study

When people, either healthy or sick, are asked to participate in a study, several factors play a role in determining whether or not they will agree. Willingness to answer variably lengthy questionnaires, which at times inquire about sensitive issues, and even more so to give blood or other biological samples, may be determined by the degree of self-interest held by the person. Someone who is aware of past potential exposure may be ready to comply with this inquiry in the hope that it will help to find the cause of the disease, whereas someone who considers that they have not been exposed to anything dangerous, or who is not interested in knowing, may decline the invitation to participate in the study. This can lead to a selection of those people who will finally be the study participants as compared to all those who might have been.

Information bias

This is also called observation bias and concerns disease outcome in follow-up studies and exposure assessment in case-control studies.

Differential outcome assessment in prospective follow-up (cohort) studies

Two groups are defined at the start of the study: an exposed group and an unexposed group. Problems of diagnostic bias will arise if the search for cases differs between these two groups. For example, consider a cohort of people exposed to an accidental release of dioxin in a given industry. For the highly exposed group, an active follow-up system is set up with medical examinations and biological monitoring at regular intervals, whereas the rest of the working population receives only routine care. It is highly likely that more disease will be identified in the group under close surveillance, which would lead to a potential over-estimation of risk.

Differential losses in retrospective cohort studies

The reverse mechanism to that described in the preceding paragraph may occur in retrospective cohort studies. In these studies, the usual way of proceeding is to start with the files of all the people who have been employed in a given industry in the past, and to assess disease or mortality subsequent to employment. Unfortunately, in almost all studies files are incomplete, and the fact that a person is missing may be related either to exposure status or to disease status or to both. For example, in a recent study conducted in the chemical industry in workers exposed to aromatic amines, eight tumours were found in a group of 777 workers who had undergone cytological screening for urinary tumours. Altogether, only 34 records were found missing, corresponding to a 4.4% loss from the exposure assessment file, but for bladder cancer cases, exposure data were missing for two cases out of eight, or 25%. This shows that the files of people who became cases were more likely to become lost than the files of other workers. This may occur because of more frequent job changes within the company (which may be linked to exposure effects), resignation, dismissal or mere chance.
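The differential loss in this example can be checked with a short calculation. The sketch below uses only the figures quoted above; the variable names are illustrative.

```python
# Figures quoted in the text for the aromatic amines study.
workers_screened = 777
records_missing = 34
bladder_cancer_cases = 8
cases_missing_exposure = 2

overall_loss = records_missing / workers_screened                 # about 4.4%
loss_among_cases = cases_missing_exposure / bladder_cancer_cases  # 25%

# How much more often exposure data were lost for cases than overall.
loss_ratio = loss_among_cases / overall_loss

print(f"Overall loss:     {overall_loss:.1%}")
print(f"Loss among cases: {loss_among_cases:.1%}")
print(f"Ratio:            {loss_ratio:.1f}")
```

A ratio well above 1 indicates that loss of records was related to disease status, which is the condition under which missing files can bias the result.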

Differential assessment of exposure in case-control studies

In case-control studies, the disease has already occurred at the start of the study, and information will be sought on exposures in the past. Bias may result either from the interviewer’s or study participant’s attitude to the investigation. Information is usually collected by trained interviewers who may or may not be aware of the hypothesis underlying the research. For example, in a population-based case-control study of bladder cancer conducted in a highly industrialized region, study staff may well be aware of the fact that certain chemicals, such as aromatic amines, are risk factors for bladder cancer. If they also know who has developed the disease and who has not, they may be likely to conduct more in-depth interviews with the participants who have bladder cancer than with the controls. They may insist on more detailed information of past occupations, searching systematically for exposure to aromatic amines, whereas for controls they may record occupations in a more routine way. The resulting bias is known as exposure suspicion bias.

The participants themselves may also be responsible for such bias. This is called recall bias to distinguish it from interviewer bias. Both have exposure suspicion as the mechanism for the bias. Persons who are sick may suspect an occupational origin to their disease and therefore will try to remember as accurately as possible all the dangerous agents to which they may have been exposed. In the case of handling undefined products, they may be inclined to recall the names of precise chemicals, particularly if a list of suspected products is made available to them. By contrast, controls may be less likely to go through the same thought process.

Confounding

Confounding exists when the association observed between exposure and disease is in part the result of a mixing of the effect of the exposure under study and another factor. Let us say, for example, that we are finding an increased risk of lung cancer among welders. We are tempted to conclude immediately that there is a causal association between exposure to welding fumes and lung cancer. However, we also know that smoking is by far the main risk factor for lung cancer. Therefore, if information is available, we begin checking the smoking status of welders and other study participants. We may find that welders are more likely to smoke than non-welders. In that situation, smoking is known to be associated with lung cancer and, at the same time, in our study smoking is also found to be associated with being a welder. In epidemiological terms, this means that smoking, linked both to lung cancer and to welding, is confounding the association between welding and lung cancer.
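A small numerical sketch may make the mechanism concrete. The counts below are entirely hypothetical and were chosen so that welding has no effect on lung cancer risk within either smoking group, while welders smoke more often than non-welders.

```python
# Hypothetical counts: (lung cancer cases, persons) by smoking and welding status.
strata = {
    "smokers": {"welders": (40, 800), "non_welders": (20, 400)},
    "non_smokers": {"welders": (2, 200), "non_welders": (6, 600)},
}


def risk_ratio(exposed, unexposed):
    """Ratio of cumulative incidences; each argument is (cases, persons)."""
    return (exposed[0] / exposed[1]) / (unexposed[0] / unexposed[1])


def pooled(group):
    """Add up cases and persons for one exposure group over all strata."""
    cases = sum(stratum[group][0] for stratum in strata.values())
    persons = sum(stratum[group][1] for stratum in strata.values())
    return cases, persons


# Crude comparison, ignoring smoking.
crude_rr = risk_ratio(pooled("welders"), pooled("non_welders"))

# The same comparison made separately within each smoking group.
stratum_rr = {
    name: risk_ratio(stratum["welders"], stratum["non_welders"])
    for name, stratum in strata.items()
}

print(f"Crude risk ratio: {crude_rr:.2f}")
for name, rr in stratum_rr.items():
    print(f"Risk ratio among {name}: {rr:.2f}")
```

In this constructed example the crude risk ratio is about 1.6, although the risk ratio is 1.0 among smokers and among non-smokers alike; the apparent excess among welders is produced entirely by their smoking habits.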

Interaction or effect modification

In contrast to all the issues listed above, namely selection, information and confounding, which are biases, interaction is not a bias due to problems in study design or analysis, but reflects reality and its complexity. An example of this phenomenon is the following: exposure to radon is a risk factor for lung cancer, as is smoking. In addition, smoking and radon exposure have different effects on lung cancer risk depending on whether they act together or in isolation. Most of the occupational studies on this topic have been conducted among underground miners and at times have provided conflicting results. Overall, there seem to be arguments in favour of an interaction of smoking and radon exposure in producing lung cancer. This means that lung cancer risk is increased by exposure to radon, even in non-smokers, but that the size of the risk increase from radon is much greater among smokers than among non-smokers. In epidemiological terms, we say that the effect is multiplicative. In contrast to confounding, described above, interaction needs to be carefully analysed and described in the analysis rather than simply controlled, as it reflects what is happening at the biological level and is not merely a consequence of poor study design. Its explanation leads to a more valid interpretation of the findings from a study.
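The difference between a joint effect that is merely additive and one that is multiplicative can be sketched numerically. The relative risks below are hypothetical round numbers chosen for illustration, not estimates from the miner studies.

```python
# Hypothetical relative risks, each compared with unexposed non-smokers.
rr_radon_only = 2.0
rr_smoking_only = 10.0

# Expected joint relative risk under two simple models of joint action.
rr_joint_additive = 1 + (rr_radon_only - 1) + (rr_smoking_only - 1)
rr_joint_multiplicative = rr_radon_only * rr_smoking_only

# Excess relative risk contributed by radon within each smoking group
# when the joint effect is multiplicative.
radon_excess_non_smokers = rr_radon_only - 1
radon_excess_smokers = rr_joint_multiplicative - rr_smoking_only

print(f"Joint RR if additive:       {rr_joint_additive:.0f}")
print(f"Joint RR if multiplicative: {rr_joint_multiplicative:.0f}")
print(f"Radon excess, non-smokers:  {radon_excess_non_smokers:.0f}")
print(f"Radon excess, smokers:      {radon_excess_smokers:.0f}")
```

Under the multiplicative model the relative effect of radon is the same in both groups (a doubling), but the increase it produces on the scale of the baseline risk is ten times larger among smokers.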

External Validity

This issue can be addressed only after ensuring that internal validity is secured. If we are convinced that the results observed in the study reflect associations which are real, we can ask ourselves whether or not we can extrapolate these results to the larger population from which the study participants themselves were drawn, or even to other populations which are identical or at least very similar. The most common question is whether results obtained for men also apply to women. For years, studies and, in particular, occupational epidemiological investigations have been conducted exclusively among men. Studies among chemists carried out in the 1960s and 1970s in the United States, United Kingdom and Sweden all found increased risks of specific cancers—namely leukaemia, lymphoma and pancreatic cancer. Based on what we knew of the effects of exposure to solvents and some other chemicals, we could already have deduced at the time that laboratory work also entailed carcinogenic risk for women. This in fact was shown to be the case when the first study among women chemists was finally published in the mid-1980s, which found results similar to those among men. It is worth noting that other excess cancers found were tumours of the breast and ovary, traditionally considered as being related only to endogenous factors or reproduction, but for which newly suspected environmental factors such as pesticides may play a role. Much more work needs to be done on occupational determinants of female cancers.

Strategies for a Valid Study

A perfectly valid study can never exist, but it is incumbent upon the researcher to try to avoid, or at least to minimize, as many biases as possible. This can often best be done at the study design stage, but can also be carried out during analysis.

Study design

Selection and information bias can be avoided only through the careful design of an epidemiological study and the scrupulous implementation of all the ensuing day-to-day guidelines, including meticulous attention to quality assurance, for the conduct of the study in field conditions. Confounding may be dealt with either at the design or analysis stage.

Selection

Criteria for considering a participant as a case must be explicitly defined. One cannot, or at least should not, attempt to study ill-defined clinical conditions. A way of minimizing the impact that knowledge of the exposure may have on disease assessment is to include only severe cases which would have been diagnosed irrespective of any information on the history of the patient. In the field of cancer, studies often will be limited to cases with histological proof of the disease to avoid the inclusion of borderline lesions. This also will mean that groups under study are well defined. For example, it is well-known in cancer epidemiology that cancers of different histological types within a given organ may have dissimilar risk factors. If the number of cases is sufficient, it is better to separate adenocarcinoma of the lung from squamous cell carcinoma of the lung. Whatever the final criteria for entry into the study, they should always be clearly defined and described. For example, the exact code of the disease should be indicated using the International Classification of Diseases (ICD) and also, for cancer, the International Classification of Diseases-Oncology (ICD-O).

Efforts should be made once the criteria are specified to maximize participation in the study. The decision to refuse to participate is hardly ever made at random and therefore leads to bias. Studies should first of all be presented to the clinicians who are seeing the patients. Their approval is needed to approach patients, and therefore they will have to be convinced to support the study. One argument that is often persuasive is that the study is in the interest of the public health. However, at this stage it is better not to discuss the exact hypothesis being evaluated in order to avoid unduly influencing the clinicians involved. Physicians should not be asked to take on supplementary duties; it is easier to convince health personnel to lend their support to a study if means are provided by the study investigators to carry out any additional tasks, over and above routine care, necessitated by the study. Interviewers and data abstractors ought to be unaware of the disease status of their patients.

Similar attention should be paid to the information provided to participants. The goal of the study must be described in broad, neutral terms, but must also be convincing and persuasive. It is important that issues of confidentiality and interest for public health be fully understood while avoiding medical jargon. In most settings, use of financial or other incentives is not considered appropriate, although compensation should be provided for any expense a participant may incur. Last, but not least, the general population should be sufficiently scientifically literate to understand the importance of such research. Both the benefits and the risks of participation must be explained to each prospective participant where they need to complete questionnaires and/or to provide biological samples for storage and/or analysis. No coercion should be applied in obtaining prior and fully informed consent. Where studies are exclusively records-based, prior approval of the agencies responsible for ensuring the confidentiality of such records must be secured. In these instances, individual participant consent usually can be waived. Instead, approval of union and government officers will suffice. Epidemiological investigations are not a threat to an individual’s private life, but are a potential aid to improve the health of the population. The approval of an institutional review board (or ethics review committee) will be needed prior to the conduct of a study, and much of what is stated above will be expected by them for their review.

Information

In prospective follow-up studies, means for assessment of the disease or mortality status must be identical for exposed and non-exposed participants. In particular, different sources should not be used, such as only checking in a central mortality register for non-exposed participants and using intensive active surveillance for exposed participants. Similarly, the cause of death must be obtained in strictly comparable ways. This means that if a system is used to gain access to official documents for the unexposed population, which is often the general population, one should never plan to get even more precise information through medical records or interviews on the participants themselves or on their families for the exposed subgroup.

In retrospective cohort studies, efforts should be made to determine how closely the population under study corresponds to the population of interest. One should guard against potential differential losses in exposed and non-exposed groups by using various sources concerning the composition of the population. For example, it may be useful to compare payroll lists with union membership lists or other professional listings. Discrepancies must be reconciled and the protocol adopted for the study must be closely followed.

In case-control studies, other options exist to avoid biases. Interviewers, study staff and study participants need not be aware of the precise hypothesis under study. If they do not know the association being tested, they are less likely to try to provide the expected answer. Keeping study personnel in the dark as to the research hypothesis is in fact often very impractical. The interviewer will almost always know the exposures of greatest potential interest as well as who is a case and who is a control. We therefore have to rely on their honesty and also on their training in basic research methodology, which should be a part of their professional background; objectivity is the hallmark at all stages in science.

It is easier not to inform the study participants of the exact object of the research. Good, basic explanations on the need to collect data in order to have a better understanding of health and disease are usually sufficient and will satisfy the needs of ethics review.

Confounding

Confounding is the only bias which can be dealt with either at the study design stage or, provided adequate information is available, at the analysis stage. If, for example, age is considered to be a potential confounder of the association of interest because age is associated with the risk of disease (i.e., cancer becomes more frequent in older age) and also with exposure (conditions of exposure vary with age or with factors related to age such as qualification, job position and duration of employment), several solutions exist. The simplest is to limit the study to a specified age range, for example, by enrolling only Caucasian men aged 40 to 50. This will provide elements for a simple analysis, but will also have the drawback of limiting the application of the results to a single sex/age/racial group. Another solution is matching on age. This means that for each case, a referent of the same age is needed. This is an attractive idea, but one has to keep in mind the possible difficulty of fulfilling this requirement as the number of matching factors increases. In addition, once a factor has been matched on, it becomes impossible to evaluate its role in the occurrence of disease. The last solution is to have sufficient information on potential confounders in the study database in order to control for them in the analysis. This can be done either through a simple stratified analysis, or with more sophisticated tools such as multivariate analysis. However, it should be remembered that analysis will never be able to compensate for a poorly designed or conducted study.
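As an illustration of a simple stratified analysis, the sketch below computes a Mantel-Haenszel summary risk ratio across two age strata. The Mantel-Haenszel estimator is one common choice and is used here as an assumption; the counts and age bands are hypothetical.

```python
# Hypothetical cohort counts by age stratum:
# (exposed cases, exposed persons, unexposed cases, unexposed persons)
strata = {
    "40-49": (10, 1000, 10, 2000),
    "50-59": (60, 2000, 15, 1000),
}


def crude_risk_ratio(strata):
    """Risk ratio obtained by pooling all strata, ignoring age."""
    a = sum(s[0] for s in strata.values())
    n1 = sum(s[1] for s in strata.values())
    b = sum(s[2] for s in strata.values())
    n0 = sum(s[3] for s in strata.values())
    return (a / n1) / (b / n0)


def mantel_haenszel_risk_ratio(strata):
    """Age-adjusted summary risk ratio (Mantel-Haenszel weights)."""
    numerator = 0.0
    denominator = 0.0
    for a, n1, b, n0 in strata.values():
        n = n1 + n0
        numerator += a * n0 / n
        denominator += b * n1 / n
    return numerator / denominator


crude_rr = crude_risk_ratio(strata)
adjusted_rr = mantel_haenszel_risk_ratio(strata)

print(f"Crude risk ratio:        {crude_rr:.2f}")
print(f"Age-adjusted risk ratio: {adjusted_rr:.2f}")
```

In these invented data the risk ratio is 2.0 within each age band, but the exposed are older on average, so the crude estimate (2.8) overstates the association; the stratified summary recovers 2.0.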

Conclusion

The potential for biases to occur in epidemiological research is long established. This was not too much of a concern when the associations being studied were strong (as is the case for smoking and lung cancer) and therefore some inaccuracy did not cause too severe a problem. However, now that the time has come to evaluate weaker risk factors, the need for better tools becomes paramount. This includes the need for excellent study designs and the possibility of combining the advantages of various traditional designs such as the case-control or cohort studies with more innovative approaches such as case-control studies nested within a cohort. Also, the use of biomarkers may provide the means of obtaining more accurate assessments of current and possibly past exposures, as well as for the early stages of disease.

Measuring Effects of Exposures

Epidemiology involves measuring the occurrence of disease and quantifying associations between diseases and exposures.

Measures of Disease Occurrence

Disease occurrence can be measured by frequencies (counts) but is better described by rates, which are composed of three elements: the number of people affected (numerator), the number of people in the source or base population (i.e., the population at risk) from which the affected persons come, and the time period covered. The denominator of the rate is the total person-time experienced by the source population. Rates allow more informative comparisons between populations of different sizes than counts alone. Risk, the probability of an individual developing disease within a specified time period, is a proportion, ranging from 0 to 1, and is not a rate per se. Attack rate, the proportion of people in a population who are affected within a specified time period, is technically a measure of risk, not a rate.

Disease-specific morbidity includes incidence, which refers to the number of persons who are newly diagnosed with the disease of interest. Prevalence refers to the number of existing cases. Mortality refers to the number of persons who die.

Incidence is defined as the number of newly diagnosed cases within a specified time period, whereas the incidence rate is this number divided by the total person-time experienced by the source population (table 1). For cancer, rates are usually expressed as annual rates per 100,000 people. Rates for other more common diseases may be expressed per a smaller number of people. For example, birth defect rates are usually expressed per 1,000 live births. Cumulative incidence, the proportion of people who become cases within a specified time period, is a measure of average risk for a population.

Table 1. Measures of disease occurrence: Hypothetical population observed for a five-year period

| Measure | Value |
|---|---|
| Newly diagnosed cases | 10 |
| Previously diagnosed living cases | 12 |
| Deaths, all causes* | 5 |
| Deaths, disease of interest | 3 |
| Persons in population | 100 |
| Years observed | 5 |
| Incidence | 10 persons |
| Annual incidence rate | 10 / (100 × 5) = 0.02 per person-year (2,000 per 100,000) |
| Point prevalence (at end of year 5) | (10 + 12 - 3) = 19 persons |
| Period prevalence (five-year period) | (10 + 12) = 22 persons |
| Annual death rate | 5 / (100 × 5) = 0.01 per person-year (1,000 per 100,000) |
| Annual mortality rate | 3 / (100 × 5) = 0.006 per person-year (600 per 100,000) |

*To simplify the calculations, this example assumes that all deaths occurred at the end of the five-year period so that all 100 persons in the population were alive for the full five years.
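The measures in table 1 follow directly from the definitions given in the text. The sketch below recomputes them under the simplifying assumption stated in the footnote (500 person-years of observation); the function and variable names are illustrative.

```python
# Figures from table 1 (hypothetical population followed for five years).
new_cases = 10
previous_living_cases = 12
deaths_all_causes = 5
deaths_from_disease = 3
persons = 100
years = 5

# Footnote assumption: every person contributes the full five years.
person_years = persons * years


def per_100k(events, person_time):
    """Annual rate expressed per 100,000 person-years."""
    return events * 100_000 / person_time


annual_incidence_rate = per_100k(new_cases, person_years)
annual_death_rate = per_100k(deaths_all_causes, person_years)
annual_mortality_rate = per_100k(deaths_from_disease, person_years)

# Prevalence is a count of existing cases, not a rate.
point_prevalence = new_cases + previous_living_cases - deaths_from_disease
period_prevalence = new_cases + previous_living_cases

print(f"Annual incidence rate: {annual_incidence_rate:.0f} per 100,000")
print(f"Annual death rate:     {annual_death_rate:.0f} per 100,000")
print(f"Annual mortality rate: {annual_mortality_rate:.0f} per 100,000")
print(f"Point prevalence:      {point_prevalence} persons")
print(f"Period prevalence:     {period_prevalence} persons")
```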

Prevalence includes point prevalence, the number of cases of disease at a point in time, and period prevalence, the total number of cases of a disease known to have existed at some time during a specified period.

Mortality, which concerns deaths rather than newly diagnosed cases of disease, reflects factors that cause disease as well as factors related to the quality of medical care, such as screening, access to medical care, and availability of effective treatments. Consequently, hypothesis-generating efforts and aetiological research may be more informative and easier to interpret when based on incidence rather than on mortality data. However, mortality data are often more readily available on large populations than incidence data.

The term death rate is generally accepted to mean the rate for deaths from all causes combined, whereas mortality rate is the rate of death from one specific cause. For a given disease, the case-fatality rate (technically a proportion, not a rate) is the number of persons dying from the disease during a specified time period divided by the number of persons with the disease. The complement of the case-fatality rate is the survival rate. The five-year survival rate is a common benchmark for chronic diseases such as cancer.

The occurrence of a disease may vary across subgroups of the population or over time. A disease measure for an entire population, without consideration of any subgroups, is called a crude rate. For example, an incidence rate for all age groups combined is a crude rate. The rates for the individual age groups are the age-specific rates. To compare two or more populations with different age distributions, age-adjusted (or age-standardized) rates should be calculated for each population by multiplying each age-specific rate by the per cent of the standard population in that age group (the standard may be, for example, one of the populations under study or the 1970 US population), then summing over all age groups to produce an overall age-adjusted rate. Rates can be adjusted for factors other than age, such as race, gender or smoking status, if the category-specific rates are known.
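The age-adjustment procedure described above can be sketched as follows. All numbers are hypothetical: the two populations are given identical age-specific rates but different age structures, so that any difference in their crude rates is due to age alone.

```python
# Hypothetical age-specific rates per 100,000 person-years.
rates_a = {"under 40": 10.0, "40-59": 50.0, "60 and over": 200.0}
rates_b = dict(rates_a)  # same age-specific rates in population B

# Hypothetical age distributions (proportions summing to 1).
age_structure_a = {"under 40": 0.6, "40-59": 0.3, "60 and over": 0.1}
age_structure_b = {"under 40": 0.3, "40-59": 0.3, "60 and over": 0.4}
standard = {"under 40": 0.5, "40-59": 0.3, "60 and over": 0.2}


def weighted_rate(rates, weights):
    """Sum of age-specific rates weighted by an age distribution."""
    return sum(rates[group] * weights[group] for group in rates)


# Crude rates weight each population's rates by its own age structure.
crude_a = weighted_rate(rates_a, age_structure_a)
crude_b = weighted_rate(rates_b, age_structure_b)

# Adjusted rates weight both populations by the same standard.
adjusted_a = weighted_rate(rates_a, standard)
adjusted_b = weighted_rate(rates_b, standard)

print(f"Crude rates:    A = {crude_a:.0f}, B = {crude_b:.0f} per 100,000")
print(f"Adjusted rates: A = {adjusted_a:.0f}, B = {adjusted_b:.0f} per 100,000")
```

In this constructed example the crude rate of the older population B is more than twice that of population A, yet the age-adjusted rates are identical, showing that the crude difference reflects age structure rather than disease risk.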

Surveillance and evaluation of descriptive data can provide clues to disease aetiology, identify high-risk subgroups that may be suitable for intervention or screening programmes, and provide data on the effectiveness of such programmes. Sources of information that have been used for surveillance activities include death certificates, medical records, cancer registries, other disease registries (e.g., birth defects registries, end-stage renal disease registries), occupational exposure registries, health or disability insurance records and workmen’s compensation records.

Measures of Association

Epidemiology attempts to identify and quantify factors that influence disease. In the simplest approach, the occurrence of disease among persons exposed to a suspect factor is compared to the occurrence among persons unexposed. The magnitude of an association between exposure and disease can be expressed in either absolute or relative terms. (See also "Case Study: Measures").

Absolute effects are measured by rate differences and risk differences (table 2). A rate difference is one rate minus a second rate. For example, if the incidence rate of leukaemia among workers exposed to benzene is 72 per 100,000 person-years and the rate among non-exposed workers is 12 per 100,000 person-years, then the rate difference is 60 per 100,000 person-years. A risk difference is a difference in risks or cumulative incidence and can range from -1 to 1.

Table 2. Measures of association for a cohort study

| | Cases | Person-years at risk | Rate per 100,000 |
|---|---|---|---|
| Exposed | 100 | 20,000 | 500 |
| Unexposed | 200 | 80,000 | 250 |
| Total | 300 | 100,000 | 300 |

Rate difference (RD) = 500/100,000 - 250/100,000 = 250/100,000 per year (146.06/100,000 to 353.94/100,000)*

Rate ratio (or relative risk) (RR) = 500/250 = 2.0

Attributable risk in the exposed (ARe) = 100/20,000 - 200/80,000 = 250/100,000 per year

Attributable risk per cent in the exposed (ARe%) = (RR - 1)/RR × 100 = (2.0 - 1)/2.0 × 100 = 50%

Population attributable risk (PAR) = 300/100,000 - 200/80,000 = 50/100,000 per year

Population attributable risk per cent (PAR%) = PAR/(rate in total population) × 100 = 50/300 × 100 = 16.7%

* In parentheses 95% confidence intervals computed using the formulas in the boxes.
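The quantities in table 2 can be recomputed from the case counts and person-years. In the sketch below, the confidence interval for the rate difference uses a standard large-sample formula; that formula is an assumption, since the boxes referred to in the footnote are not reproduced here, but it does yield the interval printed in the table.

```python
from math import sqrt

# Counts from table 2.
cases_exp, py_exp = 100, 20_000
cases_unexp, py_unexp = 200, 80_000

rate_exp = cases_exp / py_exp
rate_unexp = cases_unexp / py_unexp
rate_total = (cases_exp + cases_unexp) / (py_exp + py_unexp)

rate_difference = rate_exp - rate_unexp        # also the ARe
rate_ratio = rate_exp / rate_unexp
are_percent = (rate_ratio - 1) / rate_ratio * 100
par = rate_total - rate_unexp
par_percent = par / rate_total * 100

# Assumed large-sample standard error of a difference of two rates.
se_rd = sqrt(cases_exp / py_exp**2 + cases_unexp / py_unexp**2)
rd_ci = (rate_difference - 1.96 * se_rd, rate_difference + 1.96 * se_rd)

scale = 100_000  # express rates per 100,000 person-years
print(f"RD   = {rate_difference * scale:.0f} per 100,000 "
      f"({rd_ci[0] * scale:.2f} to {rd_ci[1] * scale:.2f})")
print(f"RR   = {rate_ratio:.1f}")
print(f"ARe% = {are_percent:.0f}%")
print(f"PAR  = {par * scale:.0f} per 100,000")
print(f"PAR% = {par_percent:.1f}%")
```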

Relative effects are based on ratios of rates or risk measures, instead of differences. A rate ratio is the ratio of a rate in one population to the rate in another. The rate ratio has also been called the risk ratio, relative risk, relative rate, and incidence (or mortality) rate ratio. The measure is dimensionless and ranges from 0 to infinity. When the rate in two groups is similar (i.e., there is no effect from the exposure), the rate ratio is equal to unity (1). An exposure that increased risk would yield a rate ratio greater than unity, while a protective factor would yield a ratio between 0 and 1. The excess relative risk is the relative risk minus 1. For example, a relative risk of 1.4 may also be expressed as an excess relative risk of 40%.

In case-control studies (also called case-referent studies), persons with disease are identified (cases) and persons without disease are identified (controls or referents). Past exposures of the two groups are compared. The odds of exposure among cases are compared with the odds of exposure among controls. Complete counts of the source populations of exposed and unexposed persons are not available, so disease rates cannot be calculated. Instead, cases and controls are compared with respect to exposure by calculating the relative odds, or odds ratio (table 3).

Table 3. Measures of association for case-control studies: Exposure to wood dust and adenocarcinoma of the nasal cavity and paranasal sinuses

| | Cases | Controls |
|---|---|---|
| Exposed | 18 | 55 |
| Unexposed | 5 | 140 |
| Total | 23 | 195 |

Relative odds (odds ratio) (OR) = (18 × 140)/(5 × 55) = 9.2

Attributable risk per cent in the exposed (ARe%) = (OR - 1)/OR × 100 = 89%

Population attributable risk per cent (PAR%) = p(OR - 1)/[1 + p(OR - 1)] × 100 = 70%

where p = proportion of exposed controls = 55/195 = 0.28

* In parentheses 95% confidence intervals computed using the formulas in the box overleaf.

Source: Adapted from Hayes et al. 1986.
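The measures for table 3 can be recomputed from the four cell counts. The sketch below makes two assumptions that are not confirmed by the text: the population attributable risk per cent uses Levin's formula with the exposure prevalence estimated from the controls (consistent with the note on the proportion of exposed controls), and the confidence interval uses Woolf's logit method, which may differ from the formula in the box the footnote refers to.

```python
from math import exp, log, sqrt

# Cell counts from table 3.
exposed_cases, exposed_controls = 18, 55
unexposed_cases, unexposed_controls = 5, 140

# Cross-product (odds) ratio.
odds_ratio = (exposed_cases * unexposed_controls) / (
    unexposed_cases * exposed_controls
)

# Attributable risk per cent in the exposed, with the OR standing in for the RR.
are_percent = (odds_ratio - 1) / odds_ratio * 100

# Assumed: Levin's formula, exposure prevalence taken from the controls.
p_exposed = exposed_controls / (exposed_controls + unexposed_controls)
excess = p_exposed * (odds_ratio - 1)
par_percent = excess / (1 + excess) * 100

# Assumed: Woolf's (logit) 95% confidence interval for the odds ratio.
se_log_or = sqrt(
    1 / exposed_cases
    + 1 / exposed_controls
    + 1 / unexposed_cases
    + 1 / unexposed_controls
)
or_ci = (
    exp(log(odds_ratio) - 1.96 * se_log_or),
    exp(log(odds_ratio) + 1.96 * se_log_or),
)

print(f"OR   = {odds_ratio:.2f} ({or_ci[0]:.2f} to {or_ci[1]:.2f})")
print(f"ARe% = {are_percent:.0f}%")
print(f"PAR% = {par_percent:.0f}%")
```

The wide interval around the odds ratio reflects the small number of unexposed cases (five), a point taken up under significance testing.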

Relative measures of effect are used more frequently than absolute measures to report the strength of an association. Absolute measures, however, may provide a better indication of the public health impact of an association. A small relative increase in a common disease, such as heart disease, may affect more persons (large risk difference) and have more of an impact on public health than a large relative increase (but small absolute difference) in a rare disease, such as angiosarcoma of the liver.

Significance Testing

Testing for statistical significance is often performed on measures of effect to evaluate the likelihood that the effect observed differs from the null hypothesis (i.e., no effect). While many studies, particularly in other areas of biomedical research, may express significance by p-values, epidemiological studies typically present confidence intervals (CI) (also called confidence limits). A 95% confidence interval, for example, is a range of values for the effect measure that includes the estimate obtained from the study data and that has a 95% probability of including the true value. Values outside the interval are deemed unlikely to be the true measure of effect. If the CI for a rate ratio includes unity, then there is no statistically significant difference between the groups being compared.

Confidence intervals are more informative than p-values alone. The size of a p-value is determined by either or both of two factors: the magnitude of the measure of association (e.g., rate ratio, risk difference) and the size of the populations under study. For example, a small difference in disease rates observed in a large population may yield a highly significant p-value. The reason for the small p-value cannot be identified from the p-value alone. Confidence intervals, however, allow us to disentangle the two factors. First, the magnitude of the effect is discernible from the value of the effect measure and the range of values encompassed by the interval. Larger risk ratios, for example, indicate a stronger effect. Second, the size of the population affects the width of the confidence interval. Small populations with statistically unstable estimates generate wider confidence intervals than larger populations.
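The link between study size and interval width can be illustrated numerically. The sketch below is an assumption-laden example rather than the method of any particular study: it uses Woolf's logit approximation for the confidence interval of an odds ratio, and the counts are hypothetical, chosen so that both studies have the same odds ratio while one is ten times larger.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """OR and approximate CI from a 2x2 table (Woolf's logit method).

    a, b: exposed and unexposed cases; c, d: exposed and unexposed controls.
    z=1.96 corresponds to a 95% interval.
    """
    odds_ratio = (a * d) / (b * c)
    # Standard error of ln(OR) under Woolf's approximation.
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts: same odds ratio, second study ten times larger.
small = odds_ratio_ci(12, 28, 10, 40)
large = odds_ratio_ci(120, 280, 100, 400)
for label, (or_, low, high) in (("small", small), ("large", large)):
    print(f"{label}: OR = {or_:.2f}, 95% CI {low:.2f} to {high:.2f}")
```

In this illustration the point estimate is identical in both studies, but only the larger study yields an interval that excludes unity.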

The level of confidence chosen to express the variability of the results (the “statistical significance”) is arbitrary, but has traditionally been 95%, which corresponds to a p-value of 0.05. A 95% confidence interval has a 95% probability of containing the true measure of the effect. Other levels of confidence, such as 90%, are occasionally used.

Exposures can be dichotomous (e.g., exposed and unexposed), or may involve many levels of exposure. Effect measures (i.e., response) can vary by level of exposure. Evaluating exposure-response relationships is an important part of interpreting epidemiological data. The analogue to exposure-response in animal studies is “dose-response”. If the response increases with exposure level, an association is more likely to be causal than if no trend is observed. Statistical tests to evaluate exposure-response relationships include the Mantel extension test and the chi-square trend test.

Standardization

To take into account factors other than the primary exposure of interest and the disease, measures of association may be standardized through stratification or regression techniques. Stratification means dividing the populations into homogeneous groups with respect to the factor (e.g., gender groups, age groups, smoking groups). Risk ratios or odds ratios are calculated for each stratum and overall weighted averages of the risk ratios or odds ratios are calculated. These overall values reflect the association between the primary exposure and disease, adjusted for the stratification factor, i.e., the association with the effects of the stratification factor removed.
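As an illustration of stratified adjustment, the sketch below pools stratum-specific odds ratios with Mantel-Haenszel weights. This weighting scheme is one common choice and is an assumption here, since the text speaks only of weighted averages. The counts are hypothetical and were chosen so that the odds ratio is 2.0 within each smoking stratum.

```python
def odds_ratio(a, b, c, d):
    """Cross-product odds ratio for one 2x2 table."""
    return (a * d) / (b * c)


def mantel_haenszel_or(strata):
    """Mantel-Haenszel summary odds ratio over a list of 2x2 tables.

    Each stratum is (a, b, c, d): exposed cases, unexposed cases,
    exposed controls, unexposed controls.
    """
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


# Hypothetical counts, stratified by smoking status.
strata = [
    (10, 40, 20, 160),  # non-smokers
    (60, 30, 40, 40),   # smokers
]
# Collapse the strata into one crude table by summing cell by cell.
crude_table = [sum(cells) for cells in zip(*strata)]
crude_or = odds_ratio(*crude_table)
adjusted_or = mantel_haenszel_or(strata)
print(f"crude OR = {crude_or:.2f}, adjusted OR = {adjusted_or:.2f}")
```

With these made-up numbers the crude odds ratio is inflated relative to the adjusted one, because smoking is associated with both exposure and disease in the example.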

A standardized rate ratio (SRR) is the ratio of two standardized rates. In other words, an SRR is a weighted average of stratum-specific rate ratios where the weights for each stratum are the person-time distribution of the non-exposed, or referent, group. SRRs for two or more groups may be compared if the same weights are used. Confidence intervals can be constructed for SRRs as for rate ratios.

The standardized mortality ratio (SMR) is a weighted average of age-specific rate ratios where the weights (e.g., person-time at risk) come from the group under study and the rates come from the referent population, the opposite of the situation in an SRR. The usual referent population is the general population, whose mortality rates are often readily available and, being based on large numbers, are more stable than rates from a non-exposed cohort or subgroup of the occupational population under study. Using the weights from the cohort instead of the referent population is called indirect standardization. The SMR is the ratio of the observed number of deaths in the cohort to the expected number, based on the rates from the referent population (the ratio is typically multiplied by 100 for presentation). If no association exists, the SMR equals 100. It should be noted that because the rates come from the referent population and the weights come from the study group, two or more SMRs tend not to be comparable. This non-comparability is often forgotten in the interpretation of epidemiological data, and erroneous conclusions can be drawn.
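Indirect standardization amounts to applying the referent population's age-specific rates to the cohort's own person-years. A minimal sketch follows; the age bands, person-years, rates and observed count are all hypothetical values chosen for illustration, and the function name is an assumption.

```python
def indirect_smr(observed_deaths, person_years_by_age, reference_rates_by_age):
    """Return (SMR, expected deaths) by indirect standardization.

    person_years_by_age: cohort person-years in each age band (the weights).
    reference_rates_by_age: deaths per person-year in the referent population.
    """
    expected = sum(
        person_years_by_age[age] * reference_rates_by_age[age]
        for age in person_years_by_age
    )
    return observed_deaths / expected * 100, expected


# Hypothetical cohort person-years and referent mortality rates.
person_years = {"40-49": 5000, "50-59": 3000, "60-69": 1000}
reference_rates = {"40-49": 0.002, "50-59": 0.005, "60-69": 0.012}

smr_value, expected_deaths = indirect_smr(50, person_years, reference_rates)
print(f"expected = {expected_deaths:.1f}, SMR = {smr_value:.0f}")
```

Because the weights are the cohort's own person-years, a second cohort with a different age structure would be standardized to a different set of weights, which is why two SMRs are not directly comparable.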

Healthy Worker Effect

It is very common for occupational cohorts to have lower total mortality than the general population, even if the workers are at increased risk for selected causes of death from workplace exposures. This phenomenon, called the healthy worker effect, reflects the fact that any group of employed persons is likely to be healthier, on average, than the general population, which includes workers and persons unable to work due to illnesses and disabilities. The overall mortality rate in the general population tends to be higher than the rate in workers. The effect varies in strength by cause of death. For example, it appears to be less important for cancer in general than for chronic obstructive lung disease. One reason for this is that most cancers are unlikely to arise from a predisposition that would have influenced job or career selection at a younger age. The healthy worker effect in a given group of workers tends to diminish over time.

Proportional Mortality

Sometimes a complete tabulation of a cohort (i.e., person-time at risk) is not available and there is information only on the deaths or some subset of deaths experienced by the cohort (e.g., deaths among retirees and active employees, but not among workers who left employment before becoming eligible for a pension). Computation of person-years requires special methods to deal with person-time assessment, including life-table methods. Without total person-time information on all cohort members, regardless of disease status, SMRs and SRRs cannot be calculated. Instead, proportional mortality ratios (PMRs) can be used. A PMR is the ratio of the observed number of deaths due to a specific cause to the expected number, multiplied by 100. The expected number is the proportion of total deaths due to that cause in the referent population multiplied by the total number of deaths in the study group.

Because the proportion of deaths from all causes combined must equal 1 (PMR=100), some PMRs may appear to be in excess, but are actually artificially inflated due to real deficits in other causes of death. Similarly, some apparent deficits may merely reflect real excesses of other causes of death. For example, if aerial pesticide applicators have a large real excess of deaths due to accidents, the mathematical requirement that the PMR for all causes combined equal 100 may cause one or more other causes of death to appear deficient even if the mortality is excessive. To ameliorate this potential problem, researchers interested primarily in cancer can calculate proportionate cancer mortality ratios (PCMRs). PCMRs compare the observed number of cancer deaths to the number expected based on the proportion of total cancer deaths (rather than all deaths) for the cancer of interest in the referent population multiplied by the total number of cancer deaths in the study group, multiplied by 100. Thus, the PCMR will not be affected by an aberration (excess or deficit) in a non-cancer cause of death, such as accidents, heart disease or non-malignant lung disease.
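The way an excess in one cause can depress the PMR for another, and how the PCMR sidesteps this, can be shown with a small calculation. The sketch below uses hypothetical numbers throughout: a study group with a large share of accident deaths, and assumed referent proportions for lung cancer. The function name is illustrative.

```python
def proportional_ratio(observed, total_deaths_in_group, reference_proportion):
    """Observed / expected x 100, where the expected number is the referent
    proportion applied to the relevant total of deaths in the study group."""
    expected = total_deaths_in_group * reference_proportion
    return observed / expected * 100


# Hypothetical study group with a large excess of accident deaths.
total_deaths = 200
cancer_deaths = 30
lung_cancer_deaths = 9

# Hypothetical referent population proportions for lung cancer.
lung_share_of_all_deaths = 0.06
lung_share_of_cancer_deaths = 0.25

# PMR: the denominator is all deaths, so it is diluted by the accident excess.
pmr = proportional_ratio(lung_cancer_deaths, total_deaths,
                         lung_share_of_all_deaths)
# PCMR: the denominator is cancer deaths only.
pcmr = proportional_ratio(lung_cancer_deaths, cancer_deaths,
                          lung_share_of_cancer_deaths)
print(f"PMR = {pmr:.0f}, PCMR = {pcmr:.0f}")
```

In this illustration the PMR suggests a deficit of lung cancer while the PCMR suggests an excess, because only the PMR is affected by the many accident deaths in the denominator.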

PMR studies can better be analysed using mortality odds ratios (MORs), in essence analysing the data as if they were from a case-control study. The “controls” are the deaths from a subset of all deaths that are thought to be unrelated to the exposure under study. For example, if the main interest of the study were cancer, mortality odds ratios could be calculated comparing exposure among the cancer deaths to exposure among the cardiovascular deaths. This approach, like the PCMR, avoids the problems with the PMR which arise when a fluctuation in one cause of death affects the apparent risk of another simply because the overall PMR must equal 100. The choice of the control causes of death is critical, however. As mentioned above, they must not be related to the exposure, but the possible relationship between exposure and disease may not be known for many potential control diseases.

Attributable Risk

There are measures available which express the amount of disease that would be attributable to an exposure if the observed association between the exposure and disease were causal. The attributable risk in the exposed (ARe) is the disease rate in the exposed minus the rate in the unexposed. Because disease rates cannot be measured directly in case-control studies, the ARe is calculable only for cohort studies. A related, more intuitive, measure, the attributable risk percent in the exposed (ARe%), can be obtained from either study design. The ARe% is the proportion of cases arising in the exposed population that is attributable to the exposure (see table 2 and table 3 for formula). The ARe% is the rate ratio (or the odds ratio) minus 1, divided by the rate ratio (or odds ratio), multiplied by 100.

The population attributable risk (PAR) and the population attributable risk per cent (PAR%), or aetiological fraction, express the amount of disease in the total population, which comprises exposed and unexposed persons, that is due to the exposure if the observed association is causal. The PAR can be obtained from cohort studies (table 28.3) and the PAR% can be calculated in both cohort and case-control studies (table 2 and table 3).

Representativeness

Several measures of risk have been described. Each rests on underlying methods for counting events and on the representativeness of these events for a defined group. When results are compared across studies, an understanding of the methods used is essential for explaining any observed differences.

Options in Study Design

The epidemiologist is interested in relationships between variables, chiefly exposure and outcome variables. Typically, epidemiologists want to ascertain whether the occurrence of disease is related to the presence of a particular agent (exposure) in the population. The ways in which these relationships are studied may vary considerably. One can identify all persons who are exposed to that agent and follow them up to measure the incidence of disease, comparing such incidence with disease occurrence in a suitable unexposed population. Alternatively, one can simply sample from among the exposed and unexposed, without having a complete enumeration of them. Or, as a third alternative, one can identify all people who develop a disease of interest in a defined time period (“cases”) and a suitable group of disease-free individuals (a sample of the source population of cases), and ascertain whether the patterns of exposure differ between the two groups. Follow-up of study participants is one option (in so-called longitudinal studies): in this situation, a time lag exists between the occurrence of exposure and disease onset. One alternative option is a cross-section of the population, where both exposure and disease are measured at the same point in time.

In this article, attention is given to the common study designs—cohort, case-referent (case-control) and cross-sectional. To set the stage for this discussion, consider a large viscose rayon factory in a small town. An investigation into whether carbon disulphide exposure increases the risk of cardiovascular disease is started. The investigation has several design choices, some more and some less obvious. A first strategy is to identify all workers who have been exposed to carbon disulphide and follow them up for cardiovascular mortality.

Cohort Studies

A cohort study encompasses research participants sharing a common event, the exposure. A classical cohort study identifies a defined group of exposed people, and then everyone is followed up and their morbidity and/or mortality experience is registered. Apart from a common qualitative exposure, the cohort should also be defined on other eligibility criteria, such as age range, gender (male or female or both), minimum duration and intensity of exposure, freedom from other exposures, and the like, to enhance the study’s validity and efficiency. At entrance, all cohort members should be free of the disease under study, according to the empirical set of criteria used to measure the disease.

If, for example, in the cohort study on the effects of carbon disulphide on coronary morbidity, coronary heart disease is empirically measured as clinical infarctions, those who, at the baseline, have had a history of coronary infarction must be excluded from the cohort. By contrast, electrocardiographic abnormalities without a history of infarction can be accepted. However, if the appearance of new electrocardiographic changes is the empirical outcome measure, the cohort members should also have normal electrocardiograms at the baseline.

The morbidity (in terms of incidence) or the mortality of an exposed cohort should be compared to a reference cohort which ideally should be as similar as possible to the exposed cohort in all relevant aspects, except for the exposure, to determine the relative risk of illness or death from exposure. Using a similar but unexposed cohort as a provider of the reference experience is preferable to the common (mal)practice of comparing the morbidity or mortality of the exposed cohort to age-standardized national figures, because the general population falls short on fulfilling even the most elementary requirements for comparison validity. The Standardized Morbidity (or Mortality) Ratio (SMR), resulting from such a comparison, usually generates an underestimate of the true risk ratio because of a bias operating in the exposed cohort, leading to the lack of comparability between the two populations. This comparison bias has been named the “Healthy Worker Effect”. However, it is really not a true “effect”, but a bias from negative confounding, which in turn has arisen from health-selective turnover in an employed population. (People with poor health tend to move out from, or never enter, “exposed” cohorts, their end destination often being the unemployed section of the general population.)

Because an “exposed” cohort is defined as having a certain exposure, only effects caused by that single exposure (or mix of exposures) can be studied simultaneously. On the other hand, the cohort design permits the study of several diseases at the same time. One can also study concomitantly different manifestations of the same disease—for example, angina, ECG changes, clinical myocardial infarctions and coronary mortality. While well-suited to test specific hypotheses (e.g., “exposure to carbon disulphide causes coronary heart disease”), a cohort study also provides answers to the more general question: “What diseases are caused by this exposure?”

For example, in a cohort study investigating the risk to foundry workers of dying from lung cancer, the mortality data are obtained from the national register of causes of death. Although the study was to determine if foundry dust causes lung cancer, the data source, with the same effort, also gives information on all other causes of death. Therefore, other possible health risks can be studied at the same time.

The timing of a cohort study can either be retrospective (historical) or prospective (concurrent). In both instances the design structure is the same. A full enumeration of exposed people occurs at some point or period in time, and the outcome is measured for all individuals through a defined end point in time. The difference between prospective and retrospective is in the timing of the study. If retrospective, the end point has already occurred; if prospective, one has to wait for it.

In the retrospective design, the cohort is defined at some point in the past (for example, those exposed on 1 January 1961, or those taking on exposed work between 1961 and 1970). The morbidity and/or mortality of all cohort members is then followed to the present. Although “all” means that also those having left the job must be traced, in practice a 100 per cent coverage can rarely be achieved. However, the more complete the follow-up, the more valid is the study.

In the prospective design, the cohort is defined at the present, or during some future period, and the morbidity is then followed into the future.

When doing cohort studies, enough time must be allowed for follow-up so that the end points of concern have sufficient time to manifest. Sometimes historical records are available for only a short period into the past. It is nevertheless desirable to take advantage of this data source, because it means that a shorter period of prospective follow-up is needed before results from the study become available. In these situations, a combination of the retrospective and the prospective cohort study designs can be efficient. The general layout of frequency tables presenting cohort data is shown in table 1.

Table 1. The general layout of frequency tables presenting cohort data

| Component of disease rate | Exposed cohort | Unexposed cohort |
|---|---|---|
| Cases of illness or death | c1 | c0 |
| Number of people in cohort | N1 | N0 |

The observed proportion of diseased in the exposed cohort is calculated as:

$$\frac{c_1}{N_1}$$

and that of the reference cohort as:

$$\frac{c_0}{N_0}$$

The rate ratio then is expressed as:

$$RR = \frac{c_1 / N_1}{c_0 / N_0}$$

N0 and N1 are usually expressed in person-time units instead of as the number of people in the populations. Person-years are computed for each individual separately. Different people often enter the cohort during a period of time, not at the same date. Hence their follow-up times start at different dates. Likewise, after their death, or after the event of interest has occurred, they are no longer “at risk” and should not continue to contribute person-years to the denominator.

If the RR is greater than 1, the morbidity of the exposed cohort is higher than that of the reference cohort, and vice versa. The RR is a point estimate and a confidence interval (CI) should be computed for it. The larger the study, the narrower the confidence interval will become. If RR = 1 is not included in the confidence interval (e.g., the 95% CI is 1.4 to 5.8), the result can be considered as “statistically significant” at the chosen level of probability (in this example, α = 0.05).
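The person-time bookkeeping and the resulting rate ratio can be sketched as follows. This is a toy example under stated assumptions: each hypothetical cohort member is a tuple of entry year, exit year (death, event of interest, or end of follow-up) and a case flag, follow-up is measured in whole years, and the function name is illustrative.

```python
def summarize(cohort):
    """Return (cases, person_years) for (entry, exit, is_case) records.

    Each member contributes time only from entry until exit, where exit is
    the date of the event, death, or the end of follow-up.
    """
    cases = sum(1 for _, _, is_case in cohort if is_case)
    person_years = sum(exit_ - entry for entry, exit_, _ in cohort)
    return cases, person_years


# Hypothetical members entering at different dates.
exposed = [
    (1961, 1975, True),
    (1963, 1990, False),
    (1966, 1985, True),
    (1970, 1990, False),
]
unexposed = [
    (1961, 1990, False),
    (1962, 1990, False),
    (1965, 1988, True),
    (1970, 1990, False),
]

c1, n1 = summarize(exposed)
c0, n0 = summarize(unexposed)
rate_ratio = (c1 / n1) / (c0 / n0)
print(f"exposed: {c1} cases / {n1} person-years")
print(f"unexposed: {c0} cases / {n0} person-years")
print(f"RR = {rate_ratio:.2f}")
```

A real cohort would be far larger, and a confidence interval would accompany the point estimate; with so few cases the estimate here is only a demonstration of the arithmetic.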

If the general population is used as the reference population, c0 is replaced by the “expected” figure, E(c1), derived from the age-standardized morbidity or mortality rates of that population (i.e., the number of cases that would have occurred in the cohort, had the exposure of interest not taken place). This yields the Standardized Mortality (or Morbidity) Ratio, SMR. Thus,

$$SMR = \frac{c_1}{E(c_1)} \times 100$$

Also for the SMR, a confidence interval should be computed. It is better to give this measure in a publication than a p-value, because statistical significance testing is meaningless if the general population is the reference category. Such comparison entails a considerable bias (the healthy worker effect noted above), and statistical significance testing, originally developed for experimental research, is misleading in the presence of systematic error.

Suppose the question is whether quartz dust causes lung cancer. Usually, quartz dust occurs together with other carcinogens—such as radon daughters and diesel exhaust in mines, or polyaromatic hydrocarbons in foundries. Granite quarries do not expose the stone workers to these other carcinogens. Therefore the problem is best studied among stone workers employed in granite quarries.

Suppose then that all 2,000 workers, having been employed by 20 quarries between 1951 and 1960, are enrolled in the cohort and their cancer incidence (alternatively only mortality) is followed starting at ten years after first exposure (to allow for an induction time) and ending in 1990. This is a 20- to 30-year (depending on the year of entry) or, say, on average, a 25-year follow-up of the cancer mortality (or morbidity) among 1,000 of the quarry workers who were specifically granite workers. The exposure history of each cohort member must be recorded. Those who have left the quarries must be traced and their later exposure history recorded. In countries where all inhabitants have unique registration numbers, this is a straightforward procedure, governed chiefly by national data protection laws. Where no such system exists, tracing employees for follow-up purposes can be extremely difficult. Where appropriate death or disease registries exist, the mortality from all causes, all cancers and specific cancer sites can be obtained from the national register of causes of death. (For cancer mortality, the national cancer registry is a better source because it contains more accurate diagnoses. In addition, incidence (or morbidity) data can be obtained from it.) The death rates (or cancer incidence rates) can be compared to “expected numbers”, computed from national rates using the person-years of the exposed cohort as a basis.

Suppose that 70 fatal cases of lung cancer are found in the cohort, whereas the expected number (the number which would have occurred had there been no exposure) is 35. Then:

c1 = 70, E(c1) = 35

$$SMR = \frac{c_1}{E(c_1)} \times 100 = \frac{70}{35} \times 100 = 200$$

Thus, the SMR = 200, which indicates a twofold increase in risk of dying from lung cancer among the exposed. If detailed exposure data are available, the cancer mortality can be studied as a function of different latency times (say, 10, 15, 20 years), work in different types of quarries (different kinds of granite), different historical periods, different exposure intensities and so on. However, 70 cases cannot be subdivided into too many categories, because the number falling into each one rapidly becomes too small for statistical analysis.
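The quarry example can be checked, and given an approximate confidence interval, with the sketch below. The interval uses Byar's approximation to the Poisson limits for the observed count; that choice is an assumption made here for illustration, since the text does not specify a method, and the function name is likewise illustrative.

```python
import math


def smr_with_byar_ci(observed, expected, z=1.96):
    """SMR (x 100) with an approximate CI from Byar's approximation.

    observed: number of observed deaths (treated as a Poisson count).
    expected: expected deaths from the referent rates.
    """
    smr = observed / expected * 100
    # Byar's approximation to the lower and upper Poisson limits.
    lower_count = observed * (
        1 - 1 / (9 * observed) - z / (3 * math.sqrt(observed))) ** 3
    upper_count = (observed + 1) * (
        1 - 1 / (9 * (observed + 1)) + z / (3 * math.sqrt(observed + 1))) ** 3
    return smr, lower_count / expected * 100, upper_count / expected * 100


# 70 observed and 35 expected lung cancer deaths, as in the example above.
smr, lower, upper = smr_with_byar_ci(70, 35)
print(f"SMR = {smr:.0f}, approximate 95% CI {lower:.0f} to {upper:.0f}")
```

The interval reflects only random variation in the 70 observed deaths; it does not account for systematic error such as the healthy worker effect.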

Both types of cohort designs have advantages and disadvantages. A retrospective study can, as a rule, measure only mortality, because data for milder manifestations usually are lacking. Cancer registries are an exception, and perhaps a few others, such as stroke registries and hospital discharge registries, in that incidence data also are available. Assessing past exposure is always a problem and the exposure data are usually rather weak in retrospective studies. This can lead to effect masking. On the other hand, since the cases have already occurred, the results of the study become available much sooner; in, say, two to three years.

A prospective cohort study can be better planned to comply with the researcher’s needs, and exposure data can be collected accurately and systematically. Several different manifestations of a disease can be measured. Measurements of both exposure and outcome can be repeated, and all measurements can be standardized and their validity can be checked. However, if the disease has a long latency (such as cancer), much time, even 20 to 30 years, will need to pass before the results of the study can be obtained. Much can happen during this time: for example, turnover of researchers, improvements in techniques for measuring exposure, remodelling or closure of the plants chosen for study and so forth. All these circumstances endanger the success of the study. The costs of a prospective study are also usually higher than those of a retrospective study, but this is mostly due to the much greater number of measurements (repeated exposure monitoring, clinical examinations and so on), and not to more expensive death registration. Therefore the costs per unit of information do not necessarily exceed those of a retrospective study. In view of all this, prospective studies are more suited for diseases with rather short latency, requiring short follow-up, while retrospective studies are better for diseases with a long latency.

Case-Control (or Case-Referent) Studies

Let us go back to the viscose rayon plant. A retrospective cohort study may not be feasible if the rosters of the exposed workers have been lost, while a prospective cohort study would yield sound results only after a very long time. An alternative would then be the comparison between those who died from coronary heart disease in the town, in the course of a defined time period, and a sample of the total population in the same age group.

The classical case-control (or, case-referent) design is based on sampling from a dynamic (open, characterized by a turnover of membership) population. This population can be that of a whole country, a district or a municipality (as in our example), or it can be the administratively defined population from which patients are admitted to a hospital. The defined population provides both the cases and the controls (or referents).

The technique is to gather all the cases of the disease in question that exist at a point in time (prevalent cases), or have occurred during a defined period of time (incident cases). The cases thus can be drawn from morbidity or mortality registries, or be gathered directly from hospitals or other sources having valid diagnostics. The controls are drawn as a sample from the same population, either from among non-cases or from the entire population. Another option is to select patients with another disease as controls, but then these patients must be representative of the population from which the cases came. There may be one or more controls (i.e., referents) for each case. The sampling approach differs from cohort studies, which examine the entire population. It goes without saying that the gains in terms of the lower costs of case-control designs are considerable, but it is important that the sample is representative of the whole population from which the cases originated (i.e., the “study base”)—otherwise the study can be biased.

When cases and controls have been identified, their exposure histories are gathered by questionnaires, interviews or, in some instances, from existing records (e.g., payroll records from which work histories can be deduced). The data can be obtained either from the participants themselves or, if they are deceased, from close relatives. To ensure symmetrical recall, it is important that the proportion of dead and live cases and referents be equal, because close relatives usually give a less detailed exposure history than the participants themselves. Information about the exposure pattern among cases is compared to that among controls, providing an estimate of the odds ratio (OR), an indirect measure of the risk among the exposed to incur the disease relative to that of the unexposed.

Because the case-control design relies on the exposure information obtained from patients with a certain disease (i.e., cases) along with a sample of non-diseased people (i.e., controls) from the population from which the cases originated, the connection with exposures can be investigated for only one disease. By contrast, this design allows the concomitant study of the effect of several different exposures. The case-referent study is well suited to address specific research questions (e.g., “Is coronary heart disease caused by exposure to carbon disulphide?”), but it also can help to answer the more general question: “What exposures can cause this disease?”

The question of whether exposure to organic solvents causes primary liver cancer is raised (as an example) in Europe. Cases of primary liver cancer, a comparatively rare disease in Europe, are best gathered from a national cancer registry. Suppose that all cancer cases occurring during three years form the case series. The population base for the study is then a three-year follow-up of the entire population in the European country in question. The controls are drawn as a sample of persons without liver cancer from the same population. For reasons of convenience (meaning that the same source can be used for sampling the controls) patients with another cancer type, not related to solvent exposure, can be used as controls. Colon cancer has no known relation to solvent exposure; hence this cancer type can be included among the controls. (Using cancer controls minimizes recall bias in that the accuracy of the history given by cases and controls is, on average, symmetrical. However, if some presently unknown connection between colon cancer and exposure to solvents were revealed later, this type of control would cause an underestimation of the true risk—not an exaggeration of it.)

For each case of liver cancer, two controls are drawn in order to achieve greater statistical power. (One could draw even more controls, but available funds may be a limiting factor. If funds were not limited, perhaps as many as four controls would be optimal. Beyond four, the law of diminishing returns applies.) After obtaining appropriate permission from data protection authorities, the cases and controls, or their close relatives, are approached, usually by means of a mailed questionnaire, asking for a detailed occupational history with special emphasis on a chronological list of the names of all employers, the departments of work, the job tasks in different employment, and the period of employment in each respective task. These data can be obtained from relatives with some difficulty; however, specific chemicals or trade names usually are not well recalled by relatives. The questionnaire also should include questions on possible confounding data, such as alcohol use, exposure to foodstuffs containing aflatoxins, and hepatitis B and C infection. In order to obtain a sufficiently high response rate, two reminders are sent to non-respondents at three-week intervals. This usually results in a final response rate in excess of 70%. The occupational history is then reviewed by an industrial hygienist, without knowledge of the respondent’s case or control status, and exposure is classified into high, medium, low, none, and unknown exposure to solvents. The ten years of exposure immediately preceding the cancer diagnosis are disregarded, because it is not biologically plausible that initiator-type carcinogens can be the cause of the cancer if the latency time is that short (although promoters, in fact, could). At this stage it is also possible to differentiate between different types of solvent exposure. Because a complete employment history has been given, it is also possible to explore other exposures, although the initial study hypothesis did not include these. 
Odds ratios can then be computed for exposure to any solvent, specific solvents, solvent mixtures, different categories of exposure intensity, and for different time windows in relation to cancer diagnosis. It is advisable to exclude from analysis those with unknown exposure.
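Disregarding the exposure that falls in the years immediately before diagnosis can be expressed as a simple lag on each work history. The sketch below is one hypothetical way to do this; the record layout (start year, end year, exposed flag) and the function name are assumptions, not part of the study described.

```python
def lagged_exposure_years(jobs, diagnosis_year, lag_years=10):
    """Sum exposed years, ignoring exposure within `lag_years` of diagnosis.

    jobs: list of (start_year, end_year, exposed) tuples from the
    occupational history.
    """
    cutoff = diagnosis_year - lag_years
    total = 0
    for start, end, exposed in jobs:
        if not exposed:
            continue
        # Only the part of the job that ends before the cutoff is counted.
        counted_end = min(end, cutoff)
        if counted_end > start:
            total += counted_end - start
    return total


# Hypothetical work history of one respondent diagnosed in 1980.
history = [
    (1950, 1960, True),   # solvent-exposed job
    (1960, 1972, False),  # unexposed job
    (1972, 1985, True),   # solvent-exposed job inside the lag window
]
lagged = lagged_exposure_years(history, diagnosis_year=1980)
unlagged = lagged_exposure_years(history, diagnosis_year=1980, lag_years=0)
print(f"lagged: {lagged} years, unlagged: {unlagged} years")
```

Varying `lag_years` gives the different time windows in relation to diagnosis for which separate odds ratios can be computed.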

The cases and controls can be sampled and analysed either as independent series or matched groups. Matching means that controls are selected for each case based on certain characteristics or attributes, to form pairs (or sets, if more than one control is chosen for each case). Matching is usually done on one or more factors, such as age, vital status, smoking history, calendar time of case diagnosis, and the like. In our example, cases and controls are then matched on age and vital status. (Vital status is important, because patients themselves usually give a more accurate exposure history than close relatives, and symmetry is essential for validity reasons.) Today, the recommendation is to be restrictive with matching, because this procedure can introduce negative (effect-masking) confounding.

If one control is matched to one case, the design is called a matched-pair design. Provided the costs of studying more controls are not prohibitive, using more than one referent per case improves the stability of the OR estimate, which makes the study more size-efficient.
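The diminishing return from additional controls can be illustrated with a commonly quoted rule of thumb, which is an assumption added here and not stated in the text: when the true OR is close to 1, a design with m controls per case achieves roughly m / (m + 1) of the statistical efficiency obtainable with an unlimited number of controls. A minimal Python sketch (the function name is illustrative):

```python
def relative_efficiency(controls_per_case: int) -> float:
    """Approximate efficiency of a 1:m design relative to unlimited controls.

    Assumes the m / (m + 1) rule of thumb, which applies near the null (OR ~ 1).
    """
    if controls_per_case < 1:
        raise ValueError("need at least one control per case")
    return controls_per_case / (controls_per_case + 1)


if __name__ == "__main__":
    for m in (1, 2, 4, 8):
        print(f"{m} control(s) per case: {relative_efficiency(m):.2f}")
```

Under this approximation, moving from one to two controls per case raises relative efficiency from 0.50 to about 0.67, whereas doubling from four to eight controls only raises it from 0.80 to about 0.89.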

The layout of the results of an unmatched case-control study is shown in table 2.

Table 2. Sample layout of case-control data

| | Exposure classification | |
|---|---|---|
| | Exposed | Unexposed |
| Cases | c1 | c0 |
| Non-cases | n1 | n0 |

From this table, the odds of exposure among the cases, and the odds of exposure among the population (the controls), can be computed and divided to yield the exposure odds ratio, OR. For the cases, the exposure odds is c1 / c0, and for the controls it is n1 / n0. The estimate of the OR is then:

$$\mathrm{OR} = \frac{c_1 / c_0}{n_1 / n_0} = \frac{c_1 n_0}{c_0 n_1}$$

If relatively more cases than controls have been exposed, the OR is in excess of 1 and vice versa. Confidence intervals must be calculated and provided for the OR, in the same manner as for the RR.

By way of a further example, an occupational health centre of a large company serves 8,000 employees exposed to a variety of dusts and other chemical agents. We are interested in the connection between mixed dust exposure and chronic bronchitis. The study involves follow-up of this population for one year. We have set the diagnostic criteria for chronic bronchitis as “morning cough and phlegm production for three months during two consecutive years”. Criteria for “positive” dust exposure are defined before the study begins. Each patient visiting the health centre and fulfilling these criteria during a one-year period is a case, and the next patient seeking medical advice for non-pulmonary problems is defined as a control. Suppose that 100 cases and 100 controls become enrolled during the study period. Let 40 cases and 15 controls be classified as having been exposed to dust. Then

c1 = 40, c0 = 60, n1 = 15, and n0 = 85.

Consequently,

$$\mathrm{OR} = \frac{40 / 60}{15 / 85} = \frac{40 \times 85}{60 \times 15} \approx 3.78$$
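The calculation for the chronic bronchitis example (c1 = 40, c0 = 60, n1 = 15, n0 = 85) can be sketched in Python, together with an approximate confidence interval. The interval method used here, the logit (Woolf) approximation, is an assumption chosen for illustration. The text does not say which method should be used, and the function name is hypothetical.

```python
import math


def odds_ratio_with_ci(c1, c0, n1, n0, z=1.96):
    """Exposure odds ratio and an approximate confidence interval.

    c1, c0: exposed and unexposed cases; n1, n0: exposed and unexposed controls.
    The interval uses the logit (Woolf) approximation, chosen for illustration.
    """
    if min(c1, c0, n1, n0) <= 0:
        raise ValueError("all four cells must be positive")
    odds_ratio = (c1 / c0) / (n1 / n0)
    # Standard error of log(OR) under the logit approximation.
    se_log_or = math.sqrt(1 / c1 + 1 / c0 + 1 / n1 + 1 / n0)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


if __name__ == "__main__":
    or_, lower, upper = odds_ratio_with_ci(40, 60, 15, 85)
    print(f"OR = {or_:.2f}, approximate 95% CI {lower:.2f} to {upper:.2f}")
```

With z = 1.96 the interval is an approximate 95% interval. The approximation is unreliable when any cell count is small, in which case an exact method would be preferable.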

In the foregoing example, no consideration has been given to the possibility of confounding, which may lead to a distortion of the OR due to systematic differences between cases and controls in a variable like age. One way to reduce this bias is to match controls to cases on age or other suspect factors. This results in a data layout depicted in table 3.

Table 3. Layout of case-control data if one control is matched to each case

| | Referents | |
|---|---|---|
| Cases | Exposure (+) | Exposure (-) |
| Exposure (+) | f++ | f+- |
| Exposure (-) | f-+ | f-- |

The analysis focuses on the discordant pairs: that is, “case exposed, control unexposed” (f+–); and “case unexposed, control exposed” (f–+). When both members of a pair are exposed or unexposed, the pair is disregarded. The OR in a matched-pair study design is defined as

$$\mathrm{OR} = \frac{f_{+-}}{f_{-+}}$$

In a study on the association between nasal cancer and wood dust exposure, there were altogether 164 case-control pairs. In only one pair had both the case and the control been exposed, and in 150 pairs neither the case nor the control had been exposed. These pairs are not considered further. The case, but not the control, had been exposed in 12 pairs, and the control, but not the case, in one pair. Hence,

$$\mathrm{OR} = \frac{f_{+-}}{f_{-+}} = \frac{12}{1} = 12$$

and because unity is not included in the confidence interval around this estimate, the result is statistically significant; that is, there is a statistically significant association between nasal cancer and wood dust exposure.
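The matched-pair estimate and an interval for it can be sketched in Python. The interval method is an assumption made for illustration, since the text does not specify one. Conditional on the number of discordant pairs, the count of "case exposed, control unexposed" pairs is treated as binomial, and exact (Clopper-Pearson) limits for that proportion are converted to limits for the OR. All function names are hypothetical.

```python
import math


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_decreasing(func, target, iters=200):
    """Bisection on [0, 1] for func(p) = target, where func decreases in p."""
    low, high = 0.0, 1.0
    for _ in range(iters):
        mid = (low + high) / 2
        if func(mid) > target:
            low = mid
        else:
            high = mid
    return (low + high) / 2


def matched_pair_or(case_only, control_only, alpha=0.05):
    """OR = f(+-) / f(-+) with an exact interval based on discordant pairs only.

    With n discordant pairs, case_only ~ Binomial(n, p) where p = OR / (1 + OR);
    Clopper-Pearson limits for p are mapped back through OR = p / (1 - p).
    """
    if case_only < 0 or control_only <= 0:
        raise ValueError("need control_only > 0 and case_only >= 0")
    n = case_only + control_only
    odds_ratio = case_only / control_only
    if case_only == 0:
        p_low = 0.0
    else:
        p_low = _solve_decreasing(lambda p: binom_cdf(case_only - 1, n, p), 1 - alpha / 2)
    p_high = _solve_decreasing(lambda p: binom_cdf(case_only, n, p), alpha / 2)
    return odds_ratio, p_low / (1 - p_low), p_high / (1 - p_high)


if __name__ == "__main__":
    or_, lower, upper = matched_pair_or(12, 1)
    print(f"OR = {or_:.1f}, exact 95% CI {lower:.2f} to {upper:.1f}")
```

For the nasal cancer data (12 and 1 discordant pairs) the lower limit from this sketch lies above 1, in line with the conclusion in the text. The interval is very wide because only 13 pairs contribute to it, and the interval originally reported may have been obtained by a different method.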

Case-control studies are more efficient than cohort studies when the disease is rare; they may in fact provide the only option. However, common diseases also can be studied by this method. If the exposure is rare, an exposure-based cohort is the preferable or only feasible epidemiological design. Of course, cohort studies also can be carried out on common exposures. The choice between cohort and case-control designs when both the exposure and disease are common is usually decided taking validity considerations into account.

Because case-control studies rely on retrospective exposure data, usually based on the participants’ recall, their weak point is the inaccuracy and crudeness of the exposure information, which results in effect-masking through non-differential (symmetrical) misclassification of exposure status. Moreover, the recall can sometimes be asymmetrical between cases and controls, with cases usually believed to remember “better” (i.e., recall bias). Selective recall can cause an effect-magnifying bias through differential (asymmetrical) misclassification of exposure status.

The advantages of case-control studies lie in their cost-effectiveness and their ability to provide a solution to a problem relatively quickly. Because of the sampling strategy, they allow the investigation of very large target populations (e.g., through national cancer registries), thereby increasing the statistical power of the study. In countries where data protection legislation or lack of good population and morbidity registries hinders the execution of cohort studies, hospital-based case-control studies may be the only practical way to conduct epidemiological research.
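The opposite directions of the two kinds of misclassification can be illustrated numerically. The following Python sketch is a hypothetical illustration: it takes a 2 x 2 table as the "true" exposure distribution (the chronic bronchitis figures are reused for convenience), applies assumed sensitivities and specificities of exposure classification, and recomputes the OR from the expected observed counts. All accuracy values are invented for the example.

```python
def odds_ratio(c1, c0, n1, n0):
    """Exposure odds ratio from a 2 x 2 case-control table."""
    return (c1 / c0) / (n1 / n0)


def observed_counts(exposed, unexposed, sensitivity, specificity):
    """Expected classified counts when true exposure is recorded with error."""
    seen_exposed = sensitivity * exposed + (1 - specificity) * unexposed
    seen_unexposed = (1 - sensitivity) * exposed + specificity * unexposed
    return seen_exposed, seen_unexposed


def observed_or(cases, controls, case_accuracy, control_accuracy):
    """OR after misclassification; each accuracy is (sensitivity, specificity)."""
    c1, c0 = observed_counts(*cases, *case_accuracy)
    n1, n0 = observed_counts(*controls, *control_accuracy)
    return odds_ratio(c1, c0, n1, n0)


# Hypothetical "true" table: (exposed, unexposed) for cases and for controls.
TRUE_CASES, TRUE_CONTROLS = (40, 60), (15, 85)

if __name__ == "__main__":
    true_or = odds_ratio(*TRUE_CASES, *TRUE_CONTROLS)
    # Same error rates in both series: non-differential misclassification.
    nondifferential = observed_or(TRUE_CASES, TRUE_CONTROLS, (0.8, 0.9), (0.8, 0.9))
    # Cases recall exposure better than controls: differential misclassification.
    differential = observed_or(TRUE_CASES, TRUE_CONTROLS, (0.9, 1.0), (0.6, 1.0))
    print(f"true OR {true_or:.2f}")
    print(f"non-differential {nondifferential:.2f}")
    print(f"differential {differential:.2f}")
```

With these assumed values the non-differential scenario pulls the OR towards 1, while the scenario in which cases recall exposure better than controls pushes it above the true value. Differential misclassification can in general bias in either direction, depending on the error rates involved.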

Case-control sampling within a cohort (nested case-control study designs)

A cohort study also can be designed for sampling instead of complete follow-up. This design has previously been called a “nested” case-control study. A sampling approach within the cohort sets different requirements on cohort eligibility, because the comparisons are now made within the same cohort. The cohort should therefore include not only heavily exposed workers, but also less-exposed and even unexposed workers, in order to provide exposure contrasts within itself. It is important to realize this difference in eligibility requirements when assembling the cohort. If a full cohort analysis is first carried out on a cohort whose eligibility criteria required “much” exposure, and a “nested” case-control study is done later on the same cohort, the study becomes insensitive. This introduces effect-masking because the exposure contrasts are insufficient “by design”, owing to a lack of variability in exposure experience among members of the cohort.

However, provided the cohort has a broad range of exposure experience, the nested case-control approach is very attractive. One gathers all the cases arising in the cohort over the follow-up period to form the case series, while only a sample of the non-cases is drawn for the control series. The researchers then, as in the traditional case-control design, gather detailed information on the exposure experience by interviewing cases and controls (or, their close relatives), by scrutinizing the employers’ personnel rolls, by constructing a job exposure matrix, or by combining two or more of these approaches. The controls can either be matched to the cases or they can be treated as an independent series.
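The sampling step described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the cohort is represented as a list of records with a boolean `case` field (a hypothetical layout), controls are drawn as a simple random sample of non-cases and treated as an independent series, and the number of controls per case is a free parameter. Matched sampling would need additional logic.

```python
import random


def nested_case_control_sample(cohort, controls_per_case=2, seed=0):
    """Keep every case and draw a random sample of non-cases as controls.

    `cohort` is a list of dicts with a boolean 'case' key (hypothetical layout).
    Unmatched sampling is assumed; matched sampling is not handled here.
    """
    cases = [member for member in cohort if member["case"]]
    non_cases = [member for member in cohort if not member["case"]]
    # Never ask for more controls than there are non-cases available.
    n_controls = min(len(non_cases), controls_per_case * len(cases))
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    controls = rng.sample(non_cases, n_controls)
    return cases, controls


if __name__ == "__main__":
    # Toy cohort of 1,000 workers in which every 50th member becomes a case.
    cohort = [{"id": i, "case": i % 50 == 0} for i in range(1000)]
    cases, controls = nested_case_control_sample(cohort)
    print(len(cases), "cases and", len(controls), "controls selected")
```

Detailed exposure assessment then needs to be carried out only for the selected cases and controls rather than for every member of the cohort.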

The sampling approach can be less costly compared to exhaustive information procurement on each member of the cohort. In particular, because only a sample of controls is studied, more resources can be devoted to detailed and accurate exposure assessment for each case and control. However, the same statistical power problems prevail as in classical cohort studies. To achieve adequate statistical power, the cohort must always comprise an “adequate” number of exposed cases depending on the magnitude of the risk that should be detected.

Cross-sectional study designs

In a scientific sense, a cross-sectional design is a cross-section of the study population, without any consideration given to time. Both exposure and morbidity (prevalence) are measured at the same point in time.

From the aetiological point of view, this study design is weak, partly because it deals with prevalence as opposed to incidence. Prevalence is a composite measure, depending both on the incidence and duration of the disease. This also restricts the use of cross-sectional studies to diseases of long duration. Even more serious is the strong negative bias caused by the health-dependent elimination from the exposed group of those people more sensitive to the effects of exposure. Therefore aetiological problems are best solved by longitudinal designs. Indeed, cross-sectional studies do not permit any conclusions about whether exposure preceded disease, or vice versa. The cross-section is aetiologically meaningful only if a true time relation exists between the exposure and the outcome, meaning that present exposure must have immediate effects. However, the exposure can be cross-sectionally measured so that it represents a longer past time period (e.g., the blood lead level), while the outcome measure is one of prevalence (e.g., nerve conduction velocities). The study then is a mixture of a longitudinal and a cross-sectional design rather than a mere cross-section of the study population.
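The dependence of prevalence on incidence and duration can be written out explicitly. The relation below is the standard steady-state approximation, stated here as an assumption: it requires a stable population in which the incidence rate $I$ and the mean disease duration $\bar{D}$ are constant over time, with $P$ denoting the prevalence proportion.

$$\frac{P}{1 - P} = I \times \bar{D}, \qquad \text{so that } P \approx I \times \bar{D} \text{ when } P \text{ is small}$$

As a hypothetical illustration, an incidence rate of 2 per 1,000 person-years combined with a mean duration of 5 years gives a prevalence of about 1%. The same prevalence would result from a tenfold higher incidence if the mean duration were only half a year, which is why prevalence alone cannot separate the occurrence of disease from its persistence.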

Cross-sectional descriptive surveys

Cross-sectional surveys are often useful for practical and administrative, rather than for scientific, purposes. Epidemiological principles can be applied to systematic surveillance activities in the occupational health setting, such as:

- observation of morbidity in relation to occupation, work area, or certain exposures

- regular surveys of workers exposed to known occupational hazards

- examination of workers coming into contact with new health hazards

- biological monitoring programmes

- exposure surveys to identify and quantify hazards

- screening programmes of different worker groups

- assessing the proportion of workers in need of prevention or regular control (e.g., blood pressure, coronary heart disease).

It is important to choose representative, valid, and specific morbidity indicators for all types of surveys. A survey or a screening programme can use only a rather small number of tests, in contrast to clinical diagnostics, and therefore the predictive value of the screening test is important. Insensitive methods fail to detect the disease of interest, while highly sensitive methods produce too many false-positive results. It is not worthwhile to screen for rare diseases in an occupational setting. All case-finding (i.e., screening) activities also require a mechanism for taking care of people having “positive” findings, both in terms of diagnostics and therapy. Otherwise only frustration will result, with the potential to do more harm than good.
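The reason screening for rare diseases is seldom worthwhile can be seen from the positive predictive value of a test, which follows from its sensitivity, its specificity, and the prevalence of the disease (Bayes' theorem). The Python sketch below uses invented test characteristics purely for illustration; the function name is hypothetical.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Probability that a person with a positive test truly has the disease."""
    true_positives = sensitivity * prevalence
    false_positives = (1 - specificity) * (1 - prevalence)
    if true_positives + false_positives == 0:
        raise ValueError("no positive results are possible with these inputs")
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    # Hypothetical test: 90% sensitivity and 95% specificity.
    for prevalence in (0.10, 0.01, 0.001):
        ppv = positive_predictive_value(0.90, 0.95, prevalence)
        print(f"prevalence {prevalence:.3f}: PPV {ppv:.3f}")
```

With these assumed characteristics, two out of three positive results are true positives when the prevalence is 10%, but fewer than 2 in 100 are when the prevalence is 0.1%, so nearly all "positive" workers would be referred for further diagnostics unnecessarily.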

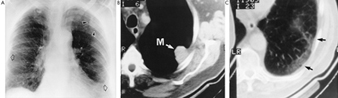

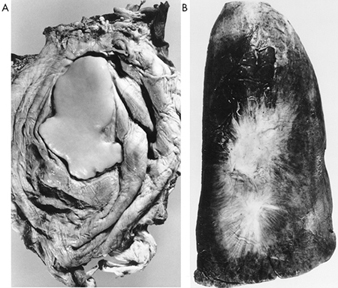

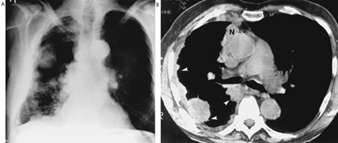

Occupationally Acquired Infections of the Lung

Although epidemiological studies of occupationally acquired pneumonia (OAP) are limited, work-related lung infections are thought to be declining in frequency worldwide. In contrast, OAPs in developed nations may be increasing in occupations associated with biomedical research or healthcare. OAP in hospital workers largely reflects the prevalent community-acquired pathogens, but the re-emergence of tuberculosis, measles and pertussis in health care settings presents additional risk for health-based occupations. In developing nations, and in specific occupations in developed nations, unique infectious pathogens that do not commonly circulate in the community cause many OAPs.

Attributing infection to occupational rather than community exposure can be difficult, especially for hospital workers. In the past, occupational risk was documented with certainty only in situations where workers were infected with agents that occurred in the workplace but were not present in the community. In the future, the use of molecular techniques to track specific microbial clones through the workplace and communities will make risk determinations clearer.

Like community-acquired pneumonia, OAP results from microaspiration of bacteria that colonize the oropharynx, inhalation of respirable infectious particles, or haematogenous seeding of the lungs. Most community-acquired pneumonia results from microaspiration, but OAP is usually due to inhalation of infectious airborne particles of 0.5 to 10 μm in the workplace. Larger particles fail to reach the alveoli because of impaction or sedimentation onto the walls of the large airways and are subsequently cleared. Smaller particles remain suspended during inspiratory and expiratory flow and are rarely deposited in the alveoli. For some diseases, such as the haemorrhagic fever with renal syndrome associated with hantavirus infection, the principal mode of transmission is inhalation but the primary focus of disease may not be the lungs. Occupationally acquired pathogens that are not transmitted by inhalation may secondarily involve the lungs but will not be discussed here.

This review briefly discusses some of the most important occupationally acquired pathogens. A more extensive list of occupationally acquired pulmonary disorders, classified by specific aetiologies, is presented in table 1.

Table 1. Occupationally acquired infectious diseases contracted via microaspiration or inhalation of infectious particles

| Disease (pathogen) | Reservoir | At-risk populations |
|---|---|---|
| Bacteria, chlamydia, mycoplasma and rickettsia | | |
| Brucellosis (Brucella spp.) | Livestock (cattle, goats, pigs) | Veterinary care workers, agricultural workers, laboratory workers, abattoir workers |
| Inhalation anthrax (Bacillus anthracis) | Animal products (wools, hides) | Agricultural workers, tanners, abattoir workers, textile workers, laboratory workers |
| Pneumonic plague (Yersinia pestis) | Wild rodents | Veterinary care workers, hunters/trappers, laboratory workers |
| Pertussis (Bordetella pertussis) | Humans | Employees of nursing homes, health care workers |
| Legionnaires’ disease (Legionella spp.) | Contaminated water sources (e.g., cooling towers, evaporator condensers) | Health care workers, laboratory workers, industrial laboratory workers, water well excavators |

|

Melioidosis (Pseudomonas pseudomallei) |

Soil, stagnant water, rice fields |