Role of Questionnaires in Epidemiological Research

Epidemiological research is generally carried out in order to answer a specific research question which relates the exposures of individuals to hazardous substances or situations with subsequent health outcomes, such as cancer or death. At the heart of nearly every such investigation is a questionnaire which constitutes the basic data-gathering tool. Even when physical measurements are to be made in a workplace environment, and especially when biological materials such as serum are to be collected from exposed or unexposed study subjects, a questionnaire is essential in order to develop an adequate exposure picture by systematically collecting personal and other characteristics in an organized and uniform way.

The questionnaire serves a number of critical research functions:

- It provides data on individuals which may not be available from any other source, including workplace records or environmental measurements.

- It permits targeted studies of specific workplace problems.

- It provides baseline information against which future health effects can be assessed.

- It provides information about participant characteristics that are necessary for proper analysis and interpretation of exposure-outcome relationships, especially potential confounders such as age and education, and other lifestyle variables that may affect disease risk, such as smoking and diet.

Place of questionnaire design within overall study goals

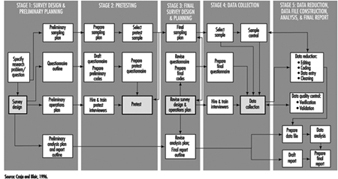

While the questionnaire is often the most visible part of an epidemiological study, particularly to the workers or other study participants, it is only a tool and indeed is often called an “instrument” by researchers. Figure 1 depicts in a very general way the stages of survey design from conception through data collection and analysis. The figure shows four levels or tiers of study operation which proceed in parallel throughout the life of the study: sampling, questionnaire, operations, and analysis. The figure demonstrates quite clearly the way in which stages of questionnaire development are related to the overall study plan, proceeding from an initial outline to a first draft of both the questionnaire and its associated codes, followed by pretesting within a selected subpopulation, one or more revisions dictated by pretest experiences, and preparation of the final document for actual data collection in the field. What is most important is the context: each stage of questionnaire development is carried out in conjunction with a corresponding stage of creation and refinement of the overall sampling plan, as well as the operational design for administration of the questionnaire.

Figure 1. The stages of a survey

Types of studies and questionnaires

The research goals of the study itself determine the structure, length and content of the questionnaire. These questionnaire attributes are invariably tempered by the method of data collection, which usually falls within one of three modes: in person, mail and telephone. Each of these has its advantages and disadvantages which can affect not only the quality of the data but the validity of the overall study.

A mailed questionnaire is the least expensive format and can cover workers in a wide geographical area. However, overall response rates are often low (typically 45 to 75%), and it may be difficult to ascertain whether answers to critical exposure or other questions differ systematically between respondents and non-respondents. Because there is little or no opportunity to clarify questions, a mailed questionnaire cannot be overly complex. Its physical layout and language must accommodate the least educated of the potential study participants, and it must be possible to complete it in a fairly short time, typically 20 to 30 minutes.

Telephone questionnaires can be used in population-based studies (that is, surveys in which a sample of a geographically defined population is canvassed) and are a practical method for updating information in existing data files. They may be longer and more complex in language and content than mailed questionnaires, and, because they are administered by trained interviewers, the greater cost of a telephone survey can be partially offset by structuring the questionnaire for efficient administration (such as through skip patterns). Response rates are usually better than with mailed questionnaires, but telephone surveys are subject to biases related to the increasing use of telephone answering machines, refusals, non-contacts and populations with limited telephone service. Such biases generally relate to the sampling design itself rather than to the questionnaire. Although telephone questionnaires have long been in use in North America, their feasibility in other parts of the world has yet to be established.

Face-to-face interviews provide the greatest opportunity for collecting accurate complex data; they are also the most expensive to administer, since they require both training and travel for professional staff. The physical layout and order of questions may be arranged to optimize administration time. Studies which utilize in-person interviewing generally have the highest response rates and are subject to the least response bias. This is also the type of interview in which the interviewer is most likely to learn whether or not the participant is a case (in a case-control study) or the participant’s exposure status (in a cohort study). Care must therefore be taken to preserve the objectivity of the interviewer by training him or her to avoid leading questions and body language that might evoke biased responses.

It is becoming more common to use a hybrid study design in which complex exposure situations are assessed in a personal or telephone interview which allows maximum probing and clarification, followed by a mailed questionnaire to capture lifestyle data like smoking and diet.

Confidentiality and research participant issues

Since the purpose of a questionnaire is to obtain data about individuals, questionnaire design must be guided by established standards for the ethical treatment of human subjects. These guidelines apply to the acquisition of questionnaire data just as they do to the collection of biological samples such as blood and urine, or to genetic testing. In the United States and many other countries, no studies involving humans may be conducted with public funds unless approval of questionnaire language and content is first obtained from an appropriate Institutional Review Board. Such approval is intended to ensure that questions are confined to legitimate study purposes, and that they do not violate the rights of study participants to answer questions voluntarily. Participants must be assured that their participation in the study is entirely voluntary, and that refusal to answer questions or even to participate at all will not subject them to any penalties or alter their relationship with their employer or medical practitioner.

Participants must also be assured that the information they provide will be held in strict confidence by the investigator, who must of course take steps to maintain the physical security and inviolability of the data. This often entails physical separation of information regarding the identity of participants from computerized data files. It is common practice to advise study participants that their replies to questionnaire items will be used only in aggregation with responses of other participants in statistical reports, and will not be disclosed to the employer, physician or other parties.
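
The separation of identities from data files described above can be sketched as follows. This is a minimal Python illustration, not a prescribed procedure: the field names, the function `separate_identifiers` and the use of an 8-character random hexadecimal study ID are assumptions. In practice the linkage table would be stored securely and apart from the analysis file.

```python
import secrets

# Hypothetical set of fields treated as directly identifying.
IDENTIFYING_FIELDS = ("name", "address", "phone")


def separate_identifiers(records, identifying_fields=IDENTIFYING_FIELDS):
    """Split records into a linkage table and de-identified analysis records.

    Returns (linkage, analysis): linkage maps a random study ID to the
    identifying fields; each analysis record carries only the study ID
    and the non-identifying responses.
    """
    linkage, analysis = {}, []
    for record in records:
        # Draw a random study ID, retrying in the unlikely event of a collision.
        study_id = secrets.token_hex(4)
        while study_id in linkage:
            study_id = secrets.token_hex(4)
        linkage[study_id] = {f: record[f] for f in identifying_fields if f in record}
        responses = {k: v for k, v in record.items() if k not in identifying_fields}
        analysis.append({"study_id": study_id, **responses})
    return linkage, analysis


linkage, analysis = separate_identifiers(
    [{"name": "A. Worker", "phone": "555-0100", "age": 45, "smoker": 1}]
)
print(analysis)  # the analysis record holds no name or phone number
```

Only the study ID links the two tables, so the analysis file can be handled by data-entry and analysis staff without exposing who the participants are.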

Measurement aspects of questionnaire design

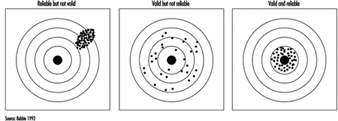

One of the most important functions of a questionnaire is to obtain data about some aspect or attribute of a person in either qualitative or quantitative form. Some items may be as simple as weight, height or age, while others may be considerably more complicated, as with an individual’s response to stress. Qualitative responses, such as gender, will ordinarily be converted into numerical variables. All such measures may be characterized by their validity and their reliability. Validity is the degree to which a questionnaire-derived number approaches its true, but possibly unknown, value. Reliability measures the likelihood that a given measurement will yield the same result on repetition, whether that result is close to the “truth” or not. Figure 2 shows how these concepts are related. It demonstrates that a measurement can be valid but not reliable, reliable but not valid, or both valid and reliable.
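
To make the distinction concrete, the sketch below treats validity as the average departure of repeated readings from a known true value (bias) and reliability as the scatter of those readings (population standard deviation). The readings, the assumed true weight of 70 kg and the function `summarize` are hypothetical. Real validation studies rarely know the true value this precisely.

```python
from statistics import mean, pstdev

TRUE_WEIGHT_KG = 70.0  # hypothetical "true" value

# Hypothetical repeated measurements of the same person by three instruments.
readings = {
    "valid but not reliable": [64.0, 76.0, 66.0, 74.0],
    "reliable but not valid": [78.0, 78.5, 77.5, 78.0],
    "valid and reliable": [69.5, 70.5, 70.0, 70.0],
}


def summarize(values, true_value):
    """Return (bias, spread): bias reflects validity, spread reflects reliability."""
    bias = mean(values) - true_value  # systematic departure from the truth
    spread = pstdev(values)           # scatter on repetition
    return bias, spread


for label, values in readings.items():
    bias, spread = summarize(values, TRUE_WEIGHT_KG)
    print(f"{label:24s} bias={bias:+.1f} kg  spread={spread:.2f} kg")
```

The first instrument is right on average but scattered, the second is consistent but systematically 8 kg too high, and the third is both close and consistent, mirroring the three situations in Figure 2.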

Figure 2. Validity & reliability relationship

Over the years, many questionnaires have been developed by researchers in order to answer research questions of wide interest. Examples include the Scholastic Aptitude Test, which measures a student’s potential for future academic achievement, and the Minnesota Multiphasic Personality Inventory (MMPI), which measures certain psychosocial characteristics. A variety of other psychological indicators are discussed in the chapter on psychometrics. There are also established physiological scales, such as the British Medical Research Council (BMRC) questionnaire for pulmonary function. These instruments have a number of important advantages. Chief among these are the facts that they have already been developed and tested, usually in many populations, and that their reliability and validity are known. Anyone constructing a questionnaire is well advised to utilize such scales if they fit the study purpose. Not only do they save the effort of “re-inventing the wheel”, but they make it more likely that study results will be accepted as valid by the research community. They also permit more valid comparisons of results from different studies, provided they have been used properly.

The preceding scales are examples of two important types of measures which are commonly used in questionnaires to quantify concepts that may not be fully objectively measurable in the way that height and weight are, or which require many similar questions to fully “tap the domain” of one specific behavioural pattern. More generally, indexes and scales are two data reduction techniques that provide a numerical summary of groups of questions. The above examples illustrate physiological and psychological indexes; indexes and scales are also frequently used to measure knowledge, attitudes and behaviour. Briefly, an index is usually constructed as a score obtained by counting, among a group of related questions, the number of items that apply to a study participant. For instance, if a questionnaire presents a list of diseases, a disease history index could be the total number of those which a respondent says he or she has had. A scale is a composite measure based on the intensity with which a participant answers one or more related questions. For example, the Likert scale, which is frequently used in social research, is typically constructed from statements with which one may agree strongly, agree weakly, offer no opinion, disagree weakly, or disagree strongly, the response being scored as a number from 1 to 5. Scales and indexes may be summed or otherwise combined to form a fairly complex picture of study participants’ physical, psychological, social or behavioural characteristics.
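
The difference between an index (a count of applicable items) and a scale (a sum of intensity codes) can be illustrated with a short Python sketch. The disease list and function names are hypothetical, and the direction of the Likert coding (1 = agree strongly) simply follows the order in which the categories are listed above; studies differ on this choice.

```python
# Hypothetical disease list for a disease history index.
DISEASES = ("asthma", "chronic bronchitis", "dermatitis", "hypertension")

# Likert codes in the order listed in the text; the direction
# (1 = agree strongly) is an assumption, and many studies reverse it.
LIKERT_CODES = {
    "agree strongly": 1,
    "agree weakly": 2,
    "no opinion": 3,
    "disagree weakly": 4,
    "disagree strongly": 5,
}


def disease_history_index(answers):
    """Index: count of listed diseases the respondent reports having had."""
    return sum(1 for disease in DISEASES if answers.get(disease) is True)


def likert_scale_score(responses):
    """Scale: sum of 1-5 intensity codes over a set of related statements."""
    return sum(LIKERT_CODES[response] for response in responses)


print(disease_history_index({"asthma": True, "dermatitis": True, "hypertension": False}))  # 2
print(likert_scale_score(["agree strongly", "no opinion", "disagree strongly"]))  # 9
```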

Validity merits special consideration because of its reflection of the “truth”. Three important types of validity often discussed are face, content and criterion validity. Face validity is a subjective quality of an indicator which ensures that the wording of a question is clear and unambiguous. Content validity ensures that the questions will serve to tap that dimension of response in which the researcher is interested. Criterion (or predictive) validity is derived from an objective assessment of how closely a questionnaire measurement approaches a separately measurable quantity, as for instance how well a questionnaire assessment of dietary vitamin A intake matches the actual consumption of vitamin A, based upon food consumption as documented with dietary records.
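
A criterion-validity check of the kind described above is often summarized by a correlation coefficient between the questionnaire estimate and the reference measurement. The sketch below computes Pearson's r for hypothetical vitamin A intakes. The data and the choice of Pearson's r are assumptions, and other agreement measures are also used.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between paired measurements x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical vitamin A intakes (micrograms/day) for five participants:
# questionnaire estimate versus dietary-record reference.
questionnaire = [600, 850, 900, 1200, 700]
diet_records = [650, 800, 950, 1150, 720]
print(round(pearson_r(questionnaire, diet_records), 3))
```

Note that r measures linear association rather than agreement: a questionnaire that systematically doubled every intake would still give r = 1, so a high correlation alone does not show that the absolute values are correct.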

Questionnaire content, quality and length

Wording. The wording of questions is both an art and a professional skill. Therefore, only the most general of guidelines can be presented. It is generally agreed that questions should be devised which:

- motivate the participant to respond

- draw upon the participant’s personal knowledge

- take into account his or her limitations and personal frame of reference, so that the aim and meaning of the questions are easily understood, and

- elicit a response based upon the participant’s own knowledge and do not require guessing, except possibly for attitude and opinion questions.

Question sequence and structure. Both the order and presentation of questions can affect the quality of information gathered. A typical questionnaire, whether self-administered or read by an interviewer, contains a prologue which introduces the study and its topic to the respondent, provides any additional information he or she will need, and tries to motivate the respondent to answer the questions. Most questionnaires contain a section designed to collect demographic information, such as age, gender, ethnic background and other variables about the participant’s background, including possibly confounding variables. The main subject matter of data collection, such as nature of the workplace and exposure to specific substances, is usually a distinct questionnaire section, and is often preceded by an introductory prologue of its own which might first remind the participant of specific aspects of the job or workplace in order to create a context for detailed questions. The layout of questions that are intended to establish worklife chronologies should be arranged so as to minimize the risk of chronological omissions. Finally, it is customary to thank the respondent for his or her participation.

Types of questions. The designer must decide whether to use open-ended questions in which participants compose their own answers, or closed questions that require a definite response or a choice from a short menu of possible responses. Closed questions have the advantage that they clarify alternatives for the respondent, avoid snap responses, and minimize lengthy rambling that may be impossible to interpret. However, they require that the designer anticipate the range of potential responses in order to avoid losing information, particularly for unexpected situations that occur in many workplaces. This in turn requires well planned pilot testing. The investigator must decide whether and to what extent to permit a “don’t know” response category.

Length. Determining the final length of a questionnaire requires striking a balance between the desire to obtain as much detailed information as possible to achieve the study goals and the fact that, if a questionnaire is too lengthy, at some point many respondents will lose interest and either stop responding or respond hastily, inaccurately and without thought in order to bring the session to an end. On the other hand, a very short questionnaire may obtain a high response rate but not achieve the study goals. Since respondent motivation often depends on having a personal stake in the outcome, such as improving working conditions, tolerance for a lengthy questionnaire may vary widely, especially when some participants (such as workers in a particular plant) may perceive their stake to be higher than others (such as persons contacted via random telephone dialling). This balance can be achieved only through pilot testing and experience. Interviewer-administered questionnaires should record the beginning and ending time to permit calculation of the duration of the interview. This information is useful in assessing the quality of the data.
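
Recording start and end times makes it easy to screen for interviews that were completed implausibly fast, one possible sign of hasty responding. In the sketch below, the ISO-format timestamps, the 15-minute cut-off and the function names are hypothetical; a realistic threshold would come from pilot-test experience.

```python
from datetime import datetime

MIN_PLAUSIBLE_MINUTES = 15  # hypothetical cut-off; set from pilot-test experience


def interview_minutes(start, end):
    """Duration in minutes between ISO-format start and end timestamps."""
    elapsed = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    minutes = elapsed.total_seconds() / 60
    if minutes < 0:
        raise ValueError("end time precedes start time")
    return minutes


def flag_short_interviews(sessions, minimum=MIN_PLAUSIBLE_MINUTES):
    """Return IDs of interviews that ended suspiciously quickly."""
    return [sid for sid, (start, end) in sessions.items()
            if interview_minutes(start, end) < minimum]


sessions = {
    "0001": ("2024-03-05T09:10", "2024-03-05T09:55"),
    "0002": ("2024-03-05T10:30", "2024-03-05T10:38"),
}
print(flag_short_interviews(sessions))  # ['0002']
```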

Language. It is essential to use the language of the population to make the questions understood by all. This may require becoming familiar with local vernacular that may vary within any one country. Even in countries where the same language is nominally spoken, such as Britain and the United States, or the Spanish-speaking countries of Latin America, local idioms and usage may vary in a way that can obscure interpretation. For example, in the US “tea” is merely a beverage, whereas in Britain it may mean “a pot of tea,” “high tea,” or “the main evening meal,” depending on locale and context. It is especially important to avoid scientific jargon, except where study participants can be expected to possess specific technical knowledge.

Clarity and leading questions. While it is often the case that shorter questions are clearer, there are exceptions, especially where a complex subject needs to be introduced. Nevertheless, short questions clarify thinking and reduce unnecessary words. They also reduce the chance of overloading the respondent with too much information to digest. If the purpose of the study is to obtain objective information about the participant’s working situation, it is important to word questions in a neutral way and to avoid “leading” questions that may favour a particular answer, such as “Do you agree that your workplace conditions are harmful to your health?”

Questionnaire layout. The physical layout of a questionnaire can affect the cost and efficiency of a study. Layout matters more for self-administered questionnaires than for those conducted by interviewers. A questionnaire which is designed to be completed by the respondent but which is overly complex or difficult to read may be filled out casually or even discarded. Even questionnaires which are designed to be read aloud by trained interviewers need to be printed in clear, readable type, and patterns of question skipping must be indicated in a manner which maintains a steady flow of questioning and minimizes page turning and searching for the next applicable question.

Validity Concerns

Bias

The enemy of objective data gathering is bias, which results from systematic but unplanned differences between groups of people: cases and controls in a case-control study or exposed and non-exposed in a cohort study. Information bias may be introduced when two groups of participants understand or respond differently to the same question. This may occur, for instance, if questions are posed in such a way as to require special technical knowledge of a workplace or its exposures that would be understood by exposed workers but not necessarily by the general public from which controls are drawn.

The use of surrogates for ill or deceased workers has the potential for bias because next-of-kin are likely to recall information in different ways and with less accuracy than the worker himself or herself. The introduction of such bias is especially likely in studies in which some interviews are carried out directly with study participants while other interviews are carried out with relatives or co-workers of other research participants. In either situation, care must be taken to reduce any effect that might arise from the interviewer’s knowledge of the disease or exposure status of the worker of interest. Since it is not always possible to keep interviewers “blind,” it is important to emphasize objectivity and avoidance of leading or suggestive questions or unconscious body language during training, and to monitor performance while the study is being carried out.

Recall bias results when cases and controls “remember” exposures or work situations differently. Hospitalized cases with a potential occupationally related illness may be more capable of recalling details of their medical history or occupational exposures than persons contacted randomly on the telephone. A type of this bias that is becoming more common has been labelled social desirability bias. It describes the tendency of many people to understate, whether consciously or not, their indulgence in “bad habits” such as cigarette smoking or consumption of foods high in fat and cholesterol, and to overstate “good habits” like exercise.

Response bias denotes a situation in which one group of study participants, such as workers with a particular occupational exposure, may be more likely to complete questionnaires or otherwise participate in a study than unexposed persons. Such a situation may result in a biased estimation of the association between exposure and disease. Response bias may be suspected if response rates or the time taken to complete a questionnaire or interview differ substantially between groups (e.g., cases vs. controls, exposed vs. unexposed). Response bias generally differs depending upon the mode of questionnaire administration. Questionnaires which are mailed are usually more likely to be returned by individuals who see a personal stake in study findings, and are more likely to be ignored or discarded by persons selected at random from the general population. Many investigators who utilize mail surveys also build in a follow-up mechanism which may include second and third mailings as well as subsequent telephone contacts with non-respondents in order to maximize response rates.
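
As a simple sketch of the comparison described above, the code computes a response rate for each group and flags a large gap between groups. Response-rate definitions vary between studies. Here the rate is assumed to be completed questionnaires divided by eligible persons approached, and both the counts and the 10-percentage-point threshold are hypothetical.

```python
MAX_RATE_DIFFERENCE = 0.10  # hypothetical threshold for closer scrutiny


def response_rate(completed, eligible):
    """Completed questionnaires as a fraction of eligible persons approached."""
    if eligible <= 0:
        raise ValueError("eligible count must be positive")
    return completed / eligible


def compare_groups(counts, threshold=MAX_RATE_DIFFERENCE):
    """counts maps group name -> (completed, eligible).

    Returns the per-group rates and whether the largest gap between
    groups exceeds the threshold (a prompt to suspect response bias).
    """
    rates = {group: response_rate(c, e) for group, (c, e) in counts.items()}
    gap = max(rates.values()) - min(rates.values())
    return rates, gap > threshold


rates, suspicious = compare_groups({"cases": (180, 200), "controls": (270, 400)})
print(rates, suspicious)
```

A gap like this one (90% versus about 68%) does not prove bias, but it indicates that respondents in the two groups may not be equally representative.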

Studies which utilize telephone surveys, including those which make use of random digit dialling to identify controls, usually have a set of rules or a protocol defining how many times attempts to contact potential respondents must be made, including time of day, and whether evening or weekend calls should be attempted. Those who conduct hospital-based studies usually record the number of patients who refuse to participate, and reasons for non-participation. In all such cases, various measures of response rates are recorded in order to provide an assessment of the extent to which the target population has actually been reached.
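
A contact protocol of this kind can be expressed as a small set of rules. Purely for illustration, the sketch below assumes that a number is classified as a non-contact only after at least six attempts, including at least one evening call (from 17:00) and one weekend call. The parameters, outcome labels and the function `disposition` are hypothetical; each study would fix them in its own protocol.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical protocol parameters; real studies set these in advance.
MIN_ATTEMPTS = 6
EVENING_START_HOUR = 17
FINAL_OUTCOMES = {"completed", "refused", "ineligible"}


@dataclass
class Attempt:
    when: datetime
    outcome: str  # e.g. "no_answer", "answering_machine", "completed", "refused"


def disposition(attempts):
    """Return a final outcome, 'non_contact', or 'call_again'."""
    for attempt in attempts:
        if attempt.outcome in FINAL_OUTCOMES:
            return attempt.outcome
    tried_evening = any(a.when.hour >= EVENING_START_HOUR for a in attempts)
    tried_weekend = any(a.when.weekday() >= 5 for a in attempts)  # Sat=5, Sun=6
    # Classify as non-contact only after enough attempts that include
    # at least one evening call and one weekend call.
    if len(attempts) >= MIN_ATTEMPTS and tried_evening and tried_weekend:
        return "non_contact"
    return "call_again"
```

Counting each disposition across all sampled numbers then gives the figures needed for the response-rate measures mentioned above.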

Selection bias results when one group of participants preferentially responds or otherwise participates in a study, and can result in biased estimation of the relationship between exposure and disease. In order to assess selection bias and whether it leads to under- or over-estimation of exposure, demographic information such as educational level can be used to compare respondents with non-respondents. For example, if participants with little education have lower response rates than participants with higher education, and if a particular occupation or smoking habit is known to be more frequent in less educated groups, then selection bias with underestimation of exposure for that occupation or smoking category is likely to have occurred.
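
The comparison of respondents and non-respondents by educational level described above amounts to tabulating response rates within each level. In this hypothetical sketch the sample and the level labels are invented. The function assumes that the education level is known for everyone selected, as it might be from a sampling frame or employer roster.

```python
from collections import Counter


def response_rate_by_level(sample):
    """sample: iterable of (education_level, responded) pairs for everyone selected."""
    sample = list(sample)
    selected = Counter(level for level, _ in sample)
    responded = Counter(level for level, did_respond in sample if did_respond)
    return {level: responded[level] / selected[level] for level in selected}


# Hypothetical sample in which less educated persons respond less often.
sample = [("primary", True), ("primary", False), ("primary", False), ("primary", False),
          ("university", True), ("university", True), ("university", True), ("university", False)]
print(response_rate_by_level(sample))  # {'primary': 0.25, 'university': 0.75}
```

With rates like these, any occupation or habit concentrated among the less educated would be under-represented among respondents, which is the underestimation of exposure described in the text.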

Confounding is another important source of bias. It results when the groups being compared (cases and controls in a case-control study, or exposed and unexposed in a cohort study) differ with respect to a third variable that is associated with both the exposure and the disease, sometimes in a manner unknown to the investigator. If not identified and controlled, it can lead unpredictably to underestimates or overestimates of disease risks associated with occupational exposures. Confounding is usually dealt with either by manipulating the design of the study itself (e.g., through matching cases to controls on age and other variables) or at the analysis stage. Details of these techniques are presented in other articles within this chapter.

Documentation

In any research study, all study procedures must be thoroughly documented so that all staff, including interviewers, supervisory personnel and researchers, are clear about their respective duties. In most questionnaire-based studies, a coding manual is prepared which describes on a question-by-question basis everything the interviewer needs to know beyond the literal wording of the questions. This includes instructions for coding categorical responses and may contain explicit instructions on probing, listing those questions for which it is permitted and those for which it is not. In many studies new, unforeseen response choices for certain questions are occasionally encountered in the field; these must be recorded in the master codebook and copies of additions, changes or new instructions distributed to all interviewers in a timely fashion.
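
A coding manual can also be kept in machine-readable form, so that each newly encountered response category is logged as a numbered amendment that can be circulated to all interviewers. The Python sketch below is one hypothetical way to organize this; the class names, the question identifier `Q12` and its codes are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class QuestionEntry:
    question_id: str
    wording: str
    codes: dict              # numeric code -> response label
    probing_allowed: bool
    instructions: str = ""


@dataclass
class Codebook:
    version: int = 1
    entries: dict = field(default_factory=dict)
    amendments: list = field(default_factory=list)  # to be circulated to interviewers

    def add_entry(self, entry):
        self.entries[entry.question_id] = entry

    def add_code(self, question_id, code, label, reason):
        """Register an unforeseen response category and log the amendment."""
        entry = self.entries[question_id]
        if code in entry.codes:
            raise ValueError(f"code {code} already used for {question_id}")
        entry.codes[code] = label
        self.version += 1
        self.amendments.append(
            {"version": self.version, "question_id": question_id,
             "code": code, "label": label, "reason": reason}
        )


codebook = Codebook()
codebook.add_entry(QuestionEntry(
    "Q12", "What is your current employment status?",
    {1: "full-time", 2: "part-time", 9: "don't know"},
    probing_allowed=True,
    instructions="If the respondent hesitates, read the categories aloud.",
))
codebook.add_code("Q12", 3, "seasonal", "reported by several respondents in the field")
```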

Planning, testing and revision

As can be seen from figure 1, questionnaire development requires a great deal of thoughtful planning. Every questionnaire needs to be tested at several stages in order to make certain that the questions “work”, i.e., that they are understandable and produce responses of the intended quality. It is useful to test new questions on volunteers and then to interrogate them at length to determine how well specific questions were understood and what types of problems or ambiguities were encountered. The results can then be utilized to revise the questionnaire, and the procedure can be repeated if necessary. The volunteers are sometimes referred to as a “focus group”.

All epidemiological studies require pilot testing, not only for the questionnaires, but for the study procedures as well. A well designed questionnaire serves its purpose only if it can be delivered efficiently to the study participants, and this can be determined only by testing procedures in the field and making adjustments when necessary.

Interviewer training and supervision

In studies which are conducted by telephone or face-to-face interview, the interviewer plays a critical role. This person is responsible not simply for presenting questions to the study participants and recording their responses, but also for interpreting those responses. Even with the most rigidly structured interview study, respondents occasionally request clarification of questions, or offer responses which do not fit the available response categories. In such cases the interviewer’s job is to interpret either the question or the response in a manner consistent with the intent of the researcher. To do so effectively and consistently requires training and supervision by an experienced researcher or manager. When more than one interviewer is employed on a study, interviewer training is especially important to ensure that questions are presented and responses interpreted in a uniform manner. In many research projects this is accomplished in group training settings, and is repeated periodically (e.g., annually) in order to keep the interviewers’ skills fresh. Training seminars commonly cover the following topics in considerable detail:

- general introduction to the study

- informed consent and confidentiality issues

- how to introduce the interview and how to interact with respondents

- the intended meaning of each question

- instructions for probing, i.e., offering the respondent further opportunity to clarify or embellish responses

- discussion of typical problems which arise during interviews.

Study supervision often entails onsite observation, which may include tape-recording of interviews for subsequent review. It is common practice for the supervisor to personally review every questionnaire prior to approving and submitting it to data entry. The supervisor also sets and enforces performance standards for interviewers and in some studies conducts independent re-interviews with selected participants as a reliability check.

Data collection

The actual distribution of questionnaires to study participants and subsequent collection for analysis is carried out using one of the three modes described above: by mail, telephone or in person. Some researchers organize and even perform this function themselves within their own institutions. While there is considerable merit to a senior investigator becoming familiar with the dynamics of the interview at first hand, it is most cost effective and conducive to maintaining high data quality for trained and well-supervised professional interviewers to be included as part of the research team.

Some researchers make contractual arrangements with companies that specialize in survey research. Contractors can provide a range of services which may include one or more of the following tasks: distributing and collecting questionnaires, carrying out telephone or face-to-face interviews, obtaining biological specimens such as blood or urine, data management, and statistical analysis and report writing. Irrespective of the level of support, contractors are usually responsible for providing information about response rates and data quality. Nevertheless, it is the researcher who bears final responsibility for the scientific integrity of the study.

Reliability and re-interviews

Data quality may be assessed by re-interviewing a sample of the original study participants. This provides a means for determining the reliability of the initial interviews, and an estimate of the repeatability of responses. The entire questionnaire need not be re-administered; a subset of questions usually is sufficient. Statistical tests are available for assessing the reliability of a set of questions asked of the same participant at different times, as well as for assessing the reliability of responses provided by different participants and even for those queried by different interviewers (i.e., inter- and intra-rater assessments).
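
One widely used statistic for the repeatability of categorical answers is Cohen's kappa, which corrects the observed agreement between interview and re-interview for the agreement expected by chance. The sketch below assumes a single yes/no question with hypothetical answers from ten re-interviewed participants; for continuous answers an intraclass correlation coefficient is more commonly used.

```python
from collections import Counter


def cohens_kappa(first, second):
    """Chance-corrected agreement between two sets of categorical answers."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty, equal-length answer lists")
    n = len(first)
    observed = sum(a == b for a, b in zip(first, second)) / n
    counts_1, counts_2 = Counter(first), Counter(second)
    # Agreement expected by chance from each interview's marginal frequencies.
    expected = sum(counts_1[c] * counts_2[c] for c in counts_1) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical answers to "Ever exposed to solvents?" at interview and re-interview.
original = ["yes", "yes", "no", "no", "yes", "no", "yes", "no", "yes", "yes"]
reinterview = ["yes", "yes", "no", "yes", "yes", "no", "yes", "no", "no", "yes"]
print(round(cohens_kappa(original, reinterview), 2))  # 0.58
```

Here the raw agreement is 80%, but because 52% agreement would be expected by chance alone, kappa is considerably lower.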

Technology of questionnaire processing

Advances in computer technology have created many different ways in which questionnaire data can be captured and made available to the researcher for computer analysis. There are three fundamentally different ways in which data can be computerized: in real time (i.e., as the participant responds during an interview), by traditional key entry methods, and by optical data capture methods.

Computer-aided data capture

Many researchers now use computers to collect responses to questions posed in both face-to-face and telephone interviews. Researchers in the field find it convenient to use laptop computers which have been programmed to display the questions sequentially and which permit the interviewer to enter each response immediately. Survey research companies which do telephone interviewing have developed analogous systems called computer-assisted telephone interviewing (CATI) systems. These methods have two important advantages over traditional paper questionnaires. First, responses can be checked instantly against a range of permissible answers and for consistency with previous responses, and discrepancies can be brought immediately to the attention of both the interviewer and the respondent. This greatly reduces the error rate. Second, skip patterns can be programmed to minimize administration time.
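
The two advantages named above, immediate range and consistency checks and programmed skip patterns, can be sketched in a few lines of Python. This is a hypothetical question module, not the logic of any particular CATI product: the question IDs, valid ranges and the `administer` function are assumptions, and `get_answer` stands in for the interviewer's keyed entry.

```python
# Hypothetical smoking module with range checks, a consistency check
# and a skip pattern.
QUESTIONS = [
    {"id": "age", "valid": range(16, 100)},
    {"id": "ever_smoked", "valid": {1, 2}},  # 1 = yes, 2 = no
    {"id": "age_started", "valid": range(5, 100),
     # Skip pattern: asked only of ever-smokers.
     "ask_if": lambda r: r.get("ever_smoked") == 1,
     # Consistency check: cannot have started smoking after current age.
     "consistent": lambda value, r: "age" not in r or value <= r["age"]},
    {"id": "cigs_per_day", "valid": range(1, 101),
     "ask_if": lambda r: r.get("ever_smoked") == 1},
]


def administer(questions, get_answer, max_tries=3):
    """Ask questions in order, validating each answer as it is entered.

    Returns (responses, problems); a question still failing after
    max_tries is left unanswered for later review.
    """
    responses, problems = {}, []
    for q in questions:
        condition = q.get("ask_if")
        if condition is not None and not condition(responses):
            continue  # skip: question does not apply to this respondent
        for _ in range(max_tries):
            value = get_answer(q)
            if value not in q["valid"]:
                problems.append((q["id"], value, "out of range"))
                continue
            check = q.get("consistent")
            if check is not None and not check(value, responses):
                problems.append((q["id"], value, "inconsistent with earlier answer"))
                continue
            responses[q["id"]] = value
            break
    return responses, problems


# Scripted answers simulate an interview with two entry errors.
script = {"age": iter([250, 45]), "ever_smoked": iter([1]),
          "age_started": iter([50, 18]), "cigs_per_day": iter([20])}
responses, problems = administer(QUESTIONS, lambda q: next(script[q["id"]]))
print(responses)
print(problems)
```

Both errors are caught at the moment of entry and corrected on the next try, whereas on paper they would surface only at data cleaning, if at all.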

The most common method for computerizing data is still traditional key entry by a trained operator. For very large studies, questionnaires are usually sent to a professional contract company which specializes in data capture. These firms often utilize specialized equipment which permits one operator to key a questionnaire (a procedure sometimes called keypunch for historical reasons) and a second operator to re-key the same data, a process called key verification. Results of the second keying are compared with the first to assure the data have been entered correctly. Quality assurance procedures can be programmed which ensure that each response falls within an allowable range, and that it is consistent with other responses. The resulting data files can be transmitted to the researcher on disk, tape or electronically by telephone or other computer network.
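
Key verification reduces to comparing two independent keyings field by field and listing every mismatch so that it can be resolved against the paper questionnaire. The function and the sample records below are hypothetical illustrations of that comparison.

```python
def key_verify(first_pass, second_pass):
    """Compare two independent keyings of the same batch of questionnaires.

    Each argument maps questionnaire ID -> {field name: keyed string}.
    Returns (questionnaire_id, field, first_value, second_value) for every
    mismatch; None marks a value present in only one keying.
    """
    discrepancies = []
    for qid in sorted(set(first_pass) | set(second_pass)):
        rec_1 = first_pass.get(qid, {})
        rec_2 = second_pass.get(qid, {})
        for name in sorted(set(rec_1) | set(rec_2)):
            v1, v2 = rec_1.get(name), rec_2.get(name)
            if v1 != v2:
                discrepancies.append((qid, name, v1, v2))
    return discrepancies


# Hypothetical batch in which the two keyings disagree on one age.
first = {"0001": {"age": "45", "sex": "1"}, "0002": {"age": "38", "sex": "2"}}
second = {"0001": {"age": "45", "sex": "1"}, "0002": {"age": "83", "sex": "2"}}
print(key_verify(first, second))  # [('0002', 'age', '38', '83')]
```

The comparison cannot tell which keying is wrong; that decision is made by checking the original questionnaire.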

For smaller studies, there are numerous commercial PC-based programs which have data entry features which emulate those of more specialized systems. These include database programs such as dBase, Foxpro and Microsoft Access, as well as spreadsheets such as Microsoft Excel and Lotus 1-2-3. In addition, data entry features are included with many computer program packages whose principal purpose is statistical data analysis, such as SPSS, BMDP and EPI INFO.

One widespread method of data capture which works well for certain specialized questionnaires uses optical systems. Optical mark reading or optical sensing is used to read responses on questionnaires that are specially designed for participants to enter data by marking small rectangles or circles (sometimes called “bubble codes”). These work most efficiently when each individual completes his or her own questionnaire. More sophisticated and expensive equipment can read hand-printed characters, but at present this is not an efficient technique for capturing data in large-scale studies.
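
The interpretation step of optical mark reading can be sketched as a rule applied to the darkness measured in each bubble of an item. The darkness scale (0 = blank, 1 = fully filled), the 0.5 threshold and the function `read_item` are assumptions for illustration. A single clear mark is accepted, while blank or multiply marked items are set aside for manual review.

```python
MARK_THRESHOLD = 0.5  # hypothetical darkness cut-off (0 = blank, 1 = fully filled)


def read_item(darkness_by_option, threshold=MARK_THRESHOLD):
    """Interpret one bubble-coded item.

    darkness_by_option maps each response code to the measured darkness
    of its bubble. Returns (code, status); code is None unless exactly
    one bubble is marked.
    """
    marked = [code for code, darkness in darkness_by_option.items()
              if darkness >= threshold]
    if len(marked) == 1:
        return marked[0], "ok"
    if not marked:
        return None, "blank"
    return None, "multiple marks: manual review"


print(read_item({1: 0.05, 2: 0.92, 3: 0.10}))  # (2, 'ok')
print(read_item({1: 0.81, 2: 0.74}))           # (None, 'multiple marks: manual review')
```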

Archiving Questionnaires and Coding Manuals

Because information is a valuable resource and is subject to interpretation and other influences, researchers sometimes are asked to share their data with other researchers. The request to share data can be motivated by a variety of reasons, which may range from a sincere interest in replicating a report to concern that data may not have been analysed or interpreted correctly.

Where falsification or fabrication of data is suspected or alleged, it becomes essential that the original records upon which reported findings are based be available for audit purposes. In addition to the original questionnaires and/or computer files of raw data, the researcher must be able to provide for review the coding manual(s) developed for the study and the log(s) of all data changes which were made in the course of data coding, computerization and analysis. For example, if a data value had been altered because it had initially appeared as an outlier, then a record of the change and the reasons for making the change should have been recorded in the log for possible data audit purposes. Such information also is of value at the time of report preparation because it serves as a reminder about how the data which gave rise to the reported findings had actually been handled.
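
The change log described above can be as simple as an append-only list of entries recording what was changed, when, by whom and why. The sketch below is a hypothetical illustration: the function name, the field names and the refusal to accept a change without a stated reason are assumptions rather than a standard.

```python
from datetime import datetime, timezone


def change_value(data, log, record_id, field_name, new_value, reason, editor):
    """Alter one stored value and append an audit entry; a reason is mandatory."""
    if not reason.strip():
        raise ValueError("every data change must record a reason")
    old_value = data[record_id][field_name]
    data[record_id][field_name] = new_value
    log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "record_id": record_id,
        "field": field_name,
        "old": old_value,
        "new": new_value,
        "reason": reason,
        "editor": editor,
    })


# Hypothetical outlier: a weight keyed as 850 kg is checked against the paper form.
data = {"0042": {"weight_kg": 850}}
audit_log = []
change_value(data, audit_log, "0042", "weight_kg", 85,
             "keying error confirmed against original questionnaire", "JS")
print(audit_log[0]["old"], "->", audit_log[0]["new"])  # 850 -> 85
```

Because the original value is kept in the log, the dataset as first entered can always be reconstructed for an audit.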

For these reasons, upon completion of a study, the researcher has an obligation to ensure that all basic data are appropriately archived for a reasonable period of time, and that they could be retrieved if the researcher were called upon to provide them.