In designing equipment it is of the utmost importance to take full account of the fact that a human operator has both capabilities and limitations in processing information, which are of a varying nature and which are found on various levels. Performance in actual work conditions strongly depends on the extent to which a design has either attended to or ignored these potentials and their limits. In the following a brief sketch will be offered of some of the chief issues. Reference will be made to other contributions of this volume, where an issue will be discussed in greater detail.

It is common to distinguish three main levels in the analysis of human information processing, namely, the perceptual level, the decision level and the motor level. The perceptual level is subdivided into three further levels, relating to sensory processing, feature extraction and identification of the percept. On the decision level, the operator receives perceptual information and chooses a reaction to it which is finally programmed and actualized on the motor level. This describes only the information flow in the simplest case of a choice reaction. It is evident, though, that perceptual information may accumulate and be combined and diagnosed before eliciting an action. Again, there may arise a need for selecting information in view of perceptual overload. Finally, choosing an appropriate action becomes more of a problem when there are several options some of which may be more appropriate than others. In the present discussion, the emphasis will be on the perceptual and decisional factors of information processing.

Perceptual Capabilities and Limits

Sensory limits

The first category of processing limits is sensory. Their relevance to information processing is obvious since processing becomes less reliable as information approaches threshold limits. This may seem a fairly trivial statement, but nonetheless, sensory problems are not always clearly recognized in designs. For example, alphanumerical characters in sign posting systems should be sufficiently large to be legible at a distance consistent with the need for appropriate action. Legibility, in turn, depends not only on the absolute size of the alphanumericals but also on contrast and—in view of lateral inhibition—also on the total amount of information on the sign. In particular, in conditions of low visibility (e.g., rain or fog during driving or flying) legibility is a considerable problem requiring additional measures. More recently developed traffic signposts and road markers are usually well designed, but signposts near and within buildings are often illegible. Visual display units are another example in which sensory limits of size, contrast and amount of information play an important role. In the auditory domain some main sensory problems are related to understanding speech in noisy environments or in poor quality audio transmission systems.

Feature extraction

Provided sufficient sensory information, the next set of information processing issues relates to extracting features from the information presented. Most recent research has shown ample evidence that an analysis of features precedes the perception of meaningful wholes. Feature analysis is particularly useful in locating a special deviant object amidst many others. For instance, an essential value on a display containing many values may be represented by a single deviant colour or size, which feature then draws immediate attention or “pops out”. Theoretically, there is the common assumption of “feature maps” for different colours, sizes, forms and other physical features. The attention value of a feature depends on the difference in activation of the feature maps that belong to the same class, for example, colour. Thus, the activation of a feature map depends on the discriminability of the deviant features. This means that when there are a few instances of many colours on a screen, most colour feature maps are about equally activated, which has the effect that none of the colours pops out.

In the same way a single moving advertisement pops out, but this effect disappears altogether when there are several moving stimuli in the field of view. The principle of the different activation of feature maps is also applied when aligning pointers that indicate ideal parameter values. A deviation of a pointer is indicated by a deviant slope which is rapidly detected. If this is impossible to realize, a dangerous deviation might be indicated by a change in colour. Thus, the general rule for design is to use only a very few deviant features on a screen and to reserve them only for the most essential information. Searching for relevant information becomes cumbersome in the case of conjunctions of features. For example, it is hard to locate a large red object amidst small red objects and large and small green objects. If possible, conjunctions should be avoided when trying to design for efficient search.

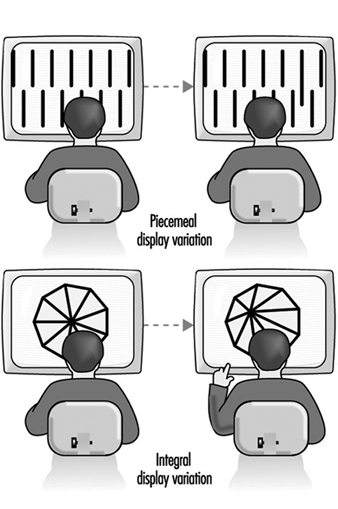

Separable versus integral dimensions

Features are separable when they can be changed without affecting the perception of other features of an object. Line lengths of histograms are a case in point. On the other hand, integral features refer to features which, when changed, change the total appearance of the object. For instance, one cannot change features of the mouth in a schematic drawing of a face without altering the total appearance of the picture. Again, colour and brightness are integral in the sense that one cannot change a colour without altering the brightness impression at the same time. The principles of separable and integral features, and of emergent properties evolving from changes of single features of an object, are applied in so-called integrated or diagnostic displays. The rationale of these displays is that, rather than displaying individual parameters, different parameters are integrated into a single display, the total composition of which indicates what may be actually wrong with a system.

Data presentation in control rooms is still often dominated by the philosophy that each individual measure should have its own indicator. Piecemeal presentation of the measures means that the operator has the task of integrating the evidence from the various individual displays so as to diagnose a potential problem. At the time of the problems in the Three Mile Island nuclear power plant in the United States some forty to fifty displays were registering some form of disorder. Thus, the operator had the task of diagnosing what was actually wrong by integrating the information from that myriad of displays. Integral displays may be helpful in diagnosing the kind of error, since they combine various measures into a single pattern. Different patterns of the integrated display, then, may be diagnostic with regard to specific errors.

A classical example of a diagnostic display, which has been proposed for nuclear control rooms, is shown in figure 1. It displays a number of measures as spokes of equal length so that a regular polygon always represents normal conditions, while different distortions may be connected with different types of problems in the process.

Figure 1. In the normal situation all parameter values are equal, creating a hexagon. In the deviation, some of the values have changed creating a specific distortion.
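
The geometry of such a polygon display can be sketched in a few lines. The following Python fragment is only an illustration and not the design of any actual control-room display: the function names, the scaling of each parameter so that 1.0 denotes its normal value, and the example values are all assumptions introduced here.

```python
import math

def polygon_vertices(values):
    """Return the (x, y) end point of each spoke of a polygon display.

    Each value is assumed to be scaled so that 1.0 means "normal".
    Spokes are spread evenly around the circle, clockwise from 12 o'clock.
    """
    n = len(values)
    vertices = []
    for i, v in enumerate(values):
        angle = math.pi / 2 - 2 * math.pi * i / n
        vertices.append((v * math.cos(angle), v * math.sin(angle)))
    return vertices

def distortion(values, normal=1.0):
    """Per-spoke deviation from normal; all zeros means a regular polygon."""
    return [round(v - normal, 3) for v in values]

# Hypothetical example values for six parameters.
normal_state = [1.0] * 6
deviant_state = [1.0, 1.4, 1.0, 0.6, 1.0, 1.0]

print(distortion(normal_state))
print(distortion(deviant_state))
```

With equal values every spoke has the same length and the vertices form a regular hexagon; the pattern of non-zero deviations is what the operator would perceive as a specific distortion.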

Not all integral displays are equally discriminable. To illustrate the issue, a positive correlation between the two dimensions of a rectangle creates differences in surface, while maintaining an equal shape. Alternatively, a negative correlation creates differences in shape while maintaining an equal surface. The case in which variation of integral dimensions creates a new shape has been referred to as revealing an emergent property of the patterning, which adds to the operator’s ability to discriminate the patterns. Emergent properties depend upon the identity and arrangement of parts but are not identifiable with any single part.
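
The rectangle example can be checked with simple arithmetic. The sketch below is illustrative only; the helper name and the particular widths and heights are assumptions chosen to make the two cases easy to compare.

```python
def area_and_aspect(width, height):
    """Return the surface (area) and the shape (width/height ratio) of a rectangle."""
    return width * height, width / height

# Positive correlation: both dimensions grow together.
positive = [area_and_aspect(w, h) for w, h in [(1, 2), (2, 4), (3, 6)]]

# Negative correlation: one dimension grows as the other shrinks.
negative = [area_and_aspect(w, h) for w, h in [(1, 12), (2, 6), (3, 4)]]

print(positive)  # the ratio stays constant while the area changes
print(negative)  # the area stays constant while the ratio changes
```

In the first series only the surface changes; in the second the surface is constant and the changing shape is the emergent property that distinguishes the patterns.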

Object and configural displays are not always beneficial. The very fact that they are integral means that the characteristics of the individual variables are harder to perceive. The point is that, by definition, integral dimensions are mutually dependent, thus clouding their individual constituents. There may be circumstances in which this is unacceptable, while one may still wish to profit from the diagnostic patternlike properties, which are typical for the object display. One compromise might be a traditional bar graph display. On the one hand, bar graphs are quite separable. Yet, when positioned in sufficiently close vicinity, the differential lengths of the bars may together constitute an object-like pattern which may well serve a diagnostic aim.

Some diagnostic displays are better than others. Their quality depends on the extent to which the display corresponds to the operator's mental model of the task. For example, fault diagnosis on the basis of distortions of a regular polygon, as in figure 1, may still bear little relationship to the domain semantics or to the operator's concept of the processes in a power plant. Thus, various types of deviations of the polygon do not obviously refer to a specific problem in the plant. Therefore, the most suitable configural display is one that corresponds to the specific mental model of the task. Thus it should be emphasized that the surface of a rectangle is only a useful object display when the product of length and width is the variable of interest!

Interesting object displays stem from three-dimensional representations. For instance, a three-dimensional representation of air traffic—rather than the traditional two-dimensional radar representation—may provide the pilot with a greater “situational awareness” of other traffic. The three-dimensional display has been shown to be much superior to a two-dimensional one since its symbols indicate whether another aircraft is above or below one’s own.

Degraded conditions

Degraded viewing occurs under a variety of conditions. For some purposes, as with camouflage, objects are intentionally degraded so as to prevent their identification. On other occasions, for example in brightness amplification, features may become too blurred to allow one to identify the object. One research issue has concerned the minimal number of “lines” required on a screen or “the amount of detail” needed in order to avoid degradation. Unfortunately, this approach to image quality has not led to unequivocal results. The problem is that identifying degraded stimuli (e.g., a camouflaged armoured vehicle) depends too much on the presence or absence of minor object-specific details. The consequence is that no general prescription about line density can be formulated, except for the trivial statement that degradation decreases as the density increases.

Features of alphanumeric symbols

A major issue in the process of feature extraction concerns the actual number of features which together define a stimulus. Thus, the legibility of ornate characters like Gothic letters is poor because of the many redundant curves. In order to avoid confusion, the difference between letters with very similar features—like the i and the l, and the c and the e—should be accentuated. For the same reason, it is recommended to make the stroke and tail length of ascenders and descenders at least 40% of the total letter height.

It is evident that discrimination among letters is mainly determined by the number of features which they do not share. These mainly consist of straight line and circular segments which may have horizontal, vertical and oblique orientation and which may differ in size, as in lower- and upper-case letters.

It is obvious that, even when alphanumericals are well discriminable, they may easily lose that property in combination with other items. Thus, the digits 4 and 7 share only a few features, but they are poorly discriminated in the context of larger, otherwise identical groups (e.g., 384 versus 387). There is unanimous evidence that reading text in lower case is faster than in capitals. This is usually ascribed to the fact that lower case letters have more distinct features (e.g., dog, cat versus DOG, CAT). The superiority of lower case letters has been established not only for reading text but also for road signs such as those used for indicating towns at the exits of motorways.

Identification

The final perceptual process is concerned with identification and interpretation of percepts. Human limits arising on this level are usually related to discrimination and finding the appropriate interpretation of the percept. The applications of research on visual discrimination are manifold, relating to alphanumerical patterns as well as to more general stimulus identification. The design of brake lights in cars will serve as an example of the last category. Rear-end accidents account for a considerable proportion of traffic accidents, and are due in part to the fact that the traditional location of the brake light next to the rear lights makes it poorly discriminable and therefore extends the driver’s reaction time. As an alternative, a single light has been developed which appears to reduce the accident rate. It is mounted in the centre of the rear window at approximately eye level. In experimental studies on the road, the effect of the central braking light appears to be less when subjects are aware of the aim of the study, suggesting that stimulus identification in the traditional configuration improves when subjects focus on the task. Despite the positive effect of the isolated brake light, its identification might still be further improved by making the brake light more meaningful, giving it the form of an exclamation mark, “!”, or even an icon.

Absolute judgement

Very strict and often counterintuitive performance limits arise in cases of absolute judgement of physical dimensions. Examples occur in connection with colour coding of objects and the use of tones in auditory call systems. The point is that relative judgement is far superior to absolute judgement. The problem with absolute judgement is that the code has to be translated into another category. Thus a specific colour may be linked with an electrical resistance value, or a specific tone may identify the person for whom an ensuing message is meant. In fact, therefore, the problem is not one of perceptual identification but rather of response choice, which will be discussed later in this article. At this point it suffices to remark that one should not use more than four or five colours or pitches so as to avoid errors. When more alternatives are needed one may add extra dimensions, like loudness, duration and components of tones.

Word reading

The relevance of reading separate word units in traditional print is demonstrated by several familiar observations: reading is very much hampered when spaces are omitted, printing errors often remain undetected, and it is very hard to read words in alternating cases (e.g., ALTeRnAtInG). Some investigators have emphasized the role of word shape in reading word units and suggested that spatial frequency analysers may be relevant in identifying word shape. In this view meaning would be derived from total word shape rather than by letter-by-letter analysis. Yet, the contribution of word shape analysis is probably limited to small common words (articles and endings), which is consistent with the finding that printing errors in small words and endings have a relatively low probability of detection.

Text in lower case has an advantage over upper case which is due to a loss of features in the upper case. Yet, the advantage of lower case words is absent or may even be reversed when searching for a single word. It could be that factors of letter size and letter case are confounded in searching: Larger-sized letters are detected more rapidly, which may offset the disadvantage of less distinctive features. Thus, a single word may be about equally legible in upper case as in lower case, while continuous text is read faster in lower case. Detecting a SINGLE capital word amidst many lower case words is very efficient, since it evokes pop-out. An even more efficient fast detection can be achieved by printing a single lower case word in bold, in which case the advantages of pop-out and of more distinctive features are combined.

The role of encoding features in reading is also clear from the impaired legibility of older low-resolution visual display unit screens, which consisted of fairly rough dot matrices and could portray alphanumericals only as straight lines. The common finding was that reading text or searching from a low-resolution monitor was considerably slower than from a paper-printed copy. The problem has largely disappeared with the present-day higher-resolution screens. Besides letter form there are a number of additional differences between reading from paper and reading from a screen. The spacing of the lines, the size of the characters, the type face, the contrast ratio between characters and background, the viewing distance, the amount of flicker and the fact that changing pages on a screen is done by scrolling are some examples. The common finding that reading is slower from computer screens—although comprehension seems about equal—may be due to some combination of these factors. Present-day text processors usually offer a variety of options in font, size, colour, format and style; such choices could give the false impression that personal taste is the major reason.

Icons versus words

In some studies the time taken by a subject to name a printed word was found to be shorter than that for a corresponding icon, while both times were about equal in other studies. It has been suggested that words are read faster than icons since they are less ambiguous. Even a fairly simple icon, like a house, may still elicit different responses among subjects, resulting in response conflict and, hence, a decrease in reaction speed. If response conflict is avoided by using really unambiguous icons the difference in response speed is likely to disappear. It is interesting to note that as traffic signs, icons are usually much superior to words, even in the case where the issue of understanding language is not seen as a problem. This paradox may be due to the fact that the legibility of traffic signs is largely a matter of the distance at which a sign can be identified. If properly designed, this distance is larger for symbols than for words, since pictures can provide considerably larger differences in shape and contain less fine details than words. The advantage of pictures, then, arises from the fact that discrimination of letters requires some ten to twelve minutes of arc and that feature detection is the initial prerequisite for discrimination. At the same time it is clear that the superiority of symbols is only guaranteed when (1) they do indeed contain little detail, (2) they are sufficiently distinct in shape and (3) they are unambiguous.
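
The figure of ten to twelve minutes of arc can be turned into a rough minimum letter size for a given reading distance. The sketch below assumes that the angle refers to the height of a letter as seen by the viewer, which the text does not state explicitly; the function name and the example distance are likewise assumptions.

```python
import math

def min_letter_height(distance_m, arc_minutes=12.0):
    """Letter height (in metres) that subtends `arc_minutes` at `distance_m`.

    Assumes the quoted visual angle applies to letter height.
    """
    angle_rad = math.radians(arc_minutes / 60.0)
    return 2.0 * distance_m * math.tan(angle_rad / 2.0)

# Hypothetical example: a sign that must be read from 100 m.
print(round(min_letter_height(100.0), 3))        # about 0.349 m at 12 minutes of arc
print(round(min_letter_height(100.0, 10.0), 3))  # somewhat smaller at 10 minutes of arc
```

A symbol whose distinguishing features are coarser than the strokes of a letter reaches the same visual angle at a greater distance, which is the advantage described above.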

Capabilities and Limits for Decision

Once a percept has been identified and interpreted it may call for an action. In this context the discussion will be limited to deterministic stimulus-response relations, or, in other words, to conditions in which each stimulus has its own fixed response. In that case the major problems for equipment design arise from issues of compatibility, that is, the extent to which the identified stimulus and its related response have a “natural” or well-practised relationship. There are conditions in which an optimal relation is intentionally aborted, as in the case of abbreviations. Usually a contraction like abrvtin is much worse than a truncation like abbrev. Theoretically, this is due to the increasing redundancy of successive letters in a word, which allows “filling out” final letters on the basis of earlier ones; a truncated word can profit from this principle while a contracted one cannot.

Mental models and compatibility

In most compatibility problems there are stereotypical responses derived from generalized mental models. Choosing the null position in a circular display is a case in point. The 12 o’clock and 9 o’clock positions appear to be corrected faster than the 6 o’clock and 3 o’clock positions. The reason may be that a clockwise deviation and a movement in the upper part in the display are experienced as “increases” requiring a response that reduces the value. In the 3 and 6 o’clock positions both principles conflict and they may therefore be handled less efficiently. A similar stereotype is found in locking or opening the rear door of a car. Most people act on the stereotype that locking requires a clockwise movement. If the lock is designed in the opposite way, continuous errors and frustration in trying to lock the door are the most likely result.

With respect to control movements the well-known Warrick’s principle on compatibility describes the relation between the location of a control knob and the direction of the movement on a display. If the control knob is located to the right of the display, a clockwise movement is supposed to move the scale marker up. Or consider moving window displays. According to most people’s mental model, the upward direction of a moving display suggests that the values go up in the same way in which a rising temperature in a thermometer is indicated by a higher mercury column. There are problems in implementing this principle with a “fixed pointer-moving scale” indicator. When the scale in such an indicator moves down, its value is intended to increase. Thus a conflict with the common stereotype occurs. If the values are inverted, the low values are on the top of the scale, which is also contrary to most stereotypes.

The term proximity compatibility refers to the correspondence of symbolic representations to people’s mental models of functional or even spatial relationships within a system. Issues of proximity compatibility are more pressing as the mental model of a situation is more primitive, global or distorted. Thus, a flow diagram of a complex automated industrial process is often displayed on the basis of a technical model which may not correspond at all with the mental model of the process. In particular, when the mental model of a process is incomplete or distorted, a technical representation of the process adds little to develop or correct it. A daily-life example of poor proximity compatibility is an architectural map of a building that is intended for viewer orientation or for showing fire escape routes. These maps are usually entirely inadequate (full of irrelevant details), in particular for people who have only a global mental model of the building. Such convergence between map reading and orientation comes close to what has been called “situational awareness”, which is particularly relevant in three-dimensional space during an air flight. There have been interesting recent developments in three-dimensional object displays, representing attempts to achieve optimal proximity compatibility in this domain.

Stimulus-response compatibility

An example of stimulus-response (S-R) compatibility is typically found in the case of most text processing programs, which assume that operators know how commands correspond to specific key combinations. The problem is that a command and its corresponding key combination usually fail to have any pre-existing relation, which means that the S-R relations must be learned by a painstaking process of paired-associate learning. The result is that, even after the skill has been acquired, the task remains error-prone. The internal model of the program remains incomplete since less practised operations are liable to be forgotten, so that the operator can simply not come up with the appropriate response. Also, the text produced on the screen usually does not correspond in all respects to what finally appears on the printed page, which is another example of inferior proximity compatibility. Only a few programs utilize a stereotypical spatial internal model in connection with stimulus-response relations for controlling commands.

It has been correctly argued that there are much better pre-existing relations between spatial stimuli and manual responses—like the relation between a pointing response and a spatial location, or like that between verbal stimuli and vocal responses. There is ample evidence that spatial and verbal representations are relatively separate cognitive categories with little mutual interference but also with little mutual correspondence. Hence, a spatial task, like formatting a text, is most easily performed by spatial mouse-type movement, thus leaving the keyboard for verbal commands.

This does not mean that the keyboard is ideal for carrying out verbal commands. Typing remains a matter of manually operating arbitrary spatial locations which are basically incompatible with processing letters. It is actually another example of a highly incompatible task which is only mastered by extensive practice, and the skill is easily lost without continuous practice. A similar argument can be made for shorthand writing, which also consists of connecting arbitrary written symbols to verbal stimuli. An interesting example of an alternative method of keyboard operation is a chording keyboard.

The operator handles two keyboards (one for the left and one for the right hand) both consisting of six keys. Each letter of the alphabet corresponds to a chording response, that is, a combination of keys. The results of studies on such a keyboard showed striking savings in the time needed for acquiring typing skills. Motor limitations limited the maximal speed of the chording technique but, still, once learned, operator performance approached the speed of the conventional technique quite closely.
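
The coding capacity of such a keyboard is easily worked out. The sketch below counts the chords available on one six-key keyboard; the assignment of chords to letters is purely hypothetical, since the text does not describe the actual mapping used in the studies.

```python
from itertools import combinations

KEYS_PER_HAND = 6  # from the text; everything else here is illustrative

def chords(n_keys=KEYS_PER_HAND):
    """All non-empty key combinations, ordered from fewest to most keys."""
    keys = range(n_keys)
    return [combo
            for size in range(1, n_keys + 1)
            for combo in combinations(keys, size)]

one_hand = chords()
print(len(one_hand))  # 63 possible chords on a single six-key keyboard

# Hypothetical assignment: the simplest chords go to the first letters.
letter_map = dict(zip("abcdefghijklmnopqrstuvwxyz", one_hand))
print(letter_map["a"], letter_map["g"])
```

Six keys thus offer more than enough combinations for the alphabet with one hand alone, so that simple chords can be reserved for letters.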

A classical example of a spatial compatibility effect concerns the traditional arrangements of stove burner controls: four burners in a 2 × 2 matrix, with the controls in a horizontal row. In this configuration, the relations between burner and control are not obvious and are poorly learned. However, despite many errors, the problem of lighting the stove, given time, can usually be solved. The situation is worse when one is faced with undefined display-control relations. Other examples of poor S-R compatibility are found in the display-control relations of video cameras, video recorders and television sets. The effect is that many options are never used or must be studied anew at each new trial. The claim that “it is all explained in the manual”, while true, is not useful since, in practice, most manuals are incomprehensible to the average user, in particular when they attempt to describe actions using incompatible verbal terms.

Stimulus-stimulus (S-S) and response-response (R-R) compatibility

Originally S-S and R-R compatibility were distinguished from S-R compatibility. A classical illustration of S-S compatibility concerns attempts in the late forties to support auditory sonar by a visual display in an effort to enhance signal detection. One solution was sought in a horizontal light beam with vertical perturbations travelling from left to right and reflecting a visual translation of the auditory background noise and potential signal. A signal consisted of a slightly larger vertical perturbation. The experiments showed that a combination of the auditory and visual displays did not do better than the single auditory display. The reason was sought in a poor S-S compatibility: the auditory signal is perceived as a loudness change; hence visual support should correspond most when provided in the form of a brightness change, since that is the compatible visual analogue of a loudness change.

It is of interest that the degree of S-S compatibility corresponds directly to how skilled subjects are in cross-modality matching. In a cross-modality match, subjects may be asked to indicate which auditory loudness corresponds to a certain brightness or to a certain weight; this approach has been popular in research on scaling sensory dimensions, since it allows one to avoid mapping sensory stimuli to numerals. R-R compatibility refers to correspondence of simultaneous and also of successive movements. Some movements are more easily coordinated than others, which provides clear constraints for the way a succession of actions—for example, successive operation of controls—is most efficiently done.

The above examples show clearly how compatibility issues pervade all user-machine interfaces. The problem is that the effects of poor compatibility are often softened by extended practice and so may remain unnoticed or underestimated. Yet, even when incompatible display-control relations are well-practised and do not seem to affect performance, there remains the point of a larger error probability. The incorrect compatible response remains a competitor for the correct incompatible one and is likely to come through on occasion, with the obvious risk of an accident. In addition, the amount of practice required for mastering incompatible S-R relations is formidable and a waste of time.

Limits of Motor Programming and Execution

One limit in motor programming was already briefly touched upon in the remarks on R-R compatibility. The human operator has clear problems in carrying out incongruent movement sequences, and in particular, changing from one incongruent sequence to another is hard to accomplish. The results of studies on motor coordination are relevant to the design of controls in which both hands are active. Yet, practice can overcome much in this regard, as is clear from the surprising levels of acrobatic skills.

Many common principles in the design of controls derive from motor programming. They include the incorporation of resistance in a control and the provision of feedback indicating that it has been properly operated. A preparatory motor state is a highly relevant determinant of reaction time. Reacting to an unexpected sudden stimulus may take an additional second or so, which is considerable when a fast reaction is needed, as in reacting to a lead car’s brake light. Unprepared reactions are probably a main cause of chain collisions. Early warning signals are beneficial in preventing such collisions. A major application of research on movement execution concerns Fitts’ law, which relates movement time to the distance and the size of the target that is aimed at. This law appears to be quite general, applying equally to an operating lever, a joystick, a mouse or a light pen. Among other uses, it has been applied to estimate the time needed to make corrections on computer screens.
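
Fitts’ law is commonly written as MT = a + b · log2(2D/W), where MT is the movement time, D the distance to the target and W the target width. The sketch below uses this classical formulation; the constants a and b must be estimated empirically for each device and task, and the default values shown here are placeholders assumed only for illustration.

```python
import math

def index_of_difficulty(distance, width):
    """Index of difficulty in bits, using the classical form log2(2D / W)."""
    return math.log2(2.0 * distance / width)

def movement_time(distance, width, a=0.1, b=0.15):
    """Predicted movement time in seconds.

    a (intercept, s) and b (slope, s per bit) are placeholder values;
    real values have to be fitted to data for a given control device.
    """
    return a + b * index_of_difficulty(distance, width)

# Hypothetical example: pointing at targets 160 mm away.
print(index_of_difficulty(160, 20))        # 4.0 bits
print(round(movement_time(160, 20), 2))    # 0.7 s with the placeholder constants
print(movement_time(160, 10) > movement_time(160, 20))  # a smaller target takes longer
```

Halving the target width or doubling the distance adds one bit of difficulty, and hence a constant increment b to the predicted movement time.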

There is obviously much more to say than the above sketchy remarks. For instance, the discussion has been almost fully limited to issues of information flow on the level of a simple choice reaction. Issues beyond choice reactions have not been touched upon, nor problems of feedback and feed forward in the ongoing monitoring of information and motor activity. Many of the issues mentioned bear a strong relation to problems of memory and of planning of behaviour, which have not been addressed either. More extensive discussions are found in Wickens (1992), for example.