Humans play important roles in most of the processes leading up to accidents and in the majority of measures aimed at accident prevention. Therefore, it is vital that models of the accident process should provide clear guidance about the links between human actions and accidents. Only then will it be possible to carry out systematic accident investigation in order to understand these links and to make predictions about the effect of changes in the design and layout of workplaces, in the training, selection and motivation of workers and managers, and in the organization of work and management safety systems.

Early Modelling

Up until the 1960s, the modelling of human and organizational factors in accidents was rather unsophisticated. The models of that period did not differentiate the human elements relevant to accidents beyond rough subdivisions such as skills, personality factors, motivational factors and fatigue. Accidents were seen as undifferentiated problems for which undifferentiated solutions were sought (as doctors two centuries ago sought to cure many then undifferentiated diseases by bleeding the patient).

Reviews of accident research literature that were published by Surry (1969) and by Hale and Hale (1972) were among the first attempts to go deeper and offer a basis for classifying accidents into types reflecting differentiated aetiologies, which were themselves linked to failures in different aspects of the man-technology-environment relationships. In both of these reviews, the authors drew upon the accumulating insights of cognitive psychology in order to develop models presenting people as information processors, responding to their environment and its hazards by trying to perceive and control the risks that are present. Accidents were considered in these models as failures of different parts of this process of control that occur when one or more of the control steps does not perform satisfactorily. The emphasis was also shifted in these models away from blaming the individual for failures or errors, and towards focusing on the mismatch between the behavioural demands of the task or system and the possibilities inherent in the way behaviour is generated and organized.

Human Behaviour

Later developments of these models by Hale and Glendon (1987) linked them to the work of Rasmussen and Reason (Reason 1990), which classified human behaviour into three levels of processing:

- automatic, largely unconscious responses to routine situations (skill-based behaviour)

- matching learned rules to a correct diagnosis of the prevailing situation (rule-based behaviour)

- conscious and time-consuming problem solving in novel situations (knowledge-based behaviour).

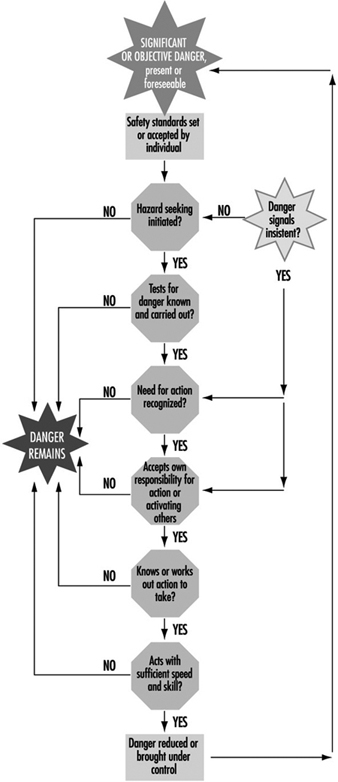

The typical failures of control differ from one level of behaviour to another, as do the types of accidents and the appropriate safety measures used to control them. The Hale and Glendon model, updated with more recent insights, is depicted in figure 1. It is made up of a number of building blocks which will be explained successively in order to arrive at the full model.

Figure 1. Individual problem solving in the face of danger

Link to deviation models

The starting point of the Hale and Glendon model is the way in which danger evolves in any workplace or system. Danger is considered to be always present, but kept under control by a large number of accident-prevention measures linked to hardware (e.g., the design of equipment and safeguards), people (e.g., skilled operators), procedures (e.g., preventive maintenance) and organization (e.g., allocation of responsibility for critical safety tasks). Provided that all relevant dangers and potential hazards have been foreseen and the preventive measures for them have been properly designed and chosen, no damage will occur. Only if a deviation from this desired, normal state takes place can the accident process start. (These deviation models are dealt with in detail in “Accident deviation models”.)

The task of the people in the system is to assure proper functioning of the accident-prevention measures so as to avert deviations, by using the correct procedures for each eventuality, handling safety equipment with care, and undertaking the necessary checks and adjustments. People also have the task of detecting and correcting many of the deviations which may occur and of adapting the system and its preventive measures to new demands, new dangers and new insights. All these actions are modelled in the Hale and Glendon model as detection and control tasks related to a danger.
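The idea of danger held in check by prevention measures can be sketched in a few lines of Python. This is only an illustrative reading of the text, not part of the Hale and Glendon model itself: the names `MeasureKind`, `PreventionMeasure`, `find_deviations` and `accident_process_can_start` are hypothetical, and representing a deviation as a simple true/false flag is a simplifying assumption.

```python
from dataclasses import dataclass
from enum import Enum


class MeasureKind(Enum):
    """The four kinds of accident-prevention measure named in the text."""
    HARDWARE = "hardware"
    PEOPLE = "people"
    PROCEDURES = "procedures"
    ORGANIZATION = "organization"


@dataclass
class PreventionMeasure:
    name: str
    kind: MeasureKind
    # Assumption: a measure either functions as intended or has deviated.
    functioning: bool = True


def find_deviations(measures):
    """Return the measures that have deviated from the desired, normal state."""
    return [m for m in measures if not m.functioning]


def accident_process_can_start(measures):
    """In this simplified reading, the accident process can start only
    if at least one measure has deviated."""
    return len(find_deviations(measures)) > 0


# Hypothetical workplace: one procedural measure has lapsed.
measures = [
    PreventionMeasure("machine guard", MeasureKind.HARDWARE),
    PreventionMeasure("skilled operator", MeasureKind.PEOPLE),
    PreventionMeasure("preventive maintenance", MeasureKind.PROCEDURES,
                      functioning=False),
    PreventionMeasure("assigned safety responsibility",
                      MeasureKind.ORGANIZATION),
]
deviating = [m.name for m in find_deviations(measures)]
```

Here the detection task corresponds to `find_deviations`. The correction and adaptation tasks described above are deliberately left out of the sketch.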

Problem solving

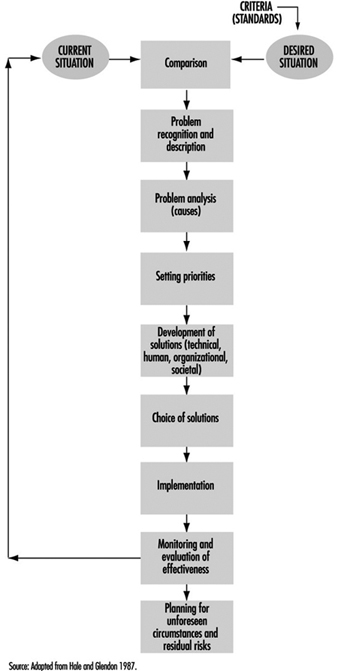

The Hale and Glendon model conceptualizes the role of human action in controlling danger as a problem-solving task. The steps in such a task can be described generically as in figure 2.

Figure 2. Problem-solving cycle

This task is a goal-seeking process, driven by the standards set in step one in figure 2. These are the standards of safety which workers set for themselves, or which are set by employers, manufacturers or legislators. The model has the advantage that it can be applied not only to individual workers faced with imminent or future danger, but also to groups of workers, departments or organizations aiming to control both existing danger from a process or industry and future danger from new technology or products at the design stage. Hence safety management systems can be modelled in a consistent way with human behaviour, allowing the designer or evaluator of safety management to take an appropriately focused or a wide view of the interlocking tasks of different levels of an organization (Hale et al. 1994).

Applying these steps to individual behaviour in the face of danger, we obtain figure 3. Some examples of each step can clarify the task of the individual. Some degree of danger, as stated above, is assumed to be present all the time in all situations. The question is whether an individual worker responds to that danger. This will depend partly on how insistent the danger signals are and partly on the worker’s own consciousness of danger and standards of acceptable level of risk. When a piece of machinery unexpectedly glows red hot, or a fork-lift truck approaches at high speed, or smoke starts seeping from under the door, individual workers skip immediately to considering the need for action, or even to deciding what they or someone else can do.

Figure 3. Behaviour in the face of danger

These situations of imminent danger are rare in most industries, and it is normally desirable to activate workers to control danger when it is much less imminent. For example, workers should recognize slight wear on the machine guard and report it, and realize that a certain noise level will make them deaf if they are continuously exposed to it for some years. Designers should anticipate that a novice worker could be liable to use their proposed new product in a way that could be dangerous.

To do this, all persons responsible for safety must first consider the possibility that danger is or will be present. Consideration of danger is partly a matter of personality and partly of experience. It can also be encouraged by training and guaranteed by making it an explicit part of tasks and procedures at the design and execution phases of a process, where it may be confirmed and encouraged by colleagues and superiors. Secondly, workers and supervisors must know how to anticipate and recognize the signs of danger. To ensure the appropriate quality of alertness, they must accustom themselves to recognizing potential accident scenarios, that is, indications and sets of indications that could lead to loss of control and so to damage. This is partly a question of understanding webs of cause and effect, such as how a process can get out of control, how noise damages hearing or how and when a trench can collapse.

Just as important is an attitude of creative mistrust. This involves considering that tools, machines and systems can be misused, go wrong, or show properties and interactions outside their designers’ intentions. It applies “Murphy’s Law” (whatever can go wrong will go wrong) creatively, by anticipating possible failures and affording the opportunity of eliminating or controlling them. Such an attitude, together with knowledge and understanding, also helps at the next step—that is, in really believing that some sort of danger is sufficiently likely or serious to warrant action.

Labelling something as dangerous enough to need action is again partly a matter of personality; for instance, it may have to do with how pessimistic a person may be about technology. More importantly, it is very strongly influenced by the kind of experience that will prompt workers to ask themselves such questions as, “Has it gone wrong in the past?” or “Has it worked for years with the same level of risk with no accidents?” The results of research on risk perception and on attempts to influence it by risk communication or feedback on accident and incident experience are given in more detail in other articles.

Even if the need for some action is realized, workers may take no action for many reasons: they do not, for example, think it is their place to interfere with someone else’s work; they do not know what to do; they see the situation as unchangeable (“it is just part of working in this industry”); or they fear reprisal for reporting a potential problem. Beliefs and knowledge about cause and effect and about the attribution of responsibility for accidents and accident prevention are important here. For example, supervisors who consider that accidents are largely caused by careless and accident-prone workers will not see any need for action on their own part, except perhaps to eliminate those workers from their section. Effective communications to mobilize and coordinate the people who can and should take action are also vital at this step.

The remaining steps are concerned with the knowledge of what to do to control the danger, and the skills needed to take appropriate action. This knowledge is acquired by training and experience, but good design can help greatly by making it obvious how to achieve a certain result so as to avert danger or to protect oneself from it, for instance by means of an emergency stop or shutdown, or an avoiding action. Good information resources such as operations manuals or computer support systems can help supervisors and workers to gain access to knowledge not available to them in the course of day-to-day activity. Finally, skill and practice determine whether the required response action can be carried out accurately enough and with the right timing to make it successful. A difficult paradox arises in this connection: the more alert and prepared people are, and the more reliable the hardware is, the less frequently the emergency procedures will be needed and the harder it will be to sustain the level of skill needed to carry them out when they are called upon.
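The sequence of steps discussed above can be written out as an ordered list, which makes it easy to ask at which step an individual's response to danger broke down. The sketch below is an assumption-laden paraphrase: the step names in `Step` are taken from the prose rather than from figure 3 itself, and `first_failed_step` is a hypothetical helper. It also treats the steps as strictly sequential, whereas the text notes that insistent danger signals let workers skip straight to the later steps.

```python
from enum import IntEnum
from typing import Optional, Set


class Step(IntEnum):
    """Steps paraphrased from the prose; not the labels used in figure 3."""
    CONSIDER_DANGER = 1          # consider that danger is or will be present
    RECOGNIZE_SIGNS = 2          # anticipate and recognize the signs of danger
    LABEL_AS_NEEDING_ACTION = 3  # believe the danger warrants action
    ACCEPT_RESPONSIBILITY = 4    # see it as one's own place to act
    KNOW_WHAT_TO_DO = 5          # know how to control the danger
    CARRY_OUT_RESPONSE = 6       # act accurately and with the right timing


def first_failed_step(completed: Set[Step]) -> Optional[Step]:
    """Return the earliest step not completed, or None if all were."""
    for step in Step:  # IntEnum members iterate in definition order
        if step not in completed:
            return step
    return None


# Hypothetical case: a worker notices wear on a machine guard and believes
# it needs attention, but does not think it is their place to interfere.
completed = {
    Step.CONSIDER_DANGER,
    Step.RECOGNIZE_SIGNS,
    Step.LABEL_AS_NEEDING_ACTION,
}
failed_at = first_failed_step(completed)
```

In this example `failed_at` is `Step.ACCEPT_RESPONSIBILITY`, which points towards beliefs about responsibility and communication rather than towards training in technical skills.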

Links with behaviour based on skill, rules and knowledge

The final element in the Hale and Glendon model, which turns figure 3 into figure 1, is the addition of the link to the work of Reason and Rasmussen. This work emphasized that behaviour can be exhibited at three different levels of conscious control (skill-based, rule-based and knowledge-based), which involve different aspects of human functioning and are subject to different types and degrees of disturbance or error on account of external signals or internal processing failures.

Skill-based. The skill-based level is highly reliable, but subject to lapses and slips when disturbed, or when another, similar routine captures control. This level is particularly relevant to the kind of routine behaviour that involves automatic responses to known signals indicating danger, either imminent or more remote. The responses are known and practised routines, such as keeping our fingers clear of a grinding wheel while sharpening a chisel, steering a car to keep it on the road, or ducking to avoid a flying object coming at us. The responses are so automatic that workers may not even be aware that they are actively controlling danger with them.

Rule-based. The rule-based level is concerned with choosing from a range of known routines or rules the one which is appropriate to the situation: for example, choosing which sequence to initiate in order to close down a reactor which would otherwise become overpressurized, selecting the correct safety goggles to work with acids (as opposed to those for working with dusts), or deciding, as a manager, to carry out a full safety review for a new plant rather than a short informal check. Errors here are often related to insufficient time spent matching the choice to the real situation, to relying on expectation rather than observation to understand the situation, or to being misled by outside information into making a wrong diagnosis. In the Hale and Glendon model, behaviour at this level is particularly relevant to detecting hazards and choosing correct procedures in familiar situations.

Knowledge-based. The knowledge-based level is engaged only when no plans or procedures already exist for coping with a developing situation. This is particularly true of the recognition of new hazards at the design stage, of detecting unsuspected problems during safety inspections or of coping with unforeseen emergencies. This level is predominant in the steps at the top of figure 1. It is the least predictable and least reliable mode of operation, but also the mode where no machine or computer can replace a human in detecting potential danger and in recovering from deviations.
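The three levels can be summarized as a simple decision rule. The sketch below is a rough illustration, not a definition from Rasmussen or Reason: the function `behaviour_level` and its two yes/no inputs are hypothetical simplifications, and the entries in `TYPICAL_FAILURES` merely condense the descriptions given above.

```python
from enum import Enum


class Level(Enum):
    SKILL = "skill-based"
    RULE = "rule-based"
    KNOWLEDGE = "knowledge-based"


def behaviour_level(practised_routine: bool, known_rule_applies: bool) -> Level:
    """Pick the level of processing a situation is likely to engage.

    Assumption: the situation can be described by two yes/no questions,
    which is far cruder than real behaviour.
    """
    if practised_routine:
        return Level.SKILL      # automatic response to a known signal
    if known_rule_applies:
        return Level.RULE       # choose among known routines or rules
    return Level.KNOWLEDGE      # no plan or procedure exists yet


# Typical failures per level, condensed from the descriptions above.
TYPICAL_FAILURES = {
    Level.SKILL: "slips and lapses when disturbed or captured by a similar routine",
    Level.RULE: "wrong diagnosis, or too little time spent matching rule to situation",
    Level.KNOWLEDGE: "least predictable and least reliable mode of operation",
}

# Hypothetical examples drawn from the text.
grinding = behaviour_level(practised_routine=True, known_rule_applies=True)
goggles = behaviour_level(practised_routine=False, known_rule_applies=True)
new_hazard = behaviour_level(practised_routine=False, known_rule_applies=False)
```

Keeping fingers clear of a grinding wheel maps to the skill-based level, selecting the correct goggles to the rule-based level, and recognizing a new hazard at the design stage to the knowledge-based level.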

Putting all the elements together results in figure 1, which provides a framework for both classifying where failures occurred in human behaviour in a past accident and analysing what can be done to optimize human behaviour in controlling danger in a given situation or task in advance of any accidents.