A system can be defined as a set of interdependent components combined in such a way as to perform a given function under specified conditions. A machine is a tangible and particularly clear-cut example of a system in this sense, but there are other systems, involving men and women on a team or in a workshop or factory, which are far more complex and not so easy to define. Safety suggests the absence of danger or risk of accident or harm. In order to avoid ambiguity, the general concept of an unwanted occurrence will be employed. Absolute safety, in the sense of the impossibility of a more or less unfortunate incident occurring, is not attainable; realistically one must aim for a very low, rather than a zero probability of unwanted occurrences.

A given system may be looked upon as safe or unsafe only with respect to the performance that is actually expected from it. With this in mind, the safety level of a system can be defined as follows: “For any given set of unwanted occurrences, the level of safety (or unsafeness) of a system is determined by the probability of these occurrences taking place over a given period of time”. Examples of unwanted occurrences that would be of interest in the present connection include: multiple fatalities, death of one or several persons, serious injury, slight injury, damage to the environment, harmful effects on living beings, destruction of plants or buildings, and major or limited material or equipment damage.
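As a worked illustration of this definition, here is one case that rests on an added assumption the text does not make. Suppose unwanted occurrences arrive independently at a constant rate λ (a Poisson process). The probability of at least one occurrence over a period T is then:

```latex
P(T) = 1 - e^{-\lambda T} \approx \lambda T \qquad (\lambda T \ll 1)
```

Take a hypothetical rate of λ = 10⁻⁴ per hour and T = 1000 h. This gives P = 1 − e^(−0.1) ≈ 0.095, close to the linear approximation λT = 0.1. The same rate over a longer period gives a higher probability, which is why the definition must specify the period of time.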

Purpose of System Safety Analysis

The object of a system safety analysis is to ascertain the factors which have a bearing on the probability of the unwanted occurrences, to study the way in which these occurrences take place and, ultimately, to develop preventive measures to reduce their probability.

The analytic phase of the problem can be divided into two main aspects:

- identification and description of the types of dysfunction or maladjustment

- identification of the sequences of dysfunctions that combine one with another (or with more “normal” occurrences) to lead ultimately to the unwanted occurrence itself, and the assessment of their likelihood.

Once the various dysfunctions and their consequences have been studied, the system safety analysts can direct their attention to preventive measures. The search for such measures builds directly on the earlier findings and follows the same two main aspects of the system safety analysis.

Methods of Analysis

System safety analysis may be conducted before or after the event (a priori or a posteriori); in both instances, the method used may be either direct or reverse. An a priori analysis takes place before the unwanted occurrence. The analyst takes a certain number of such occurrences and sets out to discover the various stages that may lead up to them. By contrast, an a posteriori analysis is carried out after the unwanted occurrence has taken place. Its purpose is to provide guidance for the future and, specifically, to draw any conclusions that may be useful for any subsequent a priori analyses.

Although it may seem that an a priori analysis would be very much more valuable than an a posteriori analysis, since it precedes the incident, the two are in fact complementary. Which method is used depends on the complexity of the system involved and on what is already known about the subject. In the case of tangible systems such as machines or industrial facilities, previous experience can usually serve in preparing a fairly detailed a priori analysis. However, even then the analysis is not necessarily infallible and is sure to benefit from a subsequent a posteriori analysis based essentially on a study of the incidents that occur in the course of operation. As to more complex systems involving persons, such as work shifts, workshops or factories, a posteriori analysis is even more important. In such cases, past experience is not always sufficient to permit detailed and reliable a priori analysis.

An a posteriori analysis may develop into an a priori analysis as the analyst goes beyond the single process that led up to the incident in question and starts to look into the various occurrences that could reasonably lead to such an incident or similar incidents.

Another way in which an a posteriori analysis can become an a priori analysis is when the emphasis is placed not on the occurrence (whose prevention is the main purpose of the current analysis) but on less serious incidents. These incidents, such as technical hitches, material damage and potential or minor accidents, of relatively little significance in themselves, can be identified as warning signs of more serious occurrences. In such cases, although carried out after the occurrence of minor incidents, the analysis will be an a priori analysis as regards more serious occurrences that have not yet taken place.

There are two possible methods of studying the mechanism or logic behind the sequence of two or more events:

- The direct, or inductive, method starts with the causes in order to predict their effects.

- The reverse, or deductive, method looks at the effects and works backwards to the causes.

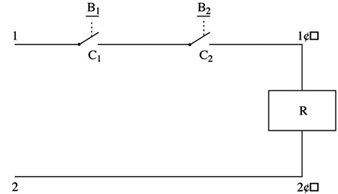

Figure 1 is a diagram of a control circuit requiring two buttons (B1 and B2) to be pressed simultaneously in order to activate the relay coil (R) and start the machine. This example may be used to illustrate, in practical terms, the direct and reverse methods used in system safety analysis.

Figure 1. Two-button control circuit

Direct method

In the direct method, the analyst begins by (1) listing faults, dysfunctions and maladjustments, (2) studying their effects and (3) determining whether or not those effects are a threat to safety. In the case of figure 1, the following faults may occur:

- a break in the wire between 2 and 2´

- unintentional contact at C1 (or C2) as a result of mechanical blocking

- accidental closing of B1 (or B2)

- short circuit between 1 and 1´.

The analyst can then deduce the consequences of these faults, and the findings can be set out in tabular form (table 1).

Table 1. Possible dysfunctions of a two-button control circuit and their consequences

| Faults | Consequences |
|---|---|
| Break in the wire between 2 and 2´ | Impossible to start the machine* |
| Accidental closing of B1 (or B2) | No immediate consequence |
| Contact at C1 (or C2) as a result of mechanical blocking | No immediate consequence but possibility of the machine being started by the simple pressing of button B2 (or B1)** |
| Short circuit between 1 and 1´ | Activation of relay coil R: accidental starting of the machine*** |

* Occurrence with a direct influence on the reliability of the system

** Occurrence responsible for a serious reduction in the safety level of the system

*** Dangerous occurrence to be avoided

See text and figure 1.

In table 1, consequences that are dangerous or liable to seriously reduce the safety level of the system are marked with conventional signs (*** for dangerous occurrences, ** for a serious reduction in the safety level).

Note: In table 1 a break in the wire between 2 and 2´ (shown in figure 1) results in an occurrence that is not considered dangerous. It has no direct effect on the safety of the system; however, the probability of such an incident occurring has a direct bearing on the reliability of the system.

The direct method is particularly appropriate for simulation. Figure 2 shows an analog simulator designed for studying the safety of press-control circuits. The simulation of the control circuit makes it possible to verify that, so long as there is no fault, the circuit is actually capable of ensuring the required function without infringing the safety criteria. In addition, the simulator can allow the analyst to introduce faults in the various components of the circuit, observe their consequences and thus distinguish those circuits that are properly designed (with few or no dangerous faults) from those which are poorly designed. This type of safety analysis may also be performed using a computer.
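The fault-injection idea can be sketched in a few lines of Python. The sketch rests on assumptions not stated in the text. Figure 1 is reduced to a Boolean model in which contacts C1 and C2 are in series with relay coil R. A short circuit between 1 and 1´ bypasses both contacts, and a break between 2 and 2´ opens the coil circuit. The function and parameter names are hypothetical. Each persistent fault is injected in turn and graded with the signs used in table 1.

```python
"""Hypothetical fault-injection sketch for the two-button circuit of figure 1."""


def relay_energised(b1: bool, b2: bool, *, c1_blocked: bool = False,
                    c2_blocked: bool = False, short_1_1: bool = False,
                    wire_2_2_broken: bool = False) -> bool:
    """Simplified Boolean model (an assumption, not the real wiring):
    C1 and C2 in series feed relay coil R, a short between 1 and 1'
    bypasses both contacts, a break between 2 and 2' opens the coil circuit."""
    if wire_2_2_broken:
        return False
    c1_closed = b1 or c1_blocked  # contact closed by the button or by blocking
    c2_closed = b2 or c2_blocked
    return short_1_1 or (c1_closed and c2_closed)


def classify(fault: dict) -> str:
    """Inject a persistent fault and grade it with the signs of table 1."""
    if relay_energised(False, False, **fault):
        return "***"  # machine starts with no button pressed
    if relay_energised(True, False, **fault) or relay_energised(False, True, **fault):
        return "**"   # one button alone is enough to start the machine
    if not relay_energised(True, True, **fault):
        return "*"    # the machine cannot be started at all
    return "no immediate consequence"


FAULTS = {
    "Break in the wire between 2 and 2'": {"wire_2_2_broken": True},
    "Contact at C1 as a result of mechanical blocking": {"c1_blocked": True},
    "Short circuit between 1 and 1'": {"short_1_1": True},
}

if __name__ == "__main__":
    for name, fault in FAULTS.items():
        print(f"{name}: {classify(fault)}")
    # Accidental closing of B1 is momentary, so only its immediate effect is checked.
    print("Accidental closing of B1:",
          "machine starts" if relay_energised(True, False) else "no immediate consequence")
```

Accidental closing of B1 is handled separately because it is a momentary event rather than a persistent fault. A real simulator, like the one in figure 2, would also model timing and the actual wiring.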

Figure 2. Simulator for the study of press-control circuits

Reverse method

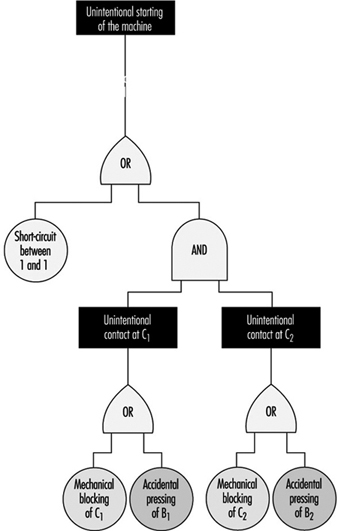

In the reverse method, the analyst works backwards from the undesirable occurrence, incident or accident, towards the various previous events to determine which may be capable of resulting in the occurrences to be avoided. In figure 1, the ultimate occurrence to be avoided would be the unintentional starting of the machine.

- The starting of the machine may be caused by an uncontrolled activation of the relay coil (R).

- The activation of the coil may, in turn, result from a short circuit between 1 and 1´ or from an unintentional and simultaneous closing of switches C1 and C2.

- Unintentional closing of C1 may be the consequence of a mechanical blocking of C1 or of the accidental pressing of B1. Similar reasoning applies to C2.

The findings of this analysis can be represented in a diagram which resembles a tree (for this reason the reverse method is known as “fault tree analysis”), such as depicted in figure 3.
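The tree in figure 3 can also be processed mechanically. The sketch below assumes the tree has exactly the structure given in the three steps above. The event names are illustrative shorthand, not labels taken from the figure. It expands the OR and AND gates from the top down and lists the minimal cut sets, that is, the smallest combinations of basic events sufficient to cause the unintentional start.

```python
from itertools import product

# Leaves are basic events (strings); gates are ("OR", [...]) or ("AND", [...]).
# Event names are illustrative shorthand for the causes listed in the text.
TREE = ("OR", [
    "short circuit between 1 and 1'",
    ("AND", [
        ("OR", ["C1 mechanically blocked", "B1 pressed accidentally"]),
        ("OR", ["C2 mechanically blocked", "B2 pressed accidentally"]),
    ]),
])


def minimise(sets):
    """Drop any cut set that strictly contains another one."""
    return {s for s in sets if not any(other < s for other in sets)}


def cut_sets(node):
    """Return the minimal cut sets of a fault tree by top-down expansion."""
    if isinstance(node, str):
        return {frozenset([node])}
    gate, children = node
    child_sets = [cut_sets(child) for child in children]
    if gate == "OR":
        # Any cut set of any input is enough to trigger an OR gate.
        combined = set().union(*child_sets)
    elif gate == "AND":
        # One cut set from every input is needed, so take all combinations.
        combined = {frozenset().union(*combo) for combo in product(*child_sets)}
    else:
        raise ValueError(f"unknown gate {gate!r}")
    return minimise(combined)


if __name__ == "__main__":
    for cs in sorted(cut_sets(TREE), key=lambda s: (len(s), sorted(s))):
        print(" AND ".join(sorted(cs)))
```

Only the short circuit forms a single-event cut set. Every other path requires two events to coincide. For instance, a blocked C1 combined with a press of B2 corresponds to the ** row of table 1.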

Figure 3. Possible chain of events

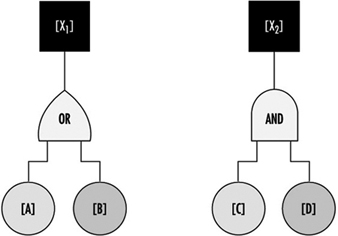

The diagram follows logical operations, the most important of which are the “OR” and “AND” operations. The “OR” operation signifies that [X1] will occur if either [A] or [B] (or both) take place. The “AND” operation signifies that before [X2] can occur, both [C] and [D] must have taken place (see figure 4).
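The two operations also give the probability of the output event. Here we add an assumption the text does not make: the input events of each gate are statistically independent. Under that assumption, the gate probabilities are:

```latex
\begin{aligned}
P(X_1) &= 1 - \bigl(1 - P(A)\bigr)\bigl(1 - P(B)\bigr) = P(A) + P(B) - P(A)\,P(B) \\
P(X_2) &= P(C)\,P(D)
\end{aligned}
```

With a hypothetical probability of 10⁻² for every input, the OR gate gives 0.0199 while the AND gate gives 10⁻⁴. This is why requiring two independent events, such as the simultaneous closing of C1 and C2, lowers the probability of the output event. In practice, common causes can make inputs dependent, so these formulas are only an approximation.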

Figure 4. Representation of two logical operations

The reverse method is very often used in a priori analysis of tangible systems, especially in the chemical, aeronautical, space and nuclear industries. It has also been found extremely useful as a method to investigate industrial accidents.

Although they are very different, the direct and reverse methods are complementary. The direct method starts from a set of faults or dysfunctions, so the value of such an analysis depends largely on the relevance of the dysfunctions taken into account at the start. Seen in this light, the reverse method seems to be more systematic: given knowledge of what types of accidents or incidents may happen, the analyst can in theory apply it to work back towards all the dysfunctions or combinations of dysfunctions capable of bringing them about. However, not all the dangerous behaviours of a system are necessarily known in advance; those that are not can be discovered by the direct method, applied by simulation, for example. Once they have been discovered, the hazards can be analysed in greater detail by the reverse method.

Problems of System Safety Analysis

The analytical methods described above are not just mechanical processes which need only to be applied automatically in order to reach useful conclusions for improving system safety. On the contrary, analysts encounter a number of problems in the course of their work, and the usefulness of their analyses will depend largely on how they set about solving them. Some of the typical problems that may arise are described below.

Understanding the system to be studied and its operating conditions

The fundamental problems in any system safety analysis are the definition of the system to be studied, its boundaries and the conditions under which it is supposed to operate throughout its existence.

If the analyst takes into account a subsystem that is too limited, the result may be the adoption of a series of random preventive measures (a situation in which everything is geared to preventing certain particular types of occurrence, while equally serious hazards are ignored or underestimated). If, on the other hand, the system considered is too comprehensive or general in relation to a given problem, it may result in excessive vagueness of concept and responsibilities, and the analysis may not lead to the adoption of appropriate preventive measures.

A typical example which illustrates the problem of defining the system to be studied is the safety of industrial machines or plant. In this kind of situation, the analyst may be tempted to consider only the actual equipment, overlooking the fact that it has to be operated or controlled by one or more persons. Simplification of this kind is sometimes valid. However, what has to be analysed is not just the machine subsystem but the entire worker-plus-machine system in the various stages of the life of the equipment (including, for example, transport and handling, assembly, testing and adjusting, normal operation, maintenance, disassembly and, in some cases, destruction). At each stage the machine is part of a specific system whose purpose and modes of functioning and malfunctioning are totally different from those of the system at other stages. It must therefore be designed and manufactured in such a way as to permit the performance of the required function under good safety conditions at each of the stages.

More generally, as regards safety studies in firms, there are several system levels: the machine, workstation, shift, department, factory and the firm as a whole. Depending on which system level is being considered, the possible types of dysfunction—and the relevant preventive measures—are quite different. A good prevention policy must make allowance for the dysfunctions that may occur at various levels.

The operating conditions of the system may be defined in terms of the way in which the system is supposed to function, and the environmental conditions to which it may be subject. This definition must be realistic enough to allow for the actual conditions in which the system is likely to operate. A system that is very safe only in a very restricted operating range may not be so safe if the user is unable to keep within the theoretical operating range prescribed. A safe system must thus be robust enough to withstand reasonable variations in the conditions in which it functions, and must tolerate certain simple but foreseeable errors on the part of the operators.

System modelling

It is often necessary to develop a model in order to analyse the safety of a system. This may raise certain problems which are worth examining.

For a compact and relatively simple system such as a conventional machine, the model can be derived almost directly from the descriptions of the material components and their functions (motors, transmission, etc.) and the way in which these components are interrelated. The number of possible component failure modes is similarly limited.

Modern machines such as computers and robots, which contain complex components like microprocessors and electronic circuits with very large-scale integration, pose a special problem. This problem has not been fully resolved in terms either of modelling or of predicting the different possible failure modes, because there are so many elementary transistors in each chip and because of the use of diverse kinds of software.

When the system to be analysed is a human organization, an interesting problem encountered in modelling lies in the choice and definition of certain non-material or not fully material components. A particular workstation may be represented, for example, by a system comprising workers, software, tasks, machines, materials and environment. (The “task” component may prove difficult to define, for it is not the prescribed task that counts but the task as it is actually performed.)

When modelling human organizations, the analyst may opt to break down the system under consideration into an information subsystem and one or more action subsystems. Analysis of failures at different stages of the information subsystem (information acquisition, transmission, processing and use) can be highly instructive.

Problems associated with multiple levels of analysis

Problems associated with multiple levels of analysis often arise because, starting from an unwanted occurrence, the analyst may work back towards incidents that are more and more remote in time. Depending on the level of analysis considered, the nature of the dysfunctions that occur varies, and so do the preventive measures. It is important to be able to decide at what level the analysis should be stopped and at what level preventive action should be taken. Consider the simple case of an accident resulting from a mechanical failure caused by the repeated utilization of a machine under abnormal conditions. This abnormal utilization may in turn have been caused by a lack of operator training or by poor organization of work. Depending on the level of analysis considered, the preventive action required may be the replacement of the machine by another machine capable of withstanding more severe conditions of use, the use of the machine only under normal conditions, changes in personnel training, or a reorganization of work.

The effectiveness and scope of a preventive measure depend on the level at which it is introduced. Preventive action in the immediate vicinity of the unwanted occurrence is more likely to have a direct and rapid impact, but its effects may be limited; on the other hand, by working backwards to a reasonable extent in the analysis of events, it should be possible to find types of dysfunction that are common to numerous accidents. Any preventive action taken at this level will be much wider in scope, but its effectiveness may be less direct.

Bearing in mind that there are several levels of analysis, there may also be numerous patterns of preventive action, each of which carries its own share of the work of prevention. This is an extremely important point, and one need only return to the example of the accident presently under consideration to appreciate the fact. Proposing that the machine be replaced by another machine capable of withstanding more severe conditions of use places the onus of prevention on the machine. Deciding that the machine should be used only under normal conditions means placing the onus on the user. In the same way, the onus may be placed on personnel training, organization of work or simultaneously on the machine, the user, the training function and the organization function.

For any given level of analysis, an accident often appears to be the consequence of the combination of several dysfunctions or maladjustments. Depending on whether action is taken on one dysfunction or another, or on several simultaneously, the pattern of preventive action adopted will vary.
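One way to make this concrete is to treat each combination of dysfunctions that leads to the accident as a set. The task is then to find every minimal group of dysfunctions on which preventive action would break all the combinations (a minimal hitting set). The sketch below uses a hypothetical reading of the machine example given earlier in this section. The mechanical failure requires both a machine unable to withstand abnormal use and the abnormal use itself. The abnormal use stems from a lack of operator training or from poor organization of work. The event labels and the brute-force search are illustrative assumptions, suitable only for small lists.

```python
from itertools import combinations


def minimal_action_sets(causal_combinations):
    """Return every minimal set of dysfunctions that intersects all the
    combinations, i.e. acting on each member blocks every causal path.
    Brute force over subsets in increasing size: fine for small examples."""
    events = sorted(set().union(*causal_combinations))
    found = []
    for size in range(1, len(events) + 1):
        for candidate in combinations(events, size):
            chosen = set(candidate)
            if any(previous <= chosen for previous in found):
                continue  # a smaller action set already covers this one
            if all(chosen & combo for combo in causal_combinations):
                found.append(chosen)
    return found


# Hypothetical combinations, each sufficient on its own to cause the accident.
ACCIDENT_COMBINATIONS = [
    {"machine unable to withstand abnormal use", "lack of operator training"},
    {"machine unable to withstand abnormal use", "poor organization of work"},
]

if __name__ == "__main__":
    for actions in minimal_action_sets(ACCIDENT_COMBINATIONS):
        print(sorted(actions))
```

The two results correspond to two of the patterns of preventive action discussed above. The first places the onus on the machine alone. The second acts on training and work organization together.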