Equilibrium

Balance System Function

Input

Perception and control of the orientation and motion of the body in space are achieved by a system that draws simultaneously on input from three sources: vision; the vestibular organ in the inner ear; and sensors in the muscles, joints and skin that provide somatosensory or “proprioceptive” information about movement of the body and physical contact with the environment (figure 1). The combined input is integrated in the central nervous system, which generates appropriate actions to restore and maintain balance, coordination and well-being. A failure of compensation in any part of the system may produce unease, dizziness and unsteadiness, with resulting symptoms and/or falls.

Figure 1. An outline of the principal elements of the balance system

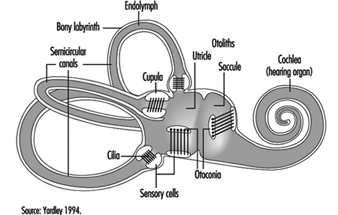

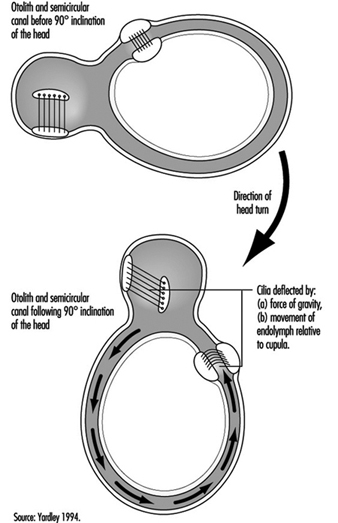

The vestibular system directly registers the orientation and movement of the head. The vestibular labyrinth is a tiny bony structure located in the inner ear, and comprises the semicircular canals, which are filled with fluid (endolymph), and the otoliths (figure 2). The three semicircular canals are positioned at right angles to one another so that angular acceleration can be detected in each of the three possible planes of angular motion. During head turns, the relative movement of the endolymph within the canals (caused by inertia) deflects the cilia projecting from the sensory cells, inducing a change in the neural signal from these cells (figure 2). The otoliths contain heavy crystals (otoconia) which respond to changes in the position of the head relative to the force of gravity and to linear acceleration or deceleration, again bending the cilia and so altering the signal from the sensory cells to which they are attached.
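Why three perpendicular canals suffice can be illustrated with an idealized model. The sketch below assumes (a simplification: real canals are only approximately orthogonal, and the anterior and posterior canals are not aligned with the head's front-back and side-to-side axes) that each canal responds only to the component of head angular acceleration along the axis perpendicular to its plane. Under that assumption, three mutually perpendicular canals together capture any rotation. The axis vectors and function names are hypothetical.

```python
# Idealized model (an assumption, not anatomy): each canal responds only to the
# component of head angular acceleration along the axis normal to its plane.
CANAL_AXES = {
    "horizontal": (0.0, 0.0, 1.0),  # vertical axis: turning the head side to side
    "anterior": (1.0, 0.0, 0.0),    # first horizontal axis (idealized)
    "posterior": (0.0, 1.0, 0.0),   # second horizontal axis (idealized)
}


def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def canal_signals(angular_acceleration):
    """Project a head angular-acceleration vector (rad/s^2) onto each canal axis."""
    return {name: dot(angular_acceleration, axis) for name, axis in CANAL_AXES.items()}


def reconstruct(signals):
    """With mutually perpendicular unit axes, the three signals recover the full vector."""
    return tuple(
        sum(signals[name] * CANAL_AXES[name][i] for name in CANAL_AXES)
        for i in range(3)
    )


omega_dot = (0.3, -1.2, 2.0)  # an arbitrary combined head rotation
signals = canal_signals(omega_dot)
print(signals)
print(reconstruct(signals))
```

A pure side-to-side head turn excites only the idealized horizontal canal, while a combined rotation is shared among all three and can still be recovered from their signals.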

Figure 2. Schematic diagram of the vestibular labyrinth.

Figure 3. Schematic representation of the biomechanical effects of a ninety-degree (forward) inclination of the head.

Integration

The central interconnections within the balance system are extremely complex; information from the vestibular organs in both ears is combined with information derived from vision and the somatosensory system at various levels within the brainstem, cerebellum and cortex (Luxon 1984).

Output

This integrated information provides the basis not only for the conscious perception of orientation and self-motion, but also for the preconscious control of eye movements and posture, by means of what are known as the vestibuloocular and vestibulospinal reflexes. The purpose of the vestibuloocular reflex is to maintain a stable point of visual fixation during head movement by automatically compensating for the head movement with an equivalent eye movement in the opposite direction (Howard 1982). The vestibulospinal reflexes contribute to postural stability and balance (Pompeiano and Allum 1988).
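The compensation performed by the vestibuloocular reflex can be expressed as simple arithmetic: the velocity of the line of sight in space (gaze velocity) is head velocity plus eye-in-head velocity. The minimal sketch below assumes a hypothetical `vor_gain` parameter (eye velocity divided by head velocity, ideally 1) and shows how any departure from ideal compensation leaves residual image motion on the retina (retinal slip).

```python
def eye_velocity(head_velocity_deg_s, vor_gain=1.0):
    """Eye-in-head velocity from an idealized VOR: opposite in sign to head velocity."""
    return -vor_gain * head_velocity_deg_s


def retinal_slip(head_velocity_deg_s, vor_gain=1.0):
    """Gaze velocity in space = head velocity + eye-in-head velocity.

    Zero means the point of fixation stays stable on the retina.
    """
    return head_velocity_deg_s + eye_velocity(head_velocity_deg_s, vor_gain)


for gain in (1.0, 0.8, 0.5):
    print(f"gain {gain}: slip {retinal_slip(100.0, gain):.1f} deg/s for a 100 deg/s head turn")
```

With a gain of 1 the slip is zero; lower gains leave a residual image motion proportional to head velocity, one way to picture how disrupted reflex control of eye movements can blur the visual image.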

Balance System Dysfunction

In normal circumstances, the input from the vestibular, visual and somatosensory systems is congruent, but if an apparent mismatch occurs between the different sensory inputs to the balance system, the result is a subjective sensation of dizziness, disorientation, or an illusory sense of movement. If the dizziness is prolonged or severe it will be accompanied by secondary symptoms such as nausea, cold sweating, pallor, fatigue, and even vomiting. Disruption of reflex control of eye movements and posture may result in a blurred or flickering visual image, a tendency to veer to one side when walking, or staggering and falling. The medical term for the disorientation caused by balance system dysfunction is “vertigo,” which can be caused by a disorder of any of the sensory systems contributing to balance or by faulty central integration. Only 1 or 2% of the population consult their doctor each year on account of vertigo, but the incidence of dizziness and imbalance rises steeply with age. “Motion sickness” is a form of disorientation induced by artificial environmental conditions that our balance system has not evolved to cope with, such as passive transport by car or boat (Crampton 1990).

Vestibular causes of vertigo

The most common causes of vestibular dysfunction are infection (vestibular labyrinthitis or neuronitis) and benign paroxysmal positional vertigo (BPPV), which is triggered principally by lying on one side. Recurrent attacks of severe vertigo accompanied by loss of hearing and noises (tinnitus) in one ear are typical of a syndrome known as Menière’s disease. Vestibular damage can also result from disorders of the middle ear (including bacterial disease, trauma and cholesteatoma), ototoxic drugs (which should be used only in medical emergencies), and head injury.

Non-vestibular peripheral causes of vertigo

Disorders of the neck, which may alter the somatosensory information relating to head movement or interfere with the blood-supply to the vestibular system, are believed by many clinicians to be a cause of vertigo. Common aetiologies include whiplash injury and arthritis. Sometimes unsteadiness is related to a loss of feeling in the feet and legs, which may be caused by diabetes, alcohol abuse, vitamin deficiency, damage to the spinal cord, or a number of other disorders. Occasionally the origin of feelings of giddiness or illusory movement of the environment can be traced to some distortion of the visual input. An abnormal visual input may be caused by weakness of the eye muscles, or may be experienced when adjusting to powerful lenses or to bifocal glasses.

Central causes of vertigo

Although most cases of vertigo are attributable to peripheral (mainly vestibular) pathology, symptoms of disorientation can be caused by damage to the brainstem, cerebellum or cortex. Vertigo due to central dysfunction is almost always accompanied by some other symptom of central neurological disorder, such as sensations of pain, tingling or numbness in the face or limbs, difficulty speaking or swallowing, headache, visual disturbances, and loss of motor control or loss of consciousness. The more common central causes of vertigo include disorders of the blood supply to the brain (ranging from migraine to strokes), epilepsy, multiple sclerosis, alcoholism, and occasionally tumours. Temporary dizziness and imbalance are potential side-effects of a vast array of drugs, including widely-used analgesics, contraceptives, and drugs used in the control of cardiovascular disease, diabetes and Parkinson’s disease, and in particular the centrally-acting drugs such as stimulants, sedatives, anti-convulsants, anti-depressants and tranquillizers (Ballantyne and Ajodhia 1984).

Diagnosis and treatment

All cases of vertigo require medical attention in order to ensure that the (relatively uncommon) dangerous conditions which can cause vertigo are detected and appropriate treatment is given. Medication can be given to relieve symptoms of acute vertigo in the short term, and in rare cases surgery may be required. However, if the vertigo is caused by a vestibular disorder the symptoms will generally subside over time as the central integrators adapt to the altered pattern of vestibular input—in the same way that sailors continuously exposed to the motion of waves gradually acquire their “sea legs”. For this to occur, it is essential to continue to make vigorous movements which stimulate the balance system, even though these will at first cause dizziness and discomfort. Since the symptoms of vertigo are frightening and embarrassing, sufferers may need physiotherapy and psychological support to combat the natural tendency to restrict their activities (Beyts 1987; Yardley 1994).

Vertigo in the Workplace

Risk factors

Dizziness and disorientation, which may become chronic, are common symptoms in workers exposed to organic solvents; furthermore, long-term exposure can result in objective signs of balance system dysfunction (e.g., abnormal vestibular-ocular reflex control) even in people who experience no subjective dizziness (Gyntelberg et al. 1986; Möller et al. 1990). Changes in pressure encountered when flying or diving can damage the vestibular organ, resulting in sudden vertigo and hearing loss that require immediate treatment (Head 1984). There is some evidence that noise-induced hearing loss can be accompanied by damage to the vestibular organs (van Dijk 1986). People who work for long periods at computer screens sometimes complain of dizziness; the cause of this remains unclear, although it may be related to the combination of a stiff neck and moving visual input.

Occupational difficulties

Unexpected attacks of vertigo, such as occur in Menière’s disease, can cause problems for people whose work involves heights, driving, handling dangerous machinery, or responsibility for the safety of others. An increased susceptibility to motion sickness is a common effect of balance system dysfunction and may interfere with travel.

Conclusion

Equilibrium is maintained by a complex multisensory system, and so disorientation and imbalance can result from a wide variety of aetiologies, in particular any condition which affects the vestibular system or the central integration of perceptual information for orientation. In the absence of central neurological damage the plasticity of the balance system will normally enable the individual to adapt to peripheral causes of disorientation, whether these are disorders of the inner ear which alter vestibular function, or environments which provoke motion sickness. However, attacks of dizziness are often unpredictable, alarming and disabling, and rehabilitation may be necessary to restore confidence and assist the balance function.

Physically-Induced Hearing Disorders

By virtue of its position within the skull, the auditory system is generally well protected against injuries from external physical forces. There are, however, a number of physical workplace hazards that may affect it. They include:

Barotrauma. Sudden variation in barometric pressure (due to rapid underwater descent or ascent, or sudden aircraft descent) associated with malfunction of the Eustachian tube (failure to equalize pressure) may lead to rupture of the tympanic membrane with pain and haemorrhage into the middle and external ears. In less severe cases stretching of the membrane will cause mild to severe pain. There will be a temporary impairment of hearing (conductive loss), but generally the trauma has a benign course with complete functional recovery.

Vibration. Simultaneous exposure to vibration and noise (continuous or impact) does not increase the risk or severity of sensorineural hearing loss; however, the rate of onset appears to be increased in workers with hand-arm vibration syndrome (HAVS). The cochlear circulation is presumed to be affected by reflex sympathetic spasm, when such workers have bouts of vasospasm (Raynaud’s phenomenon) in their fingers or toes.

Infrasound and ultrasound. The acoustic energy from both of these sources is normally inaudible to humans. The common sources of ultrasound, for example jet engines, high-speed dental drills, and ultrasonic cleaners and mixers, all emit audible sound as well, so the effects of ultrasound on exposed subjects are not easily discernible. Ultrasound is presumed to be harmless below 120 dB and therefore unlikely to cause noise-induced hearing loss (NIHL). Likewise, infrasound (low-frequency noise) is relatively safe, but at high intensity (119-144 dB) hearing loss may occur.

“Welder’s ear”. Hot sparks may penetrate the external auditory canal to the level of the tympanic membrane, burning it. This causes acute ear pain and sometimes facial nerve paralysis. With minor burns, the condition requires no treatment, while in more severe cases, surgical repair of the membrane may be necessary. The risk may be avoided by correct positioning of the welder’s helmet or by wearing ear plugs.

Chemically-Induced Hearing Disorders

Hearing impairment due to the cochlear toxicity of several drugs is well documented (Ryback 1993). Until the last decade, however, little attention had been paid to the audiological effects of industrial chemicals. Recent research on chemically-induced hearing disorders has focused on solvents, heavy metals and chemicals inducing anoxia.

Solvents. In studies with rodents, a permanent decrease in auditory sensitivity to high-frequency tones has been demonstrated following weeks of high-level exposure to toluene. Histopathological and auditory brainstem response studies have indicated a major effect on the cochlea with damage to the outer hair cells. Similar effects have been found in exposure to styrene, xylenes or trichloroethylene. Carbon disulphide and n-hexane may also affect auditory functions while their major effect seems to be on more central pathways (Johnson and Nylén 1995).

Several human cases with damage to the auditory system together with severe neurologic abnormalities have been reported following solvent sniffing. In case series of persons with occupational exposure to solvent mixtures, to n-hexane or to carbon disulphide, both cochlear and central effects on auditory functions have been reported. Exposure to noise was prevalent in these groups, but the effect on hearing has been considered greater than expected from noise.

Only a few controlled studies have so far addressed the problem of hearing impairment in humans exposed to solvents without significant noise exposure. In a Danish study, a statistically significantly elevated risk of self-reported hearing impairment (1.4; 95% CI: 1.1-1.9) was found after exposure to solvents for five years or more. In a group exposed to both solvents and noise, no additional effect from solvent exposure was found. Good agreement between reported hearing problems and audiometric criteria for hearing impairment was found in a subsample of the study population (Jacobsen et al. 1993).
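The claim of statistical significance can be read directly off the interval. Ratio estimates of this kind are usually given confidence intervals computed on a log scale; assuming that was done here (the text does not say), the point estimate is close to the geometric mean of the limits and the standard error can be backed out. An interval whose lower limit exceeds 1 excludes "no effect". The function name is illustrative.

```python
import math


def summarize_ratio_ci(lower, upper, z=1.96):
    """Back out point estimate and log-scale SE from a 95% CI for a ratio measure.

    Assumes the interval was computed symmetrically on the log scale.
    """
    log_lo, log_hi = math.log(lower), math.log(upper)
    point = math.exp((log_lo + log_hi) / 2)  # geometric mean of the two limits
    se_log = (log_hi - log_lo) / (2 * z)     # standard error of log(ratio)
    excludes_null = lower > 1 or upper < 1   # does the interval exclude 1?
    return point, se_log, excludes_null


point, se, significant = summarize_ratio_ci(1.1, 1.9)
print(f"point ~ {point:.2f}, SE(log) ~ {se:.3f}, excludes 1: {significant}")
```

The reconstructed point estimate (about 1.45) is consistent with the reported 1.4 after rounding, and because the whole interval lies above 1 the elevated risk counts as statistically significant at the 5% level.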

In a Dutch study of styrene-exposed workers a dose-dependent difference in hearing thresholds was found by audiometry (Muijser et al. 1988).

In another study from Brazil the audiologic effect from exposure to noise, toluene combined with noise, and mixed solvents was examined in workers in printing and paint manufacturing industries. Compared to an unexposed control group, significantly elevated risks for audiometric high frequency hearing loss were found for all three exposure groups. For noise and mixed solvent exposures the relative risks were 4 and 5 respectively. In the group with combined toluene and noise exposure a relative risk of 11 was found, suggesting interaction between the two exposures (Morata et al. 1993).
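The phrase "suggesting interaction" can be made concrete. One standard measure on the additive scale is the relative excess risk due to interaction, RERI = RR(both) - RR(A alone) - RR(B alone) + 1, where values above 0 indicate a joint effect larger than the sum of the separate effects; on the multiplicative scale, the joint RR is compared with the product of the single-exposure RRs. The results summarized above include no toluene-only group, so the value used for toluene alone in this sketch is purely hypothetical: the code illustrates the calculation and says nothing about the study's actual findings.

```python
def reri(rr_joint, rr_a, rr_b):
    """Relative excess risk due to interaction (additive scale); > 0 suggests synergy."""
    return rr_joint - rr_a - rr_b + 1


def multiplicative_ratio(rr_joint, rr_a, rr_b):
    """Observed joint RR divided by the product expected with no multiplicative interaction."""
    return rr_joint / (rr_a * rr_b)


RR_NOISE = 4.0           # reported above for noise alone
RR_TOLUENE_NOISE = 11.0  # reported above for combined toluene and noise
RR_TOLUENE_ALONE = 2.0   # HYPOTHETICAL value, not reported in the text

print(reri(RR_TOLUENE_NOISE, RR_NOISE, RR_TOLUENE_ALONE))
print(multiplicative_ratio(RR_TOLUENE_NOISE, RR_NOISE, RR_TOLUENE_ALONE))
```

With these inputs the RERI is 6.0 and the multiplicative ratio 1.375; a different assumed toluene-only risk would change both, which is why a toluene-only comparison group matters for interpreting interaction.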

Metals. The effect of lead on hearing has been studied in surveys of children and teenagers in the United States. A significant dose-response association between blood lead and hearing thresholds at frequencies from 0.5 to 4 kHz was found after controlling for several potential confounders. The effect of lead was present across the entire range of exposure and could be detected at blood lead levels below 10 μg/100 ml. In children without clinical signs of lead toxicity, a linear relationship has been found between blood lead and the latencies of waves III and V of the brainstem auditory evoked potentials (BAEP), indicating a site of action central to the cochlear nucleus (Otto et al. 1985).

Hearing loss is described as a common part of the clinical picture in acute and chronic methyl-mercury poisoning. Both cochlear and postcochlear lesions have been involved (Oyanagi et al. 1989). Inorganic mercury may also affect the auditory system, probably through damage to cochlear structures.

Exposure to inorganic arsenic has been implicated in hearing disorders in children. A high frequency of severe hearing loss (>30 dB) has been observed in children fed powdered milk contaminated with pentavalent inorganic arsenic (arsenic V). In a study from Czechoslovakia, environmental exposure to arsenic from a coal-burning power plant was associated with audiometric hearing loss in ten-year-old children. In animal experiments, inorganic arsenic compounds have produced extensive cochlear damage (WHO 1981).

In acute trimethyltin poisoning, hearing loss and tinnitus have been early symptoms. Audiometry has shown pancochlear hearing loss between 15 and 30 dB at presentation. It is not clear whether the abnormalities have been reversible (Besser et al. 1987). In animal experiments, trimethyltin and triethyltin compounds have produced partly reversible cochlear damage (Clerisi et al. 1991).

Asphyxiants. In reports on acute human poisoning by carbon monoxide or hydrogen sulphide, hearing disorders have often been noted along with central nervous system disease (Ryback 1992).

In experiments with rodents, exposure to carbon monoxide had a synergistic effect with noise on auditory thresholds and cochlear structures. No effect was observed after exposure to carbon monoxide alone (Fechter et al. 1988).

Summary

Experimental studies have documented that several solvents can produce hearing disorders under certain exposure circumstances. Studies in humans have indicated that the effect may be present following exposures that are common in the occupational environment. Synergistic effects between noise and chemicals have been observed in some human and experimental animal studies. Some heavy metals may affect hearing, most of them only at exposure levels that produce overt systemic toxicity. For lead, minor effects on hearing thresholds have been observed at exposures far below occupational exposure levels. A specific ototoxic effect from asphyxiants has not been documented at present although carbon monoxide may enhance the audiological effect of noise.

The Ear

Anatomy

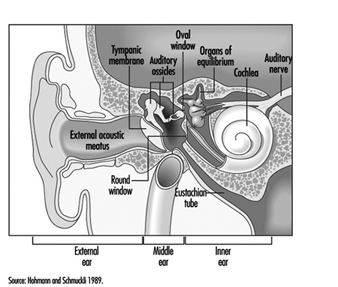

The ear is the sensory organ responsible for hearing and the maintenance of equilibrium, via the detection of body position and of head movement. It is composed of three parts: the outer, middle, and inner ear; the outer ear lies outside the skull, while the other two parts are embedded in the temporal bone (figure 1).

Figure 1. Diagram of the ear.

The outer ear consists of the auricle, a cartilaginous skin-covered structure, and the external auditory canal, an irregularly-shaped cylinder approximately 25 mm long which is lined by glands secreting wax.

The middle ear consists of the tympanic cavity, an air-filled cavity whose outer wall is formed by the tympanic membrane (eardrum). It communicates with the nasopharynx via the Eustachian tube, which maintains pressure equilibrium on either side of the tympanic membrane. This communication explains, for instance, how swallowing equalizes pressure and restores hearing acuity lost through a rapid change in barometric pressure (e.g., in landing aircraft or fast elevators). The tympanic cavity also contains the ossicles (the malleus, incus and stapes), which are controlled by the stapedius and tensor tympani muscles. The tympanic membrane is linked to the inner ear by the ossicles, specifically by the mobile foot of the stapes, which lies against the oval window.

The inner ear contains the sensory apparatus per se. It consists of a bony shell (the bony labyrinth) within which is found the membranous labyrinth—a series of cavities forming a closed system filled with endolymph, a potassium-rich liquid. The membranous labyrinth is separated from the bony labyrinth by the perilymph, a sodium-rich liquid.

The bony labyrinth itself is composed of two parts. The anterior portion is known as the cochlea and is the actual organ of hearing. It has a spiral shape reminiscent of a snail shell, and is pointed in the anterior direction. The posterior portion of the bony labyrinth contains the vestibule and the semicircular canals, and is responsible for equilibrium. The neurosensory structures involved in hearing and equilibrium are located in the membranous labyrinth: the organ of Corti is located in the cochlear canal, while the maculae of the utricle and the saccule and the ampullae of the semicircular canals are located in the posterior section.

Hearing organs

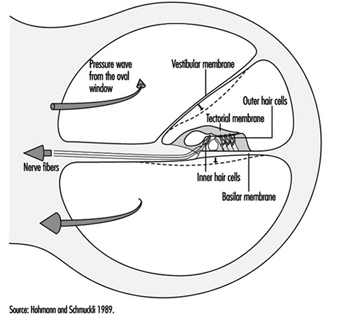

The cochlear canal is a spiral tube, triangular in cross-section and comprising two and one-half turns, which separates the scala vestibuli from the scala tympani. One edge is attached to the osseous spiral lamina, a process of the cochlea’s central column, while the other is connected to the bony outer wall of the cochlea by the spiral ligament.

The scala vestibuli and tympani end in the oval window (the foot of the stapes) and round window, respectively. The two chambers communicate through the helicotrema, the tip of the cochlea. The basilar membrane forms the inferior surface of the cochlear canal, and supports the organ of Corti, responsible for the transduction of acoustic stimuli. All auditory information is transduced by only 15,000 hair cells (organ of Corti), of which the so-called inner hair cells, numbering 3,500, are critically important, since they form synapses with approximately 90% of the 30,000 primary auditory neurons (figure 2). The inner and outer hair cells are separated from each other by an abundant layer of support cells. Traversing an extraordinarily thin membrane, the cilia of the hair cells are embedded in the tectorial membrane, whose free end is located above the cells. The superior surface of the cochlear canal is formed by Reissner’s membrane.
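The figures in this paragraph imply a striking asymmetry in innervation. The back-of-envelope calculation below uses only the numbers quoted above, plus one assumption (that the remaining 10% of primary auditory neurons serve the outer hair cells), to estimate the average number of afferent neurons per hair cell of each type.

```python
TOTAL_HAIR_CELLS = 15_000
INNER_HAIR_CELLS = 3_500
PRIMARY_NEURONS = 30_000
SHARE_TO_INNER = 0.90  # ~90% of primary auditory neurons synapse with inner hair cells

outer_hair_cells = TOTAL_HAIR_CELLS - INNER_HAIR_CELLS  # 11,500
neurons_to_inner = PRIMARY_NEURONS * SHARE_TO_INNER     # 27,000
neurons_to_outer = PRIMARY_NEURONS - neurons_to_inner   # ~3,000 (assumed remainder)

print(f"afferent neurons per inner hair cell: {neurons_to_inner / INNER_HAIR_CELLS:.1f}")
print(f"afferent neurons per outer hair cell: {neurons_to_outer / outer_hair_cells:.2f}")
```

Each inner hair cell is served by roughly eight afferent neurons, compared with about one neuron for every four outer hair cells, which is why the inner hair cells are described as critically important for transmitting auditory information.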

Figure 2. Cross-section of one loop of the cochlea. Diameter: approximately 1.5 mm.

The bodies of the cochlear sensory cells resting on the basilar membrane are surrounded by nerve terminals, whose approximately 30,000 axons form the cochlear nerve. The cochlear nerve crosses the internal auditory canal and extends to the central structures of the brainstem, the oldest part of the brain. The auditory fibres end their tortuous path in the temporal lobe, the part of the cerebral cortex responsible for the perception of acoustic stimuli.

Organs of Equilibrium

The sensory cells are located in the ampullae of the semicircular canals and the maculae of the utricle and saccule, and are stimulated by pressure transmitted through the endolymph as a result of head or body movements. The cells connect with bipolar cells whose peripheral processes form two tracts, one from the anterior and external semicircular canals, the other from the posterior semicircular canal. These two tracts enter the internal auditory canal and unite to form the vestibular nerve, which extends to the vestibular nuclei in the brainstem. Fibres from the vestibular nuclei, in turn, extend to cerebellar centres controlling eye movements, and to the spinal cord.

The union of the vestibular and cochlear nerves forms the 8th cranial nerve, also known as the vestibulocochlear nerve.

Physiology of Hearing

Sound conduction through air

The ear is composed of a sound conductor (the outer and middle ear) and a sound receptor (the inner ear).

Sound waves passing through the external auditory canal strike the tympanic membrane, causing it to vibrate. This vibration is transmitted to the stapes through the malleus (hammer) and incus (anvil). The surface area of the tympanic membrane is almost 16 times that of the foot of the stapes (55 mm²/3.5 mm²), and this, in combination with the lever mechanism of the ossicles, results in a 22-fold amplification of the sound pressure. Because of the middle ear’s resonant frequency, the transmission ratio is optimal between 1,000 and 2,000 Hz. As the foot of the stapes moves, it causes waves to form in the liquid within the scala vestibuli. Since the liquid is incompressible, each inward movement of the foot of the stapes causes an equivalent outward movement of the round window, towards the middle ear.
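The middle-ear figures above can be checked with a few lines of arithmetic. The sketch takes the quoted areas and the overall 22-fold gain as given, infers the lever ratio they imply (an inference, not a value stated in the text), and converts the pressure gain to decibels using the 20 log10 rule for pressure ratios.

```python
import math

TYMPANIC_AREA_MM2 = 55.0
STAPES_FOOTPLATE_AREA_MM2 = 3.5
OVERALL_PRESSURE_GAIN = 22.0  # as quoted in the text

area_ratio = TYMPANIC_AREA_MM2 / STAPES_FOOTPLATE_AREA_MM2  # ~15.7, "almost 16"
implied_lever_ratio = OVERALL_PRESSURE_GAIN / area_ratio     # inferred, ~1.4
gain_db = 20 * math.log10(OVERALL_PRESSURE_GAIN)             # pressure ratio in dB

print(f"area ratio {area_ratio:.1f}, implied lever ratio {implied_lever_ratio:.2f}, "
      f"gain {gain_db:.1f} dB")
```

The area ratio supplies most of the gain, the ossicular lever contributes a further factor of about 1.4, and the combined 22-fold pressure amplification corresponds to roughly 27 dB.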

When exposed to high sound levels, the stapedius muscle contracts, protecting the inner ear (the attenuation reflex). In addition, the muscles of the middle ear extend the dynamic range of the ear, improve sound localization, reduce resonance in the middle ear, and control air pressure in the middle ear and liquid pressure in the inner ear.

Between 250 and 4,000 Hz, the threshold of the attenuation reflex is approximately 80 decibels (dB) above the hearing threshold, and the strength of the reflex increases by approximately 0.6 dB for each 1 dB increase in stimulation intensity. Its latency is 150 ms at threshold, and 24-35 ms in the presence of intense stimuli. At frequencies below the natural resonance of the middle ear, contraction of the middle ear muscles attenuates sound transmission by approximately 10 dB. Because of its latency, the attenuation reflex provides adequate protection from noise generated at rates above two to three per second, but not from discrete impulse noise.

The speed with which sound waves propagate through the cochlea depends on the stiffness of the basilar membrane. The membrane becomes progressively less stiff (more elastic) from the base of the cochlea to the tip, and the wave velocity decreases accordingly. The transfer of vibration energy to Reissner’s membrane and the basilar membrane is frequency-dependent: at high frequencies the wave amplitude is greatest at the base, while at lower frequencies it is greatest at the tip. Thus, the point of greatest mechanical excitation in the cochlea depends on frequency, and this phenomenon underlies the ability to detect frequency differences. Movement of the basilar membrane induces shear forces in the stereocilia of the hair cells and triggers a series of mechanical, electrical and biochemical events responsible for mechanosensory transduction and initial acoustic signal processing. The shear forces on the stereocilia cause ionic channels in the cell membranes to open, modifying the permeability of the membranes and allowing potassium ions to enter the cells. This influx of potassium ions depolarizes the hair cell (the receptor potential).
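The place-frequency relationship described here is often summarized by Greenwood's empirical function, which the text itself does not cite. The sketch below uses commonly quoted human parameters (A = 165.4 Hz, a = 2.1, k = 0.88), treated here as assumptions, with position x measured as a fraction of basilar-membrane length from the tip (apex, x = 0) to the base (x = 1).

```python
def greenwood_frequency(x):
    """Characteristic frequency (Hz) at relative position x, from tip (0) to base (1).

    Greenwood-style map F = A * (10**(a*x) - k) with commonly quoted
    human parameters; the parameter values are assumptions.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (tip/apex) and 1 (base)")
    A, a, k = 165.4, 2.1, 0.88
    return A * (10 ** (a * x) - k)


for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x = {x:.2f}: {greenwood_frequency(x):8.0f} Hz")
```

The map runs from about 20 Hz at the tip to about 20 kHz at the base, matching the qualitative statement that low frequencies excite the tip and high frequencies the base.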

Neurotransmitters released at the synaptic junction of the inner hair cells as a result of depolarization trigger neuronal impulses which travel along the afferent fibres of the auditory nerve toward higher centres. The intensity of auditory stimulation is encoded by the number of action potentials per unit time and the number of cells stimulated, while the perceived frequency of the sound depends on the specific nerve fibre populations activated. There is a specific spatial mapping between the frequency of the sound stimulus and the region of the cerebral cortex stimulated.

The inner hair cells are mechanoreceptors which transform signals generated in response to acoustic vibration into electric messages sent to the central nervous system. They are not, however, responsible for the ear’s threshold sensitivity and its extraordinary frequency selectivity.

The outer hair cells, on the other hand, send no auditory signals to the brain. Rather, their function is to selectively amplify mechano-acoustic vibration at near-threshold levels by a factor of approximately 100 (i.e., 40 dB), and so facilitate stimulation of inner hair cells. This amplification is believed to function through micromechanical coupling involving the tectorial membrane. The outer hair cells can produce more energy than they receive from external stimuli and, by contracting actively at very high frequencies, can function as cochlear amplifiers.

In the inner ear, interaction between outer and inner hair cells creates a feedback loop which permits control of auditory reception, particularly of threshold sensitivity and frequency selectivity. Efferent cochlear fibres may thus help reduce cochlear damage caused by exposure to intense acoustic stimuli. Outer hair cells may also undergo reflex contraction in the presence of intense stimuli. The attenuation reflex of the middle ear, active primarily at low frequencies, and the reflex contraction in the inner ear, active at high frequencies, are thus complementary.

Bone conduction of sound

Sound waves may also be transmitted through the skull. Two mechanisms are possible:

In the first, compression waves impacting the skull cause the incompressible perilymph to deform the round or oval window. As the two windows have differing elasticities, movement of the endolymph results in movement of the basilar membrane.

The second mechanism is based on the fact that movement of the ossicles induces movement in the scala vestibuli only. In this mechanism, movement of the basilar membrane results from the translational movement produced by the inertia of the ossicles.

Bone conduction is normally 30-50 dB lower than air conduction—as is readily apparent when both ears are blocked. This is only true, however, for air-mediated stimuli, direct bone stimulation being attenuated to a different degree.

Sensitivity range

Mechanical vibration induces potential changes in the cells of the inner ear, the conduction pathways and the higher centres. Only frequencies of 16 Hz–25,000 Hz and sound pressures (expressed in pascals, Pa) of 20 μPa to 20 Pa can be perceived. The range of perceptible sound pressures is remarkable: a 1-million-fold range. The detection thresholds of sound pressure are frequency-dependent, lowest at 1,000-6,000 Hz and increasing at both higher and lower frequencies.

For practical purposes, the sound pressure level is expressed in decibels (dB), a logarithmic scale that expresses sound pressure relative to a reference pressure corresponding approximately to the auditory threshold. Thus, 20 μPa is equivalent to 0 dB. Each tenfold increase in sound pressure raises the level by 20 dB, in accordance with the following formula:

$$L_x = 20 \log_{10} \frac{P_x}{P_0}$$

where:

$L_x$ = sound pressure level in dB

$P_x$ = sound pressure in pascals

$P_0$ = reference sound pressure ($2 \times 10^{-5}$ Pa, the auditory threshold)
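The formula can be applied directly. The short sketch below (function names are illustrative) converts between pascals and decibels and reproduces the statements above: 20 μPa corresponds to 0 dB, a tenfold pressure increase adds 20 dB, and the million-fold perceptible range from 20 μPa to 20 Pa spans 120 dB.

```python
import math

P0 = 2e-5  # reference sound pressure in pascals (20 micropascals)


def spl_db(pressure_pa):
    """Sound pressure level Lx = 20 log10(Px / P0), in dB."""
    if pressure_pa <= 0:
        raise ValueError("pressure must be positive")
    return 20 * math.log10(pressure_pa / P0)


def pressure_pa(level_db):
    """Inverse conversion: sound pressure in pascals for a given level in dB."""
    return P0 * 10 ** (level_db / 20)


print(spl_db(2e-5))  # the reference pressure itself: 0 dB
print(spl_db(2e-4))  # tenfold pressure: 20 dB (up to float rounding)
print(spl_db(20.0))  # upper limit quoted above: 120 dB (up to float rounding)
```

The inverse function is handy for going the other way, for example `pressure_pa(120.0)` returns approximately 20 Pa.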

The frequency-discrimination threshold, that is the minimal detectable difference in frequency, is 1.5 Hz up to 500 Hz, and 0.3% of the stimulus frequency at higher frequencies. At sound pressures near the auditory threshold, the sound-pressure-discrimination threshold is approximately 20%, although differences of as little as 2% may be detected at high sound pressures.
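The two frequency-discrimination rules can be written as a small piecewise function; note that they join smoothly, since 0.3% of 500 Hz is exactly 1.5 Hz. The sketch also converts the quoted sound-pressure-discrimination thresholds (20% and 2%) into level differences in decibels using the 20 log10 rule. The function names are illustrative.

```python
import math


def frequency_jnd_hz(frequency_hz):
    """Smallest detectable frequency difference, per the rule quoted above."""
    if frequency_hz <= 500:
        return 1.5
    return 0.003 * frequency_hz  # 0.3% of the stimulus frequency


def pressure_jnd_db(fraction):
    """Express a fractional sound-pressure difference as a level difference in dB."""
    return 20 * math.log10(1 + fraction)


print(frequency_jnd_hz(250), frequency_jnd_hz(4000))
print(round(pressure_jnd_db(0.20), 2))  # near the auditory threshold
print(round(pressure_jnd_db(0.02), 2))  # at high sound pressures
```

A 20% pressure difference near threshold corresponds to about 1.6 dB, while the 2% difference detectable at high sound pressures is under 0.2 dB.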

If two sounds differ in frequency by a sufficiently small amount, only one tone will be heard. The perceived frequency of this tone lies midway between the two source tones, but its sound pressure level fluctuates periodically (the phenomenon of beats). If two acoustic stimuli have similar frequencies but differing intensities, a masking effect occurs. If the difference in sound pressure is large enough, masking will be complete, with only the louder sound perceived.
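The single tone "midway between the two" with a fluctuating level follows from a trigonometric identity: the sum of two equal-amplitude sinusoids at f1 and f2 equals a sinusoid at their mean frequency multiplied by an envelope whose magnitude varies at the difference frequency |f1 - f2|. The sketch checks the identity numerically for two illustrative frequencies, assuming equal amplitudes.

```python
import math

F1, F2 = 440.0, 444.0  # illustrative tones 4 Hz apart


def two_tones(t):
    """Sum of two equal-amplitude pure tones."""
    return math.sin(2 * math.pi * F1 * t) + math.sin(2 * math.pi * F2 * t)


def carrier_times_envelope(t):
    """The same signal: a tone at the mean frequency times a slowly varying envelope."""
    mean_f = (F1 + F2) / 2  # 442 Hz: the single perceived tone
    half_diff = (F1 - F2) / 2
    envelope = 2 * math.cos(2 * math.pi * half_diff * t)
    return envelope * math.sin(2 * math.pi * mean_f * t)


SAMPLE_RATE = 44_100
max_error = max(
    abs(two_tones(n / SAMPLE_RATE) - carrier_times_envelope(n / SAMPLE_RATE))
    for n in range(SAMPLE_RATE)
)
print(f"max difference over 1 s: {max_error:.2e}")
print(f"beat rate: {abs(F1 - F2)} Hz")
```

The two expressions agree to within floating-point rounding; the envelope magnitude peaks four times per second here, which is heard as a 442 Hz tone whose loudness beats at 4 Hz.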

Localization of acoustic stimuli depends on the detection of the time lag between the arrival of the stimulus at each ear and, as such, requires intact bilateral hearing. The smallest detectable time lag is 3 × 10⁻⁵ seconds. Localization is facilitated by the head’s screening effect, which results in differences in stimulus intensity at each ear.
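The quoted time-lag threshold can be translated into geometry. Assuming a speed of sound of 343 m/s (air at about 20 °C) and a spherical head of radius 8.75 cm, neither of which is stated in the text, the sketch below uses the Woodworth approximation for the interaural time difference, ITD = (r/c)(θ + sin θ), and solves by bisection for the source azimuth at which the ITD reaches the 3 × 10⁻⁵ s threshold. The head's screening (intensity) effect is not modelled.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed
HEAD_RADIUS = 0.0875    # m, assumed spherical head
MIN_TIME_LAG = 3e-5     # s, smallest detectable interaural lag quoted above


def itd_woodworth(azimuth_rad):
    """Interaural time difference for a distant source (Woodworth approximation)."""
    return HEAD_RADIUS / SPEED_OF_SOUND * (azimuth_rad + math.sin(azimuth_rad))


def azimuth_for_itd(target_s, lo=0.0, hi=math.pi / 2, tol=1e-12):
    """Bisection search; the ITD rises monotonically from 0 to 90 degrees."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if itd_woodworth(mid) < target_s:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


path_difference_cm = SPEED_OF_SOUND * MIN_TIME_LAG * 100
angle_deg = math.degrees(azimuth_for_itd(MIN_TIME_LAG))
print(f"path difference ~ {path_difference_cm:.2f} cm, azimuth ~ {angle_deg:.1f} degrees")
```

Under these assumptions a 30 μs lag corresponds to a path difference of about 1 cm, or a source roughly 3 to 4 degrees off the midline, while the largest possible lag (source directly to one side) is about 0.66 ms.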

The remarkable ability of human beings to resolve acoustic stimuli is a result of frequency decomposition by the inner ear and frequency analysis by the brain. These are the mechanisms that allow individual sound sources such as individual musical instruments to be detected and identified in the complex acoustic signals that make up the music of a full symphony orchestra.

Physiopathology

Ciliary damage

The ciliary motion induced by intense acoustic stimuli may exceed the mechanical resistance of the cilia and cause mechanical destruction of hair cells. As these cells are limited in number and incapable of regeneration, any cell loss is permanent, and if exposure to the harmful sound stimulus continues, progressive. In general, the ultimate effect of ciliary damage is the development of a hearing deficit.

Outer hair cells are the cells most sensitive to sound and to toxic agents such as anoxia, ototoxic medications and chemicals (e.g., quinine derivatives, streptomycin and some other antibiotics, some anti-tumour preparations), and are thus the first to be lost. Only passive hydromechanical phenomena remain operative when outer hair cells or their stereocilia are damaged. Under these conditions, only gross analysis of acoustic vibration is possible. In very rough terms, destruction of the cilia of outer hair cells results in a 40 dB increase in hearing threshold.

Cellular damage

Exposure to noise, especially if it is repetitive or prolonged, may also affect the metabolism of cells of the organ of Corti, and afferent synapses located beneath the inner hair cells. Reported extraciliary effects include modification of cell ultrastructure (reticulum, mitochondria, lysosomes) and, postsynaptically, swelling of afferent dendrites. Dendritic swelling is probably due to the toxic accumulation of neurotransmitters as a result of excessive activity by inner hair cells. Nevertheless, the extent of stereociliary damage appears to determine whether hearing loss is temporary or permanent.

Noise-induced Hearing Loss

Noise is a serious hazard to hearing in today’s increasingly complex industrial societies. For example, noise exposure accounts for approximately one-third of the 28 million cases of hearing loss in the United States, and NIOSH (the National Institute for Occupational Safety and Health) reports that 14% of American workers are exposed to potentially dangerous sound levels, that is, levels exceeding 90 dB. Noise exposure is the most widespread harmful occupational exposure and is the second leading cause of hearing loss, after age-related effects. Finally, the contribution of non-occupational noise exposure must not be forgotten: sources include home workshops, over-amplified music (especially through earphones) and firearms.

Acute noise-induced damage. The immediate effects of exposure to high-intensity sound stimuli (for example, explosions) include elevation of the hearing threshold, rupture of the eardrum, and traumatic damage to the middle and inner ears (dislocation of ossicles, cochlear injury or fistulas).

Temporary threshold shift. Noise exposure results in a decrease in the sensitivity of auditory sensory cells which is proportional to the duration and intensity of exposure. In its early stages, this increase in auditory threshold, known as auditory fatigue or temporary threshold shift (TTS), is entirely reversible but persists for some time after the cessation of exposure.

Studies of the recovery of auditory sensitivity have identified several types of auditory fatigue. Short-term fatigue dissipates in less than two minutes and results in a maximum threshold shift at the exposure frequency. Long-term fatigue is characterized by recovery in more than two minutes but less than 16 hours, an arbitrary limit derived from studies of industrial noise exposure. In general, auditory fatigue is a function of stimulus intensity, duration, frequency, and continuity. Thus, for a given dose of noise, obtained by integration of intensity and duration, intermittent exposure patterns are less harmful than continuous ones.

The severity of the TTS increases by approximately 6 dB for every doubling of stimulus intensity. Above a specific exposure intensity (the critical level), this rate increases, particularly if exposure is to impulse noise. The TTS increases asymptotically with exposure duration; the asymptote itself increases with stimulus intensity. Due to the characteristics of the outer and middle ears’ transfer function, low frequencies are tolerated the best.

Studies on exposure to pure tones indicate that as the stimulus intensity increases, the frequency at which the TTS is greatest progressively shifts towards frequencies above that of the stimulus. Subjects exposed to a pure tone of 2,000 Hz develop TTS which is maximal at approximately 3,000 Hz (a shift of about half an octave). The noise’s effect on the outer hair cells is believed to be responsible for this phenomenon.
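Because an octave is a doubling of frequency, the size of this shift can be checked with a base-2 logarithm. The short sketch below (function names are illustrative) shows that 2,000 Hz to 3,000 Hz is about 0.58 octave, so “half an octave” is an approximation; an exact half-octave above 2,000 Hz would be about 2,828 Hz.

```python
import math


def octaves_between(f_low_hz: float, f_high_hz: float) -> float:
    """Interval in octaves; each octave is a doubling of frequency."""
    return math.log2(f_high_hz / f_low_hz)


def shift_by_octaves(f_hz: float, octaves: float) -> float:
    """Frequency reached by moving up (positive) or down (negative) by `octaves`."""
    return f_hz * 2 ** octaves


if __name__ == "__main__":
    print(round(octaves_between(2000, 3000), 2))  # 0.58
    print(round(shift_by_octaves(2000, 0.5)))     # 2828
```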

A worker who shows TTS recovers to baseline hearing values within hours after removal from noise. However, with repeated noise exposures, recovery becomes progressively less complete and permanent hearing loss results.

Permanent threshold shift. Exposure to high-intensity sound stimuli over several years may lead to permanent hearing loss. This is referred to as permanent threshold shift (PTS). Anatomically, PTS is characterized by degeneration of the hair cells, starting with slight histological modifications but eventually culminating in complete cell destruction. Hearing loss is most likely to involve the frequencies to which the ear is most sensitive, as it is at these frequencies that the transmission of acoustic energy from the external environment to the inner ear is optimal. This explains why hearing loss at 4,000 Hz is the first sign of occupationally induced hearing loss (figure 3). An interaction has been observed between stimulus intensity and duration, and international standards assume the degree of hearing loss to be a function of the total acoustic energy received by the ear (the dose of noise).
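Treating hearing risk as a function of total acoustic energy is usually expressed as an energy-equivalent level normalized to an 8-hour day, in the style of standards such as ISO 1999. The minimal sketch below assumes this equal-energy rule (a 3 dB increase is equivalent to doubling the duration); some regulations, such as those of US OSHA, use a 5 dB exchange rate instead, which this code does not model. The function name is illustrative.

```python
import math

REFERENCE_DURATION_H = 8.0  # normalize to a nominal 8-hour working day


def equivalent_level_8h_db(exposures: list[tuple[float, float]]) -> float:
    """Energy-equivalent level normalized to 8 hours.

    exposures: (level in dB(A), duration in hours) pairs for one day.
    Each level is converted to relative energy (10 ** (L / 10)), weighted by
    its duration, summed, spread over 8 hours and converted back to decibels.
    """
    total_energy = sum(hours * 10 ** (level_db / 10) for level_db, hours in exposures)
    if total_energy <= 0:
        raise ValueError("at least one exposure with positive duration is required")
    return 10 * math.log10(total_energy / REFERENCE_DURATION_H)


if __name__ == "__main__":
    # 4 h at 88 dB(A) carries the same energy as 8 h at 85 dB(A).
    print(round(equivalent_level_8h_db([(88.0, 4.0)]), 1))               # 85.0
    print(round(equivalent_level_8h_db([(88.0, 4.0), (82.0, 4.0)]), 1))  # 86.0
```

A result computed this way can be compared directly with an 8-hour limit such as 85 dB(A).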

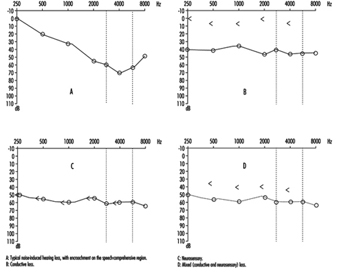

Figure 3. Audiogram showing bilateral noise-induced hearing loss.

The development of noise-induced hearing loss shows individual susceptibility. Various potentially important variables have been examined to explain this susceptibility, such as age, gender, race, cardiovascular disease, smoking, etc. The data were inconclusive.

An interesting question is whether the amount of TTS could be used to predict the risk of PTS. As noted above, the TTS progressively shifts to frequencies above the stimulation frequency. On the other hand, most of the ciliary damage occurring at high stimulus intensities involves cells that are sensitive to the stimulus frequency. Should exposure persist, the difference between the frequency at which the PTS is maximal and the stimulation frequency progressively decreases, so that ciliary damage and cell loss occur in the cells most sensitive to the stimulus frequencies. It thus appears that TTS and PTS involve different mechanisms, and that it is therefore impossible to predict an individual’s PTS on the basis of the observed TTS.

Individuals with PTS are usually asymptomatic initially. As the hearing loss progresses, they begin to have difficulty following conversations in noisy settings such as parties or restaurants. The progression, which usually affects the ability to perceive high-pitched sounds first, is usually painless and relatively slow.

Examination of individuals suffering from hearing loss

Clinical examination

In addition to the history of the date when the hearing loss was first detected (if any) and how it has evolved, including any asymmetry of hearing, the medical questionnaire should elicit information on the patient’s age, family history, use of ototoxic medications or exposure to other ototoxic chemicals, the presence of tinnitus (i.e., buzzing, whistling or ringing sounds in one or both ears), dizziness or any problems with balance, and any history of ear infections with pain or discharge from the outer ear canal. Of critical importance is a detailed life-long history of exposures to high sound levels (note that, to the layperson, not all sounds are “noise”) on the job, in previous jobs and off-the-job. A history of episodes of TTS would confirm prior toxic exposures to noise.

Physical examination should include evaluation of the function of the other cranial nerves, tests of balance, and ophthalmoscopy to detect any evidence of increased intracranial pressure. Visual examination of the external auditory canal will detect any impacted cerumen and, after it has been cautiously removed (no sharp object!), any evidence of scarring or perforation of the tympanic membrane. Hearing loss can be determined very crudely by testing the patient’s ability to repeat words and phrases spoken softly or whispered by the examiner, who stands behind the patient and out of sight. The Weber test (placing a vibrating tuning fork in the centre of the forehead to determine whether the sound is “heard” in either or both ears) and the Rinné test (placing a vibrating tuning fork on the mastoid process until the patient can no longer hear the sound, then quickly placing the fork near the ear canal; normally the sound can be heard longer through air than through bone) allow the hearing loss to be classified as transmission or neurosensory.
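The usual textbook reading of the two tuning-fork tests for a loss in one ear can be written as a small decision rule. This is a simplified sketch, not a clinical tool: it assumes the affected ear is already known, treats the Rinné result as a yes/no answer to “is air conduction heard longer than bone conduction in the affected ear?”, and labels any combination of results that does not fit the classic pattern as indeterminate. The function and parameter names are hypothetical.

```python
from typing import Literal

Ear = Literal["left", "right"]
WeberResult = Literal["left", "right", "midline"]


def classify_one_sided_loss(affected_ear: Ear,
                            weber_heard_in: WeberResult,
                            rinne_air_longer_in_affected_ear: bool) -> str:
    """Textbook interpretation of the Weber and Rinné tests for a loss in one ear.

    - Rinné: sound normally lasts longer by air than by bone; bone outlasting
      air in the affected ear points to a transmission problem.
    - Weber: the sound lateralizes towards an ear with transmission loss and
      away from an ear with neurosensory loss.
    Results that do not agree are returned as "indeterminate".
    """
    other_ear = "right" if affected_ear == "left" else "left"
    if not rinne_air_longer_in_affected_ear and weber_heard_in == affected_ear:
        return "transmission"
    if rinne_air_longer_in_affected_ear and weber_heard_in == other_ear:
        return "neurosensory"
    return "indeterminate"


if __name__ == "__main__":
    print(classify_one_sided_loss("left", "left", False))  # transmission
    print(classify_one_sided_loss("left", "right", True))  # neurosensory
```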

The audiogram is the standard test to detect and evaluate hearing loss (see below). Specialized studies to complement the audiogram may be necessary in some patients. These include tympanometry, word discrimination tests, evaluation of the attenuation reflex, electrophysiological studies (electrocochleogram, auditory evoked potentials) and radiological studies (routine skull x-rays complemented by CT scan or MRI).

Audiometry

This crucial component of the medical evaluation uses a device known as an audiometer to determine an individual’s auditory threshold for pure tones of 250 to 8,000 Hz at sound levels between –10 dB (the hearing threshold of intact ears) and 110 dB (maximal damage). To eliminate the effects of TTS, patients should not have been exposed to noise during the previous 16 hours. Air conduction is measured with earphones placed over the ears, while bone conduction is measured by placing a vibrator in contact with the skull behind the ear. Each ear’s hearing is measured separately, and the results are reported on a graph known as an audiogram (figure 3). The threshold of intelligibility, that is, the sound intensity at which speech becomes intelligible, is determined by a complementary test method known as vocal audiometry, based on the ability to understand words composed of two syllables of equal intensity (for instance, shepherd, dinner, stunning).

Comparison of air and bone conduction allows classification of hearing losses as transmission (involving the external auditory canal or middle ear) or neurosensory (involving the inner ear or auditory nerve) (figures 3 and 4). The audiogram observed in cases of noise-induced hearing loss is characterized by an onset of hearing loss at 4,000 Hz, visible as a dip in the audiogram (figure 3). As exposure to excessive noise levels continues, neighbouring frequencies are progressively affected and the dip broadens, encroaching, at approximately 3,000 Hz, on frequencies essential for the comprehension of conversation. Noise-induced hearing loss is usually bilateral and shows a similar pattern in both ears, that is, the difference between the two ears does not exceed 15 dB at 500, 1,000 and 2,000 Hz, or 30 dB at 3,000, 4,000 and 6,000 Hz. Asymmetric damage may, however, be present in cases of non-uniform exposure, for example in marksmen, in whom hearing loss is greater on the side opposite the trigger finger (the left side, in a right-handed person). In hearing loss unrelated to noise exposure, the audiogram does not exhibit the characteristic 4,000 Hz dip (figure 4).
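The two audiogram comparisons described above, the left/right symmetry criterion and the air versus bone conduction comparison, can be sketched as simple checks on per-frequency thresholds. The symmetry limits are taken directly from the text. The 10 dB air-bone gap threshold, the use of a mean gap and the function names are assumptions for illustration, and mixed losses are not distinguished.

```python
# Maximum permitted left/right difference (dB) at each frequency (Hz), per the text.
SYMMETRY_LIMITS_DB = {500: 15, 1000: 15, 2000: 15, 3000: 30, 4000: 30, 6000: 30}


def is_bilaterally_similar(right_db: dict[int, float], left_db: dict[int, float]) -> bool:
    """True if two air-conduction audiograms stay within the symmetry limits."""
    return all(abs(right_db[f] - left_db[f]) <= limit
               for f, limit in SYMMETRY_LIMITS_DB.items())


def classify_by_air_bone_gap(air_db: dict[int, float], bone_db: dict[int, float],
                             gap_threshold_db: float = 10.0) -> str:
    """Label a loss from the mean air-bone gap over the frequencies tested both ways.

    A large gap (air conduction much worse than bone) points to transmission
    loss; a small gap points to neurosensory loss. The 10 dB threshold is an
    assumption, and mixed losses are not distinguished.
    """
    shared = sorted(set(air_db) & set(bone_db))
    if not shared:
        raise ValueError("no frequency measured by both air and bone conduction")
    mean_gap = sum(air_db[f] - bone_db[f] for f in shared) / len(shared)
    return "transmission" if mean_gap >= gap_threshold_db else "neurosensory"


if __name__ == "__main__":
    # Air-conduction thresholds from table 1 (Typical calculation of functional loss).
    right = {500: 25, 1000: 35, 2000: 35, 3000: 45, 4000: 50, 6000: 60}
    left = {500: 25, 1000: 35, 2000: 40, 3000: 50, 4000: 60, 6000: 70}
    print(is_bilaterally_similar(right, left))  # True
```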

Figure 4. Examples of right-ear audiograms. The circles represent air-conduction hearing loss; the other symbols represent bone conduction.

There are two types of audiometric examinations: screening and diagnostic. Screening audiometry is used for the rapid examination of groups of individuals in the workplace, in schools or elsewhere in the community to identify those who appear to have some hearing loss. Often, electronic audiometers that permit self-testing are used and, as a rule, screening audiograms are obtained in a quiet area but not necessarily in a sound-proof, vibration-free chamber. The latter is considered to be a prerequisite for diagnostic audiometry which is intended to measure hearing loss with reproducible precision and accuracy. The diagnostic examination is properly performed by a trained audiologist (in some circumstances, formal certification of the competence of the audiologist is required). The accuracy of both types of audiometry depends on periodic testing and recalibration of the equipment being used.

In many jurisdictions, individuals with job-related, noise-induced hearing loss are eligible for workers’ compensation benefits. Accordingly, many employers are including audiometry in their preplacement medical examinations to detect any existing hearing loss that may be the responsibility of a previous employer or represent a non-occupational exposure.

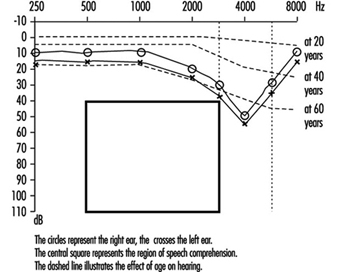

Hearing thresholds progressively increase with age, with higher frequencies being more affected (figure 3). The characteristic 4,000 Hz dip observed in noise-induced hearing loss is not seen with this type of hearing loss.

Calculation of hearing loss

In the United States the most widely accepted formula for calculating functional limitation related to hearing loss is the one proposed in 1979 by the American Academy of Otolaryngology (AAO) and adopted by the American Medical Association. It is based on the average of values obtained at 500, at 1,000, at 2,000 and at 3,000 Hz (table 1), with the lower limit for functional limitation set at 25 dB.

Table 1. Typical calculation of functional loss from an audiogram

| Frequency | 500 Hz | 1,000 Hz | 2,000 Hz | 3,000 Hz | 4,000 Hz | 6,000 Hz | 8,000 Hz |
|---|---|---|---|---|---|---|---|
| Right ear (dB) | 25 | 35 | 35 | 45 | 50 | 60 | 45 |
| Left ear (dB) | 25 | 35 | 40 | 50 | 60 | 70 | 50 |

Unilateral loss

Percentage unilateral loss = [(average at 500, 1,000, 2,000 and 3,000 Hz) – 25 dB (lower limit)] × 1.5

Example: Right ear: [(25 + 35 + 35 + 45)/4 – 25] × 1.5 = 15 per cent; Left ear: [(25 + 35 + 40 + 50)/4 – 25] × 1.5 = 18.8 per cent

Bilateral loss

Percentage bilateral loss = [(percentage unilateral loss of the better ear × 5) + (percentage unilateral loss of the worse ear)]/6

Example: [(15 × 5) + 18.8]/6 = 15.6 per cent

Source: Rees and Duckert 1994.
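The calculation in table 1 can be reproduced with a short script. This is a sketch of the arithmetic shown in the table, not an official implementation. The clamping to 0 per cent when the average is at or below the 25 dB lower limit follows from the table. The 100 per cent ceiling is an assumption (the usual convention for this method), because the table does not state an upper limit.

```python
SPEECH_FREQUENCIES_HZ = (500, 1000, 2000, 3000)
LOWER_LIMIT_DB = 25.0   # lower limit for functional limitation, from table 1
PERCENT_PER_DB = 1.5


def unilateral_loss_pct(thresholds_db: dict[int, float]) -> float:
    """Percentage unilateral loss from one ear's audiogram."""
    average_db = sum(thresholds_db[f] for f in SPEECH_FREQUENCIES_HZ) / len(SPEECH_FREQUENCIES_HZ)
    pct = (average_db - LOWER_LIMIT_DB) * PERCENT_PER_DB
    # Averages at or below the lower limit score 0 %; the 100 % cap is an assumption.
    return min(max(pct, 0.0), 100.0)


def bilateral_loss_pct(right_pct: float, left_pct: float) -> float:
    """Weight the better (less impaired) ear five times as heavily as the worse ear."""
    better, worse = sorted((right_pct, left_pct))
    return (better * 5 + worse) / 6


if __name__ == "__main__":
    right = {500: 25, 1000: 35, 2000: 35, 3000: 45, 4000: 50, 6000: 60, 8000: 45}
    left = {500: 25, 1000: 35, 2000: 40, 3000: 50, 4000: 60, 6000: 70, 8000: 50}
    r, l = unilateral_loss_pct(right), unilateral_loss_pct(left)
    print(r, l, round(bilateral_loss_pct(r, l), 1))  # 15.0 18.75 15.6
```

The thresholds at 4,000, 6,000 and 8,000 Hz are recorded but, as in the table, do not enter the calculation.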

Presbycusis

Presbycusis or age-related hearing loss generally begins at about age 40 and progresses gradually with increasing age. It is usually bilateral. The characteristic 4,000 Hz dip observed in noise-induced hearing loss is not seen with presbycusis. However, it is possible to have the effects of ageing superimposed on noise-related hearing loss.

Treatment

The first essential of treatment is avoidance of any further exposure to potentially toxic levels of noise (see “Prevention” below). It is generally believed that, once noise exposure ends, no further hearing loss occurs beyond what would be expected from the normal ageing process.

While conduction losses, for example those related to acute traumatic noise-induced damage, are amenable to medical treatment or surgery, chronic noise-induced hearing loss cannot be corrected by treatment. The use of a hearing aid is the only possible “remedy”, and it is indicated only when hearing loss affects the frequencies critical for speech comprehension (500 to 3,000 Hz). Other forms of support, such as lip-reading and sound amplifiers (on telephones, for instance), may, however, be useful.

Prevention

Because noise-induced hearing loss is permanent, it is essential to apply any measure likely to reduce exposure. This includes reduction at the source (quieter machines and equipment or encasing them in sound-proof enclosures) or the use of individual protective devices such as ear plugs and/or ear muffs. If reliance is placed on the latter, it is imperative to verify that their manufacturers’ claims for effectiveness are valid and that exposed workers are using them properly at all times.

An exposure level of 85 dB(A) was designated as the highest permissible occupational exposure limit in order to protect the greatest number of people. However, because there is significant interpersonal variation, strenuous efforts to keep exposures well below that level are indicated. Periodic audiometry should be instituted as part of the medical surveillance programme to detect, as early as possible, any effects that may indicate noise toxicity.

Managing Hazardous Waste Disposal Under ISO 14000

A formal Environmental Management System (EMS), using the International Organization for Standardization (ISO) standard 14001 as the performance specification, has been developed and is being implemented in one of the largest teaching health care complexes in Canada. The Health Sciences Centre (HSC) consists of five hospitals and associated clinical and research laboratories, occupying a 32-acre site in central Winnipeg. Of the 32 segregated solid waste streams at the facility, hazardous wastes account for seven. This summary focuses on the hazardous waste disposal aspect of the hospital’s operations.

ISO 14000

The ISO 14000 standards system is a typical continuous improvement model based on a controlled management system. The ISO 14001 standard addresses the environmental management system structure exclusively. To conform with the standard, an organization must have processes in place for:

- adopting an environmental policy that sets environmental protection as a high priority

- identifying environmental impacts and setting performance goals

- identifying and complying with legal requirements

- assigning environmental accountability and responsibility throughout the organization

- applying controls to achieve performance goals and legal requirements

- monitoring and reporting environmental performance, and auditing the EMS

- conducting management reviews and identifying opportunities for improvement.

The hierarchy for carrying out these processes in the HSC is presented in table 1.

Table 1. HSC EMS documentation hierarchy

| EMS level | Purpose |
|---|---|
| Governance document | Includes the Board’s expectations on each core performance category and its requirements for corporate competency in each category. |
| Level 1 | Prescribes the outputs that will be delivered in response to customer and stakeholder (C/S) needs (including government regulatory requirements). |
| Level 2 | Prescribes the methodologies, systems, processes and resources to be used for achieving C/S requirements; the goals, objectives and performance standards essential for confirming that the C/S requirements have been met (e.g., a schedule of required systems and processes including responsibility centre for each). |
| Level 3 | Prescribes the design of each business system or process that will be operated to achieve the C/S requirements (e.g., criteria and boundaries for system operation; each information collection and data reporting point; position responsible for the system and for each component of the process, etc.). |
| Level 4 | Prescribes detailed task instructions (specific methods and techniques) for each work activity (e.g., describe the task to be done; identify the position responsible for completing the task; state skills required for the task; prescribe education or training methodology to achieve required skills; identify task completion and conformance data, etc.). |
| Level 5 | Organizes and records measurable outcome data on the operation of systems, processes and tasks designed to verify completion according to specification (e.g., measures for system or process compliance; resource allocation and budget compliance; effectiveness, efficiency, quality, risk, ethics, etc.). |
| Level 6 | Analyses records and processes to establish corporate performance in relation to standards set for each output requirement (Level 1) related to C/S needs (e.g., compliance, quality, effectiveness, risk, utilization, etc.), and financial and staff resources. |

ISO standards encourage businesses to integrate all environmental considerations into mainstream business decisions and not restrict attention to concerns that are regulated. Since the ISO standards are not technical documents, the function of specifying numerical standards remains the responsibility of governments or independent expert bodies.

Management System Approach

Applying the generic ISO framework in a health care facility requires the adoption of management systems along the lines of those in table 1, which describes how this has been addressed by the HSC. Each level in the system is supported by appropriate documentation to confirm diligence in the process. While the volume of work is substantial, it is compensated by the resulting performance consistency and by the “expert” information that remains within the corporation when experienced persons leave.

The main objective of the EMS is to establish consistent, controlled and repeatable processes for addressing the environmental aspects of the corporation’s operations. To facilitate management review of the hospital’s performance, an EMS Score Card was conceived based on the ISO 14001 standard. The Score Card closely follows the requirements in the ISO 14001 standard and, with use, will be developed into the hospital’s audit protocol.

Application of the EMS to the Hazardous Waste Process

Facility hazardous waste process

The HSC hazardous waste process currently consists of the following elements:

- procedure statement assigning responsibilities

- process description, in both text and flowchart formats

- Disposal Guide for Hazardous Waste for staff

- education programme for staff

- performance tracking system

- continuous improvement through multidisciplinary team process

- a process for seeking external partners.

The roles and responsibilities of the four main organizational units involved in the hazardous waste process are listed in table 2.

Table 2. Role and responsibilities

| Organizational unit | Responsibility |
|---|---|
| S&DS | Operates the process and is the process owner/leader, and arranges responsible disposal of waste. |
| UD–User Departments | Identifies waste, selects packaging, initiates disposal activities. |
| DOEM | Provides specialist technical support in identifying risks and protective measures associated with materials used by HSC and identifies improvement opportunities. |
| EPE | Provides specialist support in process performance monitoring and reporting, identifies emerging regulatory trends and compliance requirements, and identifies improvement opportunities. |
| ALL–All participants | Shares responsibility for process development activities. |

Process description

The initial step in preparing a process description is to identify the inputs (see table 3).

Table 3. Process inputs

| Organizational unit | Examples of process inputs and supporting inputs |
|---|---|
| S&DS (S&DS) | Maintain stock of Hazardous Waste Disposal Requisition forms and labels |
| S&DS (UD, DOEM, EPE) (S&DS) | Maintain supply of packaging containers in warehouse for UDs |
| DOEM | Produce SYMBAS Classification Decision Chart. |
| EPE | Produce the list of materials for which HSC is registered as a waste generator with the regulatory department. |
| S&DS | Produce a database of SYMBAS classifications, packaging requirements, TDG classifications, and tracking information for each material disposed of by HSC. |

The next process component is the list of specific activities required for proper disposal of waste (see table 4).

Table 4. List of activities

| Unit | Examples of activities required |
|---|---|
| UD | Order Hazardous Waste Disposal Requisition, label and packaging from S&DS as per standard stock ordering procedure. |
| S&DS | Deliver Requisition, label and packaging to UD. |
| UD | Determine whether a waste material is hazardous (check MSDS, DOEM, and such considerations as dilution, mixture with other chemicals, etc.). |
| UD | Assign the classification to the waste material using the SYMBAS Chemical Decision Chart and WHMIS information. The classification can be checked against the S&DS Data Base for materials previously disposed of by HSC. If assistance is required, call S&DS first and DOEM second. |
| UD | Determine appropriate packaging requirements from WHMIS information using professional judgement or from the S&DS Data Base of materials previously disposed of by HSC. If assistance is required, call S&DS first and DOEM second. |

Communication

To support the process description, the hospital produced a Disposal Guide for Hazardous Waste to assist staff in the proper disposal of hazardous waste materials. The guide contains information on the specific steps to follow in identifying hazardous waste and preparing it for disposal. Supplemental information is also provided on legislation, the Workplace Hazardous Materials Information System (WHMIS) and key contacts for assistance.

A database was developed to track all relevant information pertaining to each hazardous waste event from originating source to final disposal. In addition to waste data, information is also collected on the performance of the process (e.g., source and frequency of phone calls for assistance to identify areas which may require further training; source, type, quantity and frequency of disposal requests from each user department; consumption of containers and packaging). Any deviations from the process are recorded on the corporate incident reporting form. Results from performance monitoring are reported to the executive and the board of directors. To support effective implementation of the process, a staff education programme was developed to elaborate on the information in the guide. Each of the core participants in the process carries specific responsibilities on staff education.
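The kind of performance data described above can be sketched as simple records with a few summary queries. The record layouts, field names, the use of kilograms and the three-call threshold for flagging training needs are all hypothetical; the HSC database itself is not described in enough detail to reproduce.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DisposalRequest:
    """One hazardous waste disposal request (hypothetical record layout)."""
    department: str
    waste_type: str
    quantity_kg: float      # assumption: quantities recorded in kilograms
    requested_on: date


@dataclass(frozen=True)
class AssistanceCall:
    """One phone call asking for help with the process (hypothetical layout)."""
    department: str
    topic: str


def requests_per_department(requests: list[DisposalRequest]) -> Counter:
    """Frequency of disposal requests from each user department."""
    return Counter(r.department for r in requests)


def quantity_by_waste_type(requests: list[DisposalRequest]) -> dict[str, float]:
    """Total quantity submitted for disposal, per waste type."""
    totals: dict[str, float] = defaultdict(float)
    for r in requests:
        totals[r.waste_type] += r.quantity_kg
    return dict(totals)


def training_candidates(calls: list[AssistanceCall], min_calls: int = 3) -> list[str]:
    """Departments whose volume of calls may point to a need for further training."""
    counts = Counter(c.department for c in calls)
    return sorted(dept for dept, n in counts.items() if n >= min_calls)
```

Keeping each disposal event as a separate record, rather than storing running totals, leaves the source, type, quantity and frequency questions answerable from the same data.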

Continuous Improvement

To explore continuous improvement opportunities, the HSC established a multidisciplinary Waste Process Improvement Team, whose mandate is to address all issues pertaining to waste management. To further encourage continuous improvement, the hazardous waste process includes specific triggers for initiating process revisions. Typical improvement ideas generated to date include:

- prepare list of high hazard materials to be tracked from time of procurement

- develop material “shelf life” information, where appropriate, for inclusion in the materials classification database

- review shelving for physical integrity

- purchase spill containing trays

- examine potential for spills entering sewer system

- determine whether present storage rooms are adequate for anticipated waste volume

- produce a procedure for disposing of old, incorrectly identified materials.

The ISO standards require regulatory issues to be addressed and state that business processes must be in place for this purpose. Under the ISO standards, corporate commitments, performance measurement and documentation provide a more visible and more convenient trail for regulators checking compliance. It is conceivable that the consistency provided by the ISO documents could make it possible to automate the reporting of key environmental performance factors to government authorities.

Hospital Waste Management

An adaptation of current guidelines on the disposal of hospital wastes, as well as improvements in internal safety and hygiene, must be part of an overall plan of hospital waste management that establishes the procedures to follow. This should be done through properly coordinating internal and external services, as well as defining responsibilities in each of the management phases. The main goal of this plan is to protect the health of health care personnel, patients, visitors and the general public both in the hospital and beyond.

At the same time, the health of the people who come in contact with the waste once it leaves the medical centre should not be overlooked, and the risks to them should also be minimized.

Such a plan should be promoted and applied according to a global strategy that always keeps in mind the realities of the workplace, as well as the knowledge and training of the personnel involved.

Stages followed in the implementation of a waste management plan are:

- informing the management of the medical centre

- designating those responsible at the executive level

- creating a committee on hospital wastes made up of personnel from the general services, nursing and medical departments that is chaired by the medical centre’s waste manager.

The medical centre’s waste manager should coordinate the committee by:

- putting together a report on the present performance of the centre’s waste management

- putting together an internal plan for advanced management

- creating a training programme for the entire staff of the medical centre, with the collaboration of the human resources department

- launching the plan, with follow-up and control by the waste management committee.

Classification of hospital wastes

Until 1992, under the classical waste management system, the practice was to classify most hospital wastes as hazardous. Since then, the tendency has been to adopt an advanced management technique, which classifies wastes starting from the baseline assumption that only a very small proportion of the large volume of wastes generated is hazardous.

Wastes should always be classified at the point where they are generated. According to the nature of the wastes and their source, they are classified as follows:

- Group I: those wastes that can be assimilated into urban refuse

- Group II: non-specific hospital wastes

- Group III: specific hospital wastes or hazardous wastes

- Group IV: cytostatic wastes (surplus antineoplastic drugs that are not fit for therapeutic use, as well as the single-use materials that have been in contact with them, e.g., needles, syringes, catheters, gloves and IV set-ups).

According to their physical state, wastes can be classified as follows:

- solids: wastes that contain less than 10% liquid

- liquids: wastes that contain more than 10% liquid

Gaseous wastes, such as CFCs from freezers and refrigerators, are not normally captured (see article “Waste anaesthetic gases”).

By definition, the following wastes are not considered sanitary wastes:

- radioactive wastes that, because of their very nature, are already managed in a specific way by the radiological protection service

- human cadavers and large anatomical parts which are cremated or incinerated according to regulations

- waste water.

Group I Wastes

All wastes generated within the medical centre that are not directly related to sanitary activities are considered solid urban wastes (SUW). Under the local ordinances in Cataluña, Spain, as in most communities, the municipalities must remove these wastes selectively, and it is therefore advisable to facilitate this task for them. The following, grouped by point of origin, are considered wastes that can be assimilated into urban refuse:

Kitchen wastes:

- food wastes

- wastes from leftovers or single-use items

- containers.

Wastes generated by people treated in the hospital and non-medical personnel:

- wastes from cleaning products

- wastes left behind in the rooms (e.g., newspapers, magazines and flowers)

- wastes from gardening and renovations.

Wastes from administrative activities:

- paper and cardboard

- plastics.

Other wastes:

- glass containers

- plastic containers

- packing cartons and other packaging materials

- out-of-date single-use items.

So long as they are not included in other selective removal plans, SUW will be placed in white polyethylene bags to be removed by janitorial personnel.

Group II Wastes

Group II wastes include all those wastes generated as a by-product of medical activities that do not pose a risk to health or the environment. For reasons of safety and industrial hygiene the type of internal management recommended for this group is different from that recommended for Group I wastes. Depending on where they originate, Group II wastes include:

Wastes derived from hospital activities, such as:

- blood-stained materials

- gauze and materials used in treating non-infectious patients

- used medical equipment

- mattresses

- dead animals or parts thereof, from rearing stables or experimental laboratories, so long as they have not been inoculated with infectious agents.

Group II wastes will be deposited in yellow polyethylene bags that will be removed by janitorial personnel.

Group III Wastes

Group III includes hospital wastes which, due to their nature or their point of origin, could pose risks to health or the environment if special precautions are not observed during handling and removal.

Group III wastes can be classified in the following way:

Sharp and pointed instruments:

- needles

- scalpels.

Infectious wastes: Group III wastes (including single-use items) generated by the diagnosis and treatment of patients suffering from one of the infectious diseases listed in table 1.

Table 1. Infectious diseases and Group III wastes

| Infections | Wastes contaminated with |
|---|---|
| Viral haemorrhagic fevers | All wastes |
| Brucellosis | Pus |
| Diphtheria | Pharyngeal diphtheria: respiratory secretions |
| Cholera | Stools |
| Creutzfeldt-Jakob encephalitis | Stools |
| Borm | Secretions from skin lesions |
| Tularaemia | Pulmonary tularaemia: respiratory secretions |
| Anthrax | Cutaneous anthrax: pus |
| Plague | Bubonic plague: pus |
| Rabies | Respiratory secretions |
| Q Fever | Respiratory secretions |
| Active tuberculosis | Respiratory secretions |

Laboratory wastes:

- material contaminated with biological wastes

- waste from work with animals inoculated with biohazardous substances.

Group III wastes will be placed in single-use, rigid, colour-coded polyethylene containers, which are then hermetically sealed (in Catalonia, black containers are required). The containers should be clearly labelled “Hazardous hospital wastes” and kept in the room until collected by janitorial personnel. Group III wastes should never be compacted.

To facilitate their removal and reduce risks to a minimum, containers should not be filled to capacity so that they can be closed easily. Wastes should never be handled once they are placed in these rigid containers. It is forbidden to dispose of biohazardous wastes by dumping them into the drainage system.

Group IV Wastes

Group IV wastes are surplus antineoplastic drugs that are not fit for therapeutic use, as well as all single-use material that has been in contact with them (needles, syringes, catheters, gloves, IV set-ups and so on).

Given the danger they pose to persons and the environment, Group IV hospital wastes must be collected in rigid, watertight, sealable, single-use, colour-coded containers (in Catalonia, they are blue), which should be clearly labelled “Chemically contaminated material: Cytostatic agents”.
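The container rules for the four groups can be summarized as a small lookup table. The following is a minimal sketch, assuming the Catalonian colour codes given above; the names `WasteGroup`, `ContainerSpec`, `CONTAINERS` and `container_for` are hypothetical illustrations, and other regions may use different colours.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class WasteGroup(Enum):
    """The four hospital waste groups described in the text."""
    I = 1    # solid urban wastes (SUW)
    II = 2   # medical by-products posing no risk to health or environment
    III = 3  # wastes requiring special precautions (sharps, infectious, laboratory)
    IV = 4   # surplus antineoplastic drugs and material in contact with them


@dataclass(frozen=True)
class ContainerSpec:
    kind: str             # bag or rigid container
    colour: str           # colour used in Catalonia (assumption: may differ elsewhere)
    label: Optional[str]  # required label text; None where the text specifies none


# Hypothetical lookup table built from the requirements stated in the text.
CONTAINERS: Dict[WasteGroup, ContainerSpec] = {
    WasteGroup.I: ContainerSpec("polyethylene bag", "white", None),
    WasteGroup.II: ContainerSpec("polyethylene bag", "yellow", None),
    WasteGroup.III: ContainerSpec(
        "single-use rigid polyethylene container, hermetically sealed, never compacted",
        "black",
        "Hazardous hospital wastes",
    ),
    WasteGroup.IV: ContainerSpec(
        "rigid, watertight, sealable single-use container",
        "blue",
        "Chemically contaminated material: Cytostatic agents",
    ),
}


def container_for(group: WasteGroup) -> ContainerSpec:
    """Return the container specification for a waste group."""
    return CONTAINERS[group]
```

For example, `container_for(WasteGroup.III).colour` gives `"black"`. Keeping the rules in one table makes it easy to substitute another region's colour codes without touching the rest of the logic.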

Other Wastes

Guided by environmental concerns and the need to improve waste management for the community, medical centres, with the cooperation of all staff and visitors, should encourage and facilitate the selective disposal (i.e., in special containers designated for specific materials) of recyclable materials such as:

- paper and cardboard

- glass

- used oils

- batteries and power cells

- toner cartridges for laser printers

- plastic containers.

The protocol established by the local sanitation department for the collection, transport and disposal of each of these types of materials should be followed.

Disposal of large pieces of equipment, furniture and other materials not covered in these guidelines should follow the directions recommended by the appropriate environmental authorities.

Internal transport and storage of wastes

Internal transport of all the wastes generated within the hospital building should be done by the janitorial personnel, according to established schedules. It is important that the following recommendations be observed when transporting wastes within the hospital:

- The containers and the bags will always be closed during transport.

- The carts used for this purpose will have smooth surfaces and be easy to clean.

- The carts will be used exclusively for transporting waste.

- The carts will be washed daily with water, soap and lye.

- The waste bags or containers should never be dragged on the floor.

- Waste should never be transferred from one receptacle to another.

The hospital must have an area specifically for the storage of wastes; it should conform to current guidelines and fulfil, in particular, the following conditions:

- It should be covered.

- It should be clearly marked by signs.

- It should be built with smooth surfaces that are easy to clean.

- It should have running water.

- It should have drains to carry away any spilled waste liquids and the water used to clean the storage area.

- It should be provided with a system to protect it against animal pests.

- It should be located far away from windows and from the intake ducts of the ventilation system.

- It should be provided with fire extinguishing systems.

- It should have restricted access.

- It should be used exclusively for the storage of wastes.

All the transport and storage operations that involve hospital wastes must be conducted under conditions of maximum safety and hygiene. In particular, one must remember:

- Direct contact with the wastes must be avoided.

- Bags should not be overfilled so that they may be closed easily.

- Bags should not be emptied into other bags.

Liquid Wastes: Biological and Chemical

Liquid wastes can be classified as biological or chemical.

Liquid biological wastes

Liquid biological wastes can usually be poured directly into the hospital’s drainage system since they do not require any treatment before disposal. The exceptions are the liquid wastes of patients with infectious diseases and the liquid cultures of microbiology laboratories. These should be collected in specific containers and treated before being dumped.

It is important that the waste be poured directly into the drainage system with no splashing or spraying. If this is not possible and wastes are gathered in disposable containers that are difficult to open, the containers should not be forced open. Instead, the entire container should be disposed of, as with Group III solid wastes. When liquid waste is disposed of in the same way as Group III solid waste, bear in mind that the conditions required to disinfect liquid wastes differ from those for solid wastes; this must be taken into account to ensure that the treatment is effective.

Liquid chemical wastes

Liquid chemical wastes generated in the hospital (generally in the laboratories) can be classified in three groups:

- liquid wastes that should not be dumped into the drains

- liquid wastes that can be dumped into the drains after being treated

- liquid wastes that can be dumped into the drains without being previously treated.

This classification is based on considerations related to the health and quality of life of the entire community. These include:

- protection of the water supply

- protection of the sewer system

- protection of the waste-water treatment plants.

Liquid wastes that can pose a serious threat to people or to the environment because they are toxic, noxious, flammable, corrosive or carcinogenic should be separated and collected so that they can subsequently be recovered or destroyed. They should be collected as follows:

- Each type of liquid waste should go into a separate container.

- The container should be labelled with the name of the product or with the major component of the waste by volume.

- Each laboratory, except the pathological anatomy laboratory, should provide its own receptacles for collecting liquid wastes, each correctly labelled with the material or family of materials it contains. Periodically (ideally at the end of each working day), these should be emptied into specifically labelled containers, which are kept in the room until the assigned waste removal subcontractor collects them at appropriate intervals.

- Once each receptacle is correctly labelled with the product or the family of products it contains, it should be placed in specific containers in the labs.

- The person responsible for the laboratory, or someone directly delegated by that person, will sign and stamp a control ticket. The subcontractor will then be responsible for delivering the control ticket to the department that supervises safety, hygiene and the environment.

Mixtures of chemical and biological liquid wastes

Because the treatment of chemical wastes is more aggressive than that of biological wastes, mixtures of the two should be treated following the steps indicated for liquid chemical wastes. Labels on the containers should note the presence of biological wastes.
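The routing rules for liquid wastes given above can be expressed as a short decision procedure. This is a minimal sketch under stated assumptions: the names `LiquidWaste` and `route_liquid_waste` and the action strings are hypothetical, and the choice among the three liquid chemical waste groups for non-hazardous chemicals is left to the local sanitation rules, since the text gives no criteria for it.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

# Properties that, per the text, require separate collection for recovery or destruction.
HAZARD_PROPERTIES = frozenset({"toxic", "noxious", "flammable", "corrosive", "carcinogenic"})

# Hypothetical action descriptions.
COLLECT_SEPARATELY = "collect separately in a labelled container"
CHEMICAL_SCHEME = "apply the three-group liquid chemical waste classification"
TREAT_BEFORE_DRAIN = "collect in a specific container and treat before discharge"
POUR_INTO_DRAIN = "pour directly into the drain without splashing"


@dataclass(frozen=True)
class LiquidWaste:
    has_chemical: bool
    has_biological: bool
    from_infectious_patient: bool = False
    is_microbiology_culture: bool = False
    hazards: FrozenSet[str] = frozenset()


def route_liquid_waste(w: LiquidWaste) -> Tuple[str, bool]:
    """Return (action, label_must_note_biological)."""
    if w.has_chemical:
        # Chemical treatment is the more aggressive, so mixtures follow the chemical route,
        # and the label must then mention the biological content.
        note_biological = w.has_biological
        if w.hazards & HAZARD_PROPERTIES:
            return COLLECT_SEPARATELY, note_biological
        return CHEMICAL_SCHEME, note_biological
    if w.has_biological:
        # Exceptions to direct drain disposal: infectious patients and microbiology cultures.
        if w.from_infectious_patient or w.is_microbiology_culture:
            return TREAT_BEFORE_DRAIN, False
        return POUR_INTO_DRAIN, False
    raise ValueError("waste must be chemical, biological or both")
```

Returning the labelling requirement alongside the action keeps the mixture rule from being lost when the waste is handed on.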

Any liquid or solid materials that are carcinogenic, mutagenic or teratogenic should be disposed of in rigid colour-coded containers specifically designed and labelled for this type of waste.

Dead animals that have been inoculated with biohazardous substances will be disposed of in closed rigid containers, which will be sterilized before being reused.

Disposal of Sharp and Pointed Instruments

Sharp and pointed instruments (e.g., needles and lancets), once used, must be placed in specifically designed, rigid “sharps” containers that have been strategically placed throughout the hospital. These wastes will be disposed of as hazardous wastes even if used on uninfected patients. They must never be disposed of except in the rigid sharps container.

All HCWs must be repeatedly reminded of the danger of accidental cuts or punctures with this type of material, and instructed to report them when they occur, so that appropriate preventive measures may be instituted. They should be specifically instructed not to attempt to recap used hypodermic needles before dropping them into the sharps container.

Whenever possible, the needle should be separated from the syringe and placed in the sharps container without recapping; the syringe without its needle can generally be disposed of as Group II waste. Many sharps containers have a special fitting for separating the syringe without risk of a needlestick to the worker; this saves space in the sharps containers for more needles. The sharps containers, which should never be opened by hospital personnel, should be removed by designated janitorial personnel and forwarded for appropriate disposal of their contents.

If it is not possible to separate the needle in adequately safe conditions, the whole needle-syringe combination must be considered as biohazardous and must be placed in the rigid sharps containers.
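The needle-and-syringe rule above reduces to a single decision. The following minimal sketch assumes a hypothetical function name, `dispose_used_needle_syringe`, and hypothetical stream names; it only restates the text's rule that needles are never recapped and that the bare syringe can generally, not always, go to Group II.

```python
from typing import Dict, List


def dispose_used_needle_syringe(can_separate_safely: bool) -> Dict[str, List[str]]:
    """Return which items go to the sharps container and which to Group II waste."""
    if can_separate_safely:
        # Needle goes uncapped into the rigid sharps container;
        # the syringe without its needle can generally be treated as Group II.
        return {"sharps_container": ["needle"], "group_ii": ["syringe"]}
    # Otherwise the whole needle-syringe combination is biohazardous
    # and goes into the rigid sharps container.
    return {"sharps_container": ["needle", "syringe"], "group_ii": []}
```

In both branches the needle ends up in the sharps container; the only variable is whether the syringe can be diverted to save space.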

These sharps containers will be removed by the janitorial personnel.

Staff Training

There must be an ongoing training programme in waste management for all hospital personnel aimed at instilling in staff at all levels the importance of always following the established guidelines for collecting, storing and disposing of wastes of all kinds. It is particularly important that the housekeeping and janitorial staffs be trained in the details of the protocols for recognizing and dealing with the various categories of hazardous waste. The janitorial, security and fire-fighting staff must also be drilled in the correct course of action in the event of an emergency.

It is also important for the janitorial personnel to be informed about and trained in the correct course of action in case of an accident.

Particularly when the programme is first launched, the janitorial staff should be instructed to report any problems that may hinder their performance of these assigned duties. They may be given special cards or forms on which to record such findings.

Waste Management Committee

To monitor the performance of the waste management programme and resolve any problems that may arise as it is implemented, a permanent waste management committee should be created and meet regularly, quarterly at a minimum. The committee should be accessible to any member of the hospital staff with a waste disposal problem or concern and should have access as needed to top management.

Implementing the Plan

The way the waste management programme is implemented may well determine whether it succeeds or not.

Since the support and cooperation of the various hospital committees and departments are essential, details of the programme should be presented to such groups as the administrative teams of the hospital, the health and safety committee and the infection control committee. It is also necessary to obtain validation of the programme from such community agencies as the departments of health, environmental protection and sanitation. Each of these may have helpful modifications to suggest, particularly with respect to the way the programme impinges on their areas of responsibility.

Once the programme design has been finalized, a pilot test in a selected area or department should allow rough edges to be polished and any unforeseen problems to be resolved. When this has been completed and its results analysed, the programme may be implemented progressively throughout the entire medical centre. A presentation, with audio-visual aids and distribution of descriptive literature, can be delivered in each unit or department, followed by delivery of bags and/or containers as required. Following the start-up of the programme, the department or unit should be visited so that any needed revisions may be instituted. In this manner, the participation and support of the entire hospital staff, without which the programme would never succeed, can be earned.

Hospitals: Environmental and Public Health Issues

A hospital is not an isolated social environment; it has, given its mission, very serious intrinsic social responsibilities. A hospital needs to be integrated with its surroundings and should minimize its impact upon them, thus contributing to the welfare of the people who live near it.