In risk perception, two psychological processes may be distinguished: hazard perception and risk assessment. Saari (1976) defines the information processed during the accomplishment of a task in terms of the following two components: (1) the information required to execute a task (hazard perception) and (2) the information required to keep existing risks under control (risk assessment). For instance, when construction workers on the top of ladders who are drilling holes in a wall have to simultaneously keep their balance and automatically coordinate their body-hand movements, hazard perception is crucial to coordinate body movement to keep dangers under control, whereas conscious risk assessment plays only a minor role, if any. Human activities generally seem to be driven by automatic recognition of signals which trigger a flexible, yet stored hierarchy of action schemata. (The more deliberate process leading to the acceptance or rejection of risk is discussed in another article.)

Risk Perception

From a technical point of view, a hazard represents a source of energy with the potential of causing immediate injury to personnel and damage to equipment, environment or structure. Workers may also be exposed to diverse toxic substances, such as chemicals, gases or radioactivity, some of which cause health problems. Unlike hazardous energies, which have an immediate effect on the body, toxic substances have quite different temporal characteristics, ranging from immediate effects to delays over months and years. Often there is an accumulating effect of small doses of toxic substances which are imperceptible to the exposed workers.

Conversely, there may be no harm to persons from hazardous energy or toxic substances provided that no danger exists. Danger expresses the relative exposure to hazard. In fact there may be little danger in the presence of some hazards as a result of the provision of adequate precautions. There is voluminous literature pertaining to factors people use in the final assessment of whether a situation is determined hazardous, and, if so, how hazardous. This has become known as risk perception. (The word risk is being used in the same sense that danger is used in occupational safety literature; see Hoyos and Zimolong 1988.)

Risk perception deals with the understanding of perceptual realities and indicators of hazards and toxic substances—that is, the perception of objects, sounds, odorous or tactile sensations. Fire, heights, moving objects, loud noise and acid smells are some examples of the more obvious hazards which do not need to be interpreted. In some instances, people are similarly reactive in their responses to the sudden presence of imminent danger. The sudden occurrence of loud noise, loss of balance, and objects rapidly increasing in size (and so appearing about to strike one’s body), are fear stimuli, prompting automatic responses such as jumping, dodging, blinking and clutching. Other reflex reactions include rapidly withdrawing a hand which has touched a hot surface. Rachman (1974) concludes that the prepotent fear stimuli are those which have the attributes of novelty, abruptness and high intensity.

Probably most hazards and toxic substances are not directly perceptible to the human senses, but are inferred from indicators. Examples are electricity; colourless, odourless gases such as methane and carbon monoxide; x-rays and radioactive substances; and oxygen-deficient atmospheres. Their presence must be signalled by devices which translate the presence of the hazard into something which is recognizable. Electrical current can be detected with the help of a current-checking device; similarly, the gauges and meters in a control room register signals that indicate normal and abnormal levels of temperature and pressure at a particular state of a chemical process. There are also situations where hazards exist which are not perceivable at all or cannot be made perceivable at a given time. One example is the danger of infection when one opens blood samples for medical tests. The knowledge that hazards exist must be deduced from one’s knowledge of the common principles of causality or acquired by experience.

Risk Assessment

The next step in information-processing is risk assessment, which refers to the decision process as it is applied to such issues as whether and to what extent a person will be exposed to danger. Consider, for instance, driving a car at high speed. From the perspective of the individual, such decisions have to be made only in unexpected circumstances such as emergencies. Most of the required driving behaviour is automatic and runs smoothly without continuous attentional control and conscious risk assessment.

Hacker (1987) and Rasmussen (1983) distinguished three levels of behaviour: (1) skill-based behaviour, which is almost entirely automatic; (2) rule-based behaviour, which operates through the application of consciously chosen but fully pre-programmed rules; and (3) knowledge-based behaviour, under which all sorts of conscious planning and problem solving are grouped. At the skill-based level, an incoming piece of information is connected directly to a stored response that is executed automatically and carried out without conscious deliberation or control. If no automatic response is available or an extraordinary event occurs, the risk assessment process moves to the rule-based level, where the appropriate action is selected from a set of stored procedures and then executed. Each of the steps involves a finely tuned perceptual-motor programme, and usually no step in this organizational hierarchy involves any decisions based on risk considerations. Only at the transitions is a conditional check applied, just to verify whether progress is according to plan. If not, automatic control is halted and the ensuing problem is solved at a higher level.

Reason’s GEMS (1990) model describes how the transition from automatic control to conscious problem solving takes place when exceptional circumstances arise or novel situations are encountered. Risk assessment is absent at the bottom level, but may be fully present at the top level. At the middle level one can assume some sort of “quick-and-dirty” risk assessment, while Rasmussen excludes any type of assessment that is not incorporated in fixed rules. Much of the time there will be no conscious perception or consideration of hazards as such. “The lack of safety consciousness is both a normal and a healthy state of affairs, despite what has been said in countless books, articles and speeches. Being constantly conscious of danger is a reasonable definition of paranoia” (Hale and Glendon 1987). People doing their jobs on a routine basis rarely consider these hazards or accidents in advance: they run risks, but they do not take them.
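The escalation between the three levels of behaviour described above can be pictured as a simple lookup that falls through to the next level when nothing matches. The following is only a toy sketch under stated assumptions: the function name `select_level`, the two dictionaries and all example signals and actions are hypothetical illustrations, not part of the models of Hacker, Rasmussen or Reason.

```python
from enum import Enum


class Level(Enum):
    SKILL = "skill-based"
    RULE = "rule-based"
    KNOWLEDGE = "knowledge-based"


def select_level(signal, automatic_responses, stored_rules):
    """Return the (level, action) pair that would handle a signal.

    Toy model only: a signal with a stored automatic response is handled
    at the skill-based level; otherwise a stored rule is looked up; if
    neither exists, control passes to conscious problem solving.
    """
    if signal in automatic_responses:
        return Level.SKILL, automatic_responses[signal]
    if signal in stored_rules:
        return Level.RULE, stored_rules[signal]
    return Level.KNOWLEDGE, "conscious problem solving"


# Hypothetical example data, loosely based on situations named in the text.
automatic_responses = {"hot surface touched": "withdraw hand"}
stored_rules = {"gauge deviation": "scan other gauges and meters"}

for signal in ("hot surface touched", "gauge deviation", "unfamiliar pattern"):
    level, action = select_level(signal, automatic_responses, stored_rules)
    print(f"{signal}: {level.value} -> {action}")
```

In this sketch no risk consideration enters at the first two levels; only the final fallback leaves room for deliberate assessment, which mirrors the point that risk assessment is absent at the bottom level and may be fully present at the top.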

Hazard Perception

Perception of hazards and toxic substances, in the sense of direct perception of shape and colour, loudness and pitch, odours and vibrations, is restricted by the capacity limitations of the perceptual senses, which can be temporarily impaired due to fatigue, illness, alcohol or drugs. Factors such as glare, brightness or fog can put heavy stress on perception, and dangers can fail to be detected because of distractions or insufficient alertness.

As has already been mentioned, not all hazards are directly perceptible to the human senses. Most toxic substances are not even visible. Ruppert (1987) found in his investigation of an iron and steel factory, of municipal garbage collecting and of medical laboratories, that from 2,230 hazard indicators named by 138 workers, only 42% were perceptible by the human senses. Twenty-two per cent of the indicators have to be inferred from comparisons with standards (e.g., noise levels). Hazard perception is based in 23% of cases on clearly perceptible events which have to be interpreted with respect to knowledge about hazardousness (e.g., a glossy surface of a wet floor indicates slippery). In 13% of reports, hazard indicators can be retrieved only from memory of proper steps to be taken (e.g., current in a wall socket can be made perceivable only by the proper checking device). These results demonstrate that the requirements of hazard perception range from pure detection and perception to elaborate cognitive inference processes of anticipation and assessment. Cause-and-effect relationships are sometimes unclear, scarcely detectable, or misinterpreted, and delayed or accumulating effects of hazards and toxic substances are likely to impose additional burdens on individuals.

Hoyos et al. (1991) have compiled a comprehensive picture of hazard indicators, behavioural requirements and safety-relevant conditions in industry and public services. A Safety Diagnosis Questionnaire (SDQ) has been developed to provide a practical instrument to analyse hazards and dangers through observation (Hoyos and Ruppert 1993). More than 390 workplaces, and working and environmental conditions in 69 companies concerned with agriculture, industry, manual work and the service industries, have been assessed. Because the companies had accident rates greater than 30 accidents per 1,000 employees with a minimum of 3 lost working days per accident, there appears to be a bias in these studies towards dangerous worksites. Altogether 2,373 hazards have been reported by the observers using the SDQ, indicating a detection rate of 6.1 hazards per workplace; between 7 and 18 hazards were detected at approximately 40% of all workplaces surveyed. The surprisingly low mean rate of 6.1 hazards per workplace has to be interpreted in light of the safety measures broadly introduced in industry and agriculture during the last 20 years. Hazards reported do not include those attributable to toxic substances, nor hazards controlled by technical safety devices and measures, and thus reflect the distribution of “residual hazards”.

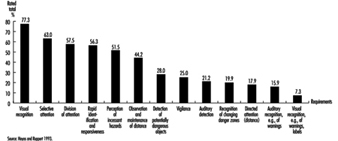

Figure 1 presents an overview of the requirements for perceptual processes of hazard detection and perception. Observers had to assess all hazards at a particular workplace with respect to 13 requirements, as indicated in the figure. On average, 5 requirements per hazard were identified, including visual recognition, selective attention, auditory recognition and vigilance. As expected, visual recognition dominates by comparison with auditory recognition (77.3% of the hazards were detected visually and only 21.2% by auditory detection). In 57% of all hazards observed, workers had to divide their attention between tasks and hazard control, and divided attention is a very strenuous mental achievement likely to contribute to errors. Accidents have frequently been traced back to failures in attention while performing dual tasks. Even more alarming is the finding that in 56% of all hazards, workers had to cope with rapid activities and responsiveness to avoid being hit and injured. Only 15.9% and 7.3% of all hazards were indicated by acoustical or optical warnings, respectively: consequently, hazard detection and perception were self-initiated.

Figure 1. Detection and perception of hazard indicators in industry

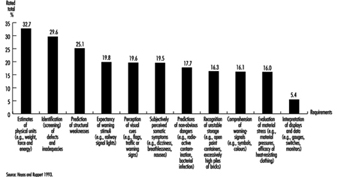

In some cases (16.1%) perception of hazards is supported by signs and warnings, but usually, workers rely on knowledge, training and work experience. Figure 2 shows the requirements of anticipation and assessment required to control hazards at the worksite. The core characteristic of all activities summarized in this figure is the need for knowledge and experience gained in the work process, including: technical knowledge about weight, forces and energies; training to identify defects and inadequacies of work tools and machinery; and experience to predict structural weaknesses of equipment, buildings and material. As Hoyos et al. (1991) have demonstrated, workers have little knowledge relating to hazards, safety rules and proper personal preventive behaviour. Only 60% of the construction workers and 61% of the auto-mechanics questioned knew the right solutions to the safety-related problems generally encountered at their workplaces.

Figure 2. Anticipation and assessment of hazard indicators

The analysis of hazard perception indicates that different cognitive processes are involved, such as visual recognition; selective and divided attention; rapid identification and responsiveness; estimates of technical parameters; and predictions of non-observable hazards and dangers. In fact, hazards and dangers are frequently unknown to job incumbents: they impose a heavy burden on people who have to cope sequentially with dozens of visual- and auditory-based requirements and are a source of proneness to error when work and hazard control are performed simultaneously. This calls for much more emphasis to be placed on regular analysis and identification of hazards and dangers at the workplace. In several countries, formal risk assessments of workplaces are mandatory: for example, the health and safety Directives of the EEC require risk assessment of computer workplaces prior to commencing work in them, or when major alterations at work have been introduced; and the US Occupational Safety and Health Administration (OSHA) requires regular hazard risk analyses of process units.

Coordination of Work and Hazard Control

As Hoyos and Ruppert (1993) point out, (1) work and hazard control may require attention simultaneously; (2) they may be managed alternately in sequential steps; or (3) prior to the commencement of work, precautionary measures may be taken (e.g., putting on a safety helmet).

In the case of simultaneously occurring requirements, hazard control is based on visual, auditory and tactile recognition. In fact, it is difficult to separate work and hazard control in routine tasks. For example, a source of constant danger is present when performing the task of cutting off threads from yarns in a cotton mill, a task requiring a sharp knife. The only two types of protection against cuts are skill in wielding the knife and use of protective equipment. If either or both are to succeed, they must be totally incorporated into the worker’s action sequences. Habits such as cutting in a direction away from the hand which is holding the thread must be ingrained into the worker’s skills from the outset. In this example hazard control is fully integrated into task control; no separate process of hazard detection is required. Probably there is a continuum of integration into work, the degree depending on the skill of the worker and the requirements of the task. At one end, hazard perception and control are inherently integrated into work skills; at the other end, task execution and hazard control are distinctly separate activities.

Work and hazard control may be carried out alternately, in sequential steps, when the danger potential steadily increases during the task or there is an abrupt, alerting danger signal. As a consequence, workers interrupt the task or process and take preventive measures. The checking of a gauge is a typical example of a simple diagnostic test. A control room operator detects a deviation from standard level on a gauge which at first glance does not constitute a dramatic sign of danger, but which prompts the operator to search further on other gauges and meters. If there are other deviations present, a rapid series of scanning activities will be carried out at the rule-based level. If deviations on other meters do not fit into a familiar pattern, the diagnosis process shifts to the knowledge-based level.

In most cases, guided by some strategies, signals and symptoms are actively looked for to locate causes of the deviations (Konradt 1994). The allocation of resources of the attentional control system is set to general monitoring. A sudden signal, such as a warning tone or, as in the case above, various deviations of pointers from a standard, shifts the attentional control system onto the specific topic of hazard control. It initiates an activity which seeks to identify the causes of the deviations on the rule-based level, or in case of misfortune, on the knowledge-based level (Reason 1990).

Preventive behaviour is the third type of coordination. It occurs prior to work, and the most prominent example is the use of personal protective equipment (PPE).

The Meanings of Risk

Definitions of risks and methods to assess risks in industry and society have been developed in economics, engineering, chemistry, safety sciences and ergonomics (Hoyos and Zimolong 1988). There is a wide variety of interpretations of the term risk. On the one hand, it is interpreted to mean “probability of an undesired event”. It is an expression of the likelihood that something unpleasant will happen. A more neutral definition of risk is used by Yates (1992a), who argues that risk should be perceived as a multidimensional concept that as a whole refers to the prospect of loss. Important contributions to our current understanding of risk assessment in society have come from geography, sociology, political science, anthropology and psychology. Research focused originally on understanding human behaviour in the face of natural hazards, but it has since broadened to incorporate technological hazards as well. Sociological research and anthropological studies have shown that assessment and acceptance of risks have their roots in social and cultural factors. Short (1984) argues that responses to hazards are mediated by social influences transmitted by friends, family, co-workers and respected public officials. Psychological research on risk assessment originated in empirical studies of probability assessment, utility assessment and decision-making processes (Edwards 1961).

Technical risk assessment usually focuses on the potential for loss, which includes the probability of the loss occurring and the magnitude of the given loss in terms of death, injury or damages. Risk is the probability that damage of a specified type will occur in a given system over a defined time period. Different assessment techniques are applied to meet the various requirements of industry and society. Formal analysis methods to estimate degrees of risk are derived from different kinds of fault tree analyses; from data banks comprising error probabilities, such as THERP (Swain and Guttmann 1983); or from decomposition methods based on subjective ratings, such as SLIM-MAUD (Embrey et al. 1984). These techniques differ considerably in their potential to predict future events such as mishaps, errors or accidents. In terms of error prediction in industrial systems, experts attained the best results with THERP. In a simulation study, Zimolong (1992) found a close match between objectively derived error probabilities and their estimates derived with THERP. Zimolong and Trimpop (1994) argued that such formal analyses have the highest “objectivity” if conducted properly, as they separate facts from beliefs and take many of the judgemental biases into account.

The public’s sense of risk depends on more than the probability and magnitude of loss. It may depend on factors such as potential degree of damage, unfamiliarity with possible consequences, the involuntary nature of exposure to risk, the uncontrollability of damage, and possible biased media coverage. The feeling of control in a situation may be a particularly important factor. For many, flying seems very unsafe because one has no control over one’s fate once in the air. Rumar (1988) found that the perceived risk in driving a car is typically low, since in most situations the drivers believe in their own ability to achieve control and are accustomed to the risk. Other research has addressed emotional reactions to risky situations. The potential for serious loss generates a variety of emotional reactions, not all of which are necessarily unpleasant. There is a fine line between fear and excitement. Again, a major determinant of perceived risk and of affective reactions to risky situations seems to be a person’s feeling of control or lack thereof. As a consequence, for many people, risk may be nothing more than a feeling.

Decision Making under Risk

Risk taking may be the result of a deliberate decision process entailing several activities: identification of possible courses of action; identification of consequences; evaluation of the attractiveness and chances of the consequences; and deciding according to a combination of all the previous assessments. The overwhelming evidence that people often make poor choices in risky situations implies the potential to make better decisions. In 1738, Bernoulli defined the notion of a “best bet” as one which maximizes the expected utility (EU) of the decision. The EU concept of rationality asserts that people ought to make decisions by evaluating uncertainties and considering their choices, the possible consequences, and their preferences for them (von Neumann and Morgenstern 1947). Savage (1954) later generalized the theory to allow probability values to represent subjective or personal probabilities.
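The idea of a “best bet” as the option with the highest expected utility can be illustrated with a short calculation. This is a minimal sketch under stated assumptions: the option names, probabilities and payoffs are invented for illustration, and the logarithmic utility function is just one possible choice of a concave (risk-averse) utility, not something prescribed by the text.

```python
import math


def expected_utility(outcomes, utility):
    """Probability-weighted sum of utilities for one option.

    outcomes: list of (probability, payoff) pairs; probabilities must sum to 1.
    utility:  function mapping a payoff to its utility.
    """
    total_p = sum(p for p, _ in outcomes)
    if not math.isclose(total_p, 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * utility(x) for p, x in outcomes)


def best_bet(options, utility):
    """Return the name of the option with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name], utility))


def linear_utility(x):
    """Risk-neutral utility: the payoff itself."""
    return x


def log_utility(x):
    """Concave utility (an illustrative assumption): natural logarithm."""
    return math.log(x)


# Hypothetical options with purely illustrative numbers.
options = {
    "safe": [(1.0, 100.0)],
    "gamble": [(0.5, 50.0), (0.5, 160.0)],
}

print(best_bet(options, linear_utility))  # gamble: 105 beats 100
print(best_bet(options, log_utility))     # safe: ln(100) beats the gamble
```

The same two options are ranked differently depending on the utility function, which shows why the theory separates the probabilities of the consequences from the decision maker's preferences for them.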

Subjective expected utility (SEU) is a normative theory which describes how people should proceed when making decisions. Slovic, Kunreuther and White (1974) stated, “Maximization of expected utility commands respect as a guideline for wise behaviour because it is deduced from axiomatic principles that presumably would be accepted by any rational man.” A good deal of debate and empirical research has centred around the question of whether this theory could also describe both the goals that motivate actual decision makers and the processes they employ when reaching their decisions. Simon (1959) criticized it as a theory of a person selecting among fixed and known alternatives, to each of which known consequences are attached. Some researchers have even questioned whether people should obey the principles of expected utility theory, and after decades of research, SEU applications remain controversial. Research has revealed that psychological factors play an important role in decision making and that many of these factors are not adequately captured by SEU models.

In particular, research on judgement and choice has shown that people have methodological deficiencies such as difficulty in understanding probabilities, neglect of the effect of sample sizes, reliance on misleading personal experiences, holding judgements of fact with unwarranted confidence, and misjudging risks. People are more likely to underestimate risks if they have been voluntarily exposed to risks over a longer period, such as living in areas subject to floods or earthquakes. Similar results have been reported from industry (Zimolong 1985). Shunters, miners, and forest and construction workers all dramatically underestimate the riskiness of their most common work activities as compared to objective accident statistics; however, they tend to overestimate any obviously dangerous activities of fellow workers when required to rate them.

Unfortunately, experts’ judgements appear to be prone to many of the same biases as those of the public, particularly when experts are forced to go beyond the limits of available data and rely upon their intuitions (Kahneman, Slovic and Tversky 1982). Research further indicates that disagreements about risk should not be expected to disappear completely even when sufficient evidence is available. Strong initial views are resistant to change because they influence the way that subsequent information is interpreted. New evidence appears reliable and informative if it is consistent with one’s initial beliefs; contrary evidence tends to be dismissed as unreliable, erroneous or unrepresentative (Nisbett and Ross 1980). When people lack strong prior opinions, the opposite situation prevails: they are at the mercy of the formulation of the problem. Presenting the same information about risk in different ways (e.g., mortality rates as opposed to survival rates) alters their perspectives and their actions (Tversky and Kahneman 1981). The discovery of this set of mental strategies, or heuristics, that people implement in order to structure their world and predict their future courses of action, has led to a deeper understanding of decision making in risky situations. Although these rules are valid in many circumstances, in others they lead to large and persistent biases with serious implications for risk assessment.

Personal Risk Assessment

The most common approach in studying how people make risk assessments uses psychophysical scaling and multivariate analysis techniques to produce quantitative representations of risk attitudes and assessment (Slovic, Fischhoff and Lichtenstein 1980). Numerous studies have shown that risk assessment based on subjective judgements is quantifiable and predictable. They also have shown that the concept of risk means different things to different people. When experts judge risk and rely on personal experience, their responses correlate highly with technical estimates of annual fatalities. Laypeople’s judgements of risk are related more to other characteristics, such as catastrophic potential or threat to future generations; as a result, their estimates of loss probabilities tend to differ from those of experts.

Laypeople’s risk assessments of hazards can be grouped into two factors (Slovic 1987). One of the factors reflects the degree to which a risk is understood by people. Understanding a risk relates to the degree to which it is observable, is known to those exposed, and can be detected immediately. The other factor reflects the degree to which the risk evokes a feeling of dread. Dread is related to the degree of uncontrollability, of serious consequences, of high risk to future generations, and of involuntary increase of risk. The higher a hazard’s score on the latter factor, the higher its assessed risk, the more people want to see its current risks reduced, and the more they want to see strict regulation employed to achieve the desired reduction in risk. Consequently, many conflicts about risk may result from experts’ and laypeople’s views originating from different definitions of the concept. In such cases, expert citations of risk statistics or of the outcome of technical risk assessments will do little to change people’s attitudes and assessments (Slovic 1993).

The characterization of hazards in terms of “knowledge” and “threat” leads back to the earlier discussion of hazard and danger signals in industry, which were described in terms of “perceptibility”. Forty-two per cent of the hazard indicators in industry are directly perceptible by human senses, 45% have to be inferred from comparisons with standards or interpreted using knowledge about hazardousness, and 13% have to be retrieved from memory. Perceptibility, knowledge and the threats and thrills of hazards are dimensions which are closely related to people’s experience of hazards and perceived control; however, to understand and predict individual behaviour in the face of danger we have to gain a deeper understanding of their relationships with personality, requirements of tasks, and societal variables.

Psychometric techniques seem well-suited to identify similarities and differences among groups with regard to both personal habits of risk assessment and to attitudes. However, other psychometric methods such as multidimensional analysis of hazard similarity judgements, applied to quite different sets of hazards, produce different representations. The factor-analytical approach, while informative, by no means provides a universal representation of hazards. Another weakness of psychometric studies is that people face risk only in written statements, and divorce the assessment of risk from behaviour in actual risky situations. Factors that affect a person’s considered assessment of risk in a psychometric experiment may be trivial when confronted with an actual risk. Howarth (1988) suggests that such conscious verbal knowledge usually reflects social stereotypes. By contrast, risk-taking responses in traffic or work situations are controlled by the tacit knowledge that underlies skilled or routine behaviour.

Most of the personal risk decisions in everyday life are not conscious decisions at all. People are, by and large, not even aware of risk. In contrast, the underlying notion of psychometric experiments is presented as a theory of deliberate choice. Assessments of risks usually performed by means of a questionnaire are conducted deliberately in an “armchair” fashion. In many ways, however, a person’s responses to risky situations are more likely to result from learned habits that are automatic, and which are below the general level of awareness. People do not normally evaluate risks, and therefore it cannot be argued that their way of evaluating risk is inaccurate and needs to be improved. Most risk-related activities are necessarily executed at the bottom level of automated behaviour, where there is simply no room for consideration of risks. The notion that risks, identified after the occurrence of accidents, are accepted after a conscious analysis, may have emerged from a confusion between normative SEU and descriptive models (Wagenaar 1992). Less attention was paid to the conditions in which people will act automatically, follow their gut feeling, or accept the first choice that is offered. However, there is a widespread acceptance in society and among health and safety professionals that risk taking is a prime factor in causing mishaps and errors. In a representative sample of Swedes aged between 18 and 70 years, 90% agreed that risk taking is the major source of accidents (Hovden and Larsson 1987).

Preventive Behaviour

Individuals may deliberately take preventive measures to exclude hazards, to attenuate the energy of hazards or to protect themselves by precautionary measures (for instance, by wearing safety glasses and helmets). Often people are required by a company’s directives or even by law to comply with protective measures. For example, a roofer builds a scaffolding prior to working on a roof to prevent the eventuality of suffering a fall. This choice might be the result of a conscious risk assessment process of hazards and of one’s own coping skills, or, more simply, it may be the outcome of a habituation process, or it may be a requirement which is enforced by law. Often warnings are used to indicate mandatory preventive actions.

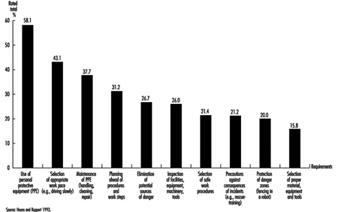

Several forms of preventive activities in industry have been analysed by Hoyos and Ruppert (1993). Some of them are shown in figure 3, together with their frequency of requirement. As indicated, preventive behaviour is partly self-controlled and partly enforced by the legal standards and requirements of the company. Preventive activities comprise some of the following measures: planning work procedures and steps ahead; use of PPE; application of safety work technique; selection of safe work procedures by means of proper material and tools; setting an appropriate work pace; and inspection of facilities, equipment, machinery and tools.

Figure 3. Typical examples of personal preventive behaviour in industry and frequency of preventive measure

Personal Protective Equipment

The most frequent preventive measure required is the use of PPE. Together with correct handling and maintenance, it is by far the most common requirement in industry. There are major differences in the usage of PPE between companies. In some of the best companies, mainly in chemical plants and petroleum refineries, the usage of PPE approaches 100%. In contrast, in the construction industry, safety officials have problems even in attempts to introduce particular PPE on a regular basis. It is doubtful that risk perception is the major factor which makes the difference. Some of the companies have successfully enforced the use of PPE, which then becomes habitual (e.g., the wearing of safety helmets), by establishing the “right safety culture”, and have subsequently altered personal risk assessment. Slovic (1987) in his short discussion on the usage of seat-belts shows that about 20% of road users wear seat-belts voluntarily, 50% would use them only if it were made mandatory by law, and beyond this number, only control and punishment will serve to improve automatic use.

Thus, it is important to understand what factors govern risk perception. However, it is equally important to know how to change behaviour and, subsequently, how to alter risk perception. It seems that many more precautionary measures need to be undertaken at the level of the organization, among the planners, designers, managers and those authorities whose decisions have implications for many thousands of people. Up to now, there is little understanding at these levels of the factors on which risk perception and assessment depend. If companies are seen as open systems, in which different organizational levels mutually influence each other and are in steady exchange with society, a systems approach may reveal the factors which constitute and influence risk perception and assessment.

Warning Labels

The use of labels and warnings to combat potential hazards is a controversial procedure for managing risks. Too often they are seen as a way for manufacturers to avoid responsibility for unreasonably risky products. Labels will be successful only if the information they contain is read and understood by members of the intended audience. Frantz and Rhoades (1993) found that 40% of clerical personnel filling a file cabinet noticed a warning label placed on the top drawer of the cabinet, 33% read part of it, and no one read the entire label. Contrary to expectation, 20% complied completely by not placing any material in the top drawer first. Obviously it is insufficient to scan the most important elements of the notice. Lehto and Papastavrou (1993) provided a thorough analysis of findings on warning signs and labels by examining receiver-, task-, product- and message-related factors. Furthermore, they contributed significantly to understanding the effectiveness of warnings by considering different levels of behaviour.

The discussion of skilled behaviour suggests that a warning notice will have little impact on the way people perform a familiar task, as it simply will not be read. Lehto and Papastavrou (1993) concluded from research findings that interrupting familiar task performance may effectively increase the likelihood that workers notice warning signs or labels. In the experiment by Frantz and Rhoades (1993), the proportion of subjects who noticed the warning labels on filing cabinets increased to 93% when the top drawer was sealed shut with a warning indicating that a label could be found within the drawer. The authors concluded, however, that ways of interrupting skill-based behaviour are not always available and that their effectiveness can diminish considerably after initial use.

At a rule-based level of performance, warning information should be integrated into the task (Lehto 1992) so that it can be easily mapped to immediately relevant actions. In other words, people should be able to carry out the task by following the directions of the warning label. Frantz (1992) found that 85% of subjects expressed the need for a requirement on the directions for use of a wood preservative or drain cleaner. On the negative side, studies of comprehension have revealed that people may poorly comprehend the symbols and text used in warning signs and labels. In particular, Koslowski and Zimolong (1992) found that chemical workers understood the meaning of only approximately 60% of the most important warning signs used in the chemical industry.

At a knowledge-based level of behaviour, people seem likely to notice warnings when they are actively looking for them. They expect to find warnings close to the product. Frantz (1992) found that subjects in unfamiliar settings complied with instructions 73% of the time if they read them, compared to only 9% when they did not read them. Once read, the label must be understood and recalled. Several studies of comprehension and memory also imply that people may have trouble remembering the information they read from either instruction or warning labels. In the United States, the National Research Council (1989) provides some assistance in designing warnings. Its report emphasizes the importance of two-way communication in enhancing understanding: the communicator should facilitate information feedback and questions on the part of the recipient. The conclusions of the report are summarized in two checklists, one for use by managers, the other serving as a guide for the recipient of the information.