The concept of risk acceptance asks the question, “How safe is safe enough?” or, in more precise terms, “The conditional nature of risk assessment raises the question of which standard of risk we should accept against which to calibrate human biases” (Pidgeon 1991). This question becomes important in issues such as: (1) Should there be an additional containment shell around nuclear power plants? (2) Should schools containing asbestos be closed? or (3) Should one avoid all possible trouble, at least in the short run? Some of these questions are aimed at governments or other regulatory bodies; others are aimed at the individual who must decide between certain actions and possible uncertain dangers.

Whether to accept or reject a risk is the outcome of decisions aimed at determining the optimal level of risk for a given situation. In many instances, these decisions follow almost automatically from perceptions and habits acquired through experience and training. However, whenever a new situation arises or a seemingly familiar task changes, as in non-routine or semi-routine work, decision making becomes more complex. To understand why people accept certain risks and reject others, we first need to define risk acceptance. Next, the psychological processes that lead to acceptance or rejection, and the factors that influence them, have to be explained. Finally, methods for changing levels of risk acceptance that are too high or too low will be addressed.

Understanding Risk

Generally speaking, whenever risk is not rejected, people have accepted it, whether voluntarily, thoughtlessly or habitually. Thus, for example, when people participate in traffic, they accept the danger of damage, injury, death and pollution in exchange for the benefits of increased mobility; when they decide whether or not to undergo surgery, they judge that the benefits of the chosen option outweigh its costs relative to the alternative; and when they invest money in the financial market or decide to change business products, they accept certain financial dangers and opportunities under some degree of uncertainty. Finally, every job carries some probability of injury or fatality, which varies between occupations and can be estimated from statistical accident history.

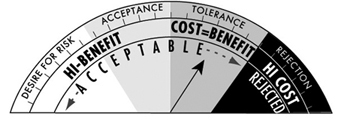

Defining risk acceptance by referring only to what has not been rejected leaves two important issues open: (1) what exactly is meant by the term risk, and (2) the often-made assumption that risks are merely potential losses to be avoided, whereas in reality there is a difference between merely tolerating risks, fully accepting them, or even wishing for them to occur in order to enjoy thrill and excitement. These facets might all be expressed through the same behaviour (such as participating in traffic) but involve different underlying cognitive, emotional and physiological processes. A merely tolerated risk clearly involves a different level of commitment than a risk sought out for the thrill or “risky” sensation it provides. Figure 1 summarizes facets of risk acceptance.

Figure 1. Facets of risk acceptance and risk rejection

If one looks up the term risk in the dictionaries of several languages, it often has the double meaning of “chance, opportunity” on the one hand and “danger, loss” on the other (e.g., wei-ji in Chinese, Risiko in German, risico in Dutch, rischio in Italian, risque in French, etc.). The word risk was created and became popular in the sixteenth century as a consequence of a change in people’s perceptions: from seeing themselves as totally manipulated by “good and evil spirits” towards the idea that every free individual has the chance, and runs the danger, of influencing his or her own future. (Probable origins of risk lie in the Greek word rhiza, meaning “root and/or cliff”, or the Arabic word rizq, meaning “what God and fate provide for your life”.) Similarly, in our everyday language we use proverbs such as “Nothing ventured, nothing gained” or “God helps the brave”, thereby promoting risk taking and risk acceptance. Risk is always bound up with uncertainty. As there is almost always some uncertainty about success or failure, or about the probability and magnitude of consequences, accepting risks always means accepting uncertainties (Schäfer 1978).

Safety research has largely reduced the meaning of risk to its dangerous aspects (Yates 1992b). Only recently have the positive consequences of risk re-emerged, with the increase in adventurous leisure activities (bungee jumping, motorcycling, adventure travel, etc.) and with a deeper understanding of how people are motivated to accept and take risks (Trimpop 1994). It is argued that we can understand and influence risk acceptance and risk-taking behaviour only if we take the positive aspects of risks into account as well as the negative ones.

Risk acceptance therefore refers to a person’s behaviour in a situation of uncertainty that results from the decision to engage (or not to engage) in that behaviour, after judging the estimated benefits to be greater (or lesser) than the costs under the given circumstances. In automatic or habitual behaviour, such as shifting gears when the engine noise rises, this process can be extremely quick and may not even reach the level of conscious decision making. At the other extreme, it may take a very long time and involve deliberate thinking and debate among several people, for example when planning a hazardous operation such as a space flight.

One important aspect of this definition is perception. Because perception and subsequent evaluation are based on a person’s individual experiences, values and personality, the behavioural acceptance of risks rests more on subjective risk than on objective risk. Furthermore, as long as a risk is not perceived or considered, a person cannot respond to it, no matter how grave the hazard. The cognitive process leading to the acceptance of risk is thus an information-processing and evaluation procedure that takes place within each person and that can be very fast.

Yates and Stone (1992) discussed a model that describes the identification of risks as a cognitive process of identification, storage and retrieval. Problems can arise at each stage of the process. For example, the identification of risks is rather unreliable, especially in complex situations or for dangers such as radiation, poison or other stimuli that are not easily perceptible. Furthermore, the identification, storage and retrieval mechanisms are subject to common psychological phenomena, such as primacy and recency effects, as well as habituation through familiarity. This means that people familiar with a certain risk, such as driving at high speed, will get used to it, accept it as a given “normal” situation and estimate the risk at a far lower value than people not familiar with the activity. A simple formalization of the process is a model with the following components:

Stimulus → Perception → Evaluation → Decision → Behaviour → Feedback loop

For example, a slowly moving vehicle in front of a driver may be the stimulus to pass. Checking the road for traffic is perception. Estimating the time needed to pass, given the acceleration capabilities of one’s car, is evaluation. The value of saving time leads to the decision, and the subsequent behaviour, to pass the car or not. The degree of success or failure is noticed immediately, and this feedback influences subsequent decisions about passing. The final decision whether to accept or reject a risk can be influenced at each step of this process. Costs and benefits are evaluated on the basis of individual-, context- and object-related factors that research has identified as important for risk acceptance.
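The Stimulus → Perception → Evaluation → Decision → Behaviour → Feedback loop can be made concrete as a small simulation of the overtaking example. The sketch below is illustrative only: the class `Driver`, the function `risk_cycle`, the linear cost formula, the assumed 10-second comfort margin and the familiarity update rule are all hypothetical choices, not part of the model discussed by Yates and Stone (1992) or any published parameterization. It does show two points made in the text: the decision rests on the perceived (subjective) gap rather than the real one, and successful outcomes feed back into a lower perceived cost through familiarity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Driver:
    """Hypothetical driver model; all parameters are illustrative assumptions."""

    value_per_second: float = 1.0   # subjective value of one second saved
    perception_bias: float = 1.0    # >1 means the driver overestimates free gaps
    familiarity: float = 0.0        # 0..0.9; discounts perceived danger (habituation)

    def perceive(self, gap_seconds: float) -> float:
        # Perception: subjective estimate of the gap in oncoming traffic.
        return gap_seconds * self.perception_bias

    def evaluate(self, perceived_gap: float, pass_time: float,
                 time_saved: float) -> tuple[float, float]:
        # Evaluation: weigh the benefit (time saved) against the perceived cost.
        benefit = self.value_per_second * time_saved
        margin = perceived_gap - pass_time
        # Perceived cost grows as the safety margin falls below an assumed
        # 10-second comfort level, and is discounted by familiarity.
        cost = max(0.0, 10.0 - margin) * (1.0 - self.familiarity)
        return benefit, cost

    def decide(self, benefit: float, cost: float) -> bool:
        # Decision: accept the risk only if benefits outweigh costs.
        return benefit > cost

    def learn(self, success: bool) -> None:
        # Feedback: success raises familiarity; failure sharply reduces it.
        if success:
            self.familiarity = min(0.9, self.familiarity + 0.1)
        else:
            self.familiarity = max(0.0, self.familiarity - 0.5)


def risk_cycle(driver: Driver, gap: float, pass_time: float,
               time_saved: float) -> bool:
    """Run one Stimulus -> ... -> Feedback cycle; return True if the driver passes."""
    perceived_gap = driver.perceive(gap)                                    # Perception
    benefit, cost = driver.evaluate(perceived_gap, pass_time, time_saved)  # Evaluation
    accept = driver.decide(benefit, cost)                                   # Decision
    if accept:
        # Behaviour: the outcome depends on the objective gap, not the perceived one.
        success = gap > pass_time
        driver.learn(success)                                               # Feedback loop
    return accept


if __name__ == "__main__":
    novice = Driver()
    habituated = Driver(familiarity=0.5)
    # Same stimulus for both: a 12 s gap, 6 s needed to pass, 3 s saved.
    print(risk_cycle(novice, 12.0, 6.0, 3.0))      # False: perceived cost 4.0 > benefit 3.0
    print(risk_cycle(habituated, 12.0, 6.0, 3.0))  # True: perceived cost 2.0 < benefit 3.0
```

Because the comparison is made on perceived values, a driver who overestimates gaps (`perception_bias > 1`) can accept a pass that objectively fails; the negative feedback then lowers familiarity and makes the next evaluation more cautious.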

Which Factors Influence Risk Acceptance?

Fischhoff et al. (1981) identified (1) individual perception, (2) time, (3) space and (4) context of behaviour as important dimensions of risk taking that should be considered in studying risks. Other authors have used different categories and labels for the factors and contexts influencing risk acceptance. Figure 2 structures this large number of influential factors into three categories: properties of the task or risk object, individual factors and context factors.

Figure 2. Factors influencing risk acceptance

In normative models of risk acceptance, the consequences of new technological risks (e.g., genetic research) were often described by quantitative summary measures (e.g., deaths, damage, injuries), and probability distributions over consequences were arrived at through estimation or simulation (Starr 1969). The results were compared with risks already “accepted” by the public, and thus offered a measure of the acceptability of the new risk. Sometimes data were presented as a risk index to compare different types of risk. Fischhoff et al. (1981) summarized the methods used most often as professional judgement by experts, statistical and historical information, and formal analyses such as fault tree analysis. The authors argued that properly conducted formal analyses have the highest “objectivity”, as they separate facts from beliefs and take many influences into account. Safety experts, however, argued that public and individual acceptance of risks may be based on biased value judgements and on opinions publicized by the media rather than on logical analyses.
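The normative procedure described above, summarizing a distribution of consequences by a single quantitative measure and comparing it with an already “accepted” risk, can be sketched in a few lines. This is a minimal illustration assuming expected fatalities per year as the summary measure; the function names `expected_fatalities` and `risk_index` and all probabilities and reference values are hypothetical, not data from Starr (1969) or Fischhoff et al. (1981).

```python
from __future__ import annotations


def expected_fatalities(distribution: list[tuple[float, float]]) -> float:
    """Expected value of a discrete consequence distribution.

    `distribution` is a list of (probability, fatalities) pairs whose
    probabilities must sum to 1.
    """
    total = sum(p for p, _ in distribution)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total}, not 1")
    return sum(p * c for p, c in distribution)


def risk_index(new_risk: float, accepted_risk: float) -> float:
    """Ratio of a new risk to a reference risk the public already accepts.

    In this normative approach, values below 1 would be read as
    "more acceptable than" the reference risk.
    """
    return new_risk / accepted_risk


# Hypothetical annual consequence distributions (illustrative numbers only).
frequent_small = [(0.9, 0.0), (0.1, 1.0)]          # 10% chance of one death
rare_catastrophe = [(0.999, 0.0), (0.001, 100.0)]  # 0.1% chance of 100 deaths
accepted_reference = 0.2  # hypothetical expected deaths/year of an accepted risk

for name, dist in [("frequent_small", frequent_small),
                   ("rare_catastrophe", rare_catastrophe)]:
    ev = expected_fatalities(dist)
    print(name, round(ev, 3), round(risk_index(ev, accepted_reference), 3))
# prints:
# frequent_small 0.1 0.5
# rare_catastrophe 0.1 0.5
```

Both hypothetical distributions receive the same expected value and therefore the same index, even though the second one is a rare catastrophe. A single summary measure of this kind cannot express dimensions such as dread or catastrophic potential, which is one reason why lay judgements diverge from such indices.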

It has been suggested that the general public is often misinformed by the media and by political groups that produce statistics in favour of their arguments. On this view, professional judgements based on expert knowledge, rather than individual biases, should be the sole basis for accepting risks, and the general public should be excluded from such important decisions. This position has drawn substantial criticism, as it raises questions of both democratic values (people should have a chance to decide issues that may have catastrophic consequences for their health and safety) and social values (does the technology or risky decision benefit its recipients more than those who bear the costs?). Fischhoff, Furby and Gregory (1987) suggested using either the expressed preferences (interviews, questionnaires) or the revealed preferences (observations) of the “relevant” public to determine the acceptability of risks. Jungermann and Rohrmann have pointed out the difficulty of identifying the “relevant public” for technologies such as nuclear power plants or genetic manipulation, as several nations or even the world population may suffer or benefit from the consequences.

Problems with relying solely on expert judgements have also been discussed. Expert judgements based on normative models approximate statistical estimates more closely than those of the public do (Otway and von Winterfeldt 1982). However, when asked specifically to judge the probability or frequency of death or injury related to a new technology, the public’s views are much more similar to the expert judgements and to the risk indices. Research has also shown that although people do not change their first quick estimate when merely provided with data, they do change it when realistic benefits or dangers are raised and discussed by experts. Furthermore, Haight (1986) pointed out that because expert judgements are subjective and experts often disagree about risk estimates, the public is sometimes more accurate in its estimate of riskiness, as judged after an accident has occurred (e.g., the catastrophe at Chernobyl). It is therefore concluded that the public uses dimensions of risk other than the statistical number of deaths or injuries when making judgements.

Another aspect that plays a role in accepting risks is whether the perceived effects of taking risks are judged positive, such as an adrenaline high, a “flow” experience or social praise as a hero. Machlis and Rosa (1990) discussed the concept of desired risk, in contrast to tolerated or dreaded risk, and concluded that in many situations increased risks function as an incentive rather than as a deterrent. They found that people may show no aversion to risk at all in spite of media coverage stressing the dangers. For example, amusement park operators reported a ride becoming more popular when it reopened after a fatality. Also, after a Norwegian ferry sank and the passengers were set afloat on icebergs for 36 hours, the operating company experienced the greatest demand it had ever had for passage on its vessels. The researchers concluded that the concept of desired risk changes the perception and acceptance of risks, and calls for different conceptual models to explain risk-taking behaviour. These assumptions were supported by research showing that for police officers on patrol the physical danger of being attacked or killed was, ironically, perceived as job enrichment, while for police officers engaged in administrative duties the same risk was perceived as dreadful. Vlek and Stallen (1980) suggested including more personal and intrinsic reward aspects in cost/benefit analyses to explain the processes of risk assessment and risk acceptance more completely.

Individual factors influencing risk acceptance

Jungermann and Slovic (1987) reported data showing individual differences between students, technicians and environmental activists in the perception, evaluation and acceptance of “objectively” identical risks. Age, sex and level of education have been found to influence risk acceptance, with young, poorly educated males taking the highest risks (e.g., wars, traffic accidents). Zuckerman (1979) provided a number of examples of individual differences in risk acceptance and stated that they are most likely influenced by personality factors such as sensation seeking, extroversion, overconfidence or experience seeking. Costs and benefits of risks also contribute to individual evaluation and decision processes. In judging the riskiness of a situation or action, different people reach a wide variety of verdicts. This variety can show up in calibration, for example through value-induced biases that make the preferred decision appear less risky, so that overconfident people choose a different anchor value. Personality aspects, however, account for only 10 to 20% of the decision to accept or reject a risk. Other factors have to be identified to explain the remaining 80 to 90%.

Slovic, Fischhoff and Lichtenstein (1980) concluded from factor-analytical studies and interviews that non-experts assess risks in a qualitatively different way, by including the dimensions of controllability, voluntariness, dreadfulness and whether the risk is already known. Voluntariness and perceived controllability were discussed in great detail by Fischhoff et al. (1981). It is estimated that voluntarily chosen risks (motorcycling, mountain climbing) have a level of acceptance about 1,000 times as high as that of involuntarily imposed, societal risks. Supporting the difference between societal and individual risks, a study by von Winterfeldt, John and Borcherding (1981) also pointed to the importance of voluntariness and controllability. These authors reported lower perceived riskiness for motorcycling, stunt work and auto racing than for nuclear power and air traffic accidents. Renn (1981) reported a study on voluntariness and perceived negative effects. One group of subjects was allowed to choose between three types of pills, while the other group was simply given them. Although all the pills were identical, the voluntary group reported significantly fewer “side-effects” than the group that was administered the pills.

When risks are individually perceived as having dreadful consequences for many people, or even catastrophic consequences with a near-zero probability of occurrence, they are often judged unacceptable in spite of the knowledge that there have been few or no fatal accidents. This holds even more true for risks previously unknown to the person judging. Research also shows that people use their personal knowledge of and experience with a particular risk as the key anchor of judgement for accepting well-defined risks, while previously unknown risks are judged more by levels of dread and severity. People are more likely to underestimate even high risks if they have been exposed to them for an extended period of time, such as people living below a power dam or in earthquake zones, or having jobs with a “habitually” high risk, such as underground mining, logging or construction (Zimolong 1985). Furthermore, people seem to judge human-made risks very differently from natural risks, accepting natural ones more readily than self-constructed, human-made ones. The experts’ approach of locating the risks of new technologies between the low-end and high-end “objective risks” of already accepted or natural risks does not seem to be perceived as adequate by the public. It can be argued that already “accepted risks” are merely tolerated, that new risks add to the existing ones and that new dangers have not yet been experienced and coped with. Expert statements are therefore essentially viewed as promises. Finally, it is very hard to determine what has been truly accepted, as many people are seemingly unaware of many of the risks surrounding them.

Even if people are aware of the risks surrounding them, the problem of behavioural adaptation occurs. This process is well described in risk compensation and risk homeostasis theory (Wilde 1986), which states that people adjust their risk acceptance decision and their risk-taking behaviour towards their target level of perceived risk. That means that people will behave more cautiously and accept fewer risks when they feel threatened, and, conversely, they will behave more daringly and accept higher levels of risk when they feel safe and secure. Thus, it is very difficult for safety experts to design safety equipment, such as seat-belts, ski boots, helmets, wide roads, fully enclosed machinery and so on, without the user’s offsetting the possible safety benefit by some personal benefit, such as increased speed, comfort, decreased attention or other more “risky” behaviour.
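The homeostatic mechanism described by Wilde is essentially a feedback controller: behaviour is adjusted until perceived risk matches a target level. The sketch below illustrates this under strong simplifying assumptions that are not part of Wilde’s theory as stated: perceived risk is taken to be proportional to driving speed and reduced by a protection factor (standing in for equipment such as seat-belts), and speed is adjusted by a simple proportional rule. The names `perceived_risk` and `adapt_speed` and all constants are hypothetical, and the sketch assumes full compensation, the strongest form of the claim.

```python
def perceived_risk(speed: float, protection: float) -> float:
    """Hypothetical perceived risk: linear in speed, reduced by protection in [0, 1)."""
    return 0.01 * speed * (1.0 - protection)


def adapt_speed(speed: float, protection: float, target_risk: float,
                gain: float = 50.0, steps: int = 200) -> float:
    """Adjust speed until perceived risk settles at the target level.

    Each step moves speed in proportion to the gap between target and
    perceived risk: feeling safer than the target -> speed up;
    feeling more threatened than the target -> slow down.
    """
    for _ in range(steps):
        error = target_risk - perceived_risk(speed, protection)
        speed += gain * error
    return speed


if __name__ == "__main__":
    target = 0.5
    without_belt = adapt_speed(40.0, protection=0.0, target_risk=target)
    with_belt = adapt_speed(without_belt, protection=0.2, target_risk=target)
    print(round(without_belt, 2), round(perceived_risk(without_belt, 0.0), 3))  # 50.0 0.5
    print(round(with_belt, 2), round(perceived_risk(with_belt, 0.2), 3))        # 62.5 0.5
```

In this toy model the added protection raises the equilibrium speed from 50 to 62.5 while perceived risk returns to the same target of 0.5: the safety benefit is taken out as a performance benefit. The proportional update only converges if `gain * 0.01 * (1 - protection)` lies between 0 and 2, which holds for the values used here.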

Increasing the value of safe behaviour may change the accepted level of risk by increasing the motivation to choose the less dangerous alternative. This approach aims at changing individual values, norms and beliefs to motivate different risk acceptance and risk-taking behaviour. Factors that increase or decrease the likelihood of risk acceptance include whether the technology provides a benefit corresponding to present needs, increases the standard of living, creates new jobs, facilitates economic growth, enhances national prestige and independence, requires strict security measures, increases the power of big business, or leads to centralization of political and economic systems (Otway and von Winterfeldt 1982). Similar influences of situational frames on risk evaluations were reported by Kahneman and Tversky (1979 and 1984). When the outcome of a surgical or radiation therapy was phrased as a 68% probability of survival, 44% of the subjects chose it, compared with only 18% who chose the same therapy when its outcome was phrased as a 32% probability of death, which is mathematically equivalent. Subjects often choose a personal anchor value (Lopes and Ekberg 1980) to judge the acceptability of risks, especially when dealing with cumulative risks over time.

The influence of “emotional frames” (affective contexts with induced emotions) on risk assessment and acceptance was shown by Johnson and Tversky (1983). In their frames, positive and negative emotions were induced through descriptions of events such as personal success or the death of a young man. They found that subjects with induced negative feelings judged the risks of accidental and violent death as significantly higher than subjects in the positive emotional group, regardless of other context variables. Other factors influencing individual risk acceptance include group values, individual beliefs, societal norms, cultural values, the economic and political situation, and recent experiences, such as witnessing an accident. Dake (1992) argued that, apart from its physical component, risk is a concept very much dependent on the respective system of beliefs and myths within a cultural frame. Yates and Stone (1992) listed the individual biases (figure 3) that have been found to influence the judgement and acceptance of risks.

Figure 3. Individual biases that influence risk evaluation and risk acceptance

Cultural factors influencing risk acceptance

Pidgeon (1991) defined culture as the collection of beliefs, norms, attitudes, roles and practices shared within a given social group or population. Differences between cultures lead to different levels of risk perception and acceptance, as a comparison of work safety standards and accident rates in industrialized and developing countries shows. In spite of these differences, one of the most consistent findings across and within cultures is that the same concepts usually emerge (dreadfulness and unknown risks, voluntariness and controllability), but they receive different priorities (Kasperson 1986). Whether these priorities are solely culture dependent remains a matter of debate. For example, in estimating the hazards of toxic and radioactive waste disposal, British people focus more on transportation risks, Hungarians more on operating risks and Americans more on environmental risks. These differences are attributed to culture, but they may just as well be the consequence of situational factors: perceived population density in Britain, operating reliability in Hungary and environmental concerns in the United States. In another study, Kleinhesselink and Rosa (1991) found that Japanese perceive atomic power as a dreadful but not unknown risk, while for Americans atomic power is a predominantly unknown source of risk.

The authors attributed these differences to different exposure, such as to the atomic bombs dropped on Hiroshima and Nagasaki in 1945. However, similar differences were reported between Hispanic and White American residents of the San Francisco area. Local cultural factors, knowledge and individual differences may therefore play as important a role in risk perception as general cultural biases do (Rohrmann 1992a).

These and similar discrepancies in the conclusions and interpretations derived from identical facts led Johnson (1991) to warn against attributing differences in risk perception and risk acceptance causally to culture. He was concerned about the widespread differences in the definition of culture, which make it an almost all-encompassing label. Moreover, differences in the opinions and behaviours of subpopulations or individual business organizations within a country add further problems to any clear-cut measurement of culture or of its effects on risk perception and risk acceptance. Also, the samples studied are usually small and not representative of the cultures as a whole, and causes and effects are often not separated properly (Rohrmann 1995). Other cultural aspects examined include world views, such as individualism versus egalitarianism versus belief in hierarchies, and social, political, religious or economic factors.

Wilde (1994) reported, for example, that the number of accidents varies with a country’s economic situation: in times of recession the number of traffic accidents drops, while in times of growth it rises. Wilde attributed these findings to a number of factors; for example, in times of recession more people are unemployed and gasoline and spare parts are more costly, so people take more care to avoid accidents. On the other hand, Fischhoff et al. (1981) argued that in times of recession people are more willing to accept dangers and uncomfortable working conditions in order to keep a job or to get one.

The role of language and its use in the mass media was discussed by Dake (1991), who cited a number of examples in which the same “facts” were worded so as to support the political goals of specific groups, organizations or governments. For example, are worker complaints about suspected occupational hazards “legitimate concerns” or “narcissistic phobias”? Is hazard information available to the courts in personal injury cases “sound evidence” or “scientific flotsam”? Do we face ecological “nightmares” or simply “incidences” or “challenges”? Risk acceptance thus depends on the perceived situation and context of the risk to be judged, as well as on the perceived situation and context of the judges themselves (von Winterfeldt and Edwards 1984). As these examples show, risk perception and acceptance strongly depend on the way the basic “facts” are presented. The credibility of the source and the amount and type of media coverage (in short, risk communication) determine risk acceptance more often than the results of formal analyses or expert judgements would suggest. Risk communication is thus a context factor that can be used deliberately to change risk acceptance.

Changing Risk Acceptance

Involving those who are expected to accept a change in the planning, decision and control process, and thereby committing them to support the decision, has proven very successful in achieving a high degree of acceptance. Based on successful project reports, figure 4 lists six steps that should be considered when dealing with risks.

Figure 4. Six steps for choosing, deciding upon and accepting optimal risks

Determining “optimal risks”

In steps 1 and 2, major problems occur in identifying the desirability and the “objective risk” of the objective, while in step 3 it seems to be difficult to eliminate the worst options. For individuals and organizations alike, large-scale societal, catastrophic or lethal dangers seem to be the most dreaded and least acceptable options. Perrow (1984) argued that most societal risks, such as DNA research, power plants or the nuclear arms race, possess many closely coupled subsystems, meaning that if an error occurs in one subsystem, it can trigger many other errors. These consecutive errors may remain undetected because of the nature of the initial error, such as a nonfunctioning warning sign. The risk of accidents due to interactive failures increases in complex technical systems. Perrow (1984) therefore suggested that it would be advisable to keep societal risks loosely coupled (i.e., independently controllable), to allow for independent assessment of and protection against risks, and to consider very carefully whether technologies with the potential for catastrophic consequences are necessary.

Communicating “optimal choices”

Steps 3 to 6 deal with accurate communication of risks, which is a necessary tool for developing adequate risk perception, risk estimation and optimal risk-taking behaviour. Risk communication is aimed at different audiences, such as residents, employees and patients. It uses different channels, such as newspapers, radio, television and verbal communication, in different situations or “arenas”, such as training sessions, public hearings, articles, campaigns and personal communications. Although there has been little research on the effectiveness of mass media communication in the area of health and safety, most authors agree that the quality of the communication largely determines the likelihood of attitudinal or behavioural changes in the risk acceptance of the targeted audience. According to Rohrmann (1992a), risk communication also serves different purposes, some of which are listed in figure 5.

Figure 5. Purposes of risk communication

Risk communication is a complex issue, with its effectiveness seldom proven with scientific exactness. Rohrmann (1992a) listed necessary factors for evaluating risk communication and gave some advice about communicating effectively. Wilde (1993) separated the source, the message, the channel and the recipient and gave suggestions for each aspect of communication. He cited data that show, for example, that the likelihood of effective safety and health communication depends on issues such as those listed in figure 6.

Figure 6. Factors influencing the effectiveness of risk communication

Establishing a risk optimization culture

Pidgeon (1991) defined safety culture as a constructed system of meanings through which a given people or group understands the hazards of the world. This system specifies what is important and legitimate, and explains relationships to matters of life and death, work and danger. A safety culture is created and recreated as members of it repeatedly behave in ways that seem to be natural, obvious and unquestionable and as such will construct a particular version of risk, danger and safety. Such versions of the perils of the world also will embody explanatory schemata to describe the causation of accidents. Within an organization, such as a company or a country, the tacit and explicit rules and norms governing safety are at the heart of a safety culture. Major components are rules for handling hazards, attitudes toward safety, and reflexivity on safety practice.

Industrial organizations that already live an elaborate safety culture emphasize the importance of common visions, goals, standards and behaviours in risk taking and risk acceptance. As uncertainties are unavoidable within the context of work, an optimal balance between taking chances and controlling hazards has to be struck. Vlek and Cvetkovich (1989) stated:

Adequate risk management is a matter of organizing and maintaining a sufficient degree of (dynamic) control over a technological activity, rather than continually, or just once, measuring accident probabilities and distributing the message that these are, and will be, “negligibly low”. Thus more often than not, “acceptable risk” means “sufficient control”.

Summary

When people perceive themselves to possess sufficient control over possible hazards, they are willing to accept the dangers to gain the benefits. Sufficient control, however, has to be based on sound information, assessment, perception, evaluation and finally an optimal decision in favour of or against the “risky objective”.