Psychosocial Factors, Stress and Health

In the language of engineering, stress is “a force which deforms bodies”. In biology and medicine, the term usually refers to a process in the body, to the body’s general plan for adapting to all the influences, changes, demands and strains to which it is exposed. This plan swings into action, for example, when a person is assaulted on the street, but also when someone is exposed to toxic substances or to extreme heat or cold. It is not just physical exposures which activate this plan, however; mental and social ones do so as well: for instance, when we are insulted by our supervisor, reminded of an unpleasant experience, expected to achieve something of which we do not believe we are capable, or when, with or without cause, we worry about our job or marriage.

There is something common to all these cases in the way the body attempts to adapt. This common denominator—a kind of “revving up” or “stepping on the gas”—is stress. Stress is, then, a stereotype in the body’s responses to influences, demands or strains. Some level of stress is always to be found in the body, just as, to draw a rough parallel, a country maintains a certain state of military preparedness, even in peacetime. Occasionally this preparedness is intensified, sometimes with good cause and at other times without.

In this way the stress level affects the rate at which processes of wear and tear on the body take place. The more “gas” given, the higher the rate at which the body’s engine is driven, and hence the more quickly the “fuel” is used up and the “engine” wears out. Another metaphor also applies: if you burn a candle with a high flame, at both ends, it will give off brighter light but will also burn down more quickly. A certain amount of fuel is necessary otherwise the engine will stand still, the candle will go out; that is, the organism would be dead. Thus, the problem is not that the body has a stress response, but that the degree of stress—the rate of wear and tear—to which it is subject may be too great. This stress response varies from one minute to another even in one individual, the variation depending in part on the nature and state of the body and in part on the external influences and demands—the stressors—to which the body is exposed. (A stressor is thus something that produces stress.)

Sometimes it is difficult to determine whether stress in a particular situation is good or bad. Take, for instance, the exhausted athlete on the winner’s stand, or the newly appointed but stress-racked executive. Both have achieved their goals. In terms of pure accomplishment, one would have to say that their results were well worth the effort. In psychological terms, however, such a conclusion is more doubtful. A good deal of torment may have been necessary to get so far, involving long years of training or never-ending overtime, usually at the expense of family life. From the medical viewpoint such achievers may be considered to have burnt their candles at both ends. The result could be physiological; the athlete may rupture a muscle or two and the executive develop high blood pressure or have a heart attack.

Stress in relation to work

An example may clarify how stress reactions can arise at work and what they might lead to in terms of health and quality of life. Let us imagine the following situation for a hypothetical male worker. Based on economic and technical considerations, management has decided to break up a production process into very simple and primitive elements which are to be performed on an assembly line. Through this decision, a social structure is created and a process set into motion which can constitute the starting point in a stress- and disease-producing sequence of events. The new situation becomes a psychosocial stimulus for the worker when he first perceives it. These perceptions may be further influenced by the fact that the worker may have previously received extensive training, and was consequently expecting a work assignment which required higher qualifications, not reduced skill levels. In addition, his past experience of work on an assembly line was strongly negative (that is, earlier environmental experiences will influence the reaction to the new situation). Furthermore, the worker’s hereditary factors make him more prone to react to stressors with an increase in blood pressure. Because he is more irritable, perhaps his wife criticizes him for accepting his new assignment and bringing his problems home. As a result of all these factors, the worker reacts to the feelings of distress, perhaps with an increase in alcohol consumption or by experiencing undesirable physiological reactions, such as the elevation in blood pressure. The troubles at work and in the family continue, and his reactions, originally of a transient type, become sustained. Eventually, he may enter a chronic anxiety state or develop alcoholism or chronic hypertensive disease. These problems, in turn, increase his difficulties at work and with his family, and may also increase his physiological vulnerability. A vicious cycle may set in which may end in a stroke, a workplace accident or even suicide. This example illustrates the environmental programming involved in the way a worker reacts behaviourally, physiologically and socially, leading to increased vulnerability, impaired health and even death.

Psychosocial conditions in present working life

According to an important International Labour Organization (ILO) (1975) resolution, work should not only respect workers’ lives and health and leave them free time for rest and leisure, but also allow them to serve society and achieve self-fulfilment by developing their personal capabilities. These principles were also set down as early as 1963, in a report from the London Tavistock Institute (Document No. T813) which provided the following general guidelines for job design:

- The job should be reasonably demanding in terms other than sheer endurance and provide at least a minimum of variety.

- The worker should be able to learn on the job and go on learning.

- The job should comprise some area of decision-making that the individual can call his or her own.

- There should be some degree of social support and recognition in the workplace.

- The worker should be able to relate what he or she does or produces to social life.

- The worker should feel that the job leads to some sort of desirable future.

The Organization for Economic Cooperation and Development (OECD), however, draws a less hopeful picture of the reality of working life, pointing out that:

- Work has been accepted as a duty and a necessity for most adults.

- Work and workplaces have been designed almost exclusively with reference to criteria of efficiency and cost.

- Technological and capital resources have been accepted as the imperative determinants of the optimum nature of jobs and work systems.

- Changes have been motivated largely by aspirations to unlimited economic growth.

- The judgement of the optimum designs of jobs and choice of work objectives has resided almost wholly with managers and technologists, with only a slight intrusion from collective bargaining and protective legislation.

- Other societal institutions have taken on forms that serve to sustain this type of work system.

In the short run, the developments described in this OECD list have brought higher productivity at lower cost, as well as an increase in wealth. In the long term, however, such developments often bring greater worker dissatisfaction, alienation and possibly ill health which, for society in general, may in turn affect the economic sphere, although the economic costs of these effects have only recently been taken into consideration (Cooper, Luikkonen and Cartwright 1996; Levi and Lunde-Jensen 1996).

We also tend to forget that, biologically, humankind has not changed much during the last 100,000 years, whereas the environment, and in particular the work environment, has changed dramatically, especially during the past century and recent decades. This change has been partly for the better; however, some of these “improvements” have been accompanied by unexpected side effects. For example, data collected by the National Swedish Central Bureau of Statistics during the 1980s showed that:

- 11% of all Swedish employees are continuously exposed to deafening noise.

- 15% have work which makes them very dirty (oil, paint, etc.).

- 17% have inconvenient working hours, i.e., not only daytime work but also early or late night work, shift work or other irregular working hours.

- 9% have gross working hours exceeding 11 per day (this concept includes hours of work, breaks, travelling time, overtime, etc.; in other words, that part of the day which is set aside for work).

- 11% have work that is considered both “hectic” and “monotonous”.

- 34% consider their work “mentally exacting”.

- 40% consider themselves “without influence on the arrangement of time for breaks”.

- 45% consider themselves without “opportunities to learn new things” at their work.

- 26% have an instrumental attitude to their work. They consider “their work to yield nothing except the pay—i.e. no feeling of personal satisfaction”. Work is regarded purely as an instrument for acquiring an income.

In its major study of conditions of work in the 12 member States of the European Union at that time (1991/92), the European Foundation (Paoli 1992) found that 30% of the workforce regarded their work as a risk to their health; 23 million had night work for more than 25% of total hours worked; one in three reported highly repetitive, monotonous work; one in five men and one in six women worked under “continuous time pressure”; and one in four workers carried heavy loads or worked in a twisted or painful position for more than 50% of their working time.

Main psychosocial stressors at work

As already indicated, stress is caused by a bad “person-environment fit”, objectively, subjectively, or both, at work or elsewhere and in an interaction with genetic factors. It is like a badly fitting shoe: environmental demands are not matched to individual ability, or environmental opportunities do not measure up to individual needs and expectations. For example, the individual is able to perform a certain amount of work, but much more is required, or on the other hand no work at all is offered. Another example would be that the worker needs to be part of a social network, to experience a sense of belonging, a sense that life has meaning, but there may be no opportunity to meet these needs in the existing environment and the “fit” becomes bad.

Any fit will depend on the “shoe” as well as on the “foot”, on situational factors as well as on individual and group characteristics. The most important situational factors that give rise to “misfit” can be categorized as follows:

Quantitative overload. Too much to do, time pressure and repetitive work-flow. This is to a great extent the typical feature of mass production technology and routinized office work.

Qualitative underload. Too narrow and one-sided job content, lack of stimulus variation, no demands on creativity or problem-solving, or low opportunities for social interaction. These jobs seem to become more common with suboptimally designed automation and increased use of computers in both offices and manufacturing, even though there may be instances of the opposite.

Role conflicts. Everybody occupies several roles concurrently. We are the superiors of some people and the subordinates of others. We are children, parents, marital partners, friends and members of clubs or trade unions. Conflicts easily arise among our various roles and are often stress evoking, as when, for instance, demands at work clash with those from a sick parent or child or when a supervisor is divided between loyalty to superiors and to fellow workers and subordinates.

Lack of control over one’s own situation. When someone else decides what to do, when and how; for example, in relation to work pace and working methods, when the worker has no influence, no control, no say. Or when there is uncertainty or lack of any obvious structure in the work situation.

Lack of social support at home and from your boss or fellow workers.

Physical stressors. Such factors can influence the worker both physically and chemically, for example, direct effects on the brain of organic solvents. Secondary psychosocial effects can also originate from the distress caused by, say, odours, glare, noise, extremes of air temperature or humidity and so on. These effects can also be due to the worker’s awareness, suspicion or fear that he is exposed to life-threatening chemical hazards or to accident risks.

Finally, real life conditions at work and outside work usually imply a combination of many exposures. These might become superimposed on each other in an additive or synergistic way. The straw which breaks the camel’s back may therefore be a rather trivial environmental factor, but one that comes on top of a very considerable, pre-existing environmental load.

Some of the specific stressors in industry merit special discussion, namely those characteristic of:

- mass production technology

- highly automated work processes

- shift work

Mass production technology. Over the past century work has become fragmented in many workplaces, changing from a well defined job activity with a distinct and recognized end-product, into numerous narrow and highly specified subunits which bear little apparent relation to the end-product. The growing size of many factory units has tended to result in a long chain of command between management and the individual workers, accentuating remoteness between the two groups. The worker also becomes remote from the consumer, since rapid elaborations for marketing, distribution and selling interpose many steps between the producer and the consumer.

Mass production, thus, normally involves not just a pronounced fragmentation of the work process but also a decrease in worker control of the process. This is partly because work organization, work content and work pace are determined by the machine system. All these factors usually result in monotony, social isolation, lack of freedom and time pressure, with possible long-term effects on health and well-being.

Mass production, moreover, favours the introduction of piece rates. In this regard, it can be assumed that the desire—or necessity—to earn more can, for a time, induce the individual to work harder than is good for the organism and to ignore mental and physical “warnings”, such as a feeling of tiredness, nervous problems and functional disturbances in various organs or organ systems. Another possible effect is that the employee, bent on raising output and earnings, infringes safety regulations thereby increasing the risk of occupational disease and of accidents to oneself and others (e.g., lorry drivers on piece rates).

Highly automated work processes. In automated work the repetitive, manual elements are taken over by machines, and the workers are left with mainly supervisory, monitoring and controlling functions. This kind of work is generally rather skilled, not regulated in detail and the worker is free to move about. Accordingly, the introduction of automation eliminates many of the disadvantages of the mass-production technology. However, this holds true mainly for those stages of automation where the operator is indeed assisted by the computer and maintains some control over its services. If, however, operator skills and knowledge are gradually taken over by the computer—a likely development if decision making is left to economists and technologists—a new impoverishment of work may result, with a re-introduction of monotony, social isolation and lack of control.

Monitoring a process usually calls for sustained attention and readiness to act throughout a monotonous term of duty, a requirement that does not match the brain’s need for a reasonably varied flow of stimuli in order to maintain optimal alertness. It is well documented that the ability to detect critical signals declines rapidly even during the first half-hour in a monotonous environment. This may add to the strain inherent in the awareness that temporary inattention and even a slight error could have extensive economic and other disastrous consequences.

Other critical aspects of process control are associated with very special demands on mental skill. The operators are concerned with symbols, abstract signals on instrument arrays and are not in touch with the actual product of their work.

Shift work. In the case of shift work, rhythmical biological changes do not necessarily coincide with corresponding environmental demands. Here, the organism may “step on the gas” and activation occurs at a time when the worker needs to sleep (for example, during the day after a night shift), and deactivation correspondingly occurs at night, when the worker may need to work and be alert.

A further complication arises because workers usually live in a social environment which is not designed for the needs of shift workers. Last but not least, shift workers must often adapt to regular or irregular changes in environmental demands, as in the case of rotating shifts.

In summary, the psychosocial demands of the modern workplace are often at variance with the workers’ needs and capabilities, leading to stress and ill health. This discussion provides only a snapshot of psychosocial stressors at work, and how these unhealthy conditions can arise in today’s workplace. In the sections that follow, psychosocial stressors are analysed in greater detail with respect to their sources in modern work systems and technologies, and with respect to their assessment and control.

Psychosocial and Organizational Factors

In 1966, long before job stress and psychosocial factors became household expressions, a special report entitled “Protecting the Health of Eighty Million Workers—A National Goal for Occupational Health” was issued to the Surgeon General of the United States (US Department of Health and Human Services 1966). The report was prepared under the auspices of the National Advisory Environmental Health Committee to provide direction to Federal programmes in occupational health. Among its many observations, the report noted that psychological stress was increasingly apparent in the workplace, presenting “... new and subtle threats to mental health,” and possible risk of somatic disorders such as cardiovascular disease. Technological change and the increasing psychological demands of the workplace were listed as contributing factors. The report concluded with a list of two dozen “urgent problems” requiring priority attention, including occupational mental health and contributing workplace factors.

Thirty years later, this report has proven remarkably prophetic. Job stress has become a leading source of worker disability in North America and Europe. In 1990, 13% of all worker disability cases handled by Northwestern National Life, a major US underwriter of worker compensation claims, were due to disorders with a suspected link to job stress (Northwestern National Life 1991). A 1985 study by the National Council on Compensation Insurance found that one type of claim, involving psychological disability due to “gradual mental stress” at work, had grown to 11% of all occupational disease claims* (National Council on Compensation Insurance 1985).

* In the United States, occupational disease claims are distinct from injury claims, which tend to greatly outnumber disease claims.

These developments are understandable considering the demands of modern work. A 1991 survey of European Union members found that “The proportion of workers who complain from organizational constraints, which are in particular conducive to stress, is higher than the proportion of workers complaining from physical constraints” (European Foundation for the Improvement of Living and Working Conditions 1992). Similarly, a more recent study of the Dutch working population found that one-half of the sample reported a high work pace, three-fourths of the sample reported poor possibilities of promotion, and one-third reported a poor fit between their education and their jobs (Houtman and Kompier 1995). On the American side, data on the prevalence of job stress risk factors in the workplace are less available. However, in a recent survey of several thousand US workers, over 40% of the workers reported excessive workloads and said they were “used up” and “emotionally drained” at the end of the day (Galinsky, Bond and Friedman 1993).

The impact of this problem in terms of lost productivity, disease and reduced quality of life is undoubtedly formidable, although difficult to estimate reliably. However, recent analyses of data from over 28,000 workers by the St. Paul Fire and Marine Insurance Company are of interest and relevance. This study found that time pressure and other emotional and personal problems at work were more strongly associated with reported health problems than any other personal life stressor; more so than even financial or family problems, or death of a loved one (St. Paul Fire and Marine Insurance Company 1992).

Looking to the future, rapid changes in the fabric of work and the workforce pose unknown, and possibly increased, risks of job stress. For example, in many countries the workforce is rapidly ageing at a time when job security is decreasing. In the United States, corporate downsizing continues almost unabated into the last half of the decade at a rate of over 30,000 jobs lost per month (Roy 1995). In the above-cited study by Galinsky, Bond and Friedman (1993) nearly one-fifth of the workers thought it likely they would lose their jobs in the forthcoming year. At the same time the number of contingent workers, who are generally without health benefits and other safety nets, continues to grow and now comprises about 5% of the workforce (USBLS 1995).

The aim of this chapter is to provide an overview of current knowledge on conditions which lead to stress at work and associated health and safety problems. These conditions, which are commonly referred to as psychosocial factors, include aspects of the job and work environment such as organizational climate or culture, work roles, interpersonal relationships at work, and the design and content of tasks (e.g., variety, meaning, scope, repetitiveness, etc.). The concept of psychosocial factors extends also to the extra-organizational environment (e.g., domestic demands) and aspects of the individual (e.g., personality and attitudes) which may influence the development of stress at work. Frequently, the expressions work organization or organizational factors are used interchangeably with psychosocial factors in reference to working conditions which may lead to stress.

This section of the Encyclopaedia begins with descriptions of several models of job stress which are of current scientific interest, including the job demands-job control model, the person-environment (P-E) fit model, and other theoretical approaches to stress at work. Like all contemporary notions of job stress, these models have a common theme: job stress is conceptualized in terms of the relationship between the job and the person. According to this view, job stress and the potential for ill health develop when job demands are at variance with the needs, expectations or capacities of the worker. This core feature is implicit in figure 1, which shows the basic elements of a stress model favoured by researchers at the National Institute for Occupational Safety and Health (NIOSH). In this model, work-related psychosocial factors (termed stressors) result in psychological, behavioural and physical reactions which may ultimately influence health. However, as illustrated in figure 1, individual and contextual factors (termed stress moderators) intervene to influence the effects of job stressors on health and well-being. (See Hurrell and Murphy 1992 for a more elaborate description of the NIOSH stress model.)

Figure 1. The Job Stress Model of the National Institute for Occupational Safety and Health (NIOSH)

But putting aside this conceptual similarity, there are also non-trivial theoretical differences among these models. For example, unlike the NIOSH and P-E fit models of job stress, which acknowledge a host of potential psychosocial risk factors in the workplace, the job demands-job control model focuses most intensely on a more limited range of psychosocial dimensions pertaining to psychological workload and opportunity for workers to exercise control (termed decision latitude) over aspects of their jobs. Further, both the demand-control and the NIOSH models can be distinguished from the P-E fit models in terms of the focus placed on the individual. In the P-E fit model, emphasis is placed on individuals’ perceptions of the balance between features of the job and individual attributes. This focus on perceptions provides a bridge between P-E fit theory and another variant of stress theory attributed to Lazarus (1966), in which individual differences in appraisal of psychosocial stressors and in coping strategies become critically important in determining stress outcomes. In contrast, while not denying the importance of individual differences, the NIOSH stress model gives primacy to environmental factors in determining stress outcomes as suggested by the geometry of the model illustrated in figure 1. In essence, the model suggests that most stressors will be threatening to most of the people most of the time, regardless of circumstances. A similar emphasis can be seen in other models of stress and job stress (e.g., Cooper and Marshall 1976; Kagan and Levi 1971; Matteson and Ivancevich 1987).

These differences have important implications for both guiding job stress research and intervention strategies at the workplace. The NIOSH model, for example, argues for primary prevention of job stress via attention first to psychosocial stressors in the workplace and, in this regard, is consistent with a public health model of prevention. Although a public health approach recognizes the importance of host factors or resistance in the aetiology of disease, the first line of defence in this approach is to eradicate or reduce exposure to environmental pathogens.

The NIOSH stress model illustrated in figure 1 provides an organizing framework for the remainder of this section. Following the discussions of job stress models are short articles containing summaries of current knowledge on workplace psychosocial stressors and on stress moderators. These subsections address conditions which have received wide attention in the literature as stressors and stress moderators, as well as topics of emerging interest such as organizational climate and career stage. Prepared by leading authorities in the field, each summary provides a definition and brief overview of relevant literature on the topic. Further, to maximize the utility of these summaries, each contributor has been asked to include information on measurement or assessment methods and on prevention practices.

The final subsection of the chapter reviews current knowledge on a wide range of potential health risks of job stress and underlying mechanisms for these effects. Discussion ranges from traditional concerns, such as psychological and cardiovascular disorders, to emerging topics such as depressed immune function and musculoskeletal disease.

In summary, recent years have witnessed unprecedented changes in the design and demands of work, and the emergence of job stress as a major concern in occupational health. This section of the Encyclopaedia tries to promote understanding of psychosocial risks posed by the evolving work environment, and thus better protect the well-being of workers.

Genetic Determinants of Toxic Response

It has long been recognized that each person’s response to environmental chemicals is different. The recent explosion in molecular biology and genetics has brought a clearer understanding about the molecular basis of such variability. Major determinants of individual response to chemicals include important differences among more than a dozen superfamilies of enzymes, collectively termed xenobiotic- (foreign to the body) or drug-metabolizing enzymes. Although the role of these enzymes has classically been regarded as detoxification, these same enzymes also convert a number of inert compounds to highly toxic intermediates. Recently, many subtle as well as gross differences in the genes encoding these enzymes have been identified, which have been shown to result in marked variations in enzyme activity. It is now clear that each individual possesses a distinct complement of xenobiotic-metabolizing enzyme activities; this diversity might be thought of as a “metabolic fingerprint”. It is the complex interplay of these many different enzyme superfamilies which ultimately determines not only the fate and the potential for toxicity of a chemical in any given individual, but also assessment of exposure. In this article we have chosen to use the cytochrome P450 enzyme superfamily to illustrate the remarkable progress made in understanding individual response to chemicals. The development of relatively simple DNA-based tests designed to identify specific gene alterations in these enzymes is now providing more accurate predictions of individual response to chemical exposure. We hope the result will be preventive toxicology. In other words, each individual might learn about those chemicals to which he or she is particularly sensitive, thereby avoiding previously unpredictable toxicity or cancer.

Although it is not generally appreciated, human beings are exposed daily to a barrage of innumerable diverse chemicals. Many of these chemicals are highly toxic, and they are derived from a wide variety of environmental and dietary sources. The relationship between such exposures and human health has been, and continues to be, a major focus of biomedical research efforts worldwide.

What are some examples of this chemical bombardment? More than 400 chemicals from red wine have been isolated and characterized. At least 1,000 chemicals are estimated to be produced by a lighted cigarette. There are countless chemicals in cosmetics and perfumed soaps. Another major source of chemical exposure is agriculture: in the United States alone, farmlands receive more than 75,000 chemicals each year in the form of pesticides, herbicides and fertilizing agents; after uptake by plants and grazing animals, as well as fish in nearby waterways, humans (at the end of the food chain) ingest these chemicals. Two other sources of large concentrations of chemicals taken into the body are (a) drugs taken chronically and (b) exposure to hazardous substances in the workplace over a lifetime of employment.

It is now well established that chemical exposure may adversely affect many aspects of human health, causing chronic diseases and the development of many cancers. In the last decade or so, the molecular basis of many of these relationships has begun to be unravelled. In addition, the realization has emerged that humans differ markedly in their susceptibility to the harmful effects of chemical exposure.

Current efforts to predict human response to chemical exposure combine two fundamental approaches (figure 1): monitoring the extent of human exposure through biological markers (biomarkers), and predicting the likely response of an individual to a given level of exposure. Although both of these approaches are extremely important, it should be emphasized that the two are distinctly different from one another. This article will focus on the genetic factors underlying individual susceptibility to any particular chemical exposure. This field of research is broadly termed ecogenetics, or pharmacogenetics (see Kalow 1962 and 1992). Many of the recent advances in determining individual susceptibility to chemical toxicity have evolved from a greater appreciation of the processes by which humans and other mammals detoxify chemicals, and the remarkable complexity of the enzyme systems involved.

Figure 1. The interrelationships among exposure assessment, ethnic differences, age, diet, nutrition and genetic susceptibility assessment - all of which play a role in the individual risk of toxicity and cancer

We will first describe the variability of toxic responses in humans. We will then introduce some of the enzymes responsible for such variation in response, due to differences in the metabolism of foreign chemicals. Next, the history and nomenclature of the cytochrome P450 superfamily will be detailed. Five human P450 polymorphisms as well as several non-P450 polymorphisms will be briefly described; these are responsible for human differences in toxic response. We will then discuss an example to emphasize the point that genetic differences in individuals can influence exposure assessment, as determined by environmental monitoring. Lastly, we will discuss the role of these xenobiotic-metabolizing enzymes in critical life functions.

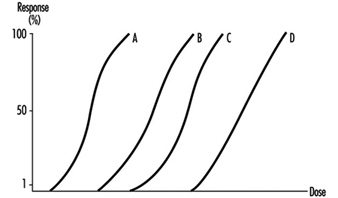

Variation in Toxic Response Among the Human Population

Toxicologists and pharmacologists commonly speak about the average lethal dose for 50% of the population (LD50), the average maximal tolerated dose for 50% of the population (MTD50), and the average effective dose of a particular drug for 50% of the population (ED50). However, how do these doses affect each of us on an individual basis? A highly sensitive individual may be 500 times more affected, or 500 times more likely to be affected, than the most resistant individual in a population; for these people, the LD50 (and MTD50 and ED50) values would have little meaning. LD50, MTD50 and ED50 values are only relevant when referring to the population as a whole.
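The distinction between a population statistic and an individual response can be sketched numerically. The short simulation below is illustrative only: it assumes that each person has a single lethal threshold and that thresholds follow a log-normal distribution. The names `simulate_thresholds` and `fraction_responding`, the median of 100 dose units and the spread parameter `sigma` are all hypothetical choices, not values from the studies cited here.

```python
import math
import random
import statistics


def simulate_thresholds(n, median_dose=100.0, sigma=1.0, seed=0):
    """Draw hypothetical individual lethal thresholds (arbitrary dose units).

    Assumption: thresholds are log-normally distributed around median_dose.
    """
    rng = random.Random(seed)
    return [median_dose * math.exp(rng.gauss(0.0, sigma)) for _ in range(n)]


def fraction_responding(thresholds, dose):
    """Fraction of the population whose threshold is at or below the dose."""
    return sum(t <= dose for t in thresholds) / len(thresholds)


thresholds = simulate_thresholds(10_000)

# The LD50 is a property of the whole population: the median threshold.
ld50 = statistics.median(thresholds)

# The ratio between the most resistant and the most sensitive individual
# shows how little the LD50 says about any one person.
spread = max(thresholds) / min(thresholds)

print(f"LD50 (population median): {ld50:.1f}")
print(f"Fraction responding at the LD50: {fraction_responding(thresholds, ld50):.2f}")
print(f"Most resistant / most sensitive threshold: {spread:.0f}-fold")
```

By construction, half of the simulated population responds at the LD50, while individual thresholds at the extremes may differ from it by a large factor.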

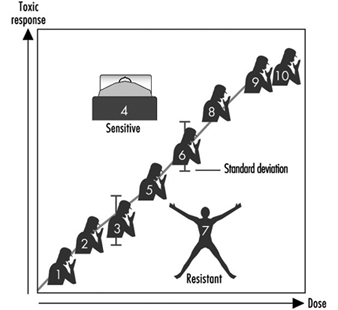

Figure 2 illustrates a hypothetical dose-response relationship for a toxic response by individuals in any given population. This generic diagram might represent bronchogenic carcinoma in response to the number of cigarettes smoked, chloracne as a function of dioxin levels in the workplace, asthma as a function of air concentrations of ozone or aldehyde, sunburn in response to ultraviolet light, prolonged clotting time as a function of aspirin intake, or gastrointestinal distress in response to the number of jalapeño peppers consumed. Generally, in each of these instances, the greater the exposure, the greater the toxic response. Most individuals in the population will show a toxic response close to the population mean for a given dose. The “resistant outlier” (lower right in figure 2) is an individual having less of a response at higher doses or exposures. A “sensitive outlier” (upper left) is an individual having an exaggerated response to a relatively small dose or exposure. These outliers, with extreme differences in response compared to the majority of individuals in the population, may represent important genetic variants that can help scientists in attempting to understand the underlying molecular mechanisms of a toxic response.

Figure 2. Generic relationship between any toxic response and the dose of any environmental, chemical or physical agent

Using these outliers in family studies, scientists in a number of laboratories have begun to appreciate the importance of Mendelian inheritance for a given toxic response. Subsequently, one can then turn to molecular biology and genetic studies to pinpoint the underlying mechanism at the gene level (genotype) responsible for the environmentally caused disease (phenotype).

Xenobiotic- or Drug-metabolizing Enzymes

How does the body respond to the myriad of exogenous chemicals to which we are exposed? Humans and other mammals have evolved highly complex metabolic enzyme systems comprising more than a dozen distinct superfamilies of enzymes. Almost every chemical to which humans are exposed will be modified by these enzymes, in order to facilitate removal of the foreign substance from the body. Collectively, these enzymes are frequently referred to as drug-metabolizing enzymes or xenobiotic-metabolizing enzymes. Actually, both terms are misnomers. First, many of these enzymes metabolize not only drugs but also hundreds of thousands of environmental and dietary chemicals. Second, all of these enzymes also have normal body compounds as substrates; none of these enzymes metabolizes only foreign chemicals.

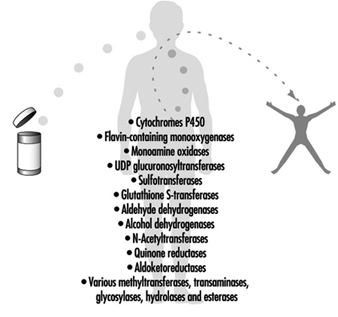

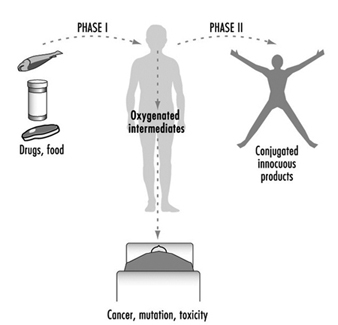

For more than four decades, the metabolic processes mediated by these enzymes have commonly been classified as either Phase I or Phase II reactions (figure 3). Phase I (“functionalization”) reactions generally involve relatively minor structural modifications of the parent chemical via oxidation, reduction or hydrolysis in order to produce a more water-soluble metabolite. Frequently, Phase I reactions provide a “handle” for further modification of a compound by subsequent Phase II reactions. Phase I reactions are primarily mediated by a superfamily of highly versatile enzymes, collectively termed cytochromes P450, although other enzyme superfamilies can also be involved (figure 4).

Figure 3. The classical designation of Phase I and Phase II xenobiotic- or drug-metabolizing enzymes

Figure 4. Examples of drug-metabolizing enzymes

Phase II reactions involve the coupling of a water-soluble endogenous molecule to a chemical (parent chemical or Phase I metabolite) in order to facilitate excretion. Phase II reactions are frequently termed “conjugation” or “derivatization” reactions. The enzyme superfamilies catalyzing Phase II reactions are generally named according to the endogenous conjugating moiety involved: for example, acetylation by the N-acetyltransferases, sulphation by the sulphotransferases, glutathione conjugation by the glutathione transferases, and glucuronidation by the UDP glucuronosyltransferases (figure 4). Although the major organ of drug metabolism is the liver, the levels of some drug-metabolizing enzymes are quite high in the gastrointestinal tract, gonads, lung, brain and kidney, and such enzymes are undoubtedly present to some extent in every living cell.

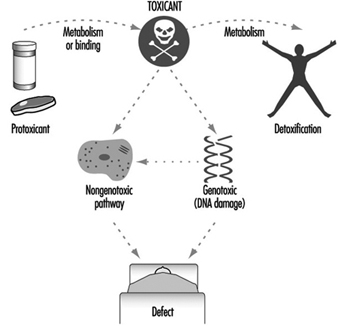

Xenobiotic-metabolizing Enzymes Represent Double-edged Swords

As we learn more about the biological and chemical processes leading to human health aberrations, it has become increasingly evident that drug-metabolizing enzymes function in an ambivalent manner (figure 3). In the majority of cases, lipid-soluble chemicals are converted to more readily excreted water-soluble metabolites. However, it is clear that on many occasions the same enzymes are capable of transforming other inert chemicals into highly reactive molecules. These intermediates can then interact with cellular macromolecules such as proteins and DNA. Thus, for each chemical to which humans are exposed, there exists the potential for the competing pathways of metabolic activation and detoxification.

Brief Review of Genetics

In human genetics, each gene (locus) is located on one of the 23 pairs of chromosomes. The two alleles (one present on each chromosome of the pair) can be the same, or they can be different from one another. For example, consider the B and b alleles, in which B (brown eyes) is dominant over b (blue eyes): individuals of the brown-eyed phenotype can have either the BB or Bb genotypes, whereas individuals of the blue-eyed phenotype can only have the bb genotype.
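The relationship between genotype and phenotype in this eye-colour example can be written out as a minimal sketch. The function names `phenotype` and `offspring_genotypes` are hypothetical, and the model assumes simple single-gene Mendelian inheritance with complete dominance, which is a deliberate simplification used here only to illustrate the terminology.

```python
from itertools import product


def phenotype(genotype, dominant="B"):
    """Return the phenotype for a two-allele genotype such as 'BB', 'Bb' or 'bb'.

    Assumption: complete dominance of the B allele over the b allele.
    """
    return "brown eyes" if dominant in genotype else "blue eyes"


def offspring_genotypes(parent1, parent2):
    """List the four equally likely offspring genotypes (a Punnett square).

    Each parent passes on one of its two alleles; alleles are written with
    the dominant (upper-case) allele first.
    """
    return sorted("".join(sorted(a + b)) for a, b in product(parent1, parent2))


for genotype in ("BB", "Bb", "bb"):
    print(genotype, "->", phenotype(genotype))

# Two heterozygous (Bb) parents can have a blue-eyed (bb) child.
print(offspring_genotypes("Bb", "Bb"))
```

The same genotype-to-phenotype logic applies to the enzyme polymorphisms discussed below, where the phenotype is a measurable enzyme activity rather than eye colour.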

A polymorphism is defined as two or more stably inherited phenotypes (traits)—derived from the same gene(s)—that are maintained in the population, often for reasons not necessarily obvious. For a gene to be polymorphic, the gene product must not be essential for development, reproductive vigour or other critical life processes. In fact, a “balanced polymorphism,” wherein the heterozygote has a distinct survival advantage over either homozygote (e.g., resistance to malaria, and the sickle-cell haemoglobin allele) is a common explanation for maintaining an allele in the population at otherwise unexplained high frequencies (see Gonzalez and Nebert 1990).

Human Polymorphisms of Xenobiotic-metabolizing Enzymes

Genetic differences in the metabolism of various drugs and environmental chemicals have been known for more than four decades (Kalow 1962 and 1992). These differences are frequently referred to as pharmacogenetic or, more broadly, ecogenetic polymorphisms. These polymorphisms represent variant alleles that occur at a relatively high frequency in the population and are generally associated with aberrations in enzyme expression or function. Historically, polymorphisms were usually identified following unexpected responses to therapeutic agents. More recently, recombinant DNA technology has enabled scientists to identify the precise alterations in genes that are responsible for some of these polymorphisms. Polymorphisms have now been characterized in many drug-metabolizing enzymes, including both Phase I and Phase II enzymes. As more and more polymorphisms are identified, it is becoming increasingly apparent that each individual may possess a distinct complement of drug-metabolizing enzymes. This diversity might be described as a “metabolic fingerprint”. It is the complex interplay of the various drug-metabolizing enzyme superfamilies within any individual that will ultimately determine his or her particular response to a given chemical (Kalow 1962 and 1992; Nebert 1988; Gonzalez and Nebert 1990; Nebert and Weber 1990).

Expressing Human Xenobiotic-metabolizing Enzymes in Cell Culture

How might we develop better predictors of human toxic responses to chemicals? Advances in defining the multiplicity of drug-metabolizing enzymes must be accompanied by precise knowledge as to which enzymes determine the metabolic fate of individual chemicals. Data gleaned from laboratory rodent studies have certainly provided useful information. However, significant interspecies differences in xenobiotic-metabolizing enzymes necessitate caution in extrapolating data to human populations. To overcome this difficulty, many laboratories have developed systems in which various cell lines in culture can be engineered to produce functional human enzymes that are stable and in high concentrations (Gonzalez, Crespi and Gelboin 1991). Successful production of human enzymes has been achieved in a variety of diverse cell lines from sources including bacteria, yeast, insects and mammals.

In order to define the metabolism of chemicals even more accurately, multiple enzymes have also been successfully produced in a single cell line (Gonzalez, Crespi and Gelboin 1991). Such cell lines provide valuable insights into the precise enzymes involved in the metabolic processing of any given compound and likely toxic metabolites. If this information can then be combined with knowledge regarding the presence and level of an enzyme in human tissues, these data should provide valuable predictors of response.

Cytochrome P450

History and nomenclature

The cytochrome P450 superfamily is one of the most studied drug-metabolizing enzyme superfamilies, and it shows a great deal of individual variability in response to chemicals. Cytochrome P450 is a convenient generic term used to describe a large superfamily of enzymes pivotal in the metabolism of innumerable endogenous and exogenous substrates. The term cytochrome P450 was first coined in 1962 to describe an unknown pigment in cells which, when reduced and bound with carbon monoxide, produced a characteristic absorption peak at 450 nm. Since the early 1980s, cDNA cloning technology has resulted in remarkable insights into the multiplicity of cytochrome P450 enzymes. To date, more than 400 distinct cytochrome P450 genes have been identified in animals, plants, bacteria and yeast. It has been estimated that any one mammalian species, such as humans, may possess 60 or more distinct P450 genes (Nebert and Nelson 1991). The multiplicity of P450 genes has necessitated the development of a standardized nomenclature system (Nebert et al. 1987; Nelson et al. 1993). First proposed in 1987 and updated on a biannual basis, the nomenclature system is based on divergent evolution, as inferred from amino acid sequence comparisons between P450 proteins. The P450 genes are divided into families and subfamilies: enzymes within a family display greater than 40% amino acid similarity, and those within the same subfamily display greater than 55% similarity. P450 genes are named with the root symbol CYP followed by an arabic numeral designating the P450 family, a letter denoting the subfamily, and a further arabic numeral designating the individual gene (Nelson et al. 1993; Nebert et al. 1991). Thus, CYP1A1 represents P450 gene 1 in family 1 and subfamily A.
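The naming rule just described can be illustrated with a small parser. This is a sketch, not an official tool: the names `CypName`, `parse_cyp` and `classify_pair` are hypothetical, the pattern accepts only the simple forms used in this article (such as CYP1A1 or CYP17), and the 40% and 55% cut-offs are applied as strict thresholds, which simplifies how the nomenclature committee assigns names in practice.

```python
import re
from typing import NamedTuple, Optional


class CypName(NamedTuple):
    family: int                # arabic numeral after the CYP root
    subfamily: Optional[str]   # capital letter, if present
    gene: Optional[int]        # final arabic numeral, if present


# Root symbol CYP, family number, then optionally subfamily letter + gene number.
_CYP_PATTERN = re.compile(r"^CYP(\d+)(?:([A-Z])(\d+))?$")


def parse_cyp(symbol):
    """Split a symbol such as 'CYP2C19' into family, subfamily and gene."""
    match = _CYP_PATTERN.match(symbol)
    if match is None:
        raise ValueError(f"not a recognised CYP symbol: {symbol!r}")
    family, subfamily, gene = match.groups()
    return CypName(int(family), subfamily, int(gene) if gene else None)


def classify_pair(similarity_percent):
    """Classify two P450 proteins by amino acid similarity (in percent).

    Assumption: the thresholds quoted in the text are used as strict cut-offs.
    """
    if similarity_percent > 55:
        return "same subfamily"
    if similarity_percent > 40:
        return "same family"
    return "different families"


print(parse_cyp("CYP1A1"))
print(parse_cyp("CYP2C19"))
print(classify_pair(48))
```

Parsing `CYP1A1` in this way yields family 1, subfamily A and gene 1, matching the example given in the text.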

As of February 1995, there are 403 CYP genes in the database, composed of 59 families and 105 subfamilies. These include eight lower eukaryotic families, 15 plant families, and 19 bacterial families. The 15 human P450 gene families comprise 26 subfamilies, 22 of which have been mapped to chromosomal locations throughout most of the genome. Some sequences are clearly orthologous across many species; for example, only one CYP17 (steroid 17α-hydroxylase) gene has been found in all vertebrates examined to date. Other sequences within a subfamily are highly duplicated, making the identification of orthologous pairs impossible (e.g., the CYP2C subfamily). Interestingly, human and yeast share an orthologous gene in the CYP51 family. Numerous comprehensive reviews are available for readers seeking further information on the P450 superfamily (Nelson et al. 1993; Nebert et al. 1991; Nebert and McKinnon 1994; Guengerich 1993; Gonzalez 1992).

The success of the P450 nomenclature system has resulted in similar terminology systems being developed for the UDP glucuronosyltransferases (Burchell et al. 1991) and flavin-containing mono-oxygenases (Lawton et al. 1994). Similar nomenclature systems based on divergent evolution are also under development for several other drug-metabolizing enzyme superfamilies (e.g., sulphotransferases, epoxide hydrolases and aldehyde dehydrogenases).

Recently, we divided the mammalian P450 gene superfamily into three groups (Nebert and McKinnon 1994)—those involved principally with foreign chemical metabolism, those involved in the synthesis of various steroid hormones, and those participating in other important endogenous functions. It is the xenobiotic-metabolizing P450 enzymes that assume the most significance for prediction of toxicity.

Xenobiotic-metabolizing P450 enzymes

P450 enzymes involved in the metabolism of foreign compounds and drugs are almost always found within families CYP1, CYP2, CYP3 and CYP4. These P450 enzymes catalyze a wide variety of metabolic reactions, with a single P450 often capable of metabolizing many different compounds. In addition, multiple P450 enzymes may metabolize a single compound at different sites. Also, a compound may be metabolized at the same, single site by several P450s, although at varying rates.

A most important property of the drug-metabolizing P450 enzymes is that many of these genes are inducible by the very substances which serve as their substrates. On the other hand, other P450 genes are induced by nonsubstrates. This phenomenon of enzyme induction underlies many drug-drug interactions of therapeutic importance.

Although present in many tissues, these particular P450 enzymes are found in relatively high levels in the liver, the primary site of drug metabolism. Some of the xenobiotic-metabolizing P450 enzymes exhibit activity toward certain endogenous substrates (e.g., arachidonic acid). However, it is generally believed that most of these xenobiotic-metabolizing P450 enzymes do not play important physiological roles—although this has not been established experimentally as yet. The selective homozygous disruption, or “knock-out,” of individual xenobiotic-metabolizing P450 genes by means of gene targeting methodologies in mice is likely to provide unequivocal information soon with regard to physiological roles of the xenobiotic-metabolizing P450s (for a review of gene targeting, see Capecchi 1994).

In contrast to P450 families encoding enzymes involved primarily in physiological processes, families encoding xenobiotic-metabolizing P450 enzymes display marked species specificity and frequently contain many active genes per subfamily (Nelson et al. 1993; Nebert et al. 1991). Given the apparent lack of physiological substrates, it is possible that P450 enzymes in families CYP1, CYP2, CYP3 and CYP4 that have appeared in the past several hundred million years have evolved as a means of detoxifying foreign chemicals encountered in the environment and diet. Clearly, evolution of the xenobiotic-metabolizing P450s would have occurred over a time period which far precedes the synthesis of most of the synthetic chemicals to which humans are now exposed. The genes in these four gene families may have evolved and diverged in animals due to their exposure to plant metabolites during the last 1.2 billion years—a process descriptively termed “animal-plant warfare” (Gonzalez and Nebert 1990). Animal-plant warfare is the phenomenon in which plants developed new chemicals (phytoalexins) as a defence mechanism in order to prevent ingestion by animals, and animals, in turn, responded by developing new P450 genes to accommodate the diversifying substrates. Providing further impetus to this proposal are the recently described examples of plant-insect and plant-fungus chemical warfare involving P450 detoxification of toxic substrates (Nebert 1994).

The following is a brief introduction to several of the human xenobiotic-metabolizing P450 enzyme polymorphisms in which genetic determinants of toxic response are believed to be of high significance. Until recently, P450 polymorphisms were generally suggested by unexpected variance in patient response to administered therapeutic agents. Several P450 polymorphisms are indeed named according to the drug with which the polymorphism was first identified. More recently, research efforts have focused on identification of the precise P450 enzymes involved in the metabolism of chemicals for which variance is observed and the precise characterization of the P450 genes involved. As described earlier, the measurable activity of a P450 enzyme towards a model chemical can be called the phenotype. The allelic differences in a P450 gene for each individual are termed the P450 genotype. As more and more scrutiny is applied to the analysis of P450 genes, the precise molecular basis of previously documented phenotypic variance is becoming clearer.

The CYP1A subfamily

The CYP1A subfamily comprises two enzymes in humans and all other mammals: these are designated CYP1A1 and CYP1A2 under standard P450 nomenclature. These enzymes are of considerable interest, because they are involved in the metabolic activation of many procarcinogens and are also induced by several compounds of toxicological concern, including dioxin. For example, CYP1A1 metabolically activates many compounds found in cigarette smoke. CYP1A2 metabolically activates many arylamines—associated with urinary bladder cancer—found in the chemical dye industry. CYP1A2 also metabolically activates 4-(methylnitrosamino)-1-(3-pyridyl)-1-butanone (NNK), a tobacco-derived nitrosamine. CYP1A1 and CYP1A2 are also found at higher levels in the lungs of cigarette smokers, due to induction by polycyclic hydrocarbons present in the smoke. The levels of CYP1A1 and CYP1A2 activity are therefore considered to be important determinants of individual response to many potentially toxic chemicals.

Toxicological interest in the CYP1A subfamily was greatly intensified by a 1973 report correlating the level of CYP1A1 inducibility in cigarette smokers with individual susceptibility to lung cancer (Kellermann, Shaw and Luyten-Kellermann 1973). The molecular basis of CYP1A1 and CYP1A2 induction has been a major focus of numerous laboratories. The induction process is mediated by a protein termed the Ah receptor to which dioxins and structurally related chemicals bind. The name Ah is derived from the aryl hydrocarbon nature of many CYP1A inducers. Interestingly, differences in the gene encoding the Ah receptor between strains of mice result in marked differences in chemical response and toxicity. A polymorphism in the Ah receptor gene also appears to occur in humans: approximately one-tenth of the population displays high induction of CYP1A1 and may be at greater risk than the other nine-tenths of the population for development of certain chemically induced cancers. The role of the Ah receptor in the control of enzymes in the CYP1A subfamily, and its role as a determinant of human response to chemical exposure, has been the subject of several recent reviews (Nebert, Petersen and Puga 1991; Nebert, Puga and Vasiliou 1993).

Are there other polymorphisms that might control the level of CYP1A proteins in a cell? A polymorphism in the CYP1A1 gene has also been identified, and this appears to influence lung cancer risk amongst Japanese cigarette smokers, although this same polymorphism does not appear to influence risk in other ethnic groups (Nebert and McKinnon 1994).

CYP2C19

Variations in the rate at which individuals metabolize the anticonvulsant drug (S)-mephenytoin have been well documented for many years (Guengerich 1989). Between 2% and 5% of Caucasians and as many as 25% of Asians are deficient in this activity and may be at greater risk of toxicity from the drug. This enzyme defect has long been known to involve a member of the human CYP2C subfamily, but the precise molecular basis of this deficiency has been the subject of considerable controversy. The major reason for this difficulty was the six or more genes in the human CYP2C subfamily. It was recently demonstrated, however, that a single-base mutation in the CYP2C19 gene is the primary cause of this deficiency (Goldstein and de Morais 1994). A simple DNA test, based on the polymerase chain reaction (PCR), has also been developed to identify this mutation rapidly in human populations (Goldstein and de Morais 1994).

CYP2D6

Perhaps the most extensively characterized variation in a P450 gene is that involving the CYP2D6 gene. More than a dozen examples of mutations, rearrangements and deletions affecting this gene have been described (Meyer 1994). This polymorphism was first suggested 20 years ago by clinical variability in patients’ response to the antihypertensive agent debrisoquine. Alterations in the CYP2D6 gene giving rise to altered enzyme activity are therefore collectively termed the debrisoquine polymorphism.

Prior to the advent of DNA-based studies, individuals had been classified as poor or extensive metabolizers (PMs, EMs) of debrisoquine based on metabolite concentrations in urine samples. It is now clear that alterations in the CYP2D6 gene may result in individuals displaying not only poor or extensive debrisoquine metabolism, but also ultrarapid metabolism. Most alterations in the CYP2D6 gene are associated with partial or total deficiency of enzyme function; however, individuals in two families have recently been described who possess multiple functional copies of the CYP2D6 gene, giving rise to ultrarapid metabolism of CYP2D6 substrates (Meyer 1994). This remarkable observation provides new insights into the wide spectrum of CYP2D6 activity previously observed in population studies. Alterations in CYP2D6 function are of particular significance, given the more than 30 commonly prescribed drugs metabolized by this enzyme. An individual’s CYP2D6 function is therefore a major determinant of both therapeutic and toxic response to administered therapy. Indeed, it has recently been argued that consideration of a patient’s CYP2D6 status is necessary for the safe use of both psychiatric and cardiovascular drugs.

The role of the CYP2D6 polymorphism as a determinant of individual susceptibility to human diseases such as lung cancer and Parkinson’s disease has also been the subject of intense study (Nebert and McKinnon 1994; Meyer 1994). While conclusions are difficult to define given the diverse nature of the study protocols utilized, the majority of studies appear to indicate an association between extensive metabolizers of debrisoquine (EM phenotype) and lung cancer. The reasons for such an association are presently unclear. However, the CYP2D6 enzyme has been shown to metabolize NNK, a tobacco-derived nitrosamine.

As DNA-based assays improve, enabling even more accurate assessment of CYP2D6 status, it is anticipated that the precise relationship of CYP2D6 to disease risk will be clarified. Whereas the extensive metabolizer may be linked with susceptibility to lung cancer, the poor metabolizer (PM phenotype) appears to be associated with Parkinson’s disease of unknown cause. Although these studies are also difficult to compare, it appears that PM individuals having a diminished capacity to metabolize CYP2D6 substrates (e.g., debrisoquine) have a 2- to 2.5-fold increase in risk of developing Parkinson’s disease.

CYP2E1

The CYP2E1 gene encodes an enzyme that metabolizes many chemicals, including drugs and many low-molecular-weight carcinogens. This enzyme is also of interest because it is highly inducible by alcohol and may play a role in liver injury induced by chemicals such as chloroform, vinyl chloride and carbon tetrachloride. The enzyme is primarily found in the liver, and the level of enzyme varies markedly between individuals. Close scrutiny of the CYP2E1 gene has resulted in the identification of several polymorphisms (Nebert and McKinnon 1994). A relationship has been reported in some studies between the presence of certain structural variations in the CYP2E1 gene and apparent lowered lung cancer risk; however, there are clear interethnic differences, and this possible relationship requires clarification.

The CYP3A subfamily

In humans, four enzymes have been identified as members of the CYP3A subfamily due to their similarity in amino acid sequence. The CYP3A enzymes metabolize many commonly prescribed drugs such as erythromycin and cyclosporin. The carcinogenic food contaminant aflatoxin B1 is also a CYP3A substrate. One member of the human CYP3A subfamily, designated CYP3A4, is the principal P450 in human liver as well as being present in the gastrointestinal tract. As is true for many other P450 enzymes, the level of CYP3A4 is highly variable between individuals. A second enzyme, designated CYP3A5, is found in only approximately 25% of livers; the genetic basis of this finding has not been elucidated. The importance of CYP3A4 or CYP3A5 variability as a factor in genetic determinants of toxic response has not yet been established (Nebert and McKinnon 1994).

Non-P450 Polymorphisms

Numerous polymorphisms also exist within other xenobiotic-metabolizing enzyme superfamilies (e.g., glutathione transferases, UDP glucuronosyltransferases, para-oxonases, dehydrogenases, N-acetyltransferases and flavin-containing mono-oxygenases). Because the ultimate toxicity of any P450-generated intermediate is dependent on the efficiency of subsequent Phase II detoxification reactions, the combined role of multiple enzyme polymorphisms is important in determining susceptibility to chemically induced diseases. The metabolic balance between Phase I and Phase II reactions (figure 3) is therefore likely to be a major factor in chemically induced human diseases and genetic determinants of toxic response.

The GSTM1 gene polymorphism

A well studied example of a polymorphism in a Phase II enzyme is that involving a member of the glutathione S-transferase enzyme superfamily, designated GST mu or GSTM1. This particular enzyme is of considerable toxicological interest because it appears to be involved in the subsequent detoxification of toxic metabolites produced from chemicals in cigarette smoke by the CYP1A1 enzyme. The identified polymorphism in this glutathione transferase gene involves a total absence of functional enzyme in as many as half of all Caucasians studied. This lack of a Phase II enzyme appears to be associated with increased susceptibility to lung cancer. By grouping individuals on the basis of both variant CYP1A1 genes and the deletion or presence of a functional GSTM1 gene, it has been demonstrated that the risk of developing smoking-induced lung cancer varies significantly (Kawajiri, Watanabe and Hayashi 1994). In particular, individuals displaying one rare CYP1A1 gene alteration, in combination with an absence of the GSTM1 gene, were at higher risk (as much as ninefold) of developing lung cancer when exposed to a relatively low level of cigarette smoke. Interestingly, there appear to be interethnic differences in the significance of variant genes which necessitate further study in order to elucidate the precise role of such alterations in susceptibility to disease (Kalow 1962; Nebert and McKinnon 1994; Kawajiri, Watanabe and Hayashi 1994).

Synergistic effect of two or more polymorphisms on the toxic response

A toxic response to an environmental agent may be greatly exaggerated by the combination of two pharmacogenetic defects in the same individual, for example, the combined effects of the N-acetyltransferase (NAT2) polymorphism and the glucose-6-phosphate dehydrogenase (G6PD) polymorphism.

Occupational exposure to arylamines constitutes a grave risk of urinary bladder cancer. Since the elegant studies of Cartwright in 1954, it has become clear that the N-acetylator status is a determinant of azo-dye-induced bladder cancer. There is a highly significant correlation between the slow-acetylator phenotype and the occurrence of bladder cancer, as well as the degree of invasiveness of this cancer in the bladder wall. By contrast, there is a significant association between the rapid-acetylator phenotype and the incidence of colorectal carcinoma. The N-acetyltransferase (NAT1, NAT2) genes have been cloned and sequenced, and DNA-based assays are now able to detect the more than a dozen allelic variants which account for the slow-acetylator phenotype. The NAT2 gene is polymorphic and responsible for most of the variability in toxic response to environmental chemicals (Weber 1987; Grant 1993).

Glucose-6-phosphate dehydrogenase (G6PD) is an enzyme critical in the generation and maintenance of NADPH. Low or absent G6PD activity can lead to severe drug- or xenobiotic-induced haemolysis, due to the absence of normal levels of reduced glutathione (GSH) in the red blood cell. G6PD deficiency affects at least 300 million people worldwide. More than 10% of African-American males exhibit the less severe phenotype, while certain Sardinian communities exhibit the more severe “Mediterranean type” at frequencies as high as one in every three persons. The G6PD gene has been cloned and localized to the X chromosome, and numerous diverse point mutations account for the large degree of phenotypic heterogeneity seen in G6PD-deficient individuals (Beutler 1992).

Thiozalsulphone, an arylamine sulpha drug, was found to cause a bimodal distribution of haemolytic anaemia in the treated population. When treated with certain drugs, individuals with the combination of G6PD deficiency plus the slow-acetylator phenotype are more affected than those with the G6PD deficiency alone or the slow-acetylator phenotype alone. G6PD-deficient slow acetylators are at least 40 times more susceptible than normal-G6PD rapid acetylators to thiozalsulphone-induced haemolysis.

Effect of genetic polymorphisms on exposure assessment

Exposure assessment and biomonitoring (figure 1) also require information on the genetic make-up of each individual. Given identical exposure to a hazardous chemical, the level of haemoglobin adducts (or other biomarkers) might vary by two or three orders of magnitude among individuals, depending upon each person’s metabolic fingerprint.

The same combined pharmacogenetics has been studied in chemical factory workers in Germany (table 1). Haemoglobin adducts among workers exposed to aniline and acetanilide are by far the highest in G6PD-deficient slow acetylators, as compared with the other possible combined pharmacogenetic phenotypes. This study has important implications for exposure assessment. These data demonstrate that, although two individuals might be exposed to the same ambient level of hazardous chemical in the workplace, the amount of exposure estimated from biomarkers such as haemoglobin adducts might differ between them by two or more orders of magnitude, owing to the underlying genetic predisposition of each individual. Likewise, the resulting risk of an adverse health effect may vary by two or more orders of magnitude.

Table 1: Haemoglobin adducts in workers exposed to aniline and acetanilide

| Acetylator status | G6PD deficiency | Hgb adducts |
|---|---|---|
| Fast | No | 2 |
| Slow | No | 30 |
| Fast | Yes | 20 |
| Slow | Yes | 100 |

Source: Adapted from Lewalter and Korallus 1985.
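The spread of values in table 1 can be expressed as a fold-difference and as orders of magnitude. The following is a minimal sketch in Python, not part of the original study; the function name `fold_and_orders` is a hypothetical helper, and the four numbers are simply the adduct values listed in the table, taken as group values.

```python
import math

# Hgb adduct values as listed in table 1 (units as reported in the source table).
hgb_adducts = [2, 30, 20, 100]


def fold_and_orders(values):
    """Return (fold difference, orders of magnitude) between the largest
    and the smallest value in `values`."""
    lowest, highest = min(values), max(values)
    fold = highest / lowest
    return fold, math.log10(fold)


fold, orders = fold_and_orders(hgb_adducts)
print(f"{fold:.0f}-fold spread, about {orders:.1f} orders of magnitude")
```

For these four values the spread between the lowest and the highest is 50-fold.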

Genetic differences in binding as well as metabolism

It should be emphasized that the same case made here for metabolism can also be made for binding. Heritable differences in the binding of environmental agents will greatly affect the toxic response. For example, differences in the mouse cdm gene can profoundly affect individual sensitivity to cadmium-induced testicular necrosis (Taylor, Heiniger and Meier 1973). Differences in the binding affinity of the Ah receptor are likely to affect dioxin-induced toxicity and cancer (Nebert, Petersen and Puga 1991; Nebert, Puga and Vasiliou 1993).

Figure 5 summarizes the role of metabolism and binding in toxicity and cancer. Toxic agents, as they exist in the environment or following metabolism or binding, elicit their effects by either a genotoxic pathway (in which damage to DNA occurs) or a non-genotoxic pathway (in which DNA damage and mutagenesis need not occur). Interestingly, it has recently become clear that “classical” DNA-damaging agents can operate via a reduced glutathione (GSH)-dependent nongenotoxic signal transduction pathway, which is initiated on or near the cell surface in the absence of DNA and outside the cell nucleus (Devary et al. 1993). Genetic differences in metabolism and binding remain, however, as the major determinants in controlling different individual toxic responses.

Figure 5. The general means by which toxicity occurs

Role of Drug-metabolizing Enzymes in Cellular Function

Genetically based variation in drug-metabolizing enzyme function is of major importance in determining individual response to chemicals. These enzymes are pivotal in determining the fate and time course of a foreign chemical following exposure.

As illustrated in figure 5, the importance of drug-metabolizing enzymes in individual susceptibility to chemical exposure may in fact present a far more complex issue than is evident from this simple discussion of xenobiotic metabolism. In other words, during the past two decades, genotoxic mechanisms (measurements of DNA adducts and protein adducts) have been greatly emphasized. However, what if nongenotoxic mechanisms are at least as important as genotoxic mechanisms in causing toxic responses?

As mentioned earlier, the physiological roles of many drug-metabolizing enzymes involved in xenobiotic metabolism have not been accurately defined. Nebert (1994) has proposed that, because of their presence on this planet for more than 3.5 billion years, drug-metabolizing enzymes were originally (and are now still primarily) responsible for regulating the cellular levels of many nonpeptide ligands important in the transcriptional activation of genes affecting growth, differentiation, apoptosis, homeostasis and neuroendocrine functions. Furthermore, the toxicity of most, if not all, environmental agents occurs by means of agonist or antagonist action on these signal transduction pathways (Nebert 1994). Based on this hypothesis, genetic variability in drug-metabolizing enzymes may have quite dramatic effects on many critical biochemical processes within the cell, thereby leading to important differences in toxic response. It is indeed possible that such a scenario may also underlie many idiosyncratic adverse reactions encountered in patients using commonly prescribed drugs.

Conclusions

The past decade has seen remarkable progress in our understanding of the genetic basis of differential response to chemicals in drugs, foods and environmental pollutants. Drug-metabolizing enzymes have a profound influence on the way humans respond to chemicals. As our awareness of drug-metabolizing enzyme multiplicity continues to evolve, we are increasingly able to make improved assessments of toxic risk for many drugs and environmental chemicals. This is perhaps most clearly illustrated in the case of the CYP2D6 cytochrome P450 enzyme. Using relatively simple DNA-based tests, it is possible to predict the likely response to any drug predominantly metabolized by this enzyme; this prediction will ensure the safer use of valuable, yet potentially toxic, medication.

The future will no doubt see an explosion in the identification of further polymorphisms (phenotypes) involving drug-metabolizing enzymes. This information will be accompanied by improved, minimally invasive DNA-based tests to identify genotypes in human populations.

Such studies should be particularly informative in evaluating the role of chemicals in the many environmental diseases of presently unknown origin. The consideration of multiple drug-metabolizing enzyme polymorphisms, in combination (e.g., table 1), is also likely to represent a particularly fertile research area. Such studies will clarify the role of chemicals in the causation of cancers. Collectively, this information should enable the formulation of increasingly individualized advice on avoidance of chemicals likely to be of individual concern. This is the field of preventive toxicology. Such advice will no doubt greatly assist all individuals in coping with the ever increasing chemical burden to which we are exposed.

Effect of Age, Sex and Other Factors

There are often large differences among humans in the intensity of response to toxic chemicals, and variations in susceptibility of an individual over a lifetime. These can be attributed to a variety of factors capable of influencing absorption rate, distribution in the body, biotransformation and/or excretion rate of a particular chemical. Apart from the known hereditary factors which have been clearly demonstrated to be linked with increased susceptibility to chemical toxicity in humans (see “Genetic determinants of toxic response”), other factors include: constitutional characteristics related to age and sex; pre-existing disease states or a reduction in organ function (non-hereditary, i.e., acquired); dietary habits, smoking, alcohol consumption and use of medications; concomitant exposure to biotoxins (various micro-organisms) and physical factors (radiation, humidity, extremely low or high temperatures or barometric pressures particularly relevant to the partial pressure of a gas), as well as concomitant physical exercise or psychological stress situations; previous occupational and/or environmental exposure to a particular chemical, and in particular concomitant exposure to other chemicals, not necessarily toxic (e.g., essential metals). The possible contributions of the aforementioned factors in either increasing or decreasing susceptibility to adverse health effects, as well as the mechanisms of their action, are specific for a particular chemical. Therefore only the most common factors, basic mechanisms and a few characteristic examples will be presented here, whereas specific information concerning each particular chemical can be found elsewhere in this Encyclopaedia.

According to the stage at which these factors act (absorption, distribution, biotransformation or excretion of a particular chemical), the mechanisms can be roughly categorized according to two basic consequences of interaction: (1) a change in the quantity of the chemical in a target organ, that is, at the site(s) of its effect in the organism (toxicokinetic interactions), or (2) a change in the intensity of a specific response to the quantity of the chemical in a target organ (toxicodynamic interactions). The most common mechanisms of either type of interaction are related to competition with other chemical(s) for binding to the same compounds involved in their transport in the organism (e.g., specific serum proteins) and/or for the same biotransformation pathway (e.g., specific enzymes) resulting in a change in the speed or sequence between initial reaction and final adverse health effect. However, both toxicokinetic and toxicodynamic interactions may influence individual susceptibility to a particular chemical. The influence of several concomitant factors can result in either: (a) additive effects—the intensity of the combined effect is equal to the sum of the effects produced by each factor separately, (b) synergistic effects—the intensity of the combined effect is greater than the sum of the effects produced by each factor separately, or (c) antagonistic effects—the intensity of the combined effect is smaller than the sum of the effects produced by each factor separately.
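The three outcomes of concomitant factors defined above can be illustrated with a short sketch. It assumes that the effect of each factor and of the combination has been measured on the same numerical scale; the function name `classify_interaction` and the 5% tolerance used to decide when a combined effect counts as "equal to the sum" are hypothetical choices made for illustration only.

```python
import math


def classify_interaction(effect_a, effect_b, combined, rel_tol=0.05):
    """Classify a combined effect relative to the sum of the separate effects.

    rel_tol is an assumed tolerance within which the combined effect is
    treated as equal to the sum (additive).
    """
    expected = effect_a + effect_b
    if math.isclose(combined, expected, rel_tol=rel_tol):
        return "additive"
    return "synergistic" if combined > expected else "antagonistic"


# Hypothetical effect intensities, in arbitrary units.
print(classify_interaction(10, 20, 30))  # combined equals the sum
print(classify_interaction(10, 20, 45))  # combined exceeds the sum
print(classify_interaction(10, 20, 18))  # combined is below the sum
```

In practice the tolerance would have to reflect the measurement uncertainty of the effect being studied.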

The quantity of a particular toxic chemical or characteristic metabolite at the site(s) of its effect in the human body can be more or less assessed by biological monitoring, that is, by choosing the correct biological specimen and optimal timing of specimen sampling, taking into account biological half-lives for a particular chemical in both the critical organ and in the measured biological compartment. However, reliable information concerning other possible factors that might influence individual susceptibility in humans is generally lacking, and consequently the majority of knowledge regarding the influence of various factors is based on experimental animal data.
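To see why the timing of sampling matters, consider how much of a chemical remains in a compartment after a given delay. The sketch below assumes simple first-order elimination from a single compartment, which is a simplification not stated in the text; the function name `fraction_remaining` and the numbers in the example are hypothetical.

```python
def fraction_remaining(elapsed, half_life):
    """Fraction of the initial quantity still present after `elapsed` time,
    assuming first-order elimination with the given biological half-life.
    Both arguments must be in the same time unit."""
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    if elapsed < 0:
        raise ValueError("elapsed must be non-negative")
    return 0.5 ** (elapsed / half_life)


# Hypothetical example: half-life of 30 days, specimen taken 60 days
# after exposure ended, i.e. after two half-lives.
print(fraction_remaining(60, 30))
```

Under this assumption, a specimen taken after two half-lives reflects only a quarter of the quantity present when exposure ended, so a compartment with a short half-life mainly indicates recent exposure.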

It should be stressed that in some cases relatively large differences exist between humans and other mammals in the intensity of response to an equivalent level and/or duration of exposure to many toxic chemicals; for example, humans appear to be considerably more sensitive to the adverse health effects of several toxic metals than are rats (commonly used in experimental animal studies). Some of these differences can be attributed to the fact that the transportation, distribution and biotransformation pathways of various chemicals are greatly dependent on subtle changes in the tissue pH and the redox equilibrium in the organism (as are the activities of various enzymes), and that the redox system of the human differs considerably from that of the rat.

This is obviously the case regarding important antioxidants such as vitamin C and glutathione (GSH), which are essential for maintaining redox equilibrium and which have a protective role against the adverse effects of the oxygen- or xenobiotic-derived free radicals which are involved in a variety of pathological conditions (Kehrer 1993). Humans cannot auto-synthesize vitamin C, contrary to the rat, and levels as well as the turnover rate of erythrocyte GSH in humans are considerably lower than those in the rat. Humans also lack some of the protective antioxidant enzymes, compared to the rat or other mammals (e.g., GSH-peroxidase is considered to be poorly active in human sperm). These examples illustrate the potentially greater vulnerability to oxidative stress in humans (particularly in sensitive cells, e.g., apparently greater vulnerability of the human sperm to toxic influences than that of the rat), which can result in a different response or greater susceptibility to the influence of various factors in humans compared to other mammals (Telišman 1995).

Influence of Age

Compared to adults, very young children are often more susceptible to chemical toxicity because of their relatively greater inhalation volumes and gastrointestinal absorption rate due to greater permeability of the intestinal epithelium, and because of immature detoxification enzyme systems and a relatively smaller excretion rate of toxic chemicals. The central nervous system appears to be particularly susceptible at the early stage of development with regard to neurotoxicity of various chemicals, for example, lead and methylmercury. On the other hand, the elderly may be susceptible because of chemical exposure history and increased body stores of some xenobiotics, or pre-existing compromised function of target organs and/or relevant enzymes resulting in lowered detoxification and excretion rate. Each of these factors can contribute to weakening of the body’s defences—a decrease in reserve capacity, causing increased susceptibility to subsequent exposure to other hazards. For example, the cytochrome P450 enzymes (involved in the biotransformation pathways of almost all toxic chemicals) can be either induced or have lowered activity because of the influence of various factors over a lifetime (including dietary habits, smoking, alcohol, use of medications and exposure to environmental xenobiotics).

Influence of Sex

Gender-related differences in susceptibility have been described for a large number of toxic chemicals (approximately 200), and such differences are found in many mammalian species. It appears that males are generally more susceptible to renal toxins and females to liver toxins. The causes of the different response between males and females have been related to differences in a variety of physiological processes (e.g., females are capable of additional excretion of some toxic chemicals through menstrual blood loss, breast milk and/or transfer to the foetus, but they experience additional stress during pregnancy, delivery and lactation), enzyme activities, genetic repair mechanisms, hormonal factors, or the presence of relatively larger fat depots in females, resulting in greater accumulation of some lipophilic toxic chemicals, such as organic solvents and some medications.

Influence of Dietary Habits