Ergonomics and Standardization

Origins

Standardization in the field of ergonomics has a relatively short history. It began in the early 1970s, when the first committees were founded at the national level (e.g., in Germany within the standardization institute DIN), and it continued at the international level after the foundation of the ISO (International Organization for Standardization) TC (Technical Committee) 159 “Ergonomics” in 1975. Ergonomics standardization has since also taken place at regional levels, for example at the European level within the CEN (Comité Européen de Normalisation, the European Committee for Standardization), which established its TC 122 “Ergonomics” in 1987. The existence of the latter committee underscores the fact that one of the important reasons for establishing committees to standardize ergonomics knowledge and principles lies in legal (and quasi-legal) regulations, especially with respect to safety and health, which require ergonomics principles and findings to be applied in the design of products and work systems. National laws requiring the application of well-established ergonomics findings were the reason for establishing the German ergonomics committee in 1970, and European Directives, especially the Machinery Directive (relating to safety standards), were responsible for establishing an ergonomics committee at the European level. Since legal regulations usually are not, cannot and should not be very specific, the task of specifying which ergonomics principles and findings should be applied was given to, or taken up by, ergonomics standardization committees. At the European level in particular, ergonomics standardization can help to provide broad and comparable conditions of machinery safety, thus removing barriers to free trade in machinery within the continent.

Perspectives

Ergonomics standardization thus started with a strongly protective, albeit preventive, perspective: ergonomics standards were developed with the aim of protecting workers against adverse effects at different levels of health protection. They were thus prepared with the following intentions in view:

- to ensure that assigned tasks do not exceed the limits of the performance capacities of the worker

- to prevent injury or any detrimental effect on the health of the worker, whether permanent or transient, in the short or the long run, even if the tasks in question can be performed, if only for a short time, without negative effects

- to ensure that tasks and working conditions do not lead to impairments, even if recovery is possible with time.

International standardization, on the other hand, which was less closely coupled to legislation, has always also tried to open a perspective towards standards that go beyond preventing and protecting against adverse effects (e.g., by specifying minimum/maximum values) and instead proactively provide optimal working conditions that promote the well-being and personal development of the worker, as well as the effectiveness, efficiency, reliability and productivity of the work system.

This is where it becomes evident that ergonomics, and especially ergonomics standardization, has very distinct social and political dimensions. Whereas the protective approach to safety and health is generally accepted among the parties involved (employers, unions, administration and ergonomics experts) at all levels of standardization, the proactive approach is not accepted equally by all parties. This may be because, especially where legislation requires the application of ergonomics principles (and thus, explicitly or implicitly, the application of ergonomics standards), some parties feel that such standards might limit their freedom of action or negotiation. Since international standards are less binding (adopting them as national standards is at the discretion of the national standardization committees), the proactive approach has been developed furthest at the international level of ergonomics standardization.

The fact that certain regulations would indeed restrict the discretion of those to whom they applied served to discourage standardization in certain areas, for example in connection with the European Directives under Article 118a of the Single European Act, which relate to safety and health in the use and operation of machinery at the workplace and in the design of work systems and workplaces. By contrast, under the Directives issued under Article 100a, which relate to safety and health in the design of machinery with regard to free trade in this machinery within the European Union (EU), European ergonomics standardization is mandated by the European Commission.

From an ergonomics point of view, however, it is difficult to understand why ergonomics in the design of machinery should differ from ergonomics in the use and operation of machinery within a work system. It is therefore to be hoped that the distinction will be abandoned in the future, since it seems to be more detrimental than beneficial to the development of a consistent body of ergonomics standards.

Types of Ergonomics Standards

The first international ergonomics standard to be developed (based on a German DIN national standard) is ISO 6385, “Ergonomic principles in the design of work systems”, published in 1981. It is the basic standard of the ergonomics series. It set the stage for the standards that followed by defining the basic concepts and stating the general principles of the ergonomic design of work systems, including tasks, tools, machinery, workstations, work space, work environment and work organization. This international standard, now undergoing revision, is a guideline standard: it provides guidelines to be followed, but not technical or physical specifications that have to be met. These are found in a different type of standard, the specification standard, for example those on anthropometry or thermal conditions. The two types fulfil different functions. Guideline standards are intended to show their users “what to do and how to do it” and indicate the principles that must or should be observed, for example with respect to mental workload. Specification standards provide users with detailed requirements that have to be met, for example on safety distances or measurement procedures, and compliance with these can be tested by specified procedures. This is not always possible with guideline standards, although despite their relative lack of specificity it can usually be demonstrated when and where guidelines have been violated. A subset of specification standards are “database” standards, which provide the user with relevant ergonomics data, for example, body dimensions.

CEN standards are classified as A-, B- and C-type standards, depending on their scope and field of application. A-type standards are general, basic standards which apply to all kinds of applications, B-type standards are specific for an area of application (which means that most of the ergonomics standards within the CEN will be of this type), and C-type standards are specific for a certain kind of machinery, for example, hand-held drilling machines.

Standardization Committees

Ergonomics standards, like other standards, are produced in the appropriate technical committees (TCs), their subcommittees (SCs) or working groups (WGs). For the ISO this is TC 159, for the CEN it is TC 122, and at the national level it is the respective national committees. Besides the ergonomics committees, ergonomics is also dealt with in TCs working on machine safety (e.g., CEN TC 114 and ISO TC 199), with which liaison and close cooperation are maintained. Liaisons are also established with other committees for which ergonomics might be relevant. Responsibility for ergonomics standards, however, is reserved for the ergonomics committees themselves.

A number of other organizations are engaged in producing ergonomics standards, such as the IEC (International Electrotechnical Commission); CENELEC, or the respective national committees in the electrotechnical field; CCITT (Comité Consultatif International Télégraphique et Téléphonique) or ETSI (European Telecommunications Standards Institute) in telecommunications; ECMA (European Computer Manufacturers Association) in computer systems; and CAMAC (Computer Assisted Measurement and Control Association) in new manufacturing technologies, to name only a few. The ergonomics committees have liaisons with some of these in order to avoid duplication of work or inconsistent specifications. With some organizations (e.g., the IEC), joint technical committees have even been established for cooperation in areas of mutual interest. With others, however, there is no coordination or cooperation at all. The main purpose of these organizations is to produce (ergonomics) standards specific to their field of activity. The number of such organizations at the different levels is rather large. As a result, obtaining a complete overview of ergonomics standardization becomes quite complicated, if not impossible. The present review will therefore be restricted to ergonomics standardization in the international and European ergonomics committees.

Structure of Standardization Committees

Ergonomics standardization committees are quite similar to one another in structure. Usually one TC within a standardization organization is responsible for ergonomics. This committee (e.g., ISO TC 159) mainly decides what should be standardized (e.g., work items) and how standardization within the committee is to be organized and coordinated, but standards are usually not prepared at this level. Other bodies sit below the TC level. The ISO, for example, has subcommittees (SCs), each responsible for a defined field of standardization: SC 1 for general ergonomic guiding principles, SC 3 for anthropometry and biomechanics, SC 4 for human-system interaction and SC 5 for the physical work environment. SCs within ISO TC 159 operate as steering committees for their field of responsibility and conduct the first round of voting, but they usually do not prepare standards themselves. That is done in their WGs, which are composed of experts nominated by their national committees, whereas SC and TC meetings are attended by national delegations representing national points of view. WGs within an ISO SC do the practical standardization work: they prepare drafts, work on comments, identify needs for standardization, and prepare proposals to the SC and TC, which then take the appropriate decisions or actions. CEN TC 122, by contrast, has working groups (WGs) directly below the TC level, each constituted to deal with a specified field within ergonomics standardization. Within the CEN, duties are not sharply distinguished at the WG level. WGs operate both as steering and as production committees, although a good deal of the work is accomplished in ad hoc groups. These are composed of members of the WG (nominated by their national committees) and established to prepare the draft of a standard.

Preparation of Ergonomics Standards

The preparation of ergonomics standards has changed quite markedly in recent years in view of the stronger emphasis now being placed on European and other international developments. In the beginning, national standards were prepared by experts from one country in their national committee and agreed upon by the interested parties among the general public of that country in a specified voting procedure. After a formal vote at the TC level that such an international standard should be prepared, these national standards were passed as input to the responsible SC and WG of ISO TC 159. The working group was composed of ergonomics experts (and experts from politically interested parties) from all participating member bodies (i.e., the national standardization organizations) of TC 159 who were willing to cooperate on the work item. It would then work on any inputs and prepare a working draft (WD). Once this draft was agreed upon in the WG, it became a committee draft (CD), which was distributed to the member bodies of the SC for approval and comment. The draft had to receive substantial support from the SC member bodies (i.e., at least two-thirds voting in favour), and the WG had to incorporate the national committees' comments into an improved version. A Draft International Standard (DIS) was then submitted for voting to all members of TC 159. If substantial support was achieved at this step from the member bodies of the TC (perhaps after editorial changes had been incorporated), this version was then published by the ISO as an International Standard (IS). Voting by the member bodies at the TC and SC level is based on voting at the national level, and experts or interested parties in each country can submit comments through their member bodies.
The procedure is roughly equivalent in CEN TC 122, except that there are no SCs below the TC level and that voting takes place with weighted votes (according to the size of the country), whereas within the ISO the rule is one country, one vote. If a draft fails at any step, it has to be revised and pass through the voting procedure again, unless the WG decides that an acceptable revision cannot be achieved.
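The sequence of drafts and ballots just described can be sketched as a small state machine. The sketch below is hypothetical and simplified. It applies the two-thirds majority the text gives for the CD ballot to every ballot, and it treats WG agreement on a WD as a passed ballot. It also uses invented member bodies and weights to contrast one country, one vote with weighted voting. The real ISO and CEN voting rules are not modelled in full here.

```python
from fractions import Fraction

# Stages named in the text: working draft -> committee draft ->
# Draft International Standard -> International Standard.
STAGES = ["WD", "CD", "DIS", "IS"]

# Assumption: the two-thirds majority stated for the CD ballot is applied
# to every ballot here, for both the ISO and (hypothetically) the CEN.
THRESHOLD = Fraction(2, 3)


def approved(votes, weights=None):
    """Return True if the share of votes in favour reaches THRESHOLD.

    votes   -- mapping of member body -> True (in favour) or False (against)
    weights -- optional mapping of member body -> integer weight, standing in
               for CEN-style weighted voting; None means one country, one vote
               (the ISO rule)
    """
    if weights is None:
        weights = {member: 1 for member in votes}
    total = sum(weights[member] for member in votes)
    in_favour = sum(weights[member] for member, yes in votes.items() if yes)
    return total > 0 and Fraction(in_favour, total) >= THRESHOLD


def next_stage(stage, passed):
    """Advance a draft one stage if its ballot (or, for a WD, WG agreement)
    passed; otherwise it stays at the same stage to be revised and re-voted."""
    if not passed:
        return stage
    return STAGES[min(STAGES.index(stage) + 1, len(STAGES) - 1)]


# Hypothetical member bodies A, B and C; C votes against.
votes = {"A": True, "B": True, "C": False}
print(approved(votes))                                     # 2 of 3 votes: passes
print(approved(votes, weights={"A": 1, "B": 1, "C": 10}))  # 2 of 12 weighted votes: fails
print(next_stage("CD", approved(votes)))
```

The same ballot can pass under one country, one vote and fail once the dissenting member carries a larger weight. This shows why the weighting rule matters even when the procedure is otherwise "roughly equivalent".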

International standards are then adopted as national standards if the national committees vote accordingly. By contrast, European Standards (ENs) must be adopted as national standards by the CEN members, and conflicting national standards must be withdrawn. This means that harmonized ENs take effect in all CEN countries. Because of their influence on trade, they are also relevant to manufacturers in any other country who intend to sell goods to customers in a CEN country.

ISO-CEN Cooperation

A cooperative agreement has been reached between the ISO and the CEN (the so-called Vienna Agreement) in order to avoid conflicting standards and duplication of work, and to allow non-CEN members to take part in developments in the CEN. It regulates the formalities and provides for a parallel voting procedure, which allows the same drafts to be voted on in the CEN and the ISO in parallel if the responsible committees agree to do so. Among the ergonomics committees the tendency is quite clear: avoid duplication of work (manpower and financial resources are too limited), avoid conflicting specifications, and try to achieve a consistent body of ergonomics standards based on a division of labour. CEN TC 122 is bound by the decisions of the EU administration and receives mandated work items to specify the requirements of European directives. ISO TC 159, by contrast, is free to standardize whatever it considers necessary or appropriate in the field of ergonomics. This has shifted the emphasis of both committees. The CEN concentrates on machinery and safety-related topics. The ISO concentrates on areas where market interests extend beyond Europe (e.g., work with VDUs and control-room design for process and related industries), on areas concerning the operation of machinery, as in work system design, and also on such areas as work environment and work organization. The intention, however, is to transfer work results from the CEN to the ISO, and vice versa, in order to build up a consistent body of ergonomics standards that is effective all over the world.

The formal procedure for producing standards is still the same today. But since the emphasis has shifted more and more to the international and European levels, more and more activities are being transferred to these committees. Drafts are now usually worked out directly in these committees and are no longer based on existing national standards. Once it has been decided that a standard should be developed, work starts directly at one of these supranational levels, based on whatever input is available, sometimes from scratch. This changes the role of the national ergonomics committees quite dramatically. They formerly developed their own national standards according to their national rules. Now they have the task of observing and influencing standardization at the supranational levels, either via the experts who work out the standards or via comments made at the different voting stages. (Within the CEN, a national standardization project is halted if a comparable project is being worked on at the CEN level at the same time.) This makes the task still more complicated, for two reasons. First, this influence can only be exerted indirectly. Second, the preparation of ergonomics standards is not just a matter of pure science but of bargaining, consensus and agreement, not least because of the political implications the standard might have. This, of course, is one of the reasons why producing an international or European ergonomics standard usually takes several years, and why ergonomics standards cannot reflect the latest state of the art in ergonomics. International ergonomics standards therefore have to be reviewed every five years and, if necessary, revised.

Fields of Ergonomics Standardization

International ergonomics standardization started with guidelines on the general principles of ergonomics in the design of work systems; these were laid down in ISO 6385, which is now under revision in order to incorporate new developments. The CEN has produced a similar basic standard (EN 614, Part 1, 1994), oriented more to machinery and safety, and is preparing a standard with guidelines on task design as a second part of this basic standard. The CEN thus emphasizes the importance of operator tasks in the design of machinery or work systems, for which appropriate tools or machinery have to be designed.

Another area where concepts and guidelines have been laid down in standards is the field of mental workload. ISO 10075, Part 1, defines terms and concepts (e.g., fatigue, monotony, reduced vigilance), and Part 2 (at the stage of a DIS in the latter half of the 1990s) provides guidelines for the design of work systems with respect to mental workload in order to avoid impairments.

SC 3 of ISO TC 159 and WG 1 of CEN TC 122 produce standards on anthropometry and biomechanics, covering, among other topics, methods of anthropometric measurements, body dimensions, safety distances and access dimensions, the evaluation of working postures and the design of workplaces in relation to machinery, recommended limits of physical strength and problems of manual handling.

SC 4 of ISO TC 159 shows how technological and social changes affect ergonomics standardization and the programme of a subcommittee. SC 4 started as “Signals and Controls”, standardizing principles for displaying information and designing control actuators. One of its work items was the visual display unit (VDU) used for office tasks. It soon became apparent, however, that standardizing the ergonomics of VDUs alone would not be sufficient. Standardization “around” this workstation, in the sense of a work system, was required. This covered areas such as hardware (e.g., the VDU itself, including displays, keyboards, non-keyboard input devices and workstations), work environment (e.g., lighting), work organization (e.g., task requirements) and software (e.g., dialogue principles, menu and direct manipulation dialogues). This led to a multipart standard, ISO 9241, covering “ergonomic requirements for office work with VDUs”. It currently comprises 17 parts, 3 of which have already reached IS status. This standard will be transferred to the CEN (as EN 29241), where it will specify requirements for the EU's VDU directive (90/270/EEC), even though this is a directive under Article 118a of the Single European Act. This series of standards provides guidelines as well as specifications, depending on the subject of the given part. It also introduces a new concept of standardization, the user performance approach, which might help to solve some of the problems in ergonomics standardization. The approach is described more fully in the chapter Visual Display Units.

The user performance approach is based on the idea that the aim of standardization is to prevent impairment and to provide optimal working conditions for the operator, not to establish technical specifications per se. Specification is thus regarded only as a means to the end of unimpaired, optimal user performance. What matters is that the operator achieves this unimpaired performance, regardless of whether a certain physical specification is met. This requires, first, that the unimpaired operator performance to be achieved (for example, reading performance on a VDU) be specified. Second, technical specifications must be developed, based on the available evidence, that will enable the desired performance to be achieved. The manufacturer is then free to follow these technical specifications, which ensure that the product complies with the ergonomics requirements. Alternatively, the manufacturer may compare the new product with a reference product known to fulfil the requirements, either by compliance with the technical specifications of the standard or by proven performance. The manufacturer must then demonstrate that the new product fulfils the performance requirements equally well or better, whether or not it complies with the technical specifications of the standard. The standard specifies the test procedure to be followed for demonstrating conformance with its user performance requirements.
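The two routes to conformance just described can be expressed as a simple decision rule. This is a minimal sketch, not a test procedure taken from any standard. The `Product` class and the measure names are hypothetical, as is the assumption that higher values are better on every measure.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    # True if the product follows the standard's technical specifications.
    meets_technical_specs: bool
    # Measured user performance from the standard's test procedure; measure
    # names are hypothetical and higher values are assumed to be better.
    performance: dict = field(default_factory=dict)


def conforms(product, reference=None):
    """Return True if `product` satisfies the user performance requirements."""
    # Route 1: comply with the technical specifications of the standard.
    if product.meets_technical_specs:
        return True
    # Route 2: perform equally well or better than a reference product known
    # to fulfil the requirements, on every measure recorded for the reference.
    if reference is None or not reference.performance:
        return False  # no valid comparison is possible
    return all(
        product.performance.get(measure, float("-inf")) >= value
        for measure, value in reference.performance.items()
    )


reference = Product(True, {"reading_speed": 200, "reading_accuracy": 0.95})
new_display = Product(False, {"reading_speed": 210, "reading_accuracy": 0.96})
print(conforms(new_display, reference))
```

A measure missing from the new product counts as a failure rather than being skipped. This mirrors the requirement that the new product be shown to perform at least as well as the reference.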

This approach helps to overcome two problems. First, standards can restrict new developments, because their specifications are based on the state of the art (and technology) at the time of their preparation. Specifications based on a certain technology (e.g., cathode-ray tubes) may be inappropriate for other technologies. Regardless of the technology, however, the user of a display device (for instance) should be able to read and understand the displayed information effectively and efficiently, without any impairment. Performance in this case must not, however, be restricted to pure output (as measured in terms of speed or accuracy) but must also include considerations of comfort and effort.

The second problem this approach can deal with is that of interactions between conditions. Physical specifications are usually unidimensional, leaving other conditions out of consideration. Where interactive effects exist, however, this can be misleading or even wrong. Specifying performance requirements instead, and leaving the means of achieving them to the manufacturer, makes any solution that satisfies these requirements acceptable. Treating specification as a means to an end thus represents a genuinely ergonomic perspective.

Another standard taking a work system approach is being prepared in SC 4; it concerns the design of control rooms, for instance for process industries or power stations. The result is expected to be a multipart standard (ISO 11064), whose parts deal with aspects of control-room design such as layout, operator workstation design, and the design of displays and input devices for process control. Because these work items and the approach taken clearly go beyond the design of “displays and controls”, SC 4 has been renamed “Human-System Interaction”.

Environmental problems, especially those relating to thermal conditions and communication in noisy environments, are dealt with in SC 5, where standards have been or are being prepared on measurement methods, methods for the estimation of heat stress, conditions of thermal comfort, metabolic heat production, and on auditory and visual danger signals, speech interference level and the assessment of speech communication.

CEN TC 122 covers roughly the same fields of ergonomics standardization, although with a different emphasis and a different structure of its working groups. It is intended, however, that by a division of labour between the ergonomics committees, and mutual acceptance of work results, a general and usable set of ergonomics standards will be developed.

Analysis of Activities, Tasks and Work Systems

It is difficult to speak of work analysis without placing it in the perspective of recent changes in the industrial world. The nature of activities and the conditions in which they are carried out have evolved considerably in recent years. The factors behind these changes have been numerous, but two have proved crucial. On the one hand, technological progress, with its ever-quickening pace and the upheavals brought about by information technologies, has revolutionized jobs (De Keyser 1986). On the other hand, the uncertainty of the economic market has required more flexibility in personnel management and work organization. Workers have gained a wider view of the production process, one that is less routine-oriented and undoubtedly more systematic. At the same time, however, they have lost their exclusive links with an environment, a team and a production tool. It is difficult to view these changes with serenity, but we have to face the fact that a new industrial landscape has been created. It is sometimes more enriching for those workers who can find their place in it, but also filled with pitfalls and worries for those who are marginalized or excluded. However, one idea is being taken up in firms and has been confirmed by pilot experiments in many countries. It should be possible to guide changes and soften their adverse effects by means of relevant analyses and by using all available means of negotiation between the different actors in the workplace. It is within this context that work analyses must be placed today: as tools that allow us to describe tasks and activities better in order to guide interventions of different kinds, such as training, the introduction of new forms of organization or the design of tools and work systems.
We speak of analyses, and not just one analysis, because many exist. They differ according to the theoretical and cultural contexts in which they were developed, the particular goals they pursue, the evidence they collect, and the analyst's concern for either specificity or generality. In this article, we will limit ourselves to presenting a few characteristics of work analyses and emphasizing the importance of collective work. Our conclusions will point to other paths that the limits of this text prevent us from pursuing in greater depth.

Some Characteristics of Work Analyses

The context

If the primary goal of any work analysis is to describe what the operator does, or should do, researchers have often found it indispensable to place this more precisely in its context. Depending on their own views, but in broadly similar ways, they refer to the concepts of context, situation, environment, work domain, work world or work environment. The problem lies less in the nuances between these terms than in selecting the variables that need to be described in order to give them a useful meaning. The world is vast, industry is complex, and the characteristics that could be referred to are innumerable. Two tendencies can be noted among authors in the field. The first sees the description of the context as a means of capturing the reader's interest and providing him or her with an adequate semantic framework. The second takes a different theoretical perspective: it attempts to embrace both context and activity, describing only those elements of the context capable of influencing the behavior of operators.

The semantic framework

Context has evocative power. For an informed reader, it is enough to read about an operator in a control room engaged in a continuous process to call up a picture of work by command and remote surveillance, where the tasks of detection, diagnosis and regulation predominate. What variables need to be described to create a sufficiently meaningful context? It all depends on the reader. Nonetheless, there is a consensus in the literature on a few key variables: the nature of the economic sector, the type of production or service, and the size and geographical location of the site are useful.

The production processes, the tools or machines and their level of automation give an idea of certain constraints and of the qualifications required. The structure of the workforce, together with its age, qualifications and experience, provides crucial data whenever the analysis concerns aspects of training or organizational flexibility. The established work organization depends more on the firm's philosophy than on technology. Its description includes, notably, work schedules, the degree of centralization of decisions and the types of control exercised over the workers. Other elements may be added in particular cases, linked to the firm's history and culture, its economic situation, work conditions, and any restructuring, mergers and investments. There are at least as many classification systems as there are authors, and numerous descriptive lists are in circulation. In France, a special effort has been made to generalize simple descriptive methods. These methods notably allow certain factors to be ranked according to whether or not they are satisfactory for the operator (RNUR 1976; Guelaud et al. 1977).

The description of relevant factors regarding the activity

The taxonomy of complex systems described by Rasmussen, Pejtersen, and Schmidts (1990) represents one of the most ambitious attempts to cover both the context and its influence on the operator. Its main idea is to integrate systematically the different elements of which the context is composed and to bring out the degrees of freedom and the constraints within which individual strategies can develop. Its aim of exhaustiveness makes it difficult to use. However, its multiple modes of representation, including graphs illustrating the constraints, have a heuristic value that is bound to appeal to many readers. Other approaches are more targeted, seeking to select the factors that can influence a specific activity. Hence, with an interest in process control in a changing environment, Brehmer (1990) proposes a series of temporal characteristics of the context that affect the operator's control and anticipation (see figure 1). This typology was developed from “micro-worlds”, computerized simulations of dynamic situations. The author himself, along with many others since, has nevertheless applied it to the continuous-process industry (Van Daele 1992). For certain activities, the influence of the environment is well known and the selection of factors is not too difficult. Thus, if we are interested in heart rate in the work environment, we often limit ourselves to describing the air temperature, the physical demands of the task, or the age and training of the subject. We do so even though we know that we may be leaving out relevant elements. For other activities, the choice is more difficult. Studies of human error, for example, show that the factors capable of producing it are numerous (Reason 1989). Sometimes, when theoretical knowledge is insufficient, only statistical processing combining context and activity analysis allows the relevant contextual factors to be brought out (Fadier 1990).

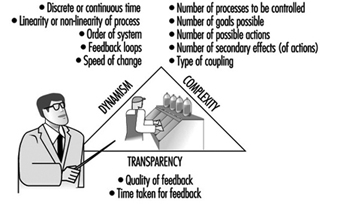

Figure 1. The criteria and sub-criteria of the taxonomy of micro-worlds proposed by Brehmer (1990)

The Task or the Activity?

The task

The task is defined by its objectives, its constraints and the means required to achieve it. A function within the firm is generally characterized by a set of tasks. The realized task (the task as actually performed) differs from the prescribed task scheduled by the firm for a large number of reasons: operators’ strategies vary both within and between individuals, the environment fluctuates, and random events require responses that often fall outside the prescribed framework. Finally, the task is not always planned with accurate knowledge of its conditions of execution, hence the need for adaptations in real time. But even if the task is updated during the activity, sometimes to the point of being transformed, it still remains the central reference.

Questionnaires, inventories, and taxonomies of tasks are numerous, especially in the English-language literature; the reader will find excellent reviews in Fleishman and Quaintance (1984) and in Greuter and Algera (1989). Some of these instruments are merely lists of elements (for example, action verbs describing tasks) that are checked off according to the function studied. Others adopt a hierarchical principle, characterizing a task as nested elements ordered from the global to the particular. These methods are standardized and can be applied to a large number of functions; they are simple to use, and they greatly shorten the analytical stage. But when the aim is to define a specific job, they are too static and too general to be useful.

Next, there are instruments that require more skill on the part of the researcher: since the elements of analysis are not predefined, it is up to the researcher to characterize them. The critical incident technique of Flanagan (1954), now rather dated, belongs to this group: the observer describes a function by reference to its difficulties and identifies the incidents that the individual will have to face.

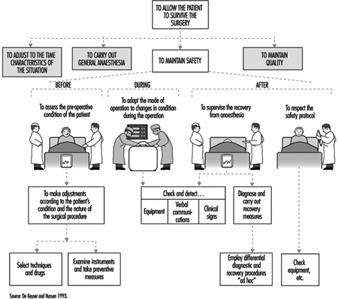

Cognitive task analysis (Roth and Woods 1988) follows the same path. This technique aims to bring to light the cognitive requirements of a job. One way to do this is to break the job down into goals, constraints and means. Figure 2 shows how the task of an anesthetist, characterized first by the very global goal of patient survival, can be broken down into a series of sub-goals, which can themselves be classified as actions and means to be employed. More than 100 hours of observation in the operating theatre, followed by interviews with anesthetists, were needed to obtain this synoptic “photograph” of the requirements of the function. Although quite laborious, the technique is nevertheless useful in ergonomics for determining whether every goal of a task is provided with the means of attaining it. It also helps in understanding the complexity of a task (its particular difficulties and conflicting goals, for example) and facilitates the interpretation of certain human errors. But, like other methods, it suffers from the lack of a descriptive language (Grant and Mayes 1991). Moreover, it does not allow hypotheses to be formulated about the nature of the cognitive processes brought into play to attain the goals in question.

Figure 2. Cognitive analysis of the task: general anesthesia
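The check mentioned above, whether every goal of a task is provided with the means of attaining it, becomes mechanical once a goal/means decomposition is written down as a tree. The following is a minimal Python sketch under stated assumptions: the `Goal` class and the `goals_without_means` function are hypothetical, and the anesthesia goals in the example are invented for illustration; they are not the content of figure 2.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Goal:
    """One node of a goal/means decomposition (hypothetical structure)."""
    name: str
    means: list[str] = field(default_factory=list)       # actions or resources serving this goal
    subgoals: list[Goal] = field(default_factory=list)   # finer-grained goals


def goals_without_means(goal: Goal, path: tuple[str, ...] = ()) -> list[str]:
    """Return the paths of leaf goals for which no means has been identified."""
    here = path + (goal.name,)
    if not goal.subgoals:
        # A leaf goal counts as covered only if at least one means is attached to it.
        return [] if goal.means else [" > ".join(here)]
    missing: list[str] = []
    for sub in goal.subgoals:
        missing.extend(goals_without_means(sub, here))
    return missing


# Illustrative decomposition (invented, not taken from figure 2).
task = Goal("patient survival", subgoals=[
    Goal("maintain oxygenation", means=["ventilator", "pulse oximeter"]),
    Goal("maintain circulation", means=["fluid infusion", "blood pressure monitor"]),
    Goal("keep patient unconscious", means=[]),
])

print(goals_without_means(task))  # ['patient survival > keep patient unconscious']
```

In a real analysis the tree would come from observation and interviews; the code only makes explicit which leaves of that tree are left without any means.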

Other approaches have analyzed the cognitive processes associated with given tasks by formulating hypotheses about the information processing needed to accomplish them. A frequently used cognitive model of this kind is Rasmussen’s (1986), which distinguishes, according to the nature of the task and its familiarity to the subject, three possible levels of activity, based either on skill-based habits and reflexes, on acquired rule-based procedures or on knowledge-based procedures. But other models or theories that reached the height of their popularity during the 1970s remain in use. Thus the theory of optimal control, which considers the human operator as a controller of discrepancies between assigned and observed goals, is still sometimes applied to cognitive processes. And modeling by means of networks of interconnected tasks and flow charts continues to inspire the authors of cognitive task analyses; figure 3 provides a simplified description of the behavioral sequences in an energy-control task, constructing a hypothesis about certain mental operations. All these attempts reflect researchers’ concern to bring together in one description not only elements of the context but also the task itself and the cognitive processes underlying it, and to reflect the dynamic character of work as well.

Figure 3. A simplified description of the determinants of a behavior sequence in energy control tasks: a case of unacceptable consumption of energy

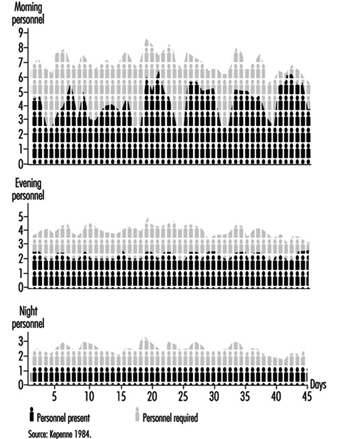

Since the advent of the scientific organization of work, the concept of the prescribed task has been criticized as imposing on workers tasks that are not only designed without consulting their needs but are often accompanied by a specified performance time, a restriction not welcomed by many workers. Even if this imposition has become rather more flexible today, and even if workers now contribute more often to the design of tasks, an assigned time for tasks remains necessary for schedule planning and remains an essential component of work organization. The quantification of time should not always be perceived negatively: it constitutes a valuable indicator of workload. A simple but common method of measuring the time pressure exerted on a worker consists of dividing the time needed to execute a task by the time available. The closer this quotient is to unity, the greater the pressure (Wickens 1992). Moreover, quantification can support flexible but appropriate personnel management. Take the case of nurses, for whom the technique of predictive task analysis has become widespread, for example in the Canadian Planning of Required Nursing system (PRN 80) (Kepenne 1984) or one of its European variants. Thanks to such task lists, accompanied by their mean execution times, one can each morning, taking into account the number of patients and their medical conditions, establish a care schedule and a distribution of personnel. Far from being a constraint, PRN 80 has in a number of hospitals demonstrated a shortage of nursing personnel, since the technique allows a difference to be established (see figure 4) between the desired and the observed, that is, between the number of staff needed and the number available, and even between the tasks planned and the tasks carried out.
The times calculated are only averages, and fluctuations in the situation do not always make them applicable, but this drawback is minimized by a flexible organization that accepts adjustments and allows the personnel to participate in making them.
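The time-pressure quotient and the task-list staffing calculation described above can be sketched together in Python. This is a hedged illustration of the arithmetic, not the PRN 80 method itself: the task names, mean times, daily counts and the 450-minute shift are hypothetical values, and real PRN-type systems rely on their own validated task catalogues.

```python
import math

# Hypothetical mean execution times, in minutes per occurrence (not PRN 80 values).
MEAN_TASK_MINUTES = {
    "hygiene care": 25.0,
    "medication round": 15.0,
    "vital signs": 10.0,
}


def time_pressure(time_required: float, time_available: float) -> float:
    """Time needed divided by time available; the closer to 1, the greater the pressure."""
    if time_available <= 0:
        raise ValueError("time_available must be positive")
    return time_required / time_available


def planned_minutes(plan: dict[str, int]) -> float:
    """Total care time for a day's plan, mapping task name -> number of occurrences."""
    return sum(MEAN_TASK_MINUTES[task] * count for task, count in plan.items())


def required_staff(plan: dict[str, int], shift_minutes: float) -> int:
    """Smallest whole number of staff whose combined shift time covers the planned care."""
    return math.ceil(planned_minutes(plan) / shift_minutes)


# One morning's hypothetical plan for a ward.
plan = {"hygiene care": 18, "medication round": 30, "vital signs": 40}
shift = 450.0   # minutes per nurse per shift (assumed)
on_duty = 2     # nurses actually available (assumed)

needed = required_staff(plan, shift)
print("needed:", needed, "on duty:", on_duty, "gap:", on_duty - needed)
print("time pressure:", round(time_pressure(planned_minutes(plan), on_duty * shift), 2))
```

With these invented figures the plan totals 1,300 minutes, so three nurses are needed while two are on duty, and the quotient is about 1.44. A value above 1 simply means that, at the assumed mean times, the planned work cannot fit into the time available: the kind of gap between staff required and staff present that figure 4 reports.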

Figure 4. Discrepancies between the numbers of personnel present and required on the basis of PRN80

The activity, the evidence, and the performance

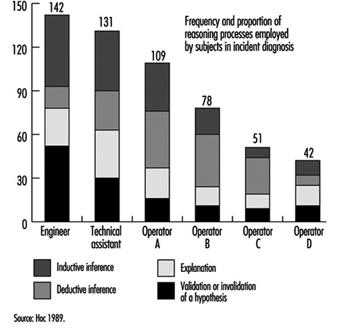

An activity is defined as the set of behaviors and resources used by the operator so that work occurs, that is, the transformation or production of goods or the rendering of a service. This activity can be understood through observation in different ways. Faverge (1972) described four forms of analysis. The first is an analysis in terms of gestures and postures, in which the observer identifies, within the operator’s visible activity, classes of behavior that are recognizable and repeated during work. These behaviors are often coupled with a precise physiological response, for example the heart rate, which allows the physical load associated with each of them to be assessed. The second form of analysis is in terms of information uptake. Through direct observation, or with the aid of cameras or eye-movement recorders, the observer identifies the set of signals picked up by the operator in the surrounding information field. This analysis is particularly useful in cognitive ergonomics for better understanding the information processing carried out by the operator. The third type of analysis is in terms of regulation. The idea is to identify the adjustments the operator makes to the activity in order to deal either with fluctuations in the environment or with changes in his or her own condition. Here, context enters the analysis directly. One of the most frequently cited research projects in this area is that of Sperandio (1972), who studied the activity of air traffic controllers and identified important changes of strategy as air traffic increased. He interpreted them as an attempt to simplify the activity so as to maintain an acceptable workload while continuing to meet the requirements of the task. The fourth is an analysis in terms of thought processes. This type of analysis has been widely used in the ergonomics of highly automated workstations.
Indeed, designing computerized aids, and notably intelligent aids, for the operator requires a thorough understanding of the way the operator reasons in order to solve certain problems. The reasoning involved in scheduling, anticipation and diagnosis has been the subject of analyses, an example of which can be found in figure 5. However, mental activity can only be inferred from the evidence. Apart from certain observable aspects of behavior, such as eye movements and problem-solving time, most of these analyses rely on verbal responses. In recent years, particular emphasis has been placed on the knowledge needed to accomplish certain activities, with researchers trying not to postulate this knowledge at the outset but to bring it out through the analysis itself.

Figure 5. Analysis of mental activity. Strategies in the control of processes with long response times: the need for computerized support in diagnosis

Such efforts have brought to light the fact that almost identical performance can be achieved with very different levels of knowledge, as long as operators are aware of their limits and apply strategies adapted to their capabilities. Thus, in our study of the start-up of a thermoelectric plant (De Keyser and Housiaux 1989), start-ups were carried out by both engineers and operators. The theoretical and procedural knowledge of the two groups, elicited by means of interviews and questionnaires, was very different; the operators in particular sometimes had an erroneous understanding of the functional links between process variables. In spite of this, the performance of the two groups was very close. But the operators took more variables into account to verify that the start-up was under control, and they carried out verifications more frequently. Similar results were obtained by Amalberti (1991), who pointed to the existence of metaknowledge that allows experts to manage their own resources.

What evidence of activity is appropriate to elicit? Its nature, as we have seen, depends closely on the form of analysis planned; its form varies with the degree of methodological care exercised by the observer. Provoked evidence is distinguished from spontaneous evidence, and concomitant evidence from subsequent evidence. Generally speaking, when the nature of the work allows, concomitant and spontaneous evidence are to be preferred, since they avoid various drawbacks such as the unreliability of memory, observer interference and rationalizing reconstruction on the part of the subject. Verbalizations illustrate these distinctions. Spontaneous verbalizations are verbal exchanges or monologues expressed without any request from the observer; provoked verbalizations are made at the observer’s specific request, such as the request to “think aloud” that is well known in the cognitive literature. Both types can be produced in real time, during work, and are then concomitant.

They can also be subsequent, as in interviews or in the verbalizations subjects produce while viewing videotapes of their work. As for the validity of verbalizations, the reader should not ignore the doubts raised by the controversy between Nisbett and De Camp Wilson (1977) and White (1988), nor the precautions suggested by numerous authors who, while recognizing the importance of verbalizations for studying mental activity, are aware of the methodological difficulties involved (Ericsson and Simon 1984; Savoyant and Leplat 1983; Caverni 1988; Bainbridge 1986).

The organization, processing and formalization of this evidence require descriptive languages and sometimes analyses that go beyond field observation. The mental activities inferred from the evidence, for example, remain hypothetical. Today they are often described using languages derived from artificial intelligence, in terms of schemas, production rules and connectionist networks. Moreover, computerized simulations (micro-worlds) have become widely used to pinpoint certain mental activities, even though the validity of their results, given the complexity of the industrial world, is subject to debate. Finally, we must mention cognitive models of certain mental activities drawn from the field. Among the best known are the model of diagnosis by the operator of a nuclear power plant, developed at ISPRA (Decortis and Cacciabue 1990), and the model of combat pilot planning developed at the Centre d’études et de recherches de médecine aérospatiale (CERMA) (Amalberti et al. 1989).
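As an illustration of one of these formalisms, a mental activity inferred from field evidence can be written as production rules (if these conditions are observed, then draw this conclusion) and run by simple forward chaining. The Python sketch below is a hypothetical toy: the rules are invented for illustration and do not reproduce the ISPRA or CERMA models.

```python
# A production rule: a set of conditions and the conclusion it licenses.
Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Fire every rule whose conditions hold until no new conclusion appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Invented rules describing how an operator might reason about a drifting temperature.
RULES: list[Rule] = [
    (frozenset({"temperature rising", "coolant valve open"}), "suspect cooling fault"),
    (frozenset({"suspect cooling fault", "coolant flow low"}), "check coolant pump"),
]

observed = {"temperature rising", "coolant valve open", "coolant flow low"}
print(sorted(forward_chain(observed, RULES) - observed))
# ['check coolant pump', 'suspect cooling fault']
```

Comparing the conclusions such a rule base reaches with those of real operators on the same observations is one concrete form of the model-versus-operator comparison discussed next.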

Measuring the discrepancies between the performance of these models and that of real operators is a fruitful field in activity analysis. Performance is the outcome of the activity, the final response given by the subject to the requirements of the task. It is expressed at the level of production (productivity, quality, errors, incidents, accidents) and even, at a more global level, in absenteeism or turnover. But it must also be identified at the individual level: subjective expressions of satisfaction, stress, fatigue or workload, as well as many physiological responses, are also performance indicators. Only the entire set of data permits interpretation of the activity, that is, judging whether or not it furthers the desired goals while remaining within human limits. A set of norms guides the observer up to a point. But these norms are not situated: they take no account of the context, its fluctuations or the condition of the worker. This is why in design ergonomics, even when rules, norms and models exist, designers are advised to test the product with prototypes as early as possible and to evaluate the users’ activity and performance.

Individual or Collective Work?

Although in the vast majority of cases work is a collective act, most work analyses focus on individual tasks or activities. Yet technological evolution, like work organization, now emphasizes distributed work, whether between workers and machines or simply within a group. The authors who have explored ways of taking this distribution into account (Rasmussen, Pejtersen and Schmidts 1990) focus on three aspects: structure, the nature of exchanges and structural lability.

Structure

Whether the elements of the structure are people, departments or even different branches of a firm working in a network, describing the links that unite them remains a problem. We are very familiar with the organization charts of firms, which indicate the structure of authority and whose various forms reflect the firm’s organizational philosophy: very hierarchical for a Taylorist structure, or flattened like a rake, even matrix-like, for a more flexible one. Other descriptions of distributed activities are possible; an example is given in figure 6. More recently, the need for firms to represent their information exchanges at a global level has led to a rethinking of information systems. Thanks to certain descriptive languages, for example design schemas or entity-relationship-attribute matrices, the structure of relations at the collective level can today be described very abstractly and can serve as a springboard for the creation of computerized management systems.

Figure 6. Integrated life cycle design
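To make the idea of an entity-relationship-attribute description concrete, the sketch below records typed relations between organizational units and compares two kinds of link. It is a hypothetical Python illustration: the unit names, relation kinds and attributes are invented, and `exchanges_outside_hierarchy` is not part of any established method; it merely shows how such a description can be queried, for instance to find information exchanges that the authority chart does not show.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relation:
    """A typed link between two entities, with free-form attributes."""
    source: str
    target: str
    kind: str                                   # e.g. "authority" or "information"
    attributes: tuple[tuple[str, str], ...] = ()


def pairs(relations: list[Relation], kind: str) -> set[frozenset[str]]:
    """Unordered pairs of entities linked by a relation of the given kind."""
    return {frozenset((r.source, r.target)) for r in relations if r.kind == kind}


def exchanges_outside_hierarchy(relations: list[Relation]) -> set[frozenset[str]]:
    """Information links between entities that have no direct authority link."""
    return pairs(relations, "information") - pairs(relations, "authority")


# Invented example: a plant manager, a production department and a maintenance department.
org = [
    Relation("plant manager", "production", "authority"),
    Relation("plant manager", "maintenance", "authority"),
    Relation("production", "plant manager", "information", (("medium", "weekly report"),)),
    Relation("production", "maintenance", "information", (("medium", "radio"),)),
]

for pair in exchanges_outside_hierarchy(org):
    print(sorted(pair))   # ['maintenance', 'production']
```

Note that such a description records only that a link exists and what kind it is; it says nothing about what is actually exchanged along it.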

The nature of exchanges

Simply having a description of the links uniting the entities says little about the content of the exchanges. The nature of the relation can of course be specified (movement from place to place, information transfer, hierarchical dependence and so on), but this is often quite inadequate. The analysis of communications within teams has become a favored means of capturing the very nature of collective work, encompassing the subjects discussed, the creation of a common language within a team, the modification of communications when circumstances become critical, and so forth (Tardieu, Nanci and Pascot 1985; Rolland 1986; Navarro 1990; Van Daele 1992; Lacoste 1983; Moray, Sanderson and Vicente 1989). Knowledge of these interactions is particularly useful for creating computer tools, notably decision aids, and for understanding errors. The different stages of this kind of analysis, and the methodological difficulties linked to the use of this evidence, have been well described by Falzon (1991).

Structural lability

It is work on activities rather than on tasks that has opened up the field of structural lability, that is, the constant reconfiguration of collective work under the influence of contextual factors. Studies such as those of Rogalski (1991), who analyzed over a long period the collective activities involved in fighting forest fires in France, and of Bourdon and Weill Fassina (1994), who studied the organizational structure set up to deal with railway accidents, are very informative. They clearly show how the context molds the structure of exchanges, the number and type of actors involved, the nature of the communications and the number of parameters essential to the work. The more the context fluctuates, the further fixed descriptions of the task diverge from reality. Knowledge of this lability, and a better understanding of the phenomena that occur within it, are essential for planning for the unpredictable and for better training those involved in collective work during a crisis.

Conclusions

The various phases of work analysis described above are an iterative part of any human factors design cycle (see figure 6). In the design of any technical object in which human factors are a consideration, whether a tool, a workstation or a factory, certain information is needed at the right time. In general, the beginning of the design cycle is characterized by a need for data on environmental constraints, the types of jobs to be carried out and the various characteristics of the users. This initial information allows the specifications of the object to be drawn up so as to take work requirements into account. But this is, in a sense, only a coarse model compared with the real work situation. This is why models and prototypes are needed that, from their inception, allow the activities of future users, and not merely the jobs themselves, to be evaluated. Consequently, while the design of the images on a control-room monitor can be based on a thorough cognitive analysis of the job to be done, only a data-based analysis of the activity will determine accurately whether the prototype will actually be of use in the real work situation (Van Daele 1988). Once the finished technical object is in operation, greater emphasis is put on the users’ performance and on dysfunctional situations such as accidents or human errors. Gathering this type of information allows final corrections to be made that will increase the reliability and usability of the completed object. The nuclear and aeronautics industries are examples: their operational feedback involves reporting every incident that occurs. In this way, the design loop comes full circle.

The Nature and Aims of Ergonomics

Definition and Scope

Ergonomics means literally the study or measurement of work. In this context, the term work signifies purposeful human function; it extends beyond the more restricted concept of work as labour for monetary gain to incorporate all activities whereby a rational human operator systematically pursues an objective. Thus it includes sports and other leisure activities, domestic work such as child care and home maintenance, education and training, health and social service, and either controlling engineered systems or adapting to them, for example, as a passenger in a vehicle.

The human operator, the focus of study, may be a skilled professional operating a complex machine in an artificial environment, a customer who has casually purchased a new piece of equipment for personal use, a child sitting in a classroom or a disabled person in a wheelchair. The human being is highly adaptable but not infinitely so. There are ranges of optimum conditions for any activity. One of the tasks of ergonomics is to define what these ranges are and to explore the undesirable effects which occur if the limits are transgressed—for example if a person is expected to work in conditions of excessive heat, noise or vibration, or if the physical or mental workload is too high or too low.

Ergonomics examines not only the passive ambient situation but also the unique advantages of the human operator and the contributions that can be made if a work situation is designed to permit and encourage the person to make the best use of his or her abilities. Human abilities may be characterized not only with reference to the generic human operator but also with respect to those more particular abilities that are called upon in specific situations where high performance is essential. For example, an automobile manufacturer will consider the range of physical size and strength of the population of drivers who are expected to use a particular model to ensure that the seats are comfortable, that the controls are readily identifiable and within reach, that there is clear visibility to the front and the rear, and that the internal instruments are easy to read. Ease of entry and egress will also be taken into account. By contrast, the designer of a racing car will assume that the driver is athletic, so that ease of getting in and out, for example, is unimportant; in fact, the design features that relate to the driver may well be tailored as a whole to the dimensions and preferences of a particular driver, so that he or she can exercise his or her full potential and skill.

In all situations, activities and tasks the focus is the person or persons involved. It is assumed that the structure, the engineering and any other technology is there to serve the operator, not the other way round.

History and Status

About a century ago it was recognized that working hours and conditions in some mines and factories were not tolerable in terms of safety and health, and the need was evident to pass laws to set permissible limits in these respects. The determination and statement of those limits can be regarded as the beginning of ergonomics. They were, incidentally, the beginning of all the activities which now find expression through the work of the International Labour Organization (ILO).

Research, development and application proceeded slowly until the Second World War. This triggered greatly accelerated development of machines and instrumentation such as vehicles, aircraft, tanks, guns and vastly improved sensing and navigation devices. As technology advanced, greater flexibility was available to allow adaptation to the operator, an adaptation that became the more necessary because human performance was limiting the performance of the system. If a powered vehicle can travel at a speed of only a few kilometres per hour there is no need to worry about the performance of the driver, but when the vehicle’s maximum speed is increased by a factor of ten or a hundred, then the driver has to react more quickly and there is no time to correct mistakes to avert disaster. Similarly, as technology is improved there is less need to worry about mechanical or electrical failure (for instance) and attention is freed to think about the needs of the driver.

Thus ergonomics, in the sense of adapting engineering technology to the needs of the operator, becomes simultaneously both more necessary and more feasible as engineering advances.

The term ergonomics came into use about 1950 when the priorities of developing industry were taking over from the priorities of the military. The development of research and application for the following thirty years is described in detail in Singleton (1982). The United Nations agencies, particularly the ILO and the World Health Organization (WHO), became active in this field in the 1960s.

In immediate postwar industry the overriding objective, shared by ergonomics, was greater productivity. This was a feasible objective for ergonomics because so much industrial productivity was determined directly by the physical effort of the workers involved: speed of assembly and rate of lifting and movement determined the extent of output. Gradually, mechanical power replaced human muscle power. More power, however, leads to more accidents on the simple principle that an accident is the consequence of power in the wrong place at the wrong time. When things are happening faster, the potential for accidents is further increased. Thus the concern of industry and the aim of ergonomics gradually shifted from productivity to safety. This occurred in the 1960s and early 1970s. Around and after this time, much of manufacturing industry shifted from batch production to flow and process production. The role of the operator shifted correspondingly from direct participation to monitoring and inspection. This resulted in a lower frequency of accidents, because the operator was more remote from the scene of action, but sometimes in greater severity of accidents, because of the speed and power inherent in the process.

When output is determined by the speed at which machines function then productivity becomes a matter of keeping the system running: in other words, reliability is the objective. Thus the operator becomes a monitor, a trouble-shooter and a maintainer rather than a direct manipulator.

This historical sketch of the postwar changes in manufacturing industry might suggest that the ergonomist has regularly dropped one set of problems and taken up another, but this is not the case, for several reasons. As explained earlier, the concerns of ergonomics are much wider than those of manufacturing industry. In addition to production ergonomics, there is product or design ergonomics, that is, adapting the machine or product to the user. In the car industry, for example, ergonomics is important not only to component manufacturing and the production lines but also to the eventual driver, passenger and maintainer. It is now routine in the marketing of cars and in their critical appraisal by others to review the quality of the ergonomics, considering ride, seat comfort, handling, noise and vibration levels, ease of use of controls, visibility inside and outside, and so on.

It was suggested above that human performance is usually optimized within a tolerance range of a relevant variable. Much early ergonomics work attempted to reduce both muscular power output and the extent and variety of movement so as to ensure that such tolerances were not exceeded. The greatest change in the work situation, the advent of computers, has created the opposite problem: unless it is well designed ergonomically, a computer workspace can induce too fixed a posture, too little bodily movement and too much repetition of particular combinations of joint movements.

This brief historical review is intended to indicate that, although there has been continuous development of ergonomics, it has taken the form of adding more and more problems rather than changing the problems. However, the corpus of knowledge keeps growing and becoming more reliable and valid, because much of it carries over from one problem to the next: energy expenditure norms do not depend on how or why the energy is expended, postural issues are the same in aircraft seats as in front of computer screens, and although much human activity now involves using videoscreens, there are well-established principles for their use based on a mix of laboratory evidence and field studies.

Ergonomics and Related Disciplines

The development of a science-based application which is intermediate between the well-established technologies of engineering and medicine inevitably overlaps with many related disciplines. In terms of its scientific basis, much of ergonomic knowledge derives from the human sciences: anatomy, physiology and psychology. The physical sciences also make a contribution, for example, to solving problems of lighting, heating, noise and vibration.

Most of the European pioneers in ergonomics came from the human sciences, and it is for this reason that ergonomics is well balanced between physiology and psychology. A physiological orientation is required as a background to problems such as energy expenditure, posture and application of forces, including lifting. A psychological orientation is required to study problems such as information presentation and job satisfaction. There are of course many problems which require a mixed human sciences approach, such as stress, fatigue and shift work.

Most of the American pioneers in this field were involved in either experimental psychology or engineering, and it is for this reason that their typical occupational titles, human engineering and human factors, reflect a difference in emphasis (but not in core interests) from European ergonomics. This also explains why occupational hygiene, because of its close relationship to medicine, particularly occupational medicine, is regarded in the United States as quite different from human factors or ergonomics. The difference is less marked in other parts of the world. Ergonomics concentrates on the human operator in action; occupational hygiene concentrates on the hazards to the human operator present in the ambient environment. Thus the central interest of the occupational hygienist is toxic hazards, which are outside the scope of the ergonomist. The occupational hygienist is concerned about effects on health, either long-term or short-term; the ergonomist is, of course, concerned about health, but he or she is also concerned about other consequences, such as productivity, work design and workspace design. Safety and health are the generic issues which run through ergonomics, occupational hygiene, occupational health and occupational medicine. It is, therefore, not surprising to find that in a large research, design or production institution these subjects are often grouped together. This makes possible an approach based on a team of experts in these separate subjects, each making a specialist contribution to the general problem of health, not only of the workers in the institution but also of those affected by its activities and products. By contrast, in institutions concerned with design or provision of services, the ergonomist might be closer to the engineers and other technologists.

It will be clear from this discussion that, because ergonomics is interdisciplinary and still quite new, there is an important problem of how it should best be fitted into an existing organization. It overlaps with so many other fields because it is concerned with people, and people are the basic and all-pervading resource of every organization. There are many ways in which it can be fitted in, depending on the history and objectives of the particular organization. The main criteria are that ergonomics objectives are understood and appreciated and that mechanisms for implementing recommendations are built into the organization.

Aims of Ergonomics

It will be clear already that the benefits of ergonomics can appear in many different forms: in productivity and quality, in safety and health, in reliability, in job satisfaction and in personal development.

The reason for this breadth of scope is that its basic aim is efficiency in purposeful activity, efficiency in the widest sense of achieving the desired result without wasteful input, without error and without damage to the person involved or to others. It is not efficient to expend unnecessary energy or time because insufficient thought has been given to the design of the work, the workspace, the working environment and the working conditions. It is not efficient to achieve the desired result in spite of the design of the situation rather than with its support.

The aim of ergonomics is to ensure that the working situation is in harmony with the activities of the worker. This aim is self-evidently valid, but attaining it is far from easy for a variety of reasons. The human operator is flexible and adaptable and learns continuously, but individual differences are quite large. Some differences, such as physical size and strength, are obvious, but others, such as cultural differences and differences in style and in level of skill, are less easy to identify.

In view of these complexities it might seem that the solution is to provide a flexible situation in which the human operator can work out the way of doing things best suited to him or her. Unfortunately, such an approach is sometimes impracticable because the more efficient way is often not obvious, with the result that a worker can go on doing something the wrong way or in the wrong conditions for years.

Thus it is necessary to adopt a systematic approach: to start from a sound theory, to set measurable objectives and to check success against these objectives. The various possible objectives are considered below.

Safety and health

There can be no disagreement about the desirability of safety and health objectives. The difficulty stems from the fact that neither is directly measurable: their achievement is judged by the absence of their opposites, ill health and accidents, rather than by direct evidence of their presence. The data in question always pertain to departures from safety and health.

In the case of health, much of the evidence is long-term as it is based on populations rather than individuals. It is, therefore, necessary to maintain careful records over long periods and to adopt an epidemiological approach through which risk factors can be identified and measured. For example, what should be the maximum hours per day or per year required of a worker at a computer workstation? It depends on the design of the workstation, the kind of work and the kind of person (age, vision, abilities and so on). The effects on health can be diverse, from wrist problems to mental apathy, so it is necessary to carry out comprehensive studies covering quite large populations while simultaneously keeping track of differences within the populations.

Safety is more directly measurable, in a negative sense, in terms of the kinds and frequencies of accidents and damage. There are problems in defining different kinds of accidents and in identifying the often multiple causal factors, and there is often only a loose relationship between the kind of accident and the degree of harm, which can range from none to fatality.

Nevertheless, an enormous body of evidence concerning safety and health has been accumulated over the past fifty years and consistencies have been discovered which can be related back to theory, to laws and standards and to principles operative in particular kinds of situations.

Productivity and efficiency

Productivity is usually defined in terms of output per unit of time, whereas efficiency incorporates other variables, particularly the ratio of output to input. Efficiency incorporates the cost of what is done in relation to achievement, and in human terms this requires the consideration of the penalties to the human operator.
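As a compact restatement of the two definitions above, they can be written as ratios. The symbols are introduced here only for illustration and do not come from the source: $O$ is the output achieved, $T$ is the time taken, and $I$ is the total input, which for human work should include the penalties to the operator as well as material and energy costs.

```latex
P = \frac{O}{T} \quad \text{(productivity: output per unit of time)}
\qquad
E = \frac{O}{I} \quad \text{(efficiency: output per unit of input)}
```

Because $I$ has to be chosen for each situation (energy, money, fatigue, error rate and so on), $E$ is only meaningful once that choice has been stated. The discussion of efficiency below makes the same point.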

In industrial situations, productivity is relatively easy to measure: the amount produced can be counted and the time taken to produce it is simple to record. Productivity data are often used in before/after comparisons of working methods, situations or conditions. Such comparisons involve assumptions about equivalence of effort and other costs, because they rest on the principle that the human operator will perform as well as is feasible in the circumstances: if productivity is higher, then the circumstances must be better. There is much to recommend this simple approach, provided that it is used with due regard to the many possible complicating factors that can disguise what is really happening. The best safeguard is to make sure that nothing has changed between the before and after situations except the aspects being studied.

Efficiency is a more comprehensive but invariably more difficult measure. It usually has to be specifically defined for a particular situation, and in assessing the results of any study the definition should be checked for its relevance and validity in terms of the conclusions being drawn. For example, is bicycling more efficient than walking? Bicycling is much more productive in terms of the distance that can be covered on a road in a given time, and it is more efficient in terms of energy expenditure per unit of distance. For indoor exercise it is also more efficient, because the apparatus required is cheaper and simpler. On the other hand, the purpose of the exercise might be energy expenditure for health reasons, or climbing a mountain over difficult terrain; in these circumstances walking will be more efficient. Thus, an efficiency measure has meaning only in a well-defined context.

Reliability and quality

As explained above, reliability rather than productivity becomes the key measure in high technology systems (for instance, transport aircraft, oil refining and power generation). The controllers of such systems monitor performance and make their contribution to productivity and to safety by making tuning adjustments to ensure that the automatic machines stay on line and function within limits. All these systems are in their safest states either when they are quiescent or when they are functioning steadily within the designed performance envelope. They become more dangerous when moving or being moved between equilibrium states, for example, when an aircraft is taking off or a process system is being shut down. High reliability is the key characteristic not only for safety reasons but also because unplanned shut-down or stoppage is extremely expensive. Reliability is straightforward to measure after performance but is extremely difficult to predict except by reference to the past performance of similar systems. When or if something goes wrong human error is invariably a contributing cause, but it is not necessarily an error on the part of the controller: human errors can originate at the design stage and during setting up and maintenance. It is now accepted that such complex high-technology systems require a considerable and continuous ergonomics input from design to the assessment of any failures that occur.

Quality is related to reliability but is very difficult if not impossible to measure. Traditionally, in batch and flow production systems, quality has been checked by inspection after output, but the current established principle is to combine production and quality maintenance. Thus each operator has parallel responsibility as an inspector. This usually proves to be more effective, but it may mean abandoning work incentives based simply on rate of production. In ergonomic terms it makes sense to treat the operator as a responsible person rather than as a kind of robot programmed for repetitive performance.

Job satisfaction and personal development

From the principle that the worker or human operator should be recognized as a person and not a robot it follows that consideration should be given to responsibilities, attitudes, beliefs and values. This is not easy because there are many variables, mostly detectable but not quantifiable, and there are large individual and cultural differences. Nevertheless a great deal of effort now goes into the design and management of work with the aim of ensuring that the situation is as satisfactory as is reasonably practicable from the operator’s viewpoint. Some measurement is possible by using survey techniques and some principles are available based on such working features as autonomy and empowerment.

Even accepting that these efforts take time and cost money, there can still be considerable dividends from listening to the suggestions, opinions and attitudes of the people actually doing the work. Their approach may differ both from that of the external work designer and from the assumptions made by the work designer or manager. These differences of view are important and can prompt a refreshing change in strategy on the part of everyone involved.

It is well established that the human being is, or can be, a continuous learner, given the appropriate conditions. The key condition is to provide feedback about past and present performance that can be used to improve future performance. Moreover, such feedback itself acts as an incentive to performance. Thus everyone gains: the performer and those responsible in a wider sense for the performance. It follows that there is much to be gained from performance improvement, including self-development. The principle that personal development should be an aspect of the application of ergonomics requires greater skill from designers and managers, but, if it can be applied successfully, it can improve all the aspects of human performance discussed above.

Successful application of ergonomics often follows from doing no more than developing the appropriate attitude or point of view. The people involved are inevitably the central factor in any human effort and the systematic consideration of their advantages, limitations, needs and aspirations is inherently important.

Conclusion

Ergonomics is the systematic study of people at work with the objective of improving the work situation, the working conditions and the tasks performed. The emphasis is on acquiring relevant and reliable evidence on which to base recommendations for changes in specific situations, and on developing more general theories, concepts, guidelines and procedures that will contribute to the continually developing expertise available from ergonomics.

Overview

In the 3rd edition of the ILO’s Encyclopaedia, published in 1983, ergonomics was summarized in one article that was only about four pages long. Since the publication of the 3rd edition, there has been a major change in emphasis and in the understanding of interrelationships in safety and health: the world is no longer easily classifiable into medicine, safety and hazard prevention. In the last decade almost every branch of the production and service industries has expended great effort in improving productivity and quality. This restructuring process has yielded practical experience that clearly shows that productivity and quality are directly related to the design of working conditions. One direct economic measure of productivity, the cost of absenteeism through illness, is affected by working conditions. Therefore it should be possible to increase productivity and quality, and to avoid absenteeism, by paying more attention to the design of working conditions.

In sum, the simple hypothesis of modern ergonomics can be stated thus: Pain and exhaustion cause health hazards, wasted productivity and reduced quality, which are measures of the costs and benefits of human work.

This simple hypothesis can be contrasted with the approach of occupational medicine, which generally restricts itself to establishing the aetiology of occupational diseases. Occupational medicine’s goal is to establish conditions under which the probability of developing such diseases is minimized. Using ergonomic principles, these conditions can most easily be formulated as demands and load limitations. Occupational medicine can be summed up as establishing “limitations through medico-scientific studies”. Traditional ergonomics regards its role as one of formulating the methods by which, through design and work organization, the limitations established by occupational medicine can be put into practice. Traditional ergonomics could then be described as developing “corrections through scientific studies”, where “corrections” are understood to be all work design recommendations that call for attention to be paid to load limits solely in order to prevent health hazards. It is a characteristic of such corrective recommendations that practitioners are ultimately left alone with the problem of applying them; there is no multidisciplinary team effort.

The original aim of ergonomics, as set out when the term was coined in 1857, stands in contrast to this kind of “ergonomics by correction”:

... a scientific approach enabling us to reap, for the benefit of ourselves and others, the best fruits of life’s labour for the minimum effort and maximum satisfaction (Jastrzebowski 1857).

The term “ergonomics” derives from the Greek “ergon”, meaning work, and “nomos”, meaning rule. One could propose that ergonomics should develop “rules” for a more forward-looking, prospective concept of design. In contrast to “corrective ergonomics”, the idea of prospective ergonomics is based on applying ergonomic recommendations that simultaneously take profitability margins into consideration (Laurig 1992).

The basic rules for the development of this approach can be deduced from practical experience and reinforced by the results of occupational hygiene and ergonomics research. In other words, prospective ergonomics means searching for alternatives in work design that prevent fatigue and exhaustion on the part of the working subject in order to promote human productivity (“... for the benefit of ourselves and others”). This comprehensive approach of prospective ergonomics includes workplace and equipment design as well as the design of working conditions shaped by an increasing amount of information processing and a changing work organization. Prospective ergonomics is, therefore, an interdisciplinary approach shared by researchers and practitioners from a wide range of fields united by the same goal, and it forms one part of a general basis for a modern understanding of occupational safety and health (UNESCO 1992).