In the last few years microprocessors have played an ever-increasing role in the field of safety technology. Because entire computers (central processing unit, memory and peripheral components) are now available in a single component as “single-chip computers”, microprocessor technology is being employed not only in complex machine control, but also in safeguards of relatively simple design (e.g., light grids, two-hand control devices and safety edges). The software controlling these systems comprises between one thousand and several tens of thousands of individual instructions and usually consists of several hundred program branches. The programs operate in real time and are mostly written in assembly language.

In large-scale technical applications (aerospace, military and nuclear power technology, for example), the introduction of computer-controlled systems into safety technology has been accompanied not only by expensive research and development projects but also by significant restrictions designed to enhance safety. Industrial mass production, by contrast, has so far received only limited attention. One reason is that the rapid innovation cycles characteristic of industrial machine design make it difficult to transfer, except to a very limited extent, the knowledge gained from research projects concerned with the final testing of large-scale safety devices. Rapid and low-cost assessment procedures are therefore needed (Reinert and Reuss 1991).

This article first examines machines and facilities in which computer systems currently perform safety tasks, and then uses examples of accidents, predominantly in the area of machine safeguards, to depict the particular role which computers play in safety technology. These accidents indicate which precautions must be taken so that the computer-controlled safety equipment now coming into increasingly wide use does not lead to a rise in the number of accidents. The final section of the article sketches a procedure which enables even small computer systems to be brought to an appropriate level of technical safety at justifiable expense and within an acceptable period of time. The principles indicated in this final part are currently being introduced into international standardization procedures and will have implications for all areas of safety technology in which computers are applied.

Examples of the Use of Software and Computers in the Field of Machine Safeguards

The following four examples make it clear that software and computers are currently entering more and more into safety-related applications in the commercial domain.

Personal emergency signalling systems usually consist of a central receiving station and a number of personal emergency signalling devices, which are carried by persons working alone on site. If one of these lone workers finds himself or herself in an emergency, the device can be used to trigger an alarm in the central receiving station by radio. Such a deliberate (will-dependent) alarm may be supplemented by an automatic (will-independent) trigger activated by sensors built into the personal devices. Both the individual devices and the central receiving station are frequently controlled by microcomputers. Failure of specific functions of the built-in computer could conceivably mean that, in an emergency, no alarm is raised. Precautions must therefore be taken to detect and remedy such a loss of function in time.

Printing presses used today to print magazines are large machines. The paper webs are normally prepared by a separate machine so as to allow a seamless transition to a new paper roll. The printed pages are folded by a folding machine and then passed through a chain of further machines, with pallets loaded with fully bound magazines as the end result. Although such plants are automated, manual intervention is required at two points: (1) when threading the paper webs, and (2) when clearing blockages caused by paper tears at danger points on the rotating rollers. For these tasks, the control system must ensure a reduced operating speed or a path- or time-limited jog mode while the press is being set up. Because of the complex control sequences involved, every printing unit must be equipped with its own programmable logic controller. Any failure in the control of a printing plant while the guard grids are open must be prevented from leading either to the unexpected start-up of a stopped machine or to operation above the appropriately reduced speed.
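The two hazards named above, unexpected start-up and operation above the reduced speed, can be sketched as a monitoring function that a safety controller might evaluate on every cycle while the guards are open. This is a minimal sketch, not a description of any real press control: the limit values (`REDUCED_SPEED_LIMIT`, `JOG_MAX_TRAVEL_MM`), the `PressState` fields and the function `must_stop` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical limits, chosen only for illustration.
REDUCED_SPEED_LIMIT = 0.5   # m/s, permitted web speed with guards open
JOG_MAX_TRAVEL_MM = 100.0   # maximum travel per jog command with guards open


@dataclass
class PressState:
    guard_closed: bool
    speed: float             # measured speed in m/s
    jog_travel_mm: float     # travel since the current jog command began
    start_requested: bool    # an operator start or jog command is pending
    running: bool            # the control has commanded motion


def must_stop(state: PressState) -> bool:
    """Return True if the safety controller should stop the press."""
    if state.guard_closed:
        return False  # normal production; no special limits in this sketch
    if state.speed > REDUCED_SPEED_LIMIT:
        return True   # operation in excess of the reduced speed
    if state.jog_travel_mm > JOG_MAX_TRAVEL_MM:
        return True   # jog movement exceeded its path limit
    if not state.running and not state.start_requested and state.speed > 0.0:
        return True   # unexpected start-up of a stopped machine
    return False
```

A real implementation would run on the safety-rated controller itself and would typically evaluate redundant speed signals; the sketch shows only the decision logic.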

In large factories and warehouses, driverless automated guided vehicles move about on specially marked tracks. Because these tracks are not structurally separated from other traffic routes, people may walk on them at any time, and materials or equipment may be left on them inadvertently. Some form of collision-prevention equipment is therefore needed to ensure that the vehicle is brought to a halt before a dangerous collision with a person or object occurs. In more recent applications, collision prevention is provided by ultrasonic or laser scanners used in combination with a safety bumper. Since these systems are computer-controlled, several fixed detection zones can be configured, so that the vehicle can adapt its reaction to the zone in which a person is detected. Failures in the protective device must not lead to a dangerous collision with a person.
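Zone-dependent reactions of this kind can be sketched as a small decision function. The zone distances, the `Reaction` values and the function name `zone_reaction` are hypothetical; in practice zone sizes depend on vehicle speed and stopping distance, which this sketch ignores. The first check reflects the requirement that a failure of the protective device must not lead to a collision: a scanner fault always stops the vehicle.

```python
from enum import Enum
from typing import Optional


class Reaction(Enum):
    CONTINUE = "continue"
    SLOW_DOWN = "slow down"
    STOP = "stop"


# Hypothetical zone boundaries in metres, measured ahead of the vehicle.
PROTECTIVE_ZONE_M = 1.0   # object this close: stop
WARNING_ZONE_M = 3.0      # object this close: reduce speed


def zone_reaction(distance_m: Optional[float], scanner_ok: bool) -> Reaction:
    """Map the nearest detected object to a vehicle reaction.

    distance_m is None when nothing is detected.
    """
    if not scanner_ok:
        return Reaction.STOP          # fail safe: a faulty scanner sees nothing reliably
    if distance_m is None:
        return Reaction.CONTINUE
    if distance_m <= PROTECTIVE_ZONE_M:
        return Reaction.STOP
    if distance_m <= WARNING_ZONE_M:
        return Reaction.SLOW_DOWN
    return Reaction.CONTINUE


print(zone_reaction(2.0, scanner_ok=True))   # Reaction.SLOW_DOWN
```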

Paper-cutting guillotines are used to press and then cut thick stacks of paper. They are triggered by a two-hand control device, and the operator must reach into the danger zone of the machine after each cut. A non-material (electro-sensitive) safeguard, usually a light grid, is used in conjunction with the two-hand control device and a safe machine-control system to prevent injuries when paper is fed in during the cutting operation. Nearly all the larger, more modern guillotines in use today are controlled by multichannel microcomputer systems, and both the two-hand control and the light grid must be guaranteed to function safely.
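The release conditions for such a guillotine can be sketched as a single decision function. The 0.5 s simultaneity window is an assumed value (it is the figure commonly used for synchronous actuation in two-hand control standards such as ISO 13851), and the function and parameter names are hypothetical. The light grid is evaluated on two channels, and a discrepancy between them is treated like an interrupted grid.

```python
from typing import Optional

SYNC_WINDOW_S = 0.5  # assumed maximum delay between pressing the two buttons


def cut_permitted(left_pressed_at: Optional[float],
                  right_pressed_at: Optional[float],
                  released_since_last_cut: bool,
                  grid_channel_a_clear: bool,
                  grid_channel_b_clear: bool) -> bool:
    """Decide whether the knife may be released.

    Times are in seconds; None means the button is not currently pressed.
    """
    # Both hands must be on the buttons.
    if left_pressed_at is None or right_pressed_at is None:
        return False
    # The buttons must have been pressed (nearly) simultaneously.
    if abs(left_pressed_at - right_pressed_at) > SYNC_WINDOW_S:
        return False
    # Both buttons must have been released after the previous cut, so that a
    # jammed or tied-down button cannot start a new cycle.
    if not released_since_last_cut:
        return False
    # Two-channel light grid: both channels must report a clear field.
    return grid_channel_a_clear and grid_channel_b_clear
```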

Accidents with Computer-Controlled Systems

Accidents involving software and computers are reported in nearly all fields of industrial application (Neumann 1994). In most cases, computer failures do not lead to injury to persons, and such failures are generally made public only when they are of general public interest. As a result, malfunctions and accidents involving computers and software in which people are injured make up a relatively high proportion of all publicized cases. Unfortunately, accidents that attract little public attention are not investigated for their causes as thoroughly as more prominent accidents, typically those in large-scale plants. For this reason, the examples which follow, grouped under four categories of cause, describe malfunctions and accidents typical of computer-controlled systems drawn largely from outside the field of machine safeguards; they are used to suggest what has to be taken into account when safety judgements are made.

Accidents caused by random failures in hardware

The following mishap was caused by a combination of a random hardware failure and a programming error. A reactor in a chemical plant overheated, whereupon relief valves opened and the contents of the reactor were discharged into the atmosphere. The mishap occurred shortly after a warning that the oil level in a gearbox was too low. Careful investigation showed that shortly after the catalyst had initiated the reaction in the reactor, so that the reactor would have required more cooling, the computer, acting on the low-oil-level report, froze all the variables under its control at their current values. This kept the cooling-water flow too low, and the reactor overheated as a result. Further investigation showed that the low oil level had been signalled by a faulty component.

The software had responded as specified, by raising an alarm and fixing all controlled variables. This specification was a consequence of the HAZOP (hazard and operability) study (Knowlton 1986) carried out beforehand, which required that no controlled variable be modified in the event of a failure. Because the programmer was not familiar with the process in detail, this requirement was interpreted to mean that the controlled actuators (in this case control valves) were not to be moved; no attention was paid to the possibility of a rise in temperature. The programmer did not consider that, after receiving an erroneous signal, the system might be in a dynamic situation requiring active intervention by the computer to prevent a mishap. Moreover, the situation which led to the mishap was so unlikely that it had not been analysed in detail in the HAZOP study (Levenson 1986). This example provides a transition to a second category of causes of software and computer accidents: systematic failures which are present in the system from the beginning, but which manifest themselves only in very specific situations that the developer has not taken into account.
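The difference between the two readings of the HAZOP requirement, "do not move the actuators" versus "keep the controlled variable within its safe range", can be made concrete with a toy heat-balance model. The model, its constants and the function `simulate` are invented for illustration and do not describe the actual plant; they only show that freezing a cooling valve while heat generation rises lets the temperature run away, whereas continued control holds it near the setpoint.

```python
def simulate(freeze_on_alarm: bool, steps: int = 60) -> float:
    """Toy heat balance of a cooled reactor; returns the final temperature in deg C.

    All constants are invented for illustration only.
    """
    setpoint = 80.0       # desired reactor temperature
    coolant = 20.0        # cooling-water temperature
    temp = setpoint
    valve = 0.1           # cooling-valve opening (0..1) at steady state before the reaction
    for step in range(steps):
        reaction_started = step >= 5          # catalyst starts the reaction at step 5
        alarm = reaction_started              # the false low-oil alarm arrives at the same moment
        heat_in = 0.3 + (2.0 if reaction_started else 0.0)
        if not (freeze_on_alarm and alarm):
            # Proportional control: open the valve further as the temperature rises.
            valve = min(1.0, max(0.0, 0.1 + 0.05 * (temp - setpoint)))
        heat_out = 0.05 * valve * (temp - coolant)
        temp += heat_in - heat_out
    return temp


frozen = simulate(freeze_on_alarm=True)
controlled = simulate(freeze_on_alarm=False)
print(f"actuators frozen: {frozen:.0f} C, control kept active: {controlled:.0f} C")
```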

Accidents caused by operating failures

During field testing in the final inspection of robots, one technician borrowed the program cassette of a neighbouring robot and replaced it with a different one without telling his colleague. On returning to his workstation, the colleague inserted the wrong cassette. Standing next to the robot, he expected a particular sequence of movements from it; because of the exchanged program the robot moved differently, and a collision between robot and human occurred. This accident is a classic example of an operating failure. The role of such failures in malfunctions and accidents is increasing as computer-controlled safety mechanisms are applied in ever more complex ways.
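One technical precaution against this kind of operating failure, offered here as an assumption rather than as a measure described in the text, is for the controller to check the identity of the loaded program before enabling automatic motion. The sketch below uses a CRC-32 fingerprint from Python's standard `zlib` module; the function names and the program contents are hypothetical. Such a check complements, but does not replace, organizational rules for handling program media.

```python
import zlib


def program_id(program: bytes) -> int:
    """Fingerprint of a robot program; CRC-32 is used here purely as an example."""
    return zlib.crc32(program)


def may_enable_motion(loaded_program: bytes, expected_id: int) -> bool:
    """Allow automatic motion only if the loaded program is the one registered for this robot."""
    return program_id(loaded_program) == expected_id


# Hypothetical programs for two neighbouring robots.
program_robot_1 = b"MOVE P1; MOVE P2; GRIP"
program_robot_2 = b"MOVE P7; WELD; MOVE P1"
expected_for_robot_1 = program_id(program_robot_1)  # recorded when the program was released

print(may_enable_motion(program_robot_1, expected_for_robot_1))
print(may_enable_motion(program_robot_2, expected_for_robot_1))
```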

Accidents caused by systematic failures in hardware or software

A torpedo with a warhead was to be fired from a warship on the high seas for training purposes. Because of a defect in the drive apparatus, the torpedo remained in the torpedo tube. The captain decided to return to the home port in order to salvage the torpedo. Shortly after the ship had begun its return journey, the torpedo exploded. An analysis of the accident revealed that the torpedo’s developers had been required to build in a mechanism to prevent the torpedo, once fired, from returning to and destroying the ship that had launched it. The mechanism chosen was as follows: after firing, the inertial navigation system was used to check whether the torpedo’s course had changed by 180°. As soon as the torpedo sensed that it had turned through 180°, it detonated, on the assumption that it was by then at a safe distance from the launching ship. This detection mechanism was triggered in the torpedo that had not been properly launched, so that it exploded once the ship had changed course by 180°. This is a typical example of an accident caused by a failure in the specification. The requirement that the torpedo should not destroy its own ship if its course changed was not formulated precisely enough, and the precaution was therefore programmed wrongly. The error became apparent only in a particular situation which the programmer had not considered.
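The specification flaw can be shown by contrasting the rule as implemented with a refined rule that also takes the launch state into account. Both functions and the `TorpedoState` fields are hypothetical; the refinement is only one possible way of stating the requirement more precisely, and any real specification would have to be checked against further situations in the same way.

```python
from dataclasses import dataclass


@dataclass
class TorpedoState:
    heading_change_deg: float  # change of course since the firing command
    launched: bool             # True only if the torpedo has actually left the tube


def detonate_as_specified(s: TorpedoState) -> bool:
    # Rule as implemented: a 180 degree turn means "heading back to the ship".
    return abs(s.heading_change_deg) >= 180.0


def detonate_refined(s: TorpedoState) -> bool:
    # Refined rule: a turn is only meaningful for a torpedo that has left the tube;
    # a torpedo stuck in the tube turns together with the ship.
    return s.launched and abs(s.heading_change_deg) >= 180.0


stuck = TorpedoState(heading_change_deg=180.0, launched=False)  # ship turns for home
print(detonate_as_specified(stuck), detonate_refined(stuck))     # True False
```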

On 14 September 1993, a Lufthansa Airbus A320 crashed while landing in Warsaw (figure 1). A careful investigation showed that modifications to the landing logic of the on-board computer, made after an accident with a Lauda Air Boeing 767 in 1991, were partly responsible for the crash. In the 1991 accident, the thrust reverser, which redirects part of the engine thrust forward to brake the aircraft after touchdown, had deployed while the aircraft was still in the air, forcing it into an uncontrollable dive. For this reason, an electronic lock on thrust reversal had been built into the Airbus aircraft. This mechanism allowed thrust reversal to take effect only after sensors on both main landing gear legs had signalled compression of the shock absorbers under the load of the wheels touching down. On the basis of incorrect information, the pilots of the aircraft in Warsaw anticipated a strong crosswind.

Figure 1. Lufthansa Airbus after accident in Warsaw 1993

They therefore brought the aircraft in at a slight bank, and the Airbus touched down on the right main gear only, with the left gear carrying less than full weight. Because of the electronic lock on thrust reversal, the on-board computer denied the pilots, for nine seconds, the braking measures that would have allowed the aircraft to land safely despite the adverse circumstances. This accident demonstrates very clearly that modifications to computer systems can create new hazardous situations if the full range of their possible consequences is not considered in advance.
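The interlock described above reduces to a single AND condition, which is exactly why a one-sided touchdown keeps reverse thrust locked. The sketch below uses hypothetical function names and a hypothetical sample timeline chosen only to mirror the nine-second delay reported for Warsaw; the real on-board logic is not modelled here.

```python
from typing import List, Optional, Tuple


def reverser_enabled(left_gear_compressed: bool, right_gear_compressed: bool) -> bool:
    """Interlock as described in the text: both main gear legs must signal compression."""
    return left_gear_compressed and right_gear_compressed


def first_enable_time(samples: List[Tuple[float, bool, bool]]) -> Optional[float]:
    """Return the first time (s after touchdown) at which reverse thrust is released."""
    for t, left, right in samples:
        if reverser_enabled(left, right):
            return t
    return None


# Hypothetical touchdown on the right gear only; the left gear is loaded after 9 s.
touchdown = [(0.0, False, True), (3.0, False, True), (6.0, False, True), (9.0, True, True)]
print(first_enable_time(touchdown))  # 9.0
```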

The following malfunction also shows the disastrous effects that modifying a single command can have in computer systems. In chemical tests, blood alcohol content is determined using clear blood serum from which the blood cells have first been removed by centrifuging. The alcohol content of serum is higher (by a factor of 1.2) than that of the thicker whole blood, so serum values must be divided by 1.2 to obtain the legally and medically relevant per mille (parts per thousand) figures. In the 1984 inter-laboratory test, blood alcohol values determined from serum in identical tests at different institutions were to be compared with one another. Since only a comparison was required, the command to divide by 1.2 was deleted from the program at one of the institutions for the duration of the test. After the test had ended, a command to multiply by 1.2 was erroneously inserted at this point in the program. As a result, roughly 1,500 incorrect per mille values were calculated between August 1984 and March 1985. This error was critical for the professional careers of truck drivers with blood alcohol levels between 1.0 and 1.3 per mille, since a value of 1.3 per mille entails a legal penalty including confiscation of the driver’s licence for a prolonged period.
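The arithmetic behind the error is easy to check: replacing a division by 1.2 with a multiplication by 1.2 inflates every result by a factor of 1.2 × 1.2 = 1.44. The function and constant names below are illustrative only.

```python
SERUM_FACTOR = 1.2      # serum values are higher than whole-blood values by this factor
LICENCE_LIMIT = 1.3     # per mille value entailing loss of the driving licence


def correct_per_mille(serum_value: float) -> float:
    return serum_value / SERUM_FACTOR


def faulty_per_mille(serum_value: float) -> float:
    # The modified program multiplied where it should have divided.
    return serum_value * SERUM_FACTOR


serum = 1.2   # a serum reading corresponding to 1.0 per mille in whole blood
print(round(correct_per_mille(serum), 2), round(faulty_per_mille(serum), 2))   # 1.0 1.44

# Smallest true whole-blood value that the faulty program reports at or above the limit.
print(round(LICENCE_LIMIT / SERUM_FACTOR ** 2, 2))                             # 0.9
```

Since the faulty value is 1.44 times the correct one, any true whole-blood value from about 0.9 per mille upward was reported at or above the 1.3 limit.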

Accidents caused by operating or environmental stresses

After a disturbance caused by waste collecting in the working area of a CNC (computer numerical control) punching and nibbling machine, the operator activated the “programmed stop”. While he was trying to remove the waste by hand, the machine’s push rod started moving despite the programmed stop and severely injured him. An analysis of the accident showed that no error in the program was involved. The unexpected start-up could not be reproduced, but similar irregularities had been observed in the past on other machines of the same type. It therefore seems plausible that the accident was caused by electromagnetic interference. Similar accidents with industrial robots have been reported from Japan (Neumann 1987).

A malfunction of the Voyager 2 space probe on 18 January 1986 illustrates even more clearly the influence of environmental stresses on computer-controlled systems. Six days before the closest approach to Uranus, large fields of black-and-white lines appeared across the pictures from Voyager 2. A precise analysis showed that a single bit in a command word of the flight data subsystem had caused the failure, which became visible when the pictures were compressed on board the probe. This bit had most likely been flipped in the program memory by the impact of a cosmic particle. Error-free transmission of the compressed pictures was achieved only two days later, using a replacement program that bypassed the failed memory location (Laeser, McLaughlin and Wolff 1987).
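One common precaution against corrupted memory contents is to store a checksum of the program memory and recompute it periodically during operation. The sketch below, which is not a description of the Voyager flight software, simulates a single-bit flip in a stand-in memory image and detects it with CRC-32 from Python's standard `zlib` module; a CRC-32 detects every single-bit error. The memory contents and the helpers `flip_bit` and `memory_intact` are hypothetical.

```python
import zlib


def flip_bit(memory: bytes, bit_index: int) -> bytes:
    """Return a copy of the memory image with one bit inverted (simulated particle hit)."""
    data = bytearray(memory)
    data[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(data)


program_memory = bytes(range(256)) * 4            # 1 KiB stand-in for a program image
reference_crc = zlib.crc32(program_memory)        # stored when the program is loaded


def memory_intact(memory: bytes) -> bool:
    """Periodic self-test: recompute the checksum and compare it with the reference."""
    return zlib.crc32(memory) == reference_crc


print(memory_intact(program_memory))                  # True
print(memory_intact(flip_bit(program_memory, 1234)))  # False
```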

Summary of the accidents presented

The accidents analysed show that certain risks which can be neglected with simple electromechanical technology gain in significance when computers are used. Computers make it possible to implement complex, situation-specific safety functions. For this reason an unambiguous, error-free, complete and testable specification of all safety functions becomes especially important. Errors in specifications are difficult to discover and are frequently the cause of accidents in complex systems. Freely programmable controls are usually introduced in order to react flexibly and quickly to a changing market. Modifications, however, have side effects that are difficult to foresee, particularly in complex systems. All modifications must therefore be subjected to a strictly formal management-of-change procedure, in which a clear separation of safety functions from subsystems not relevant to safety helps to keep the safety consequences of modifications easy to survey.
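The benefit of separating safety functions from other subsystems shows up directly in a change procedure: if safety-relevant modules are identified explicitly, a tool can report which modifications touch them and therefore require re-verification. The following is a minimal sketch under that assumption; the module names, contents and function names are hypothetical, and a real management-of-change procedure also involves review, documentation and approval steps that code cannot replace.

```python
import hashlib
from typing import Dict, Set


def fingerprint(modules: Dict[str, bytes]) -> Dict[str, str]:
    """SHA-256 digest of every software module, keyed by module name."""
    return {name: hashlib.sha256(code).hexdigest() for name, code in modules.items()}


def safety_modules_to_revalidate(baseline: Dict[str, str],
                                 current: Dict[str, bytes],
                                 safety_modules: Set[str]) -> Set[str]:
    """Safety-relevant modules that were changed, added or deleted since the baseline."""
    now = fingerprint(current)
    changed = {name for name, digest in now.items() if baseline.get(name) != digest}
    changed |= set(baseline) - set(now)      # deleted modules count as changed
    return changed & safety_modules


# Hypothetical module names and contents.
SAFETY = {"guard_monitor", "speed_limiter"}
released = {"guard_monitor": b"v1", "speed_limiter": b"v1", "hmi_layout": b"v1"}
baseline = fingerprint(released)

only_hmi_changed = dict(released, hmi_layout=b"v2")
print(safety_modules_to_revalidate(baseline, only_hmi_changed, SAFETY))   # set()

limiter_changed = dict(released, speed_limiter=b"v2")
print(safety_modules_to_revalidate(baseline, limiter_changed, SAFETY))    # {'speed_limiter'}
```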

Computers work with low signal levels and are therefore susceptible to interference from external radiation sources. Since the corruption of a single signal among millions can lead to a malfunction, electromagnetic compatibility deserves special attention in connection with computers.

Operating computer-controlled systems is becoming increasingly complex and therefore harder to oversee. As a result, the software ergonomics of user and configuration software is becoming increasingly relevant to safety technology.

No computer system is 100% testable. A simple controller with 32 binary inputs and 1,000 different software paths requires $2^{32} \times 1{,}000 \approx 4.3 \times 10^{12}$ tests for a complete check. At a rate of 100 tests per second executed and evaluated, a complete test would take 1,362 years.
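The figures follow from testing every combination of the 32 binary inputs on each of the 1,000 paths; taking the year as 365 days reproduces the 1,362 years quoted.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600      # 365-day year

inputs = 32          # binary input ports
paths = 1_000        # software paths
rate = 100           # tests executed and evaluated per second

tests = 2 ** inputs * paths
years = tests / rate / SECONDS_PER_YEAR
print(f"{tests:.1e} tests, {years:,.0f} years")   # 4.3e+12 tests, 1,362 years
```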

Procedures and Measures for the Improvement of Computer-Controlled Safety Devices

Procedures have been developed over the last ten years which make it possible to master specific safety-related challenges posed by computers. These procedures address the types of computer failure described above. The examples of software and computers in machine safeguards, together with the accidents analysed, show that the extent of damage, and thus the risk involved, varies greatly from one application to another. The precautions required to improve computers and software used in safety technology should therefore be determined in relation to the risk.

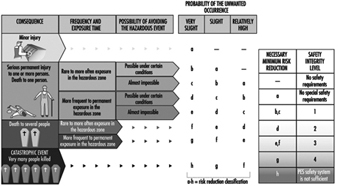

Figure 2 shows a qualitative procedure by which the risk reduction required of safety systems can be determined depending on the extent and the frequency of damage (Bell and Reinert 1992). The types of failure in computer systems analysed in the section “Accidents with computer-controlled systems” (above) can be related to the so-called Safety Integrity Levels, that is, to graded levels of technical provision for risk reduction.

Figure 2. Qualitative procedure for risk determination
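The idea of a qualitative risk determination can be sketched as a function that combines a few risk parameters into a required integrity level. The parameter scales, the names and the simple additive mapping below are assumptions made for illustration; they are not the graph of Figure 2, nor the tables of any DIN or IEC standard, which must be consulted for real classifications. The sketch keeps only the essential property of such graphs: higher severity, more frequent exposure, poorer chances of avoiding the hazard or a higher probability of the unwanted event never lower the required level.

```python
def required_level(severity: int, frequent_exposure: bool,
                   avoidance_unlikely: bool, occurrence: int) -> int:
    """Hypothetical, simplified risk-graph classification.

    severity:   1 (slight, reversible injury) .. 4 (catastrophic)
    occurrence: 1 (very unlikely) .. 3 (relatively likely), without the safety system
    Returns 0 (no special safety requirement) .. 4 (highest integrity level).
    """
    if not (1 <= severity <= 4 and 1 <= occurrence <= 3):
        raise ValueError("parameter out of range")
    # Walk along the graph: each aggravating factor moves one column to the right.
    column = severity + int(frequent_exposure) + int(avoidance_unlikely)   # 1..6
    # The probability of the unwanted occurrence selects the row.
    level = column + occurrence - 4
    return max(0, min(4, level))


print(required_level(1, False, False, 1))  # 0
print(required_level(4, True, True, 3))    # 4
print(required_level(2, True, False, 2))   # 1
```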

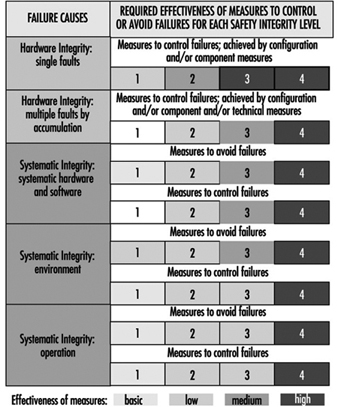

Figure 3 makes it clear that the effectiveness of the measures taken to reduce errors in software and computers must increase with increasing risk (DIN 1994; IEC 1993).

Figure 3. Effectiveness of precautions taken against errors depending on risk

The analysis of the accidents sketched above shows that failures of computer-controlled safeguards are caused not only by random component faults but also by particular operating conditions which the programmer has failed to take into account. The non-obvious consequences of program modifications made in the course of system maintenance are a further source of error. It follows that microprocessor-controlled safety systems can contain failures which, although introduced during development, lead to a dangerous situation only during operation. Precautions against such failures must therefore be taken while safety-related systems are being developed. These so-called failure-avoidance measures must be applied not only in the concept phase, but also during development, installation and modification. Certain failures can be avoided if they are discovered and corrected during this process (DIN 1990).

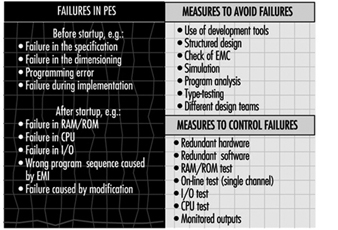

As the last mishap described makes clear, the failure of a single transistor can lead to the technical failure of highly complex automated equipment. Since each circuit is composed of many thousands of transistors and other components, numerous failure-control measures are needed to recognize such failures when they occur in operation and to initiate an appropriate reaction in the computer system. Figure 4 describes types of failure in programmable electronic systems, together with examples of precautions that can be taken to avoid and control failures in computer systems (DIN 1990; IEC 1992).

Figure 4. Examples of precautions taken to control and avoid errors in computer systems
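One common failure-control measure is redundancy with comparison: two channels compute the same safety function, and any disagreement forces the safe state. The sketch below shows only this voting logic for a hypothetical guard-and-enable function; the input bit assignment and all names are assumptions, and in Python both "channels" run on the same processor, whereas a real multichannel system uses separate hardware. A fault that happens to agree with the healthy channel stays hidden until the inputs change, which is why such systems also rely on periodic self-tests.

```python
from typing import Callable

Channel = Callable[[int], bool]


def guard_ok_a(inputs: int) -> bool:
    # Channel A: bit 0 = guard closed, bit 1 = enable switch; both must be set.
    return (inputs & 0b11) == 0b11


def guard_ok_b(inputs: int) -> bool:
    # Channel B: the same function implemented differently (diverse software).
    return inputs % 4 == 3


def output_enabled(channel_a: Channel, channel_b: Channel, inputs: int) -> bool:
    """1-out-of-2 evaluation: run only if both channels agree that it is safe."""
    a, b = channel_a(inputs), channel_b(inputs)
    if a != b:
        # Discrepancy: one channel must be faulty, so go to the safe state.
        # A real system would also latch this fault and report it.
        return False
    return a


def stuck_at_true(inputs: int) -> bool:
    # Simulated hardware fault: channel B output stuck at "safe".
    return True


print(output_enabled(guard_ok_a, guard_ok_b, 0b11))     # True
print(output_enabled(guard_ok_a, stuck_at_true, 0b01))  # False
```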

Possibilities and Prospects of Programmable Electronic Systems in Safety Technology

Modern machines and plants are becoming increasingly complex and must perform ever more comprehensive tasks in ever shorter periods of time. For this reason, computer systems have spread into nearly all areas of industry since the mid-1970s. This increase in complexity alone has contributed significantly to the rising cost of improving safety technology in such systems. Although software and computers pose a great challenge to safety in the workplace, they also make it possible to implement new, error-friendly systems in safety technology.

A droll but instructive verse by Ernst Jandl will help to explain what is meant by the concept error-friendly. “Lichtung: Manche meinen lechts und rinks kann man nicht velwechsern, werch ein Illtum”. (“Dilection: Many berieve light and reft cannot be intelchanged, what an ellol”.) Despite the exchange of the letters r and l, this phrase is easily understood by a normal adult human. Even someone with low fluency in the English language can translate it into English. The task is, however, nearly impossible for a translating computer on its own.

This example shows that a human being can react in a far more error-friendly way than a language computer. Humans, like all other living creatures, can tolerate errors by interpreting them in the light of experience. Most machines in use today, by contrast, penalize user errors not with an accident but with a loss of production, and this property leads users to manipulate or bypass safeguards. Modern computer technology offers work safety systems that can react intelligently, that is, in a graduated way. Such systems make error-friendly behaviour possible in new machines: they first warn users of an incorrect operation and shut the machine down only when this is the only way to avoid an accident. The analysis of accidents shows that there is considerable potential for reducing accidents in this area (Reinert and Reuss 1991).