Work and Mental Health

This chapter provides an overview of major types of mental health disorder that can be associated with work—mood and affective disorders (e.g., dissatisfaction), burnout, post-traumatic stress disorder (PTSD), psychoses, cognitive disorders and substance abuse. The clinical picture, available assessment techniques, aetiological agents and factors, and specific prevention and management measures will be provided. The relationship with work, occupation or branch of industry will be illustrated and discussed where possible.

This introductory article will first provide a general perspective on occupational mental health itself. The concept of mental health will be elaborated upon, and a model will be presented. Next, we will discuss why attention should be paid to mental (ill) health and which occupational groups are at greatest risk. Finally, we will present a general intervention framework for successfully managing work-related mental health problems.

What Is Mental Health: A Conceptual Model

There are many different views about the components and processes of mental health. The concept is heavily value-laden, and no single definition is likely to be agreed upon. Like the closely associated concept of “stress”, mental health is conceptualized as:

- a state—for example, a state of total psychological and social well-being of an individual in a given sociocultural environment, indicative of positive moods and affects (e.g., pleasure, satisfaction and comfort) or negative ones (e.g., anxiety, depressive mood and dissatisfaction).

- a process indicative of coping behaviour—for example, striving for independence, being autonomous (which are key aspects of mental health).

- the outcome of a process: a chronic condition resulting either from an acute, intense confrontation with a stressor, as in post-traumatic stress disorder, or from the continuing presence of a stressor that need not be intense. The latter is the case in burnout, as well as in psychoses, major depressive disorders, cognitive disorders and substance abuse. Cognitive disorders and substance abuse are, however, often considered neurological problems, since pathophysiological processes (e.g., degeneration of the myelin sheath) resulting from ineffective coping or from the stressor itself (alcohol use or occupational exposure to solvents, respectively) can underlie these chronic conditions.

Mental health may also be associated with:

- Person characteristics like “coping styles”—competence (including effective coping, environmental mastery and self-efficacy) and aspiration are characteristic of a mentally healthy person, who shows interest in the environment, engages in motivational activity and seeks to extend him- or herself in ways that are personally significant.

Thus, mental health is conceptualized not only as a process or outcome variable, but also as an independent variable—that is, as a personal characteristic that influences our behaviour.

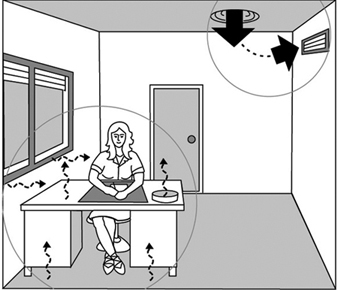

In figure 1 a mental health model is presented. Mental health is determined by environmental characteristics, both in and outside the work situation, and by characteristics of the individual. Major environmental job characteristics are elaborated upon in the chapter “Psychosocial and organizational factors”, but some points on these environmental precursors of mental (ill) health have to be made here as well.

Figure 1. A model for mental health.

There are many models, most of them stemming from the field of work and organizational psychology, that identify precursors of mental ill health. These precursors are often labelled “stressors”. Those models differ in their scope and, related to this, in the number of stressor dimensions identified. An example of a relatively simple model is that of Karasek (Karasek and Theorell 1990), describing only three dimensions: psychological demands, decision latitude (incorporating skill discretion and decision authority) and social support. A more elaborate model is that of Warr (1994), with nine dimensions: opportunity for control (decision authority), opportunity for skill use (skill discretion), externally generated goals (quantitative and qualitative demands), variety, environmental clarity (information about consequences of behaviour, availability of feedback, information about the future, information about required behaviour), availability of money, physical security (low physical risk, absence of danger), opportunity for interpersonal contact (prerequisite for social support), and valued social position (cultural and company evaluations of status, personal evaluations of significance). From the above it is clear that the precursors of mental (ill) health are generally psychosocial in nature, and are related to work content, as well as working conditions, conditions of employment and (formal and informal) relationships at work.

Environmental risk factors for mental (ill) health generally result in short-term effects such as changes in mood and affect, like feelings of pleasure, enthusiasm or a depressed mood. These changes are often accompanied by changes in behaviour, such as restless behaviour, palliative coping (e.g., drinking) or avoidance, as well as active problem-solving behaviour. These affects and behaviours are generally accompanied by physiological changes as well, indicative of arousal and sometimes also of a disturbed homeostasis. When one or more of these stressors remains active, the short-term, reversible responses may result in more stable, less reversible mental health outcomes like burnout, psychoses or major depressive disorder. Situations that are extremely threatening may even immediately result in chronic mental health disorders (e.g., PTSD) which are difficult to reverse.

Person characteristics may interact with psychosocial risk factors at work and exacerbate or buffer their effects. The (perceived) coping ability may not only moderate or mediate the effects of environmental risk factors, but may also determine the appraisal of the risk factors in the environment. Part of the effect of the environmental risk factors on mental health results from this appraisal process.

Person characteristics (e.g., physical fitness) may not only act as precursors in the development of mental health, but may also change as a result of the effects. Coping ability may, for example, increase as the coping process progresses successfully (“learning”). Long-term mental health problems will, on the other hand, often reduce coping ability and capacity in the long run.

In occupational mental health research, attention has been particularly directed to affective well-being—factors such as job satisfaction, depressive moods and anxiety. The more chronic mental health disorders, resulting from long-term exposure to stressors and to a greater or lesser extent also related to personality disorders, have a much lower prevalence in the working population. These chronic mental health problems have a multitude of causal factors. Occupational stressors will consequently be only partly responsible for the chronic condition. Also, people suffering from these kinds of chronic problem will have great difficulty in maintaining their position at work, and many are on sick leave or have dropped out of work for quite a long period of time (1 year), or even permanently. These chronic problems, therefore, are often studied from a clinical perspective.

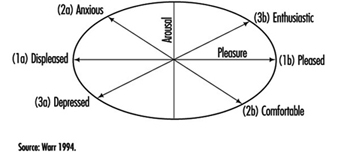

Since moods and affects in particular are so frequently studied in the occupational field, we will elaborate on them in a little more detail. Affective well-being has been treated both in a rather undifferentiated way (ranging from feeling good to feeling bad) and by considering two dimensions: “pleasure” and “arousal” (figure 2). When variations in arousal are uncorrelated with pleasure, these variations alone are generally not considered to be an indicator of well-being.

Figure 2. Three principal axes for the measurement of affective well-being.

When, however, arousal and pleasure are correlated, four quadrants can be distinguished:

- Highly aroused and pleased indicates enthusiasm.

- Low aroused and pleased indicates comfort.

- Highly aroused and displeased indicates anxiety.

- Low aroused and displeased indicates depressed mood (Warr 1994).
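The four quadrants can be made concrete with a small classifier. This is a minimal sketch, not Warr's own scoring procedure: it assumes pleasure and arousal have already been measured on hypothetical scales centred on zero, so that the sign of each score alone decides the quadrant. The function name `classify_affect` is illustrative.

```python
def classify_affect(pleasure: float, arousal: float) -> str:
    """Return the quadrant label for a (pleasure, arousal) pair.

    Assumption: both scores lie on hypothetical scales centred on zero.
    A score of exactly zero is treated as 'pleased' or 'high arousal';
    this tie-breaking rule is arbitrary.
    """
    pleased = pleasure >= 0
    high_arousal = arousal >= 0
    if pleased and high_arousal:
        return "enthusiasm"
    if pleased:
        return "comfort"
    if high_arousal:
        return "anxiety"
    return "depressed mood"


if __name__ == "__main__":
    samples = [(0.8, 0.6), (0.5, -0.7), (-0.4, 0.9), (-0.6, -0.5)]
    for p, a in samples:
        print(f"pleasure={p:+.1f} arousal={a:+.1f} -> {classify_affect(p, a)}")
```

In practice questionnaire scores would first have to be centred (for example on the scale midpoint), and respondents lying close to an axis belong only weakly to either quadrant.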

Well-being can be studied at two levels: a general, context-free level and a context-specific level. The work environment is such a specific context. Data analyses support the general notion that the relation between job characteristics and context-free, non-work mental health is mediated by an effect on work-related mental health. Work-related affective well-being has commonly been studied along the horizontal axis (figure 2) in terms of job satisfaction. Affects related to comfort in particular have, however, largely been ignored. This is regrettable, since this affect might indicate resigned job satisfaction: people may not complain about their jobs, but may still be apathetic and uninvolved (Warr 1994).

Why Pay Attention to Mental Health Issues?

There are several reasons why mental health issues need attention. First of all, national statistics from several countries indicate that many people drop out of work because of mental health problems. In the Netherlands, for example, the problem is related to mental health for one-third of the employees who are diagnosed as disabled for work each year. The majority of this category, 58%, is reported to be work related (Gründemann, Nijboer and Schellart 1991). Together with musculoskeletal problems, mental health problems account for about two-thirds of those who drop out for medical reasons each year.

Mental ill health is an extensive problem in other countries as well. According to a Health and Safety Executive booklet, it has been estimated that 30 to 40% of all sickness absence from work in the UK is attributable to some form of mental illness (Ross 1989; O’Leary 1993). In the UK, it has also been estimated that one in five of the working population suffers each year from some form of mental illness. It is difficult to be precise about the number of working days lost each year because of mental ill health. For the UK, a figure of 90 million certified days, or 30 times the number lost as a result of industrial disputes, is widely quoted (O’Leary 1993). This compares with 8 million days lost as a result of alcoholism and drink-related diseases and 35 million days as a result of coronary heart disease and strokes.

Apart from the fact that mental ill health is costly, in both human and financial terms, there is a legal framework provided by the European Union (EU) in its framework directive on health and safety at work (89/391/EEC), adopted in 1989 and applicable in member states from 1993. Although mental health is not central to this directive, some attention is given to this aspect of health in Article 6. The framework directive states, among other things, that the employer has:

“a duty to ensure the safety and health of workers in every aspect related to work, following general principles of prevention: avoiding risks, evaluating the risks which cannot be avoided, combating the risks at source, adapting the work to the individual, especially as regards the design of workplaces, the choice of work equipment and the choice of work and production methods, with a view, in particular, to alleviating monotonous work and work at a predetermined work rate and to reduce their effects on health.”

Despite this directive, not all European countries have adopted framework legislation on health and safety. In a study comparing regulations, policies and practices concerning mental health and stress at work in five European countries, those countries with such framework legislation (Sweden, the Netherlands and the UK) recognize mental health issues at work as important health and safety topics, whereas those countries which do not have such a framework (France, Germany) do not recognize mental health issues as important (Kompier et al. 1994).

Last but not least, prevention of mental ill health (at its source) pays. There are strong indications that preventive programmes yield important benefits. For example, of the employers in a nationally representative sample of companies from three major branches of industry, 69% state that motivation increased; 60%, that absence due to sickness decreased; 49%, that the atmosphere improved; and 40%, that productivity increased as a result of a prevention programme (Houtman et al. 1995).

Occupational Risk Groups of Mental Health

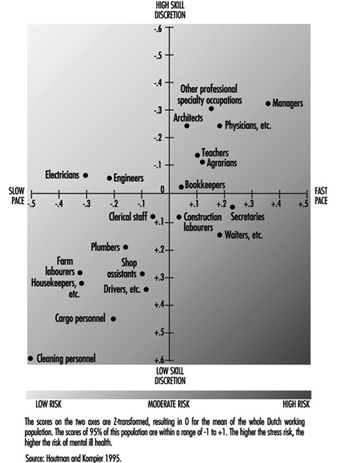

Are specific groups of the working population at risk of mental health problems? This question cannot be answered in a straightforward manner, since hardly any national or international monitoring systems exist that identify risk factors, mental health consequences or risk groups. Only a “scattergram” can be given. In some countries national data exist for the distribution of occupational groups with respect to major risk factors (e.g., for the Netherlands, Houtman and Kompier 1995; for the United States, Karasek and Theorell 1990). The distribution of the occupational groups in the Netherlands on the dimensions of job demands and skill discretion (figure 3) agrees fairly well with the US distribution shown by Karasek and Theorell, for those groups that are in both samples. In those occupations with high work pace and/or low skill discretion, the risk of mental health disorders is highest.

Figure 3. Risk for stress and mental ill health for different occupational groups, as determined by the combined effects of work pace and skill discretion.
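The combined reading of work pace and skill discretion behind figure 3 can be sketched as a two-way split. This is a hedged illustration, not the procedure used by Houtman and Kompier or by Karasek and Theorell: it assumes each occupational group has a mean score on both dimensions, splits each dimension at the sample median, and borrows Karasek's usual quadrant labels. The group names and scores are hypothetical.

```python
from statistics import median


def karasek_quadrant(pace: float, discretion: float,
                     pace_cut: float, discretion_cut: float) -> str:
    """Label a group by whether it scores above the cut-offs on pace and discretion."""
    high_pace = pace > pace_cut
    high_discretion = discretion > discretion_cut
    if high_pace and not high_discretion:
        return "high strain"  # the combination the text links to the highest risk
    if high_pace:
        return "active"
    if not high_discretion:
        return "passive"
    return "low strain"


def classify_groups(groups: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Split each dimension at its sample median and label every group."""
    pace_cut = median(p for p, _ in groups.values())
    discretion_cut = median(d for _, d in groups.values())
    return {name: karasek_quadrant(p, d, pace_cut, discretion_cut)
            for name, (p, d) in groups.items()}


# Hypothetical (work pace, skill discretion) scores, for illustration only.
EXAMPLE_GROUPS = {
    "group A": (3.4, 2.1),
    "group B": (3.5, 3.6),
    "group C": (2.2, 2.0),
    "group D": (2.0, 3.4),
}

if __name__ == "__main__":
    for name, label in classify_groups(EXAMPLE_GROUPS).items():
        print(name, label)
```

A median split is only one possible cut-off; with national survey data, fixed reference values would make results comparable between countries.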

Also, in some countries there are data for mental health outcomes as related to occupational groups. Occupational groups that are especially prone to drop out for reasons of mental ill health in the Netherlands are those in the service sector, such as health care personnel and teachers, as well as cleaning personnel, housekeepers and occupations in the transport branch (Gründemann, Nijboer and Schellart 1991).

In the United States, occupations that were highly prone to major depressive disorder, as diagnosed with standardized coding systems (i.e., the third edition of the Diagnostic and Statistical Manual of Mental Disorders, DSM-III (American Psychiatric Association 1980)), are legal professionals, secretaries and teachers (Eaton et al. 1990).

Management of Mental Health Problems

The conceptual model (figure 1) suggests at least two targets of intervention in mental health issues:

- The (work) environment.

- The person—either his or her characteristics or the mental health consequences.

Primary prevention, the type of prevention that should prevent mental ill health from occurring, should be directed at the precursors by alleviating or managing the risks in the environment and increasing the coping ability and capacity of the individual. Secondary prevention is directed at the maintenance of people at work who already have some form of (mental) health problem. This type of prevention should embrace the primary prevention strategy, accompanied by strategies to make both employees and their supervisors sensitive to signals of early mental ill health in order to reduce the consequences or prevent them from getting worse. Tertiary prevention is directed at the rehabilitation of people who have dropped out of work due to mental health problems. This type of prevention should be directed at adapting the workplace to the possibilities of the individual (which is often found to be quite effective), along with individual counselling and treatment. Table 1 provides a schematic framework for the management of mental health disorders at the workplace. Effective preventive policy plans of organizations should, in principle, take into account all three types of strategy (primary, secondary and tertiary prevention), as well as be directed at risks, consequences and person characteristics.

Table 1. A schematic overview of management strategies on mental health problems, and some examples.

| Type of prevention | Intervention level: work environment | Intervention level: person characteristics and/or health outcomes |
|---|---|---|
| Primary | Redesign of task content; redesign of communication structure | Training groups of employees on signalling and handling specific work-related problems (e.g., how to manage time pressure, robberies, etc.) |
| Secondary | Introduction of a policy on how to act in case of absenteeism (e.g., training supervisors to discuss absence and return with the employees concerned); providing facilities within the organization, especially for risk groups (e.g., a counsellor for sexual harassment) | Training in relaxation techniques |
| Tertiary | Adaptation of an individual workplace | Individual counselling; individual treatment or therapy (possibly with medication) |

The scheme as presented provides a method for systematically analysing all possible types of measure. One could debate whether a certain measure belongs elsewhere in the scheme; such a discussion is, however, not very fruitful, since primary preventive measures often work out positively for secondary prevention as well. The proposed systematic analysis may well yield a large number of potential measures, several of which may be adopted, either as a general aspect of the (health and safety) policy or in a specific case.

In conclusion: Although mental health is not a clearly defined state, process or outcome, it covers a generally agreed upon area of (ill) health. Part of this area can be covered by generally accepted diagnostic criteria (e.g., psychosis, major depressive disorder); the diagnostic nature of other parts is neither as clear nor as generally accepted. Examples of the latter are moods and affects, and also burnout. Despite this, there are many indications that mental (ill) health, including the more vague diagnostic criteria, is a major problem. Its costs are high, both in human and financial terms. In the following articles of this chapter, several mental health disorders—moods and affects (e.g., dissatisfaction), burnout, post-traumatic stress disorder, psychoses, cognitive disorders and substance abuse—will be discussed in much more depth with respect to the clinical picture, available assessment techniques, aetiological agents and factors, and specific prevention and management measures.

Types of Lamps and Lighting

A lamp is an energy converter. Although it may carry out secondary functions, its prime purpose is the transformation of electrical energy into visible electromagnetic radiation. There are many ways to create light. The standard method for creating general lighting is the conversion of electrical energy into light.

Types of Light

Incandescence

When solids and liquids are heated, they emit visible radiation at temperatures above 1,000 K; this is known as incandescence.

Such heating is the basis of light generation in filament lamps: an electrical current passes through a thin tungsten wire, whose temperature rises to around 2,500 to 3,200 K, depending upon the type of lamp and its application.

There is a limit to this method, which is described by Planck’s law for a black-body radiator: as temperature increases, the total energy radiated increases and its spectral distribution shifts towards shorter wavelengths. At about 3,600 K and above, there is a marked gain in emission of visible radiation, and the wavelength of maximum power shifts into the visible band. This temperature is close to the melting point of tungsten, which is used for the filament, so the practical temperature limit is around 2,700 K, above which filament evaporation becomes excessive. One result of these spectral characteristics is that a large part of the radiation emitted is given off not as light but as heat in the infrared region. Filament lamps can thus be effective heating devices and are used in lamps designed for print drying, food preparation and animal rearing.
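The effect of temperature can be checked numerically. The sketch below treats the filament as an ideal black body (real tungsten is a selective emitter, so actual figures differ somewhat), integrates Planck's law over an assumed visible band of 380 to 780 nm, and divides by the total output given by the Stefan-Boltzmann law; Wien's displacement law gives the peak wavelength. The band limits and the number of integration steps are assumptions.

```python
import math

H = 6.62607015e-34         # Planck constant, J s
C = 2.99792458e8           # speed of light in vacuum, m/s
K_B = 1.380649e-23         # Boltzmann constant, J/K
SIGMA = 5.670374419e-8     # Stefan-Boltzmann constant, W/(m^2 K^4)
WIEN_B = 2.897771955e-3    # Wien displacement constant, m K


def spectral_exitance(wavelength_m: float, temp_k: float) -> float:
    """Planck's law: black-body spectral radiant exitance, W per m^2 per m of wavelength."""
    return (2 * math.pi * H * C**2 / wavelength_m**5) / math.expm1(
        H * C / (wavelength_m * K_B * temp_k))


def visible_fraction(temp_k: float, lo_nm: float = 380.0, hi_nm: float = 780.0,
                     steps: int = 2000) -> float:
    """Share of total black-body output between lo_nm and hi_nm (trapezoidal rule)."""
    lo, hi = lo_nm * 1e-9, hi_nm * 1e-9
    dx = (hi - lo) / steps
    band = 0.5 * (spectral_exitance(lo, temp_k) + spectral_exitance(hi, temp_k))
    band += sum(spectral_exitance(lo + i * dx, temp_k) for i in range(1, steps))
    return band * dx / (SIGMA * temp_k**4)


def peak_wavelength_nm(temp_k: float) -> float:
    """Wien's displacement law: wavelength of maximum exitance, in nm."""
    return WIEN_B / temp_k * 1e9


if __name__ == "__main__":
    for t in (2700, 3200, 3600):
        print(f"{t} K: peak {peak_wavelength_nm(t):.0f} nm, "
              f"visible share {visible_fraction(t):.1%}")
```

For an ideal black body the visible share is only around a fifth even at 3,600 K, where the peak (about 805 nm) has only just reached the red edge of the visible band; at practical filament temperatures most of the output is infrared.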

Electric discharge

Electrical discharge is the technique used in modern light sources for commerce and industry because it produces light more efficiently. Some lamp types combine the electrical discharge with photoluminescence.

An electric current passed through a gas excites the atoms and molecules, which emit radiation with a spectrum characteristic of the elements present. Two metals are commonly used, sodium and mercury, because they emit useful radiation within the visible spectrum. Neither metal emits a continuous spectrum, so discharge lamps have selective spectra, and their colour rendering will never be identical to that of a continuous-spectrum source. Discharge lamps are often classed as high pressure or low pressure, although these terms are only relative: a high-pressure sodium lamp operates at below one atmosphere.

Types of Luminescence

Photoluminescence occurs when radiation is absorbed by a solid and is then re-emitted at a different wavelength. When the re-emitted radiation is within the visible spectrum the process is called fluorescence or phosphorescence.

Electroluminescence occurs when light is generated by an electric current passed through certain solids, such as phosphor materials. It is used for self-illuminated signs and instrument panels but has not proved to be a practical light source for the lighting of buildings or exteriors.

Evolution of Electric Lamps

Although technological progress has enabled different lamps to be produced, the main factors influencing their development have been external market forces. For example, the production of the filament lamps in use at the start of the twentieth century was possible only once good vacuum pumps and drawn tungsten wire were available. However, it was the large-scale generation and distribution of electricity to meet the demand for electric lighting that determined market growth. Electric lighting offered many advantages over gas- or oil-generated light, such as steady light requiring infrequent maintenance, the increased safety of having no exposed flame, and the absence of local by-products of combustion.

During the period of recovery after the Second World War, the emphasis was on productivity. The fluorescent tubular lamp became the dominant light source because it made possible the shadow-free and comparatively heat-free lighting of factories and offices, allowing maximum use of the space. The light output and wattage requirements of some typical 1,500 mm fluorescent tube lamps are given in table 1.

Table 1. Improved light output and wattage requirements of some typical 1,500 mm fluorescent tube lamps

| Rating (W) | Diameter (mm) | Gas fill | Light output (lumens) |
|---|---|---|---|
| 80 | 38 | argon | 4,800 |
| 65 | 38 | argon | 4,900 |
| 58 | 25 | krypton | 5,100 |
| 50 | 25 | argon | 5,100 |
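Dividing light output by rating shows how far the efficacy of the 1,500 mm tube improved across the lamps in table 1. The sketch assumes the ratings are lamp watts only; control-gear losses, which the table does not give, are ignored.

```python
# Rows transcribed from table 1: (rating W, diameter mm, gas fill, light output lm)
TABLE_1 = [
    (80, 38, "argon", 4800),
    (65, 38, "argon", 4900),
    (58, 25, "krypton", 5100),
    (50, 25, "argon", 5100),
]


def efficacy(lumens: float, watts: float) -> float:
    """Luminous efficacy in lumens per watt of lamp power."""
    return lumens / watts


if __name__ == "__main__":
    for watts, diameter, gas, lumens in TABLE_1:
        print(f"{watts:>3} W  {diameter} mm  {gas:<8} {efficacy(lumens, watts):6.1f} lm/W")
```

The efficacy rises from 60 lm/W for the 80 W tube to 102 lm/W for the 50 W tube, which gives slightly more light for five-eighths of the power.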

In the 1970s oil prices rose and energy costs became a significant part of operating costs. The market demanded fluorescent lamps that produce the same amount of light with less electrical consumption, and lamp design was refined in several ways. As the century closes there is a growing awareness of global environmental issues. Better use of dwindling raw materials, recycling or safe disposal of products, and the continuing concern over energy consumption (particularly energy generated from fossil fuels) are influencing current lamp designs.

Performance Criteria

Performance criteria vary by application. In general, there is no particular hierarchy of importance of these criteria.

Light output: The lumen output of a lamp will determine its suitability in relation to the scale of the installation and the quantity of illumination required.

Colour appearance and colour rendering: Separate scales and numerical values apply to colour appearance and colour rendering. It is important to remember that the figures provide guidance only, and some are only approximations. Whenever possible, assessments of suitability should be made with actual lamps and with the colours or materials that apply to the situation.

Lamp life: Most lamps will require replacement several times during the life of the lighting installation, and designers should minimize the inconvenience to occupants caused by random failures and maintenance. Lamps are used in a wide variety of applications, and the anticipated average life is often a compromise between cost and performance. For example, the lamp for a slide projector will have a life of a few hundred hours because maximum light output is important to the quality of the image. By contrast, some roadway lighting lamps may be changed every two years, which represents some 8,000 burning hours.

Further, because lamp life depends on operating conditions, there is no simple figure that will apply in all conditions. Relevant external conditions include temperature, vibration, frequency of starting, supply voltage fluctuations, orientation and so on. Also, the effective lamp life may be determined by different failure modes: physical failure such as filament or lamp rupture may be preceded by a reduction in light output or changes in colour appearance.

It should be noted that the average life quoted for a lamp type is the time by which 50% of a batch of test lamps have failed. This definition of life is not likely to be applicable to many commercial or industrial installations; thus practical lamp life is usually less than published values, which should be used for comparison only.
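The roadway example can be turned into a simple relamping estimate. This is a sketch under stated assumptions: the 4,000 burning hours per year (about 11 hours per night) is simply what the text's two years and 8,000 hours imply, and the `derating` factor is a hypothetical allowance for practical life being shorter than the published 50%-failure figure, not a value taken from any standard.

```python
HOURS_PER_YEAR = 8760


def years_between_changes(rated_life_h: float, burning_h_per_year: float,
                          derating: float = 1.0) -> float:
    """Years of operation before relamping is due.

    rated_life_h: published average life (time to 50% failures in a test batch).
    burning_h_per_year: hours the lamp is actually lit each year.
    derating: hypothetical factor in (0, 1] for practical life being shorter
              than the published value; 1.0 takes the published figure as-is.
    """
    if not 0 < derating <= 1:
        raise ValueError("derating must be in (0, 1]")
    if not 0 < burning_h_per_year <= HOURS_PER_YEAR:
        raise ValueError("burning hours per year must be in (0, 8760]")
    return rated_life_h * derating / burning_h_per_year


if __name__ == "__main__":
    # Roadway example from the text, assuming about 4,000 burning hours per year.
    print(years_between_changes(8000, 4000))
    print(years_between_changes(8000, 4000, derating=0.7))
```

Because the published figure is a median, a derating below 1.0 is one way of planning group replacement before a noticeable share of lamps has failed.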

Efficiency: As a general rule the efficiency of a given type of lamp improves as the power rating increases, because most lamps have some fixed loss. However, different types of lamps have marked variation in efficiency. Lamps of the highest efficiency should be used, provided that the criteria of size, colour and lifetime are also met. Energy savings should not be at the expense of the visual comfort or the performance ability of the occupants. Some typical efficacies are given in table 2.

Table 2. Typical lamp efficacies

| Lamp | Efficacy (lumens/watt) |
|---|---|
| 100 W filament lamp | 14 |
| 58 W fluorescent tube | 89 |
| 400 W high-pressure sodium | 125 |
| 131 W low-pressure sodium | 198 |
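The practical meaning of table 2 is the lamp power, and hence energy, needed for a given quantity of light. The sketch below assumes a hypothetical requirement of 14,000 lumens used for 3,000 hours a year. It ignores control-gear losses and, as the text stresses, says nothing about whether a lamp also meets the size, colour and life criteria (low-pressure sodium, for instance, gives monochromatic yellow light).

```python
# Typical efficacies from table 2, in lumens per watt.
EFFICACY_LM_PER_W = {
    "100 W filament lamp": 14,
    "58 W fluorescent tube": 89,
    "400 W high-pressure sodium": 125,
    "131 W low-pressure sodium": 198,
}


def lamp_power_w(target_lumens: float, efficacy: float) -> float:
    """Lamp power needed to deliver target_lumens at the given efficacy."""
    return target_lumens / efficacy


def annual_energy_kwh(target_lumens: float, efficacy: float, hours_per_year: float) -> float:
    """Energy in kWh per year to deliver target_lumens for hours_per_year."""
    return lamp_power_w(target_lumens, efficacy) * hours_per_year / 1000


if __name__ == "__main__":
    target = 14_000      # hypothetical lumen requirement
    hours = 3_000        # hypothetical annual operating hours
    for lamp, eff in EFFICACY_LM_PER_W.items():
        print(f"{lamp:<28} {lamp_power_w(target, eff):7.1f} W "
              f"{annual_energy_kwh(target, eff, hours):8.0f} kWh/yr")
```

With these inputs, filament lamps need 1,000 W against about 157 W for fluorescent tubes.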

Main lamp types

Over the years, several nomenclature systems have been developed by national and international standards bodies and registers.

In 1993, the International Electrotechnical Commission (IEC) published a new International Lamp Coding System (ILCOS) intended to replace existing national and regional coding systems. A list of some ILCOS short form codes for various lamps is given in table 3.

Table 3. International Lamp Coding System (ILCOS) short form coding system for some lamp types

| Type (code) | Common ratings (watts) | Colour rendering | Colour temperature (K) | Life (hours) |
|---|---|---|---|---|
| Compact fluorescent lamps (FS) | 5–55 | good | 2,700–5,000 | 5,000–10,000 |
| High-pressure mercury lamps (QE) | 80–750 | fair | 3,300–3,800 | 20,000 |
| High-pressure sodium lamps (S-) | 50–1,000 | poor to good | 2,000–2,500 | 6,000–24,000 |
| Incandescent lamps (I) | 5–500 | good | 2,700 | 1,000–3,000 |
| Induction lamps (XF) | 23–85 | good | 3,000–4,000 | 10,000–60,000 |
| Low-pressure sodium lamps (LS) | 26–180 | monochromatic yellow colour | 1,800 | 16,000 |
| Low-voltage tungsten halogen lamps (HS) | 12–100 | good | 3,000 | 2,000–5,000 |
| Metal halide lamps (M-) | 35–2,000 | good to excellent | 3,000–5,000 | 6,000–20,000 |
| Tubular fluorescent lamps (FD) | 4–100 | fair to good | 2,700–6,500 | 10,000–15,000 |
| Tungsten halogen lamps (HS) | 100–2,000 | good | 3,000 | 2,000–4,000 |
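Table 3 lends itself to a first screening against the performance criteria discussed earlier. The sketch below is a hypothetical helper, not part of ILCOS: it transcribes only the colour rendering and life columns, treats the verbal colour-rendering categories as exact labels, and screens on the lower end of each life range. Any shortlist still needs checking with actual lamps, as recommended above for colour.

```python
from typing import NamedTuple


class LampType(NamedTuple):
    name: str
    code: str
    colour_rendering: str
    life_min_h: int
    life_max_h: int


# Transcribed from table 3. Note that the table gives the code HS for two rows.
TABLE_3 = [
    LampType("Compact fluorescent lamps", "FS", "good", 5_000, 10_000),
    LampType("High-pressure mercury lamps", "QE", "fair", 20_000, 20_000),
    LampType("High-pressure sodium lamps", "S-", "poor to good", 6_000, 24_000),
    LampType("Incandescent lamps", "I", "good", 1_000, 3_000),
    LampType("Induction lamps", "XF", "good", 10_000, 60_000),
    LampType("Low-pressure sodium lamps", "LS", "monochromatic yellow colour", 16_000, 16_000),
    LampType("Low-voltage tungsten halogen lamps", "HS", "good", 2_000, 5_000),
    LampType("Metal halide lamps", "M-", "good to excellent", 6_000, 20_000),
    LampType("Tubular fluorescent lamps", "FD", "fair to good", 10_000, 15_000),
    LampType("Tungsten halogen lamps", "HS", "good", 2_000, 4_000),
]


def shortlist(min_life_h: int, acceptable_rendering: set[str]) -> list[LampType]:
    """Lamp types whose lower life bound and colour rendering both meet the criteria.

    Using the lower end of the life range is a deliberately conservative choice.
    """
    return [lamp for lamp in TABLE_3
            if lamp.life_min_h >= min_life_h
            and lamp.colour_rendering in acceptable_rendering]


if __name__ == "__main__":
    for lamp in shortlist(5_000, {"good", "good to excellent"}):
        print(lamp.code, lamp.name)
```

A list of named tuples is used rather than a dictionary keyed by code, because the duplicated HS code would otherwise overwrite one of the entries.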

Incandescent lamps

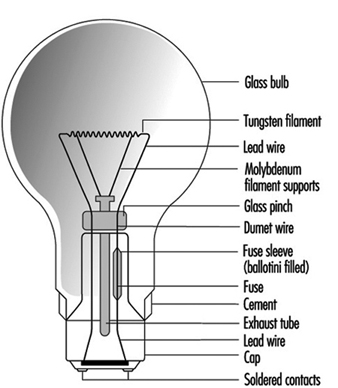

These lamps use a tungsten filament in an inert gas or vacuum with a glass envelope. The inert gas suppresses tungsten evaporation and lessens the envelope blackening. There is a large variety of lamp shapes, which are largely decorative in appearance. The construction of a typical General Lighting Service (GLS) lamp is given in figure 1.

Figure 1. Construction of a GLS lamp

Incandescent lamps are also available with a wide range of colours and finishes. The ILCOS codes and some typical shapes include those shown in table 4.

Table 4. Common colours and shapes of incandescent lamps, with their ILCOS codes

| Colour/Shape | Code |
|---|---|
| Clear | /C |
| Frosted | /F |
| White | /W |
| Red | /R |
| Blue | /B |
| Green | /G |
| Yellow | /Y |
| Pear shaped (GLS) | IA |
| Candle | IB |
| Conical | IC |
| Globular | IG |
| Mushroom | IM |

Incandescent lamps are still popular for domestic lighting because of their low cost and compact size. However, for commercial and industrial lighting the low efficacy generates very high operating costs, so discharge lamps are the normal choice. A 100 W lamp has a typical efficacy of 14 lumens/watt compared with 96 lumens/watt for a 36 W fluorescent lamp.

Incandescent lamps are simple to dim by reducing the supply voltage, and are still used where dimming is a desired control feature.

The tungsten filament is a compact light source, easily focused by reflectors or lenses. Incandescent lamps are useful for display lighting where directional control is needed.

Tungsten halogen lamps

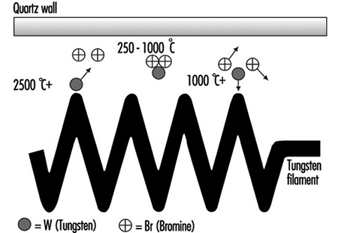

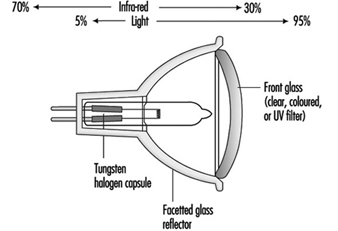

These are similar to incandescent lamps and produce light in the same manner from a tungsten filament. However, the bulb also contains a halogen gas (bromine or iodine), which is active in controlling tungsten evaporation. See figure 2.

Figure 2. The halogen cycle

Fundamental to the halogen cycle is a minimum bulb wall temperature of 250 °C, which ensures that the tungsten halide remains in a gaseous state and does not condense on the bulb wall. This temperature requires bulbs made from quartz instead of glass, and with quartz it is possible to reduce the bulb size.

Most tungsten halogen lamps have an improved life over incandescent equivalents and the filament is at a higher temperature, creating more light and whiter colour.

Tungsten halogen lamps have become popular where small size and high performance are the main requirements. Typical examples are stage lighting, including film and TV, where directional control and dimming are common requirements.

Low-voltage tungsten halogen lamps

These were originally designed for slide and film projectors. At 12 V, a filament of the same wattage as a 230 V filament is shorter and thicker. It can be focused more efficiently, and the larger filament mass allows a higher operating temperature, increasing light output. The thick filament is also more robust. These benefits proved useful for the commercial display market, and even though a step-down transformer is necessary, these lamps now dominate shop-window lighting. See figure 3.

Figure 3. Low-voltage dichroic reflector lamp
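Why the 12 V filament is shorter and thicker follows from Ohm's law. For the same wattage, the hot resistance scales with the square of the voltage, and since a wire's resistance is proportional to its length divided by its cross-sectional area, a much lower resistance calls for a shorter, thicker filament. The 50 W rating below is a hypothetical example.

```python
def operating_current_a(power_w: float, voltage_v: float) -> float:
    """Current drawn by a resistive filament, I = P / V."""
    return power_w / voltage_v


def hot_resistance_ohm(power_w: float, voltage_v: float) -> float:
    """Filament resistance at operating temperature, R = V^2 / P."""
    return voltage_v ** 2 / power_w


if __name__ == "__main__":
    power = 50.0  # hypothetical lamp rating, W
    for volts in (12.0, 230.0):
        print(f"{volts:>5.0f} V: {operating_current_a(power, volts):.2f} A, "
              f"{hot_resistance_ohm(power, volts):.1f} ohm")
    ratio = hot_resistance_ohm(power, 230.0) / hot_resistance_ohm(power, 12.0)
    print(f"230 V filament needs about {ratio:.0f} times the resistance")
```

The 12 V lamp draws about 4.2 A, which is one reason the step-down transformer and its secondary wiring must be rated for much higher currents than the mains side.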

Although users of film projectors want as much light as possible, too much heat damages the transparency medium. A special type of reflector has therefore been developed that reflects only the visible radiation, allowing infrared radiation (heat) to pass through the back of the lamp. This feature is now part of many low-voltage reflector lamps for display lighting as well as projector equipment.

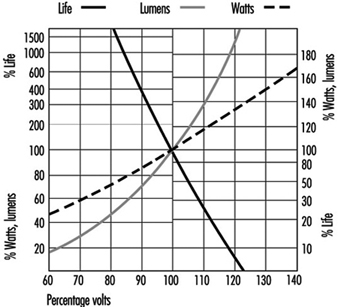

Voltage sensitivity: All filament lamps are sensitive to voltage variation, and light output and life are affected. The move to “harmonize” the supply voltage throughout Europe at 230 V is being achieved by widening the tolerances to which the generating authorities can operate. The move is towards ±10%, which is a voltage range of 207 to 253 V. Incandescent and tungsten halogen lamps cannot be operated sensibly over this range, so it will be necessary to match actual supply voltage to lamp ratings. See figure 4.

Figure 4. GLS filament lamps and supply voltage

Discharge lamps will also be affected by this wide voltage variation, so the correct specification of control gear becomes important.

Tubular fluorescent lamps

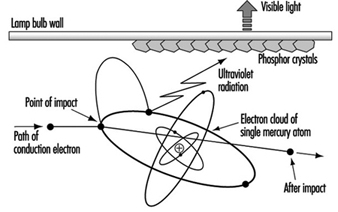

These are low-pressure mercury lamps and are available in "hot cathode" and "cold cathode" versions. The former is the conventional fluorescent tube for offices and factories; "hot cathode" refers to starting the lamp by pre-heating the electrodes to create sufficient ionization of the gas and mercury vapour to establish the discharge.

Cold cathode lamps are mainly used for signage and advertising. See figure 5.

Figure 5. Principle of fluorescent lamp

Fluorescent lamps require external control gear for starting and to control the lamp current. In addition to the small amount of mercury vapour, there is a starting gas (argon or krypton).

The low-pressure mercury discharge produces a pale blue light. Most of the radiation is in the UV region at 254 nm, a characteristic wavelength of mercury. The inside of the tube wall carries a thin phosphor coating, which absorbs the UV and re-emits the energy as visible light. The colour quality of the light is determined by the phosphor coating, and a range of phosphors is available with varying colour appearance and colour rendering.

During the 1950s the available phosphors offered a choice between reasonable efficacy (60 lumens/watt) with light deficient in reds and blues, and improved colour rendering from "deluxe" phosphors of lower efficacy (40 lumens/watt).

By the 1970s, new narrow-band phosphors had been developed. These radiate red, blue and green light separately, which combine to produce white light. Adjusting the proportions gave a range of different colour appearances, all with similar excellent colour rendering. These tri-phosphors are more efficient than the earlier types and represent the most economical lighting solution, even though the lamps are more expensive, because improved efficacy reduces operating and installation costs.

The tri-phosphor principle has been extended by multi-phosphor lamps where critical colour rendering is necessary, such as for art galleries and industrial colour matching.

The modern narrow-band phosphors are more durable, have better lumen maintenance, and increase lamp life.

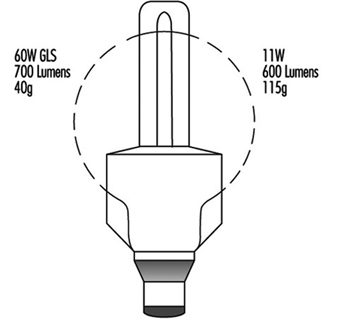

Compact fluorescent lamps

The fluorescent tube is not a practical replacement for the incandescent lamp because of its linear shape. Small, narrow-bore tubes can be configured to approximately the same size as the incandescent lamp, but this imposes a much higher electrical loading on the phosphor material. The use of tri-phosphors is essential to achieve acceptable lamp life. See figure 6.

Figure 6. Four-leg compact fluorescent

All compact fluorescent lamps use tri-phosphors, so, when they are used together with linear fluorescent lamps, the latter should also be tri-phosphor to ensure colour consistency.

Some compact lamps include the operating control gear, forming retrofit units for incandescent lamps. The range is increasing and enables easy upgrading of existing installations to more energy-efficient lighting. These integral units are not suitable where the original installation included dimming controls.

High-frequency electronic control gear: If the normal supply frequency of 50 or 60 Hz is increased to 30 kHz, the efficacy of fluorescent tubes rises by about 10%. Electronic circuits can operate individual lamps at such frequencies. The circuit is designed to provide the same light output as wire-wound control gear at reduced lamp power. This keeps the lumen package compatible, with the advantage that the reduced lamp loading increases lamp life significantly. Electronic control gear can also operate over a range of supply voltages.
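The power saving follows directly from the efficacy gain: for the same light output, lamp power scales as the inverse of efficacy, so a 10% efficacy gain reduces lamp power by about 9%, not 10%. The 58 W tube rating in the sketch is hypothetical, and control gear losses are ignored.

```python
def power_for_same_output(lamp_power_w: float, efficacy_gain: float) -> float:
    """Lamp power needed for the same light output after an efficacy gain.

    efficacy_gain is fractional, e.g. 0.10 for the 10% gain at about 30 kHz.
    Light output = power x efficacy, so power scales as 1 / (1 + gain).
    """
    return lamp_power_w / (1.0 + efficacy_gain)


mains_power = 58.0  # hypothetical tube rating on 50/60 Hz wire-wound gear
hf_power = power_for_same_output(mains_power, 0.10)
saving = 1 - hf_power / mains_power
print(f"{hf_power:.1f} W at high frequency ({saving:.1%} less lamp power)")
```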

There is no common standard for electronic control gear, and lamp performance may differ from the published information issued by the lamp makers.

The use of high-frequency electronic gear removes the normal problem of flicker, to which some occupants may be sensitive.

Induction lamps

Lamps using the principle of induction have recently appeared on the market. They are low-pressure mercury lamps with a tri-phosphor coating and, as light producers, are similar to fluorescent lamps. The energy is transferred to the lamp by high-frequency radiation at approximately 2.5 MHz from an induction coil (antenna) positioned centrally within the lamp. There is no physical connection between the lamp bulb and the coil. Without electrodes or other wire connections, the discharge vessel is simpler to construct and more durable. Lamp life is mainly determined by the reliability of the electronic components and the lumen maintenance of the phosphor coating.

High-pressure mercury lamps

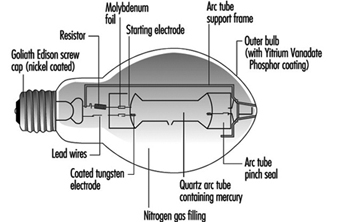

High-pressure discharges are more compact and carry higher electrical loads, so they require quartz arc tubes to withstand the pressure and temperature. The arc tube is contained in an outer glass bulb filled with nitrogen or an argon-nitrogen mixture to reduce oxidation and arcing. The outer bulb also effectively filters the UV radiation from the arc tube. See figure 7.

Figure 7. Mercury lamp construction

At high pressure, the mercury discharge emits mainly blue and green radiation. To improve the colour, a phosphor coating on the outer bulb adds red light. Deluxe versions with an increased red content give higher light output and improved colour rendering.

All high-pressure discharge lamps take time to reach full output. The initial discharge is via the conducting gas fill, and the metal evaporates as the lamp temperature increases.

Once the lamp has reached its stable operating pressure, it will not restart immediately after being switched off unless special control gear is used. There is a delay while the lamp cools sufficiently and the pressure falls, so that the normal supply voltage or ignitor circuit is adequate to re-establish the arc.

Discharge lamps have a negative resistance characteristic, so external control gear is necessary to limit the current. These control gear components have losses, so the user should consider total circuit watts when assessing operating costs and the electrical installation. One exception is a type of high-pressure mercury lamp containing a tungsten filament, which both acts as the current-limiting device and adds warm colours to the blue-green discharge. This enables the direct replacement of incandescent lamps.
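Because control gear losses add to the lamp wattage, operating cost should be estimated from total circuit watts. In the sketch, the 250 W lamp, the 15% gear loss, the 4,000 burning hours per year and the tariff are all hypothetical inputs.

```python
def annual_energy_cost(lamp_w: float, gear_loss_w: float, hours_per_year: float,
                       tariff_per_kwh: float) -> tuple[float, float]:
    """Return (total circuit watts, annual energy cost) including gear losses."""
    circuit_w = lamp_w + gear_loss_w
    energy_kwh = circuit_w * hours_per_year / 1000.0
    return circuit_w, energy_kwh * tariff_per_kwh


# Hypothetical example: 250 W discharge lamp with gear dissipating a further 15%.
circuit, cost = annual_energy_cost(250.0, 0.15 * 250.0, 4000.0, 0.20)
print(f"circuit load {circuit:.1f} W, annual cost {cost:.2f} (currency units)")
```

Here the lamp actually draws 287.5 W, so costing it at the nominal 250 W would understate energy use by about 13%.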

Although mercury lamps have a long life of about 20,000 hours, their light output falls to about 55% of the initial output by the end of this period, so their economic life can be shorter.
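One way to estimate an economic life is to find when output falls to a chosen maintenance threshold. Purely for illustration, the sketch assumes that output declines linearly from 100% to the 55% quoted at 20,000 hours; real depreciation curves are usually non-linear and should be taken from manufacturers' data. The 70% threshold is likewise an assumption.

```python
RATED_LIFE_H = 20_000.0
END_OUTPUT = 0.55  # fraction of initial output at end of rated life (from the text)


def output_fraction(hours: float) -> float:
    """Relative light output under an assumed linear depreciation."""
    return 1.0 - (1.0 - END_OUTPUT) * hours / RATED_LIFE_H


def hours_to_threshold(threshold: float) -> float:
    """Burning hours until output drops to `threshold` (linear model)."""
    return (1.0 - threshold) / (1.0 - END_OUTPUT) * RATED_LIFE_H


print(f"{hours_to_threshold(0.70):.0f} h to reach 70% output")
```

Under the linear assumption, output reaches 70% after about 13,300 hours.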

Metal halide lamps

The colour and light output of mercury discharge lamps can be improved by adding different metals to the mercury arc. The dose for each lamp is small, and for accurate dosing it is more convenient to handle the metals in powder form as halides. The halides break down as the lamp warms up and release the metal.

A metal halide lamp can use a number of different metals, each of which gives off a specific characteristic colour. These include:

- dysprosium—broad blue-green

- indium—narrow blue

- lithium—narrow red

- scandium—broad blue-green

- sodium—narrow yellow

- thallium—narrow green

- tin—broad orange-red

There is no standard mixture of metals, so metal halide lamps from different manufacturers may not be compatible in appearance or operating performance. For lamps with the lower wattage ratings, 35 to 150 W, there is closer physical and electrical compatibility with a common standard.

Metal halide lamps require control gear, but the lack of compatibility means that it is necessary to match each combination of lamp and gear to ensure correct starting and running conditions.

Low-pressure sodium lamps

The arc tube is similar in size to the fluorescent tube but is made of special ply glass with an inner sodium-resistant coating. The arc tube is formed in a narrow "U" shape and is contained in an outer vacuum jacket to ensure thermal stability. During starting, the lamps give a strong red glow from the neon gas fill.

The characteristic radiation from low-pressure sodium vapour is monochromatic yellow. This is close to the peak sensitivity of the human eye, and low-pressure sodium lamps are the most efficient lamps available, at nearly 200 lumens/watt. However, their applications are limited to places where colour discrimination is unimportant, such as trunk roads, underpasses and residential streets.

In many situations these lamps are being replaced by high-pressure sodium lamps, whose smaller size offers better optical control, particularly for roadway lighting where there is growing concern over excessive sky glow.

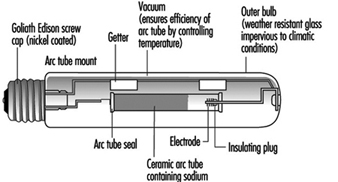

High-pressure sodium lamps

These lamps are similar to high-pressure mercury lamps but offer better efficacy (over 100 lumens/watt) and excellent lumen maintenance. The reactive nature of sodium requires the arc tube to be made of translucent polycrystalline alumina, as glass and quartz are unsuitable. The outer glass bulb contains a vacuum to prevent arcing and oxidation. There is no UV radiation from the sodium discharge, so phosphor coatings are of no value. Some bulbs are frosted or coated to diffuse the light source. See figure 8.

Figure 8. High-pressure sodium lamp construction

As the sodium pressure is increased, the radiation becomes a broad band around the yellow peak, and the appearance is golden white. However, as the pressure increases, the efficiency decreases. There are currently three separate types of high-pressure sodium lamps available, as shown in table 5.

Table 5. Types of high-pressure sodium lamp

| Lamp type (code) | Colour temperature (K) | Efficacy (lumens/watt) | Life (hours) |
|---|---|---|---|
| Standard | 2,000 | 110 | 24,000 |
| Deluxe | 2,200 | 80 | 14,000 |
| White (SON) | 2,500 | 50 | |

Generally the standard lamps are used for exterior lighting, deluxe lamps for industrial interiors, and White SON for commercial/display applications.
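The efficacy figures translate directly into the lamp power needed for a given light output (power = lumens ÷ efficacy). The sketch uses the efficacies in table 5 together with the figure of nearly 200 lumens/watt quoted above for low-pressure sodium; the 20,000-lumen requirement is hypothetical, and control gear losses are ignored.

```python
# Efficacies in lumens per watt: table 5, plus ~200 lm/W for low-pressure sodium.
EFFICACY_LM_PER_W = {
    "low-pressure sodium": 200.0,
    "high-pressure sodium, standard": 110.0,
    "high-pressure sodium, deluxe": 80.0,
    "high-pressure sodium, White SON": 50.0,
}


def lamp_power_w(lumens: float, efficacy: float) -> float:
    """Lamp power needed to produce `lumens` at a given efficacy."""
    return lumens / efficacy


TARGET_LUMENS = 20_000.0  # hypothetical requirement
for lamp, eff in EFFICACY_LM_PER_W.items():
    print(f"{lamp:32s} {lamp_power_w(TARGET_LUMENS, eff):6.1f} W")
```

The White SON lamp needs four times the lamp power of low-pressure sodium for the same lumens, which is the price paid for its much better colour.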

Dimming of Discharge Lamps

The high-pressure lamps cannot be satisfactorily dimmed, as changing the lamp power changes the pressure and thus the fundamental characteristics of the lamp.

Fluorescent lamps can be dimmed using high-frequency supplies, typically generated within the electronic control gear. The colour appearance remains very constant. In addition, the light output is approximately proportional to the lamp power, so reducing the light output saves electrical power. By linking the light output of the lamps to the prevailing level of natural daylight, a near-constant level of illuminance can be provided in an interior.
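Since light output is roughly proportional to lamp power, daylight-linked control can be sketched as topping up the daylight to a target illuminance. The 500 lux target, the illuminance delivered by the lamps at full output and the 10% minimum dimming level are hypothetical, and output is treated as exactly proportional to power.

```python
def dimming_level(daylight_lux: float, target_lux: float = 500.0,
                  full_output_lux: float = 500.0, min_level: float = 0.10) -> float:
    """Fraction of full lamp output (and, approximately, power) required.

    The electric lighting supplies only the shortfall between daylight and
    the target, clamped between an assumed minimum dimming level and 100%.
    """
    shortfall = max(target_lux - daylight_lux, 0.0)
    level = shortfall / full_output_lux
    return min(max(level, min_level), 1.0)


for daylight in (0.0, 200.0, 450.0, 800.0):
    print(f"daylight {daylight:5.0f} lx -> lamps at {dimming_level(daylight):.0%}")
```

With 200 lux of daylight the lamps run at 60%; from 450 lux upwards they sit at the assumed 10% floor rather than switching off.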

Indoor Air: Ionization

Ionization is one of the techniques used to eliminate particulate matter from air. Ions act as condensation nuclei for small particles which, as they stick together, grow and precipitate.

As a general rule, and in the absence of additional ion sources, the concentration of ions in closed indoor spaces is lower than in open spaces. This has led to the belief that increasing the concentration of negative ions in indoor air improves air quality.

Some studies based on epidemiological data and on planned experimental research assert that increasing the concentration of negative ions in work environments improves worker efficiency and enhances the mood of employees, while positive ions have an adverse effect. However, parallel studies show that the existing data on the effects of negative ionization on workers' productivity are inconsistent and contradictory. It therefore seems that it is still not possible to state unequivocally that generating negative ions is really beneficial.

Natural Ionization

Individual gas molecules in the atmosphere can become negatively ionized by gaining an electron, or positively ionized by losing one. For this to happen, a molecule must first acquire a certain amount of energy, usually called the ionization energy of that particular molecule. Many natural sources of energy, of both cosmic and terrestrial origin, can produce this phenomenon: background radiation in the atmosphere, solar electromagnetic radiation (especially ultraviolet), cosmic rays, atomization of liquids (such as the spray from waterfalls), the movement of great masses of air over the earth's surface, electrical phenomena such as lightning and storms, the process of combustion, and radioactive substances.

The electrical configurations of the ions formed in this way are not yet completely known, but they seem to include carbonate ions and H⁺, H₃O⁺, O⁺, N⁺, OH⁻, H₂O⁻ and O₂⁻. These ionized molecules can aggregate by adsorption onto suspended particles (fog, silica and other contaminants). Ions are classified according to their size and their mobility. Mobility is defined as the drift velocity per unit electric field, expressed in units such as centimetres per second per volt per centimetre, or, more compactly,

$$\frac{\text{cm/s}}{\text{V/cm}} = \frac{\text{cm}^2}{\text{V}\cdot\text{s}}$$
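Mobility links field strength to drift speed (speed = mobility × field). As an illustration, the sketch takes one representative mobility from each range in table 1 and an assumed field of 100 V/cm, a hypothetical value chosen only to make the numbers easy to compare.

```python
def drift_speed_cm_s(mobility_cm2_per_v_s: float, field_v_per_cm: float) -> float:
    """Drift speed in cm/s: (cm^2/(V*s)) x (V/cm) = cm/s."""
    return mobility_cm2_per_v_s * field_v_per_cm


FIELD = 100.0  # V/cm, hypothetical
for label, mobility in (("small ion", 1.0), ("intermediate ion", 0.01), ("slow ion", 0.003)):
    print(f"{label:16s} {drift_speed_cm_s(mobility, FIELD):7.2f} cm/s")
```

In this field a small ion drifts at 100 cm/s, while a slow ion aggregated on particulate matter moves at only 0.3 cm/s.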

Atmospheric ions tend to disappear by recombination. Their half-life depends on their size and is inversely proportional to their mobility. Negative ions are, on average, smaller, with a half-life of several minutes, while positive ions are larger, with a half-life of about half an hour. The spatial charge is the ratio of the concentration of positive ions to the concentration of negative ions. This ratio is normally greater than one and depends on factors such as climate, location and season of the year. In living spaces it can fall below one. Characteristics of ions are given in table 1.
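The difference in half-lives explains why positive ions come to dominate. The sketch applies simple exponential decay with an assumed 5-minute half-life for negative ions (the text says only "several minutes") and the 30-minute half-life given for positive ions, starting from assumed concentrations of 600 positive and 500 negative ions/cm³. Treating recombination as first-order decay with no further ion production is a simplifying assumption.

```python
HALF_LIFE_MIN = {"negative": 5.0, "positive": 30.0}  # negative value assumed


def remaining(initial_per_cm3: float, half_life_min: float, minutes: float) -> float:
    """Ions left after `minutes`, assuming first-order decay and no new production."""
    return initial_per_cm3 * 0.5 ** (minutes / half_life_min)


def polarity_ratio(positive: float, negative: float) -> float:
    """Ratio of positive to negative ion concentration (the spatial charge)."""
    return positive / negative


for t in (0.0, 10.0, 30.0):
    pos = remaining(600.0, HALF_LIFE_MIN["positive"], t)
    neg = remaining(500.0, HALF_LIFE_MIN["negative"], t)
    print(f"t={t:4.0f} min  +{pos:6.1f}  -{neg:6.1f}  ratio {polarity_ratio(pos, neg):5.1f}")
```

Under these assumptions the ratio climbs from 1.2 at the start to roughly 38 after 30 minutes.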

Table 1. Characteristics of ions of given mobilities and diameter

| Mobility (cm²/(V·s)) | Diameter (µm) | Characteristics |
|---|---|---|
| 3.0–0.1 | 0.001–0.003 | Small, high mobility, short life |
| 0.1–0.005 | 0.003–0.03 | Intermediate, slower than small ions |
| 0.005–0.002 | >0.03 | Slow ions, aggregates on particulate matter |

Artificial Ionization

Human activity modifies the natural ionization of air. Artificial ionization can be caused by industrial and nuclear processes and fires. Particulate matter suspended in air favours the formation of Langevin ions (ions aggregated on particulate matter). Electrical radiators increase the concentration of positive ions considerably. Air-conditioners also increase the spatial charge of indoor air.

Workplaces have machinery that produces positive and negative ions simultaneously, such as machines that are important local sources of mechanical energy (presses, spinning and weaving machines), electrical energy (motors, electronic printers, copiers, high-voltage lines and installations), electromagnetic energy (cathode-ray screens, televisions, computer monitors) or radioactive energy (cobalt-60 therapy). Such equipment creates environments with higher concentrations of positive ions, because positive ions have a longer half-life than negative ions.

Environmental Concentrations of Ions

Concentrations of ions vary with environmental and meteorological conditions. In areas with little pollution, such as forests and mountains, or at high altitudes, the concentration of small ions increases; near radioactive sources, waterfalls or river rapids, concentrations can reach thousands of small ions per cubic centimetre. Near the sea and when humidity is high, on the other hand, there is an excess of large ions. In general, the average concentrations of negative and positive ions in clean air are 500 and 600 ions per cubic centimetre, respectively.

Some winds can carry great concentrations of positive ions—the Föhn in Switzerland, the Santa Ana in the United States, the Sirocco in North Africa, the Chinook in the Rocky Mountains and the Sharav in the Middle East.

In workplaces without significant ionizing factors there is often an accumulation of large ions, especially in hermetically sealed places and in mines. The concentration of negative ions decreases significantly in indoor spaces and in contaminated or dusty areas. There are several reasons why it also decreases in indoor spaces with air-conditioning systems. One is that negative ions become trapped in air ducts and air filters or are attracted to positively charged surfaces. Cathode-ray screens and computer monitors, for example, are positively charged, creating in their immediate vicinity a microclimate deficient in negative ions. Air filtration systems designed for "clean rooms", where particulate contamination must be kept to a minimum, also seem to eliminate negative ions.

On the other hand, excess humidity condenses ions, while a lack of humidity creates dry environments with large electrostatic charges. These charges accumulate on plastics and synthetic fibres, both in the room and on people.

Ion Generators

Generators ionize air by delivering a large amount of energy. This energy may come from a radioactive source (such as tritium, a beta emitter) or from an electrical source, by applying a high voltage to a sharply pointed electrode. Radioactive sources are forbidden in most countries because of the secondary hazards of radioactivity.

Electric generators consist of a pointed electrode surrounded by a crown; the electrode is supplied with a negative voltage of several thousand volts, and the crown is grounded. Negative ions are expelled, while positive ions are attracted to the generator. The quantity of negative ions generated increases in proportion to the applied voltage and to the number of electrodes. Generators with more electrodes operating at a lower voltage are safer, because above about 8,000 to 10,000 volts a generator produces not only ions but also ozone and some nitrogen oxides. The ions are dispersed by electrostatic repulsion.

The migration of ions depends on the electric field between the emission point and the surrounding objects. The concentration of ions around a generator is not uniform and decreases significantly with distance. Fans built into the equipment enlarge the zone of ionic dispersion. The active elements of generators must be cleaned periodically to ensure proper functioning.

The generators may also be based on atomizing water, on thermoelectric effects or on ultraviolet rays. There are many different types and sizes of generators. They may be installed on ceilings and walls or may be placed anywhere if they are the small, portable type.

Measuring Ions

Ion measuring devices consist of two conductive plates placed 0.75 cm apart, to which a variable voltage is applied. The collected ions are measured with a picoammeter, which records the intensity of the resulting current. Varying the voltage allows concentrations of ions with different mobilities to be measured. The concentration of ions (N) is calculated from the current using the following formula:

$$N = \frac{I}{q\,V\,A}$$

where I is the current in amperes, V is the speed of the air flow, q is the charge of a univalent ion (1.6 × 10⁻¹⁹ C) and A is the effective area of the collector plates. It is assumed that all ions carry a single charge and that all are retained in the collector. This method has limitations due to background current and the influence of other factors, such as humidity and static electric fields.
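The formula can be checked numerically. The sketch works in SI units and converts the result to ions per cubic centimetre; the current, air speed and area are a hypothetical reading.

```python
ELEMENTARY_CHARGE_C = 1.6e-19  # charge of a univalent ion, as given in the text


def ion_concentration_per_cm3(current_a: float, air_speed_m_s: float,
                              collector_area_m2: float) -> float:
    """N = I / (q * V * A), converted from ions/m^3 to ions/cm^3.

    Assumes every ion carries a single charge and all ions are collected.
    """
    per_m3 = current_a / (ELEMENTARY_CHARGE_C * air_speed_m_s * collector_area_m2)
    return per_m3 / 1e6


# Hypothetical reading: 0.1 pA, air at 1 m/s, 10 cm^2 effective area.
print(f"{ion_concentration_per_cm3(1e-13, 1.0, 1e-3):.0f} ions/cm^3")
```

With these inputs the result is 625 ions/cm³, the same order of magnitude as the clean-air concentrations given earlier.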

The Effects of Ions on the Body

Small negative ions are the ones which are supposed to have the greatest biological effect because of their greater mobility. High concentrations of negative ions can kill or block the growth of microscopic pathogens, but no adverse effects on humans have been described.

Some studies suggest that exposure to high concentrations of negative ions produces biochemical and physiological changes in some people that have a relaxing effect, reduce tension and headaches, improve alertness and shorten reaction time. These effects could be due to the suppression of the neurotransmitter serotonin (5-HT) and of histamine in environments loaded with negative ions; these factors could affect a hypersensitive segment of the population. However, other studies reach different conclusions on the effects of negative ions on the body. The benefits of negative ionization therefore remain open to debate, and further study is needed before the matter is decided.

Heating and Air-Conditioning Systems

With regard to heating, a person's needs depend on many factors, which can be classified into two main groups: those related to the surroundings and those related to the person. Factors related to the surroundings include geography (latitude and altitude), climate, the exposure of the space the person occupies and the barriers that protect it against the external environment. Human factors include the worker's energy expenditure, the pace of work or amount of exertion the job requires, the clothing worn against the cold, and personal preferences.

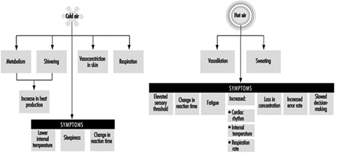

In many regions heating is needed only seasonally, but during the cold season it is indispensable. Cold environmental conditions affect health and mental and physical efficiency, reduce precision and may occasionally increase the risk of accidents. The goal of a heating system is to maintain pleasant thermal conditions that prevent or minimize adverse health effects.

The physiological characteristics of the human body allow it to withstand great variations in thermal conditions. Human beings maintain their thermal balance through the hypothalamus, which receives signals from thermal receptors in the skin; body temperature is kept between 36 and 38 °C, as shown in figure 1.

Figure 1. Thermoregulatory mechanisms in human beings

Heating systems need very precise control mechanisms, especially where workers carry out their tasks sitting or in a fixed position that does not stimulate blood circulation to the extremities. Where the work allows some mobility, control may be somewhat less precise. Finally, where work takes place in abnormally adverse conditions, such as refrigerated chambers or very cold climates, supporting measures may be taken to protect particular tissues, to limit the time spent under those conditions, or to supply heat through electrical systems incorporated into the worker's garments.

Definition and Description of the Thermal Environment

A requirement of any properly functioning heating or air-conditioning system is that it should allow the variables that define the thermal environment to be controlled within specified limits for each season of the year. These variables are:

- air temperature

- average temperature of the inside surfaces that define the space

- air humidity

- speed and uniformity of air flow within the space

It has been shown that there is a very simple relation between the air and wall-surface temperatures of a given space and the temperatures that produce the same perceived thermal sensation in a different room. This relation can be expressed as

$$T_{eat} = \frac{T_{dbt} + T_{ast}}{2}$$

where

Teat = equivalent air temperature for a given thermal sensation

Tdbt = air temperature measured with a dry bulb thermometer

Tast = measured average surface temperature of the walls.

For example, if the air and the walls of a given space are both at 20 °C, the equivalent temperature is 20 °C, and the perceived sensation of warmth will be the same as in a room where the walls average 15 °C and the air is at 25 °C, because that room has the same equivalent temperature.
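The worked example corresponds to taking the mean of the air and average wall temperatures. A minimal sketch of that relation:

```python
def equivalent_air_temperature(t_dbt: float, t_ast: float) -> float:
    """T_eat = (T_dbt + T_ast) / 2, all in deg C."""
    return (t_dbt + t_ast) / 2.0


# The two rooms from the example feel the same.
room_a = equivalent_air_temperature(20.0, 20.0)
room_b = equivalent_air_temperature(25.0, 15.0)
print(room_a, room_b)
```

Both calls return 20.0, so the warmer air in the second room compensates exactly for its cooler walls.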

Properties of humid air

In implementing an air-conditioning plan, three things must be taken into consideration: the thermodynamic state of the air in the given space, of the outside air, and of the air to be supplied to the room. A system capable of transforming the thermodynamic properties of the supply air is then selected on the basis of the existing thermal loads of each component. We therefore need to know the thermodynamic properties of humid air, which are as follows:

Tdbt = the dry bulb temperature reading, measured with a thermometer insulated from radiated heat

Tdpt = the dew point temperature reading. This is the temperature at which unsaturated moist air, when cooled, reaches saturation

W = the humidity ratio, which ranges from zero for dry air to Ws for saturated air. It is expressed as kg of water vapour per kg of dry air

RH = relative humidity

t* = thermodynamic wet bulb temperature

v = specific volume of the mixture of air and water vapour (expressed in m³/kg). It is the inverse of density

H = enthalpy, kcal/kg of dry air and associated water vapour.

Of the above variables, only three are directly measurable: the dry bulb temperature, the dew point temperature and the relative humidity. A fourth variable, the wet bulb temperature, is also experimentally measurable. It is measured with a thermometer whose bulb has been moistened and which is moved, typically with the aid of a sling, through unsaturated moist air at a moderate speed. It differs only slightly (about 3 per cent) from the thermodynamic wet bulb temperature, so either can be used in calculations without significant error.

Psychrometric diagram

The properties defined in the previous section are functionally related and can be portrayed graphically. This graphic representation is called a psychrometric diagram, a simplified graph derived from the tables of the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE). Enthalpy and humidity ratio are shown on the coordinate axes of the diagram; the lines drawn show dry bulb and wet bulb temperatures, relative humidity and specific volume. With the psychrometric diagram, knowing any two of these variables makes it possible to derive all the properties of humid air.
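The "any two properties" idea can also be shown numerically. Starting from dry bulb temperature and relative humidity, the sketch derives the water vapour pressure, the humidity ratio W and the dew point. It assumes the Magnus approximation for saturation vapour pressure over water (coefficients 6.1094 hPa, 17.625 and 243.04 °C) and the relation W = 0.622 p_w / (p − p_w) at standard sea-level pressure; these are approximations chosen for the sketch, not the ASHRAE tables themselves.

```python
import math

A_HPA, B, C_DEG = 6.1094, 17.625, 243.04  # Magnus coefficients (approximation)
P_ATM_HPA = 1013.25  # standard sea-level pressure, assumed


def saturation_pressure_hpa(t_c: float) -> float:
    """Saturation vapour pressure over water (Magnus formula)."""
    return A_HPA * math.exp(B * t_c / (C_DEG + t_c))


def humid_air_state(t_dbt: float, rh_percent: float, p_hpa: float = P_ATM_HPA) -> dict:
    """Derive W (kg water/kg dry air) and dew point from dry bulb temperature and RH."""
    p_w = rh_percent / 100.0 * saturation_pressure_hpa(t_dbt)
    w = 0.622 * p_w / (p_hpa - p_w)
    gamma = math.log(p_w / A_HPA)  # invert the Magnus formula for the dew point
    t_dpt = C_DEG * gamma / (B - gamma)
    return {"p_w_hpa": p_w, "W": w, "t_dpt": t_dpt}


print(humid_air_state(20.0, 50.0))
```

For 20 °C and 50% relative humidity this gives a humidity ratio of about 0.0072 kg/kg and a dew point of about 9.3 °C.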

Conditions for thermal comfort

Thermal comfort is defined as a state of mind that expresses satisfaction with the thermal environment. It is influenced by physical and physiological factors.

It is difficult to prescribe general conditions for thermal comfort because conditions differ between work situations; different conditions may even be required at the same workstation when it is occupied by different people. A technical norm for the thermal conditions required for comfort cannot be applied to all countries because of differences in climate and in customs governing dress.

Studies with workers doing light manual labour have established a series of criteria for temperature, air speed and humidity, which are shown in table 1 (Bedford and Chrenko 1974).

Table 1. Proposed norms for environmental factors

| Environmental factor | Proposed norm |
|---|---|
| Air temperature | 21 °C |
| Average radiant temperature | ≥ 21 °C |
| Relative humidity | 30–70% |
| Speed of air flow | 0.05–0.1 metre/second |
| Temperature gradient (from head to foot) | ≤ 2.5 °C |

The above factors are interrelated: a lower air temperature is required where thermal radiation is high, and a higher air temperature is required when the speed of the air flow is higher.

Generally, the corrections that should be carried out are the following:

The air temperature should be increased:

- if the speed of the air flow is high

- for sedentary work situations

- if clothing used is light

- when people are acclimatized to high indoor temperatures.

The air temperature should be decreased:

- if the work involves heavy manual labour

- when warm clothing is used.

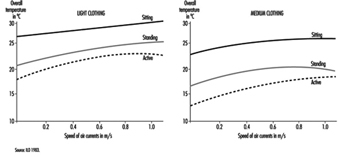

For a good sensation of thermal comfort, the most desirable situation is one where the radiant temperature of the surroundings is slightly higher than the air temperature, and where radiant heat arrives equally from all directions and is not excessive overhead. The increase in temperature with height should be minimized, keeping the feet warm without creating too great a thermal load overhead. The speed of the air flow also has an important bearing on the sensation of thermal comfort. There are diagrams that give recommended air speeds as a function of the activity being carried out and the kind of clothing worn (figure 2).

Figure 2. Comfort zones based on readings of overall temperatures and speed of air currents

In some countries there are norms for minimum environmental temperatures, but optimal values have not yet been established. Typically, the maximum value for air temperature is given as 20 °C. With recent technical improvements, the measurement of thermal comfort has become more complex. Many indices have appeared, including the effective temperature (ET) and corrected effective temperature (CET) indices, the index of caloric overload, the Heat Stress Index (HSI), the wet bulb globe temperature (WBGT) and Fanger's predicted mean vote (PMV), among others. The WBGT index can be used to determine the rest intervals required, as a function of the intensity of the work performed, to prevent thermal stress under working conditions. This is discussed more fully in the chapter Heat and Cold.
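For reference, the WBGT index is a weighted sum of temperature readings. The sketch uses the weightings of ISO 7243 (without solar load: 0.7 × natural wet bulb + 0.3 × globe temperature; with solar load: 0.7 × natural wet bulb + 0.2 × globe + 0.1 × air temperature). The readings are hypothetical.

```python
from typing import Optional


def wbgt(t_nwb: float, t_globe: float, t_air: Optional[float] = None) -> float:
    """Wet bulb globe temperature (deg C) using the ISO 7243 weightings.

    t_nwb: natural wet bulb temperature; t_globe: black globe temperature;
    t_air: dry bulb air temperature, only needed with solar load (outdoors in sun).
    """
    if t_air is None:
        return 0.7 * t_nwb + 0.3 * t_globe
    return 0.7 * t_nwb + 0.2 * t_globe + 0.1 * t_air


# Hypothetical readings, deg C.
print(f"indoors:  {wbgt(22.0, 30.0):.1f}")
print(f"outdoors: {wbgt(24.0, 45.0, t_air=32.0):.1f}")
```

The resulting WBGT value is what the rest-interval tables referred to above are entered with.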

Thermal comfort zone in a psychrometric diagram

The range on the psychrometric diagram corresponding to conditions under which an adult perceives thermal comfort has been carefully studied and is defined in the ASHRAE norm on the basis of the effective temperature. This is the temperature, measured with a dry bulb thermometer, of a uniform room at 50 per cent relative humidity in which people would exchange the same amount of heat by radiation, convection and evaporation as they would at the humidity of the given environment. ASHRAE defines the effective temperature scale for a clothing level of 0.6 clo (the clo is a unit of clothing insulation: 1 clo corresponds to the insulation provided by a normal set of clothes, equivalent to a thermal insulation of 0.155 m²·K/W), an air movement of 0.2 m/s (still air), and a one-hour exposure at a sedentary activity level of 1 met (the met is a unit of metabolic rate equal to 50 kcal/(m²·h)). This comfort zone is shown in figure 2 and can be used for thermal environments where the mean radiant temperature is approximately equal to the dry bulb air temperature and the air speed is below 0.2 m/s, for people dressed in light clothing and carrying out sedentary activities.
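The clo and met units convert to SI quantities as sketched below, using 1 clo = 0.155 m²·K/W and 1 met = 50 kcal/(m²·h). The body surface area of 1.8 m² is an assumed reference value for an adult; with it, 1, 1.5 and 2 met come out at about 105, 157 and 209 W, close to the metabolic rates listed in table 2.

```python
CLO_TO_M2K_PER_W = 0.155  # 1 clo = 0.155 m^2.K/W
KCAL_TO_J = 4186.8
BODY_AREA_M2 = 1.8  # assumed adult body surface area


def clo_to_si(clo: float) -> float:
    """Clothing insulation in m^2.K/W."""
    return clo * CLO_TO_M2K_PER_W


def met_to_w_per_m2(met: float) -> float:
    """Metabolic rate per unit body surface, using 1 met = 50 kcal/(m^2.h)."""
    return met * 50.0 * KCAL_TO_J / 3600.0


def met_to_watts(met: float, area_m2: float = BODY_AREA_M2) -> float:
    """Total metabolic heat production for the assumed body surface area."""
    return met_to_w_per_m2(met) * area_m2


print(f"0.6 clo = {clo_to_si(0.6):.3f} m2.K/W")
for met in (1.0, 1.5, 2.0):
    print(f"{met} met = {met_to_w_per_m2(met):.2f} W/m2 = {met_to_watts(met):.0f} W")
```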

Comfort formula: The Fanger method

The method developed by PO Fanger is based on a formula that relates ambient air temperature, mean radiant temperature, relative air speed, water vapour pressure in the ambient air, activity level and thermal resistance of the clothing worn. An example derived from the comfort formula is shown in table 2, which can be used in practice to obtain a comfortable temperature as a function of the clothing worn, the metabolic rate of the activity carried out and the speed of the air flow.

Table 2. Temperatures of thermal comfort (°C), at 50% relative humidity (based on the formula by PO Fanger)

| Metabolism (W) | clo | Radiant temperature 20 °C | Radiant temperature 25 °C | Radiant temperature 30 °C |
|---|---|---|---|---|
| 105 | Clothing (clo) | | | |
| | 0.5 | 30.5 | 29.0 | 27.0 |
| | 1.5 | 30.6 | 29.5 | 28.3 |
| | Clothing (clo) | | | |
| | 0.5 | 26.7 | 24.3 | 22.7 |
| | 1.5 | 27.0 | 25.7 | 24.5 |
| 157 | Clothing (clo) | | | |
| | 0.5 | 23.0 | 20.7 | 18.3 |
| | 1.5 | 23.5 | 23.3 | 22.0 |
| | Clothing (clo) | | | |
| | 0.5 | 16.0 | 14.0 | 11.5 |
| | 1.5 | 18.3 | 17.0 | 15.7 |
| 210 | Clothing (clo) | | | |
| | 0.5 | 15.0 | 13.0 | 7.4 |
| | 1.5 | 18.3 | 17.0 | 16.0 |
| | Clothing (clo) | | | |
| | 0.5 | −1.5 | −3.0 | / |
| | 1.5 | −5.0 | 2.0 | 1.0 |

Heating Systems

The design of any heating system should be directly related to the work to be performed and to the characteristics of the building in which it will be installed. In industrial buildings it is rare to find projects that consider the heating needs of the workers, often because the processes and workstations have not yet been defined. Systems are normally designed in very general terms, considering only the thermal loads that will exist in the building and the amount of heat needed to maintain a given temperature inside it, without regard to heat distribution, the location of workstations or other more specific factors. This leads to design deficiencies in some buildings that translate into problems such as cold spots, draughts and an insufficient number of heating elements.

When planning a building, the following considerations should be addressed in order to obtain a good heating system:

- Consider the proper placement of insulation to save energy and to minimize temperature gradients within the building.

- Reduce as much as possible the infiltration of cold air into the building to minimize temperature variations in the work areas.

- Control air pollution through localized extraction of air and ventilation by displacement or diffusion.

- Control the emissions of heat due to the processes used in the building and their distribution in occupied areas of the building.

When heating is provided by burners without exhaust chimneys, special attention should be paid to the inhalation of combustion products. When the fuel is heating oil, gas or coke, combustion normally produces sulphur dioxide, nitrogen oxides, carbon monoxide and other combustion products. Occupational exposure limits exist for these compounds, and their concentrations should be controlled, especially in enclosed spaces, where these gases can build up rapidly and the efficiency of combustion can decrease.
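Why concentrations can rise quickly in an enclosed space can be illustrated with the standard well-mixed ("one-box") room model, a simplification that is not taken from this article: a gas released at a constant rate G into a room of volume V ventilated with clean air at a rate Q approaches the level G/Q, following C(t) = G/Q + (C0 − G/Q)·exp(−Qt/V). The emission rate, room volume and airflows below are hypothetical, and the model ignores the loss of combustion efficiency mentioned above.

```python
import math


def concentration_ppm(gen_m3_h, vent_m3_h, volume_m3, hours, c0_ppm=0.0):
    """Well-mixed ("one-box") room model for a gas emitted at a constant rate.

    gen_m3_h:  emission rate of the pure gas (m3/h), e.g. CO from a heater
    vent_m3_h: clean outdoor-air supply rate (m3/h); 0 means a sealed room
    volume_m3: room volume (m3); hours: elapsed time (h); c0_ppm: initial level
    Assumes perfect mixing, clean supply air and no other removal processes.
    """
    if vent_m3_h == 0:
        # No ventilation: the gas simply accumulates linearly with time.
        return c0_ppm + gen_m3_h * hours / volume_m3 * 1e6
    steady_ppm = gen_m3_h / vent_m3_h * 1e6           # level approached as t grows
    decay = math.exp(-vent_m3_h * hours / volume_m3)   # fraction of the gap remaining
    return steady_ppm + (c0_ppm - steady_ppm) * decay


# Hypothetical unvented heater releasing 0.01 m3/h of CO into a 300 m3 room.
for vent in (0.0, 150.0, 600.0):
    levels = [concentration_ppm(0.01, vent, 300.0, h) for h in (1, 4, 8)]
    print(f"ventilation {vent:>5.0f} m3/h:", ", ".join(f"{c:.0f} ppm" for c in levels))
```

With no ventilation the level grows without limit; with ventilation it levels off at G/Q, which is why the outdoor-air supply matters as much as the emission rate.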

Planning a heating system always involves balancing several considerations, such as low initial cost, flexibility of service, energy efficiency and applicability. For example, using electricity during off-peak hours, when it may be cheaper, could make electric heaters cost-effective. Chemical heat-storage systems (using sodium sulphide, for example), whose stored heat can then be used during peak demand, are another option. It is also possible to combine several different systems and operate them together so that costs are optimized.

Installing heaters that can use gas or heating oil is especially attractive. Using electricity directly means consuming high-grade energy, which may be costly in many cases but may provide the necessary flexibility in certain circumstances. Heat pumps, cogeneration systems and other systems that take advantage of waste heat can offer very advantageous solutions from a financial point of view; their drawback is a high initial cost.
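The trade-off between a higher initial cost and lower running costs described in the last two paragraphs is often screened with a simple payback calculation. A minimal sketch; all figures are hypothetical (in any currency), and the model deliberately ignores interest, maintenance and energy-price changes.

```python
import math


def simple_payback_years(extra_capital_cost, annual_cost_old, annual_cost_new):
    """Years for the yearly running-cost savings to repay the extra investment.

    Ignores interest, maintenance and energy-price changes; returns math.inf
    if the new option does not lower running costs.
    """
    savings = annual_cost_old - annual_cost_new
    if savings <= 0:
        return math.inf
    return extra_capital_cost / savings


def total_cost(capital_cost, annual_cost, years):
    """Undiscounted cost of owning and running a system for a number of years."""
    return capital_cost + annual_cost * years


# Hypothetical figures: a heat pump costing 32,000 versus a 20,000 boiler,
# cutting the yearly heating bill from 5,000 to 2,000.
print(simple_payback_years(12_000, 5_000, 2_000))                    # 4.0
print(total_cost(20_000, 5_000, 10), total_cost(32_000, 2_000, 10))  # 70000 52000
```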

The current trend in heating and air-conditioning systems is toward optimal operation combined with energy savings. New systems therefore include sensors and controls distributed throughout the heated spaces, so that heat is supplied only when it is needed to achieve thermal comfort. These systems can save up to 30% of heating energy costs. Figure 3 shows some of the available heating systems, indicating their advantages and drawbacks.

Figure 3. Characteristics of the most common heating systems employed in worksites
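The control idea behind systems with distributed sensors, supplying heat only when and where it is needed, can be sketched as an on/off zone thermostat with a dead band whose setpoint is lowered while the zone is unoccupied. This is an illustrative assumption about one simple control strategy; the setpoints and dead band are hypothetical, not values from the article.

```python
from dataclasses import dataclass


@dataclass
class ZoneThermostat:
    """On/off zone controller with a dead band and an unoccupied setback.

    All setpoints are hypothetical examples, not values from the text.
    """
    occupied_setpoint: float = 21.0  # deg C while the zone is in use
    setback_setpoint: float = 16.0   # deg C while the zone is empty
    dead_band: float = 1.0           # deg C; prevents rapid on/off cycling
    heating_on: bool = False

    def update(self, measured_c: float, occupied: bool) -> bool:
        """Return True if heat should be supplied to the zone right now."""
        setpoint = self.occupied_setpoint if occupied else self.setback_setpoint
        half_band = self.dead_band / 2
        if measured_c < setpoint - half_band:
            self.heating_on = True
        elif measured_c > setpoint + half_band:
            self.heating_on = False
        # Inside the dead band the previous state is kept (hysteresis).
        return self.heating_on


zone = ZoneThermostat()
readings = [(20.0, True), (21.0, True), (21.6, True), (18.0, False), (15.0, False)]
print([zone.update(temp, occ) for temp, occ in readings])
# [True, True, False, False, True]
```

The energy saving comes from the setback: an unoccupied zone at 18 °C receives no heat, whereas a fixed 21 °C setpoint would keep heating it.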

Air-conditioning systems

Experience shows that industrial environments kept close to the comfort zone during the summer months have higher productivity, fewer accidents and lower absenteeism and, in general, contribute to better human relations. In retail establishments, hospitals and buildings with large floor areas, air conditioning is usually needed to provide thermal comfort when outside conditions require it.

In certain industrial environments where external conditions are very severe, the heating system is aimed more at providing enough heat to prevent adverse health effects than at providing a comfortable thermal environment. The maintenance and proper use of air-conditioning equipment should be carefully monitored, especially when it is equipped with humidifiers, because these can become sources of microbial contamination that may pose risks to human health.

Today, ventilation and climate-control systems tend to meet a building's heating, cooling and air-conditioning needs jointly, often with the same installation. Refrigerating systems can be classified in several ways.

Depending on the configuration of the system they may be classified in the following way:

- Hermetically sealed units, charged with refrigerant at the factory, which can be opened and recharged only in a repair shop. These are the air-conditioning units normally used in offices, dwellings and the like.

- Factory-built semi-hermetic units of medium size, larger than domestic units, which can be repaired through openings designed for that purpose.

- Segmented (split) systems for warehouses and large premises, whose parts and components are clearly differentiated and physically separate (the compressor and condenser are physically separate from the evaporator and expansion valve). They are used in large office buildings, hotels, hospitals, large factories and industrial buildings.

Depending on the coverage they provide, they can be classified in the following way:

- Single-zone systems: one air-handling unit serves several rooms in the same building at the same time. The rooms served have similar heating, cooling and ventilation needs and are regulated by a common control (a thermostat or similar device). Such systems may be unable to provide an adequate level of comfort in every room if the design did not take into account differences in thermal load between rooms in the same zone. This can happen when the occupancy of a room increases, or when lighting or other heat sources not foreseen in the original design, such as computers or copying machines, are added. Discomfort may also result from seasonal changes in the solar radiation a room receives, or from solar gain shifting from one room to another during the day.

- Multiple-zone systems: these can supply different zones with air at different temperatures and humidities by heating, cooling, humidifying or dehumidifying the air for each zone and by varying the airflow. Although they generally share a common, centralized air-cooling unit (compressor, evaporator, etc.), they are equipped with a variety of elements, such as airflow control devices, heating coils and humidifiers. These systems can adjust the conditions in a room to its specific thermal loads, which they detect by means of sensors distributed in the rooms they serve.

Depending on the flow of air that these systems pump into the building, they are classified in the following way:

- Constant volume (CV): these systems pump a constant flow of air into each room. Temperature changes are effected by heating or cooling the air. These systems frequently mix a percentage of outside air with recycled indoor air.

- Variable air volume (VAV): these systems maintain thermal comfort by varying the amount of heated or cooled air supplied to each space. Although they work primarily by varying the airflow, they can also be combined with systems that change the temperature of the air they supply to the room.
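The difference between the two approaches follows from the sensible heat balance of a room, q = ρ·c_p·V̇·(t_room − t_supply): a constant-volume system keeps the airflow V̇ fixed and changes the supply temperature, while a VAV system keeps the supply temperature roughly fixed and changes V̇. A minimal sketch for cooling, assuming standard air properties (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg K)) and hypothetical loads and flows.

```python
RHO_AIR = 1.2     # kg/m3, density of air near room temperature (assumed)
CP_AIR = 1005.0   # J/(kg K), specific heat of dry air (assumed)


def cv_supply_temp_c(cooling_load_w, room_c, airflow_m3_s):
    """Constant volume: supply-air temperature that removes the load at a fixed flow."""
    return room_c - cooling_load_w / (RHO_AIR * CP_AIR * airflow_m3_s)


def vav_airflow_m3_s(cooling_load_w, room_c, supply_c):
    """Variable air volume: airflow that removes the load at a fixed supply temperature."""
    if supply_c >= room_c:
        raise ValueError("supply air must be colder than the room for cooling")
    return cooling_load_w / (RHO_AIR * CP_AIR * (room_c - supply_c))


# Hypothetical room kept at 24 deg C, at full and at half cooling load.
for load in (1206.0, 603.0):
    t_cv = cv_supply_temp_c(load, 24.0, 0.10)    # CV: flow fixed at 0.10 m3/s
    q_vav = vav_airflow_m3_s(load, 24.0, 14.0)   # VAV: supply fixed at 14 deg C
    print(f"load {load:.0f} W -> CV supply {t_cv:.1f} deg C, VAV flow {q_vav:.3f} m3/s")
```

Halving the load raises the CV supply temperature from 14 to 19 °C, whereas the VAV system halves its airflow from 0.100 to 0.050 m³/s.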

The problems that most frequently affect these systems are excessive heating or cooling, if the system is not adjusted to respond to variations in thermal load, and insufficient ventilation, if the system does not introduce a minimum amount of outside air to renew the circulating indoor air. The latter creates stale indoor environments in which air quality deteriorates.
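The ventilation shortfall can be seen in a system that mixes a fixed fraction of outside air into a variable supply flow: when the supply airflow is turned down at part load, the outside air delivered falls with it. A sketch with hypothetical numbers; a real minimum outdoor-air requirement would come from an applicable ventilation standard.

```python
def outdoor_air_m3_h(supply_m3_h, outdoor_fraction):
    """Outside air actually delivered when a fixed fraction of the supply is outdoor air."""
    return supply_m3_h * outdoor_fraction


def required_outdoor_fraction(required_outdoor_m3_h, supply_m3_h):
    """Outdoor-air fraction needed to meet a requirement at the current supply flow."""
    return min(1.0, required_outdoor_m3_h / supply_m3_h)


required = 20 * 20.0   # hypothetical: 20 occupants x 20 m3/h each = 400 m3/h
for supply in (2000.0, 1200.0, 800.0):   # design flow, then two part-load flows
    delivered = outdoor_air_m3_h(supply, 0.25)
    status = "OK" if delivered >= required else "SHORTFALL"
    print(f"supply {supply:.0f} m3/h: outdoor air {delivered:.0f} m3/h ({status}), "
          f"fraction needed {required_outdoor_fraction(required, supply):.2f}")
```

Keeping ventilation adequate at part load therefore requires raising the outdoor-air fraction as the supply flow drops.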

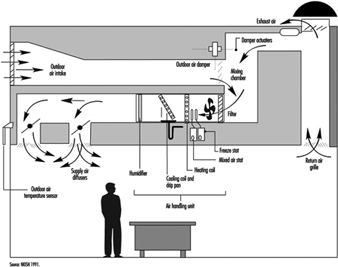

The basic elements of all air-conditioning systems are (see also figure 4):

- Units to retain solid matter, usually bag filters or electrostatic precipitators.

- Air heating or cooling units: in summer, heat is removed by exchange with chilled water or refrigerant fluids, or by forced ventilation; in winter, heat is supplied by electric coils or by combustion.

- Units to control humidity: in winter, humidity can be added by injecting water vapour directly or by evaporating water; in summer, it can be removed by refrigerated coils that condense excess moisture from the air, or by a chilled-water system in which moist air passes through a curtain of water droplets colder than the dew point of the air.

Figure 4. Simplified schematic of air-conditioning system
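Summer dehumidification by a cold coil or water spray works only if the cold surface or water is below the dew point of the air. The dew point can be estimated with the Magnus approximation; the coefficients used (a = 17.62, b = 243.12 °C, a common choice over liquid water) and the example conditions are assumptions, not values from this article.

```python
import math

# Magnus coefficients over liquid water (a common choice, assumed here).
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # deg C


def dew_point_c(air_c, rh_percent):
    """Approximate dew point (deg C) from dry-bulb temperature and relative humidity."""
    gamma = math.log(rh_percent / 100.0) + MAGNUS_A * air_c / (MAGNUS_B + air_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def will_dehumidify(cold_surface_c, air_c, rh_percent):
    """True if a coil or water spray at cold_surface_c is below the air's dew point."""
    return cold_surface_c < dew_point_c(air_c, rh_percent)


# Hypothetical summer conditions: 26 deg C air at 60% RH.
print(f"dew point {dew_point_c(26.0, 60.0):.1f} deg C")                       # 17.6
print(will_dehumidify(12.0, 26.0, 60.0), will_dehumidify(20.0, 26.0, 60.0))  # True False
```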

Ventilation Criteria for Nonindustrial Buildings

One of the chief functions of a building in which nonindustrial activities are carried out (offices, schools, dwellings, etc.) is to provide the occupants with a healthy and comfortable environment in which to work. The quality of this environment depends largely on whether the building's ventilation and climate-control systems are adequately designed and maintained and function properly.

These systems must therefore provide acceptable thermal conditions (temperature and humidity) and acceptable indoor air quality. To do so, they should achieve a suitable mix of outside and indoor air and should use filtration and cleaning systems capable of removing pollutants found in the indoor environment.

The idea that clean outdoor air is necessary for well-being in indoor spaces has been expressed since the eighteenth century. Benjamin Franklin recognized that air in a room is healthier if it is provided with natural ventilation by opening the windows. The idea that providing great quantities of outside air could help reduce the risk of contagion for illnesses like tuberculosis gained currency in the nineteenth century.

Studies carried out during the 1930s showed that, in order to dilute human biological effluvia to concentrations that would not cause discomfort due to odours, the volume of new outside air required for a room is between 17 and 30 cubic metres per hour per occupant.

In its standard No. 62, issued in 1973, the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommended a minimum flow of 34 cubic metres of outside air per hour per occupant to control odours. An absolute minimum of 8.5 m3/hr/occupant was recommended to prevent carbon dioxide from exceeding 2,500 ppm, which is half the exposure limit set for industrial settings.
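The link between the 8.5 m3/hr/occupant minimum and the 2,500 ppm carbon dioxide level can be checked with a steady-state mass balance for a well-mixed space, C_in = C_out + G/Q. The sketch assumes a CO2 generation rate of 0.31 L/min per sedentary adult and an outdoor level of 350 ppm; both are typical textbook assumptions, not values given in this article.

```python
def indoor_co2_ppm(outdoor_air_m3_h_per_person, co2_l_min_per_person=0.31,
                   outdoor_co2_ppm=350.0):
    """Steady-state indoor CO2 (ppm) for a given outdoor-air rate per occupant.

    Mass balance for a well-mixed space: C_in = C_out + G / Q.
    co2_l_min_per_person: exhaled CO2 (assumed 0.31 L/min, sedentary adult)
    outdoor_co2_ppm: CO2 concentration of the outside air (assumed)
    """
    generation_m3_h = co2_l_min_per_person * 60 / 1000   # L/min -> m3/h
    return outdoor_co2_ppm + generation_m3_h / outdoor_air_m3_h_per_person * 1e6


EXPOSURE_LIMIT_PPM = 5000   # implied by the text: 2,500 ppm is half the industrial limit

for rate in (8.5, 25.5, 34.0):
    c = indoor_co2_ppm(rate)
    print(f"{rate:>4} m3/h per occupant -> about {c:.0f} ppm "
          f"({c / EXPOSURE_LIMIT_PPM:.0%} of {EXPOSURE_LIMIT_PPM} ppm)")
```

Under these assumptions 8.5 m³/h per occupant gives roughly 2,500 ppm, consistent with the rationale stated in the standard.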

In its standard No. 90, issued in 1975 in the middle of an energy crisis, the same organization adopted the absolute minimum mentioned above, temporarily setting aside the need for higher ventilation rates to dilute pollutants such as tobacco smoke, biological effluvia and so forth.

In its standard No. 62 (1981) ASHRAE rectified this omission and established its recommendation as 34 m3/hr/occupant for areas where smoking is permitted and 8.5 m3/hr/occupant in areas where smoking is forbidden.

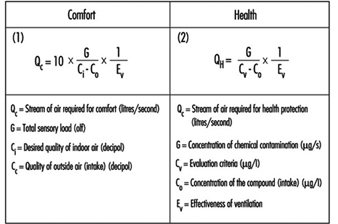

The most recent standard published by ASHRAE, also No. 62 (1989), established a minimum of 25.5 m3/hr/occupant for occupied indoor spaces, regardless of whether smoking is permitted. It also recommends increasing this value when the air brought into the building is not adequately mixed in the breathing zone or when unusual sources of pollution are present in the building.
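The per-occupant rates quoted in this section appear to be metric conversions of round values in cubic feet per minute (5, 15 and 20 cfm), the unit used in the ASHRAE standards; that reading is our own and is checked below. The sketch also multiplies a per-occupant rate by occupancy to give a room's outdoor-air requirement; the 12-person office is hypothetical.

```python
CFM_IN_M3_H = 0.028316846592 * 60   # 1 cubic foot per minute = about 1.699 m3/h
LPS_IN_M3_H = 3.6                   # 1 litre per second = 3.6 m3/h


def m3h_to_cfm(q_m3_h):
    """Convert an airflow from m3/h to cubic feet per minute."""
    return q_m3_h / CFM_IN_M3_H


def m3h_to_lps(q_m3_h):
    """Convert an airflow from m3/h to litres per second."""
    return q_m3_h / LPS_IN_M3_H


def room_outdoor_air_m3_h(occupants, per_occupant_m3_h=25.5):
    """Outdoor air for a room at a per-occupant rate (default: the 1989 value)."""
    return occupants * per_occupant_m3_h


for rate in (8.5, 25.5, 34.0):
    print(f"{rate} m3/h = {m3h_to_cfm(rate):.1f} cfm = {m3h_to_lps(rate):.1f} L/s")
print(room_outdoor_air_m3_h(12))   # hypothetical 12-person office: 306.0 m3/h
```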