27. Biological Monitoring

Chapter Editor: Robert Lauwerys

Table of Contents

Tables and Figures

General Principles

Vito Foà and Lorenzo Alessio

Quality Assurance

D. Gompertz

Metals and Organometallic Compounds

P. Hoet and Robert Lauwerys

Organic Solvents

Masayuki Ikeda

Genotoxic Chemicals

Marja Sorsa

Pesticides

Marco Maroni and Adalberto Ferioli

Tables

1. ACGIH, DFG & other limit values for metals

2. Examples of chemicals & biological monitoring

3. Biological monitoring for organic solvents

4. Genotoxicity of chemicals evaluated by IARC

5. Biomarkers & some cell/tissue samples & genotoxicity

6. Human carcinogens, occupational exposure & cytogenetic end points

8. Exposure from production & use of pesticides

9. Acute OP toxicity at different levels of ACHE inhibition

10. Variations of ACHE & PCHE & selected health conditions

11. Cholinesterase activities of unexposed healthy people

12. Urinary alkyl phosphates & OP pesticides

13. Urinary alkyl phosphates measurements & OP

14. Urinary carbamate metabolites

15. Urinary dithiocarbamate metabolites

16. Proposed indices for biological monitoring of pesticides

17. Recommended biological limit values (as of 1996)

28. Epidemiology and Statistics

Chapter Editors: Franco Merletti, Colin L. Soskolne and Paolo Vineis

Table of Contents

Tables and Figures

Epidemiological Method Applied to Occupational Health and Safety

Franco Merletti, Colin L. Soskolne and Paolo Vineis

Exposure Assessment

M. Gerald Ott

Summary Worklife Exposure Measures

Colin L. Soskolne

Measuring Effects of Exposures

Shelia Hoar Zahm

Case Study: Measures

Franco Merletti, Colin L. Soskolne and Paolo Vineis

Options in Study Design

Sven Hernberg

Validity Issues in Study Design

Annie J. Sasco

Impact of Random Measurement Error

Paolo Vineis and Colin L. Soskolne

Statistical Methods

Annibale Biggeri and Mario Braga

Causality Assessment and Ethics in Epidemiological Research

Paolo Vineis

Case Studies Illustrating Methodological Issues in the Surveillance of Occupational Diseases

Jung-Der Wang

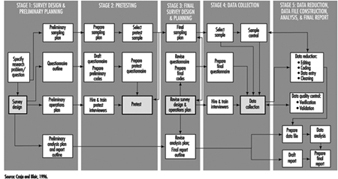

Questionnaires in Epidemiological Research

Steven D. Stellman and Colin L. Soskolne

Asbestos Historical Perspective

Lawrence Garfinkel

Tables

1. Five selected summary measures of worklife exposure

2. Measures of disease occurrence

3. Measures of association for a cohort study

4. Measures of association for case-control studies

5. General frequency table layout for cohort data

6. Sample layout of case-control data

7. Layout case-control data - one control per case

8. Hypothetical cohort of 1950 individuals to T2

9. Indices of central tendency & dispersion

10. A binomial experiment & probabilities

11. Possible outcomes of a binomial experiment

12. Binomial distribution, 15 successes/30 trials

13. Binomial distribution, p = 0.25; 30 trials

14. Type II error & power; x = 12, n = 30, a = 0.05

15. Type II error & power; x = 12, n = 40, a = 0.05

16. 632 workers exposed to asbestos 20 years or longer

17. O/E number of deaths among 632 asbestos workers

29. Ergonomics

Chapter Editors: Wolfgang Laurig and Joachim Vedder

Table of Contents

Tables and Figures

Overview

Wolfgang Laurig and Joachim Vedder

Goals, Principles and Methods

The Nature and Aims of Ergonomics

William T. Singleton

Analysis of Activities, Tasks and Work Systems

Véronique De Keyser

Ergonomics and Standardization

Friedhelm Nachreiner

Checklists

Pranab Kumar Nag

Physical and Physiological Aspects

Anthropometry

Melchiorre Masali

Muscular Work

Juhani Smolander and Veikko Louhevaara

Postures at Work

Ilkka Kuorinka

Biomechanics

Frank Darby

General Fatigue

Étienne Grandjean

Fatigue and Recovery

Rolf Helbig and Walter Rohmert

Psychological Aspects

Mental Workload

Winfried Hacker

Vigilance

Herbert Heuer

Mental Fatigue

Peter Richter

Organizational Aspects of Work

Work Organization

Eberhard Ulich and Gudela Grote

Sleep Deprivation

Kazutaka Kogi

Work Systems Design

Workstations

Roland Kadefors

Tools

T.M. Fraser

Controls, Indicators and Panels

Karl H. E. Kroemer

Information Processing and Design

Andries F. Sanders

Designing for Everyone

Designing for Specific Groups

Joke H. Grady-van den Nieuwboer

Case Study: The International Classification of Functional Limitation in People

Cultural Differences

Houshang Shahnavaz

Elderly Workers

Antoine Laville and Serge Volkoff

Workers with Special Needs

Joke H. Grady-van den Nieuwboer

Diversity and Importance of Ergonomics--Two Examples

System Design in Diamond Manufacturing

Issachar Gilad

Disregarding Ergonomic Design Principles: Chernobyl

Vladimir M. Munipov

Tables

1. Basic anthropometric core list

2. Fatigue & recovery dependent on activity levels

3. Rules of combination effects of two stress factors on strain

4. Differentiating among several negative consequences of mental strain

5. Work-oriented principles for production structuring

6. Participation in organizational context

7. User participation in the technology process

8. Irregular working hours & sleep deprivation

9. Aspects of advance, anchor & retard sleeps

10. Control movements & expected effects

11. Control-effect relations of common hand controls

12. Rules for arrangement of controls

30. Occupational Hygiene

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Goals, Definitions and General Information

Berenice I. Ferrari Goelzer

Recognition of Hazards

Linnéa Lillienberg

Evaluation of the Work Environment

Lori A. Todd

Occupational Hygiene: Control of Exposures Through Intervention

James Stewart

The Biological Basis for Exposure Assessment

Dick Heederik

Occupational Exposure Limits

Dennis J. Paustenbach

Tables

1. Hazards of chemical, biological & physical agents

2. Occupational exposure limits (OELs) - various countries

31. Personal Protection

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Overview and Philosophy of Personal Protection

Robert F. Herrick

Eye and Face Protectors

Kikuzi Kimura

Foot and Leg Protection

Toyohiko Miura

Head Protection

Isabelle Balty and Alain Mayer

Hearing Protection

John R. Franks and Elliott H. Berger

Protective Clothing

S. Zack Mansdorf

Respiratory Protection

Thomas J. Nelson

Tables

1. Transmittance requirements (ISO 4850-1979)

2. Scales of protection - gas-welding & braze-welding

3. Scales of protection - oxygen cutting

4. Scales of protection - plasma arc cutting

5. Scales of protection - electric arc welding or gouging

6. Scales of protection - plasma direct arc welding

7. Safety helmet: ISO Standard 3873-1977

8. Noise Reduction Rating of a hearing protector

9. Computing the A-weighted noise reduction

10. Examples of dermal hazard categories

11. Physical, chemical & biological performance requirements

12. Material hazards associated with particular activities

13. Assigned protection factors from ANSI Z88.2 (1992)

32. Record Systems and Surveillance

Chapter Editor: Steven D. Stellman

Table of Contents

Tables and Figures

Occupational Disease Surveillance and Reporting Systems

Steven B. Markowitz

Occupational Hazard Surveillance

David H. Wegman and Steven D. Stellman

Surveillance in Developing Countries

David Koh and Kee-Seng Chia

Development and Application of an Occupational Injury and Illness Classification System

Elyce Biddle

Risk Analysis of Nonfatal Workplace Injuries and Illnesses

John W. Ruser

Case Study: Worker Protection and Statistics on Accidents and Occupational Diseases - HVBG, Germany

Martin Butz and Burkhard Hoffmann

Case Study: Wismut - A Uranium Exposure Revisited

Heinz Otten and Horst Schulz

Measurement Strategies and Techniques for Occupational Exposure Assessment in Epidemiology

Frank Bochmann and Helmut Blome

Case Study: Occupational Health Surveys in China

Tables

1. Angiosarcoma of the liver - world register

2. Occupational illness, US, 1986 versus 1992

3. US Deaths from pneumoconiosis & pleural mesothelioma

4. Sample list of notifiable occupational diseases

5. Illness & injury reporting code structure, US

6. Nonfatal occupational injuries & illnesses, US 1993

7. Risk of occupational injuries & illnesses

8. Relative risk for repetitive motion conditions

9. Workplace accidents, Germany, 1981-93

10. Grinders in metalworking accidents, Germany, 1984-93

11. Occupational disease, Germany, 1980-93

12. Infectious diseases, Germany, 1980-93

13. Radiation exposure in the Wismut mines

14. Occupational diseases in Wismut uranium mines 1952-90

33. Toxicology

Chapter Editor: Ellen K. Silbergeld

Table of Contents

Tables and Figures

Introduction

Ellen K. Silbergeld, Chapter Editor

General Principles of Toxicology

Definitions and Concepts

Bo Holmberg, Johan Hogberg and Gunnar Johanson

Toxicokinetics

Dušan Djuríc

Target Organ And Critical Effects

Marek Jakubowski

Effects Of Age, Sex And Other Factors

Spomenka Telišman

Genetic Determinants Of Toxic Response

Daniel W. Nebert and Ross A. McKinnon

Mechanisms of Toxicity

Introduction And Concepts

Philip G. Watanabe

Cellular Injury And Cellular Death

Benjamin F. Trump and Irene K. Berezesky

Genetic Toxicology

R. Rita Misra and Michael P. Waalkes

Immunotoxicology

Joseph G. Vos and Henk van Loveren

Target Organ Toxicology

Ellen K. Silbergeld

Toxicology Test Methods

Biomarkers

Philippe Grandjean

Genetic Toxicity Assessment

David M. DeMarini and James Huff

In Vitro Toxicity Testing

Joanne Zurlo

Structure Activity Relationships

Ellen K. Silbergeld

Regulatory Toxicology

Toxicology In Health And Safety Regulation

Ellen K. Silbergeld

Principles Of Hazard Identification - The Japanese Approach

Masayuki Ikeda

The United States Approach to Risk Assessment Of Reproductive Toxicants and Neurotoxic Agents

Ellen K. Silbergeld

Approaches To Hazard Identification - IARC

Harri Vainio and Julian Wilbourn

Appendix - Overall Evaluations of Carcinogenicity to Humans: IARC Monographs Volumes 1-69 (836)

Carcinogen Risk Assessment: Other Approaches

Cees A. van der Heijden

Tables

- Examples of critical organs & critical effects

- Basic effects of possible multiple interactions of metals

- Haemoglobin adducts in workers exposed to aniline & acetanilide

- Hereditary, cancer-prone disorders & defects in DNA repair

- Examples of chemicals that exhibit genotoxicity in human cells

- Classification of tests for immune markers

- Examples of biomarkers of exposure

- Pros & cons of methods for identifying human cancer risks

- Comparison of in vitro systems for hepatotoxicity studies

- Comparison of SAR & test data: OECD/NTP analyses

- Regulation of chemical substances by laws, Japan

- Test items under the Chemical Substance Control Law, Japan

- Chemical substances & the Chemical Substances Control Law

- Selected major neurotoxicity incidents

- Examples of specialized tests to measure neurotoxicity

- Endpoints in reproductive toxicology

- Comparison of low-dose extrapolations procedures

- Frequently cited models in carcinogen risk characterization

General Fatigue

This article is adapted from the 3rd edition of the Encyclopaedia of Occupational Health and Safety.

The two concepts of fatigue and rest are familiar to all from personal experience. The word “fatigue” is used to denote very different conditions, all of which cause a reduction in work capacity and resistance. This very varied use of the concept has resulted in considerable confusion, and some clarification of current ideas is necessary. For a long time, physiology has distinguished between muscle fatigue and general fatigue. The former is an acute, painful phenomenon localized in the muscles; the latter is characterized by a sense of diminishing willingness to work. This article is concerned only with general fatigue, which may also be called “psychic fatigue” or “nervous fatigue”, and with the rest that it necessitates.

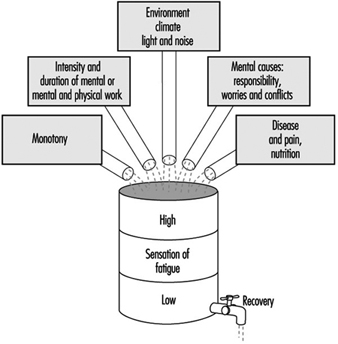

General fatigue may be due to quite different causes, the most important of which are shown in figure 1. The effect is as if, during the course of the day, all the various stresses experienced accumulate within the organism, gradually producing a feeling of increasing fatigue. This feeling prompts the decision to stop work; its effect is that of a physiological prelude to sleep.

Figure 1. Diagrammatic presentation of the cumulative effect of the everyday causes of fatigue

Fatigue is a salutary sensation if one can lie down and rest. However, if one disregards this feeling and forces oneself to continue working, the feeling of fatigue increases until it becomes distressing and finally overwhelming. This daily experience clearly demonstrates the biological significance of fatigue, which plays a part in sustaining life similar to that played by other sensations such as thirst, hunger and fear.

Rest is represented in figure 1 as the emptying of a barrel. The phenomenon of rest can take place normally if the organism remains undisturbed or if at least one essential part of the body is not subjected to stress. This explains the decisive part played on working days by all work breaks, from the short pause during work to the nightly sleep. The simile of the barrel illustrates how necessary it is for normal living to reach a certain equilibrium between the total load borne by the organism and the sum of the possibilities for rest.

Neurophysiological interpretation of fatigue

The progress of neurophysiology during the last few decades has greatly contributed to a better understanding of the phenomena triggered off by fatigue in the central nervous system.

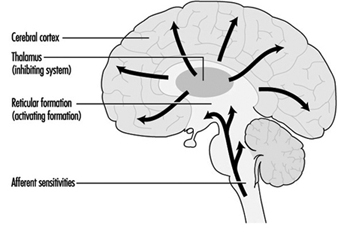

The physiologist Hess was the first to observe that electrical stimulation of certain diencephalic structures, and especially of certain structures of the medial nucleus of the thalamus, gradually produced an inhibiting effect which showed itself in a deterioration in the capacity for reaction and in a tendency to sleep. If the stimulation was continued for a certain time, general relaxation was followed by sleepiness and finally by sleep. It was later proved that, starting from these structures, an active inhibition may extend to the cerebral cortex, where all conscious phenomena are centred. This is reflected not only in behaviour, but also in the electrical activity of the cerebral cortex. Other experiments have also succeeded in initiating inhibitions from other subcortical regions.

The conclusion which can be drawn from all these studies is that there are structures located in the diencephalon and mesencephalon which represent an effective inhibiting system and which trigger off fatigue with all its accompanying phenomena.

Inhibition and activation

Numerous experiments performed on animals and humans have shown that, in both, the general disposition to react depends not only on this system of inhibition but also, essentially, on a system functioning in an antagonistic manner, known as the ascending reticular activating system. We know from experiments that the reticular formation contains structures that control the degree of wakefulness, and consequently the general disposition to react. Nervous links exist between these structures and the cerebral cortex, where the activating influences are exerted on consciousness. Moreover, the activating system receives stimulation from the sensory organs. Other nervous connections transmit impulses from the cerebral cortex (the area of perception and thought) to the activating system. On the basis of these neurophysiological concepts, it can be established that external stimuli, as well as influences originating in the areas of consciousness, may, in passing through the activating system, stimulate a disposition to react.

In addition, many other investigations make it possible to conclude that stimulation of the activating system frequently also spreads from the vegetative centres and causes the organism to orient itself towards the expenditure of energy: towards work, struggle, flight and so on (ergotropic conversion of the internal organs). Conversely, it appears that stimulation of the inhibiting system within the sphere of the vegetative nervous system causes the organism to tend towards rest, reconstitution of its energy reserves and phenomena of assimilation (trophotropic conversion).

By synthesis of all these neurophysiological findings, the following conception of fatigue can be established: the state and feeling of fatigue are conditioned by the functional reaction of the consciousness in the cerebral cortex, which is, in turn, governed by two mutually antagonistic systems—the inhibiting system and the activating system. Thus, the disposition of humans to work depends at each moment on the degree of activation of the two systems: if the inhibiting system is dominant, the organism will be in a state of fatigue; when the activating system is dominant, it will exhibit an increased disposition to work.

This psychophysiological conception of fatigue makes it possible to understand certain of its symptoms which are sometimes difficult to explain. Thus, for example, a feeling of fatigue may disappear suddenly when some unexpected outside event occurs or when emotional tension develops. It is clear in both these cases that the activating system has been stimulated. Conversely, if the surroundings are monotonous or work seems boring, the functioning of the activating system is diminished and the inhibiting system becomes dominant. This explains why fatigue appears in a monotonous situation without the organism being subjected to any workload.

Figure 2 depicts diagrammatically the notion of the mutually antagonistic systems of inhibition and activation.

Figure 2. Diagrammatic presentation of the control of disposition to work by means of inhibiting and activating systems

Clinical fatigue

It is a matter of common experience that pronounced fatigue occurring day after day will gradually produce a state of chronic fatigue. The feeling of fatigue is then intensified and comes on not only in the evening after work but already during the day, sometimes even before the start of work. A feeling of malaise, frequently of an emotive nature, accompanies this state. The following symptoms are often observed in persons suffering from fatigue: heightened psychic emotivity (antisocial behaviour, incompatibility), tendency to depression (unmotivated anxiety), and lack of energy with loss of initiative. These psychic effects are often accompanied by an unspecific malaise and manifest themselves by psychosomatic symptoms: headaches, vertigo, cardiac and respiratory functional disturbances, loss of appetite, digestive disorders, insomnia, etc.

In view of the tendency towards morbid symptoms that accompany chronic fatigue, it may justly be called clinical fatigue. There is a tendency towards increased absenteeism, and particularly to more absences for short periods. This would appear to be caused both by the need for rest and by increased morbidity. The state of chronic fatigue occurs particularly among persons exposed to psychic conflicts or difficulties. It is sometimes very difficult to distinguish the external and internal causes. In fact, it is almost impossible to distinguish cause and effect in clinical fatigue: a negative attitude towards work, superiors or workplace may just as well be the cause of clinical fatigue as the result.

Research has shown that switchboard operators and supervisory personnel employed in telecommunications services exhibited a significant increase in physiological symptoms of fatigue after their work (visual reaction time, flicker fusion frequency, dexterity tests). Medical investigations revealed that in these two groups of workers there was a significant increase in neurotic conditions, irritability, difficulty in sleeping and a chronic feeling of lassitude, by comparison with a similar group of women employed in the technical branches of the postal, telephone and telegraphic services. The accumulation of symptoms was not always due to a negative attitude on the part of the women affected towards their job or their working conditions.

Preventive Measures

There is no panacea for fatigue, but much can be done to alleviate the problem by attention to general working conditions and the physical environment at the workplace. For example, much can be achieved by the correct arrangement of hours of work and the provision of adequate rest periods and suitable canteens and restrooms; adequate paid holidays should also be given to workers. The ergonomic study of the workplace can also help to reduce fatigue by ensuring that seats, tables and workbenches are of suitable dimensions and that the workflow is correctly organized. In addition, noise control, air-conditioning, heating, ventilation and lighting may all help to delay the onset of fatigue in workers.

Monotony and tension may also be alleviated by controlled use of colour and decoration in the surroundings, intervals of music and sometimes breaks for physical exercises for sedentary workers. Training of workers, and in particular of supervisory and management staff, also plays an important part.

Target Organ Toxicology

The study and characterization of chemicals and other agents for toxic properties is often undertaken on the basis of specific organs and organ systems. In this chapter, two targets have been selected for in-depth discussion: the immune system and the gene. These examples were chosen to represent a complex target organ system and a molecular target within cells. For more comprehensive discussion of the toxicology of target organs, the reader is referred to standard toxicology texts such as Casarett and Doull, and Hayes. The International Programme on Chemical Safety (IPCS) has also published several criteria documents on target organ toxicology, by organ system.

Target organ toxicology studies are usually undertaken on the basis of information indicating the potential for specific toxic effects of a substance, either from epidemiological data or from general acute or chronic toxicity studies, or on the basis of special concerns to protect certain organ functions, such as reproduction or foetal development. In some cases, specific target organ toxicity tests are expressly mandated by statutory authorities, such as neurotoxicity testing under the US pesticides law (see “The United States approach to risk assessment of reproductive toxicants and neurotoxic agents”), and mutagenicity testing under the Japanese Chemical Substance Control Law (see “Principles of hazard identification: The Japanese approach”).

As discussed in “Target organ and critical effects,” the identification of a critical organ is based upon the detection of the organ or organ system which responds adversely first or at the lowest doses or exposures. This information is then used to design specific toxicology investigations or more defined toxicity tests that elicit more sensitive indications of intoxication in the target organ. Target organ toxicology studies may also be used to determine mechanisms of action, which are of use in risk assessment (see “The United States approach to risk assessment of reproductive toxicants and neurotoxic agents”).

Methods of Target Organ Toxicity Studies

Target organs may be studied by exposure of intact organisms and detailed analysis of function and histopathology in the target organ, or by in vitro exposure of cells, tissue slices, or whole organs maintained for short- or long-term periods in culture (see “Mechanisms of toxicity: Introduction and concepts”). In some cases, tissues from human subjects may also be available for target organ toxicity studies, and these may provide opportunities to validate assumptions of cross-species extrapolation. However, it must be kept in mind that such studies do not provide information on relative toxicokinetics.

In general, target organ toxicity studies share the following common characteristics: detailed histopathological examination of the target organ, including post mortem examination, tissue weight, and examination of fixed tissues; biochemical studies of critical pathways in the target organ, such as important enzyme systems; functional studies of the ability of the organ and cellular constituents to perform expected metabolic and other functions; and analysis of biomarkers of exposure and early effects in target organ cells.

Detailed knowledge of target organ physiology, biochemistry and molecular biology may be incorporated in target organ studies. For instance, because the synthesis and secretion of small-molecular-weight proteins is an important aspect of renal function, nephrotoxicity studies often include special attention to these parameters (IPCS 1991). Because cell-to-cell communication is a fundamental process of nervous system function, target organ studies in neurotoxicity may include detailed neurochemical and biophysical measurements of neurotransmitter synthesis, uptake, storage, release and receptor binding, as well as electrophysiological measurement of changes in membrane potential associated with these events.

A high degree of emphasis is being placed upon the development of in vitro methods for target organ toxicity, to replace or reduce the use of whole animals. Substantial advances in these methods have been achieved for reproductive toxicants (Heindel and Chapin 1993).

In summary, target organ toxicity studies are generally undertaken as a higher order test for determining toxicity. The selection of specific target organs for further evaluation depends upon the results of screening level tests, such as the acute or subchronic tests used by OECD and the European Union; some target organs and organ systems may be a priori candidates for special investigation because of concerns to prevent certain types of adverse health effects.

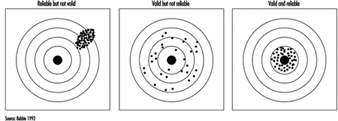

Validity Issues in Study Design

The Need for Validity

Epidemiology aims at providing an understanding of the disease experience in populations. In particular, it can be used to obtain insight into the occupational causes of ill health. This knowledge comes from studies conducted on groups of people having a disease by comparing them to people without that disease. Another approach is to examine what diseases people who work in certain jobs with particular exposures acquire and to compare these disease patterns to those of people not similarly exposed. These studies provide estimates of risk of disease for specific exposures. For information from such studies to be used for establishing prevention programmes, for the recognition of occupational diseases, and for those workers affected by exposures to be appropriately compensated, these studies must be valid.

Validity can be defined as the ability of a study to reflect the true state of affairs. A valid study is therefore one which measures correctly the association (either positive, negative or absent) between an exposure and a disease. It describes the direction and magnitude of a true risk. Two types of validity are distinguished: internal and external validity. Internal validity is a study’s ability to reflect what really happened among the study subjects; external validity reflects what could occur in the population.

Validity relates to the truthfulness of a measurement. Validity must be distinguished from precision of the measurement, which is a function of the size of the study and the efficiency of the study design.
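The distinction between validity and precision can be illustrated with a small simulation. The sketch below is only an illustration under invented assumptions: the true relative risk, the size of the systematic error and the spread of the random error are hypothetical values chosen for the example, and modelling bias as a constant added to each estimate is a deliberate simplification.

```python
import random
import statistics

def simulate_estimates(true_value, bias, noise_sd, n_studies, seed=0):
    """Return simulated estimates: true value + systematic bias + random error."""
    rng = random.Random(seed)
    return [true_value + bias + rng.gauss(0, noise_sd) for _ in range(n_studies)]

TRUE_RR = 2.0  # hypothetical true relative risk

# Valid but imprecise: no systematic error, large random error.
valid_imprecise = simulate_estimates(TRUE_RR, bias=0.0, noise_sd=0.5, n_studies=2000)
# Precise but invalid: small random error, constant systematic error.
precise_biased = simulate_estimates(TRUE_RR, bias=0.8, noise_sd=0.05, n_studies=2000)

for label, estimates in [("valid, imprecise", valid_imprecise),
                         ("precise, biased", precise_biased)]:
    mean = statistics.mean(estimates)
    spread = statistics.stdev(estimates)
    print(f"{label}: mean = {mean:.2f}, standard deviation = {spread:.2f}")
```

In this sketch the unbiased estimates scatter widely but centre on the true value, whereas the biased estimates cluster tightly around a wrong value. Increasing the number or size of studies narrows the scatter but does nothing to remove the systematic error.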

Internal Validity

A study is said to be internally valid when it is free from biases and therefore truly reflects the association between exposure and disease which exists among the study participants. An observed risk of disease in association with an exposure may indeed result from a real association and therefore be valid, but it may also reflect the influence of biases. A bias will give a distorted image of reality.

Three major types of biases, also called systematic errors, are usually distinguished:

- selection bias

- information or observation bias

- confounding

They will be presented briefly below, using examples from the occupational health setting.

Selection bias

Selection bias will occur when entry into the study is influenced by knowledge of the exposure status of the potential study participant. This problem is therefore encountered only when the disease has already occurred by the time the person enters the study. Typically, in the epidemiological setting, this will happen in case-control studies or in retrospective cohort studies. This means that a person will be more likely to be considered a case if it is known that he or she has been exposed. Three sets of circumstances may lead to such an event, which will also depend on the severity of the disease.

Self-selection bias

This can occur when people who know they have been exposed in the past to products known or believed to be harmful, and who are convinced their disease is the result of the exposure, consult a physician for symptoms which other people, not so exposed, might have ignored. This is particularly likely to happen for diseases which have few noticeable symptoms. An example may be early pregnancy loss or spontaneous abortion among female nurses handling drugs used for cancer treatment. These women are more aware than most of reproductive physiology and, by being concerned about their ability to have children, may be more likely to recognize or label as a spontaneous abortion what other women would only consider as a delay in the onset of menstruation. Another example from a retrospective cohort study, cited by Rothman (1986), involves a Centers for Disease Control study of leukaemia among troops who had been present during a US atomic test in Nevada. Of the troops present on the test site, 76% were traced and constituted the cohort. Of these, 82% were found by the investigators, but an additional 18% contacted the investigators themselves after hearing publicity about the study. Four cases of leukaemia were present among the 82% traced by CDC and four cases were present among the self-referred 18%. This strongly suggests that the investigators’ ability to identify exposed persons was linked to leukaemia risk.
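The size of the imbalance in the leukaemia example can be worked out from the percentages alone, because the (unstated) cohort size cancels out of the comparison. The following is a minimal sketch of that arithmetic; the function name is made up for this illustration and is not taken from the study.

```python
def case_proportion_ratio(cases_a, share_a, cases_b, share_b):
    """Ratio of case proportions (group a vs group b), given each group's share of the cohort."""
    # The cohort size N cancels:
    # (cases_a / (share_a * N)) / (cases_b / (share_b * N))
    return (cases_a / share_a) / (cases_b / share_b)

# Figures from the text: 4 cases among the self-referred 18% of the cohort,
# 4 cases among the 82% traced by the investigators.
ratio = case_proportion_ratio(4, 0.18, 4, 0.82)
print(f"Case proportion, self-referred vs traced: {ratio:.1f}-fold")
```

With equal numbers of cases in groups of such unequal size, the proportion of cases among the self-referred members is between four and five times that among the members traced by the investigators.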

Diagnostic bias

This will occur when the doctors are more likely to diagnose a given disease once they know to what the patient has been previously exposed. For example, when most paints were lead-based, a symptom of disease of the peripheral nerves called peripheral neuritis with paralysis was also known as painters’ “wrist drop”. Knowing the occupation of the patient made it easier to diagnose the disease even in its early stages, whereas the identification of the causal agent would be much more difficult in research participants not known to be occupationally exposed to lead.

Bias resulting from refusal to participate in a study

When people, either healthy or sick, are asked to participate in a study, several factors play a role in determining whether or not they will agree. Willingness to answer variably lengthy questionnaires, which at times inquire about sensitive issues, and even more so to give blood or other biological samples, may be determined by the degree of self-interest held by the person. Someone who is aware of past potential exposure may be ready to comply with this inquiry in the hope that it will help to find the cause of the disease, whereas someone who considers that they have not been exposed to anything dangerous, or who is not interested in knowing, may decline the invitation to participate in the study. This can lead to a selection of those people who will finally be the study participants as compared to all those who might have been.

Information bias

This is also called observation bias and concerns disease outcome in follow-up studies and exposure assessment in case-control studies.

Differential outcome assessment in prospective follow-up (cohort) studies

Two groups are defined at the start of the study: an exposed group and an unexposed group. Problems of diagnostic bias will arise if the search for cases differs between these two groups. For example, consider a cohort of people exposed to an accidental release of dioxin in a given industry. For the highly exposed group, an active follow-up system is set up with medical examinations and biological monitoring at regular intervals, whereas the rest of the working population receives only routine care. It is highly likely that more disease will be identified in the group under close surveillance, which would lead to a potential over-estimation of risk.

Differential losses in retrospective cohort studies

The reverse mechanism to that described in the preceding paragraph may occur in retrospective cohort studies. In these studies, the usual way of proceeding is to start with the files of all the people who have been employed in a given industry in the past, and to assess disease or mortality subsequent to employment. Unfortunately, in almost all studies files are incomplete, and the fact that a person is missing may be related either to exposure status or to disease status or to both. For example, in a recent study conducted in the chemical industry in workers exposed to aromatic amines, eight tumours were found in a group of 777 workers who had undergone cytological screening for urinary tumours. Altogether, only 34 records were found missing, corresponding to a 4.4% loss from the exposure assessment file, but for bladder cancer cases, exposure data were missing for two cases out of eight, or 25%. This shows that the files of people who became cases were more likely to become lost than the files of other workers. This may occur because of more frequent job changes within the company (which may be linked to exposure effects), resignation, dismissal or mere chance.
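The comparison of loss percentages in this example can be reproduced with a few lines of Python. This is a sketch under one stated assumption that the text does not confirm: the two missing case records are taken to be included among the 34 missing records. The function and variable names are illustrative only.

```python
def percent_missing(missing: int, total: int) -> float:
    """Share of records lost, expressed as a percentage."""
    return 100.0 * missing / total

workers, missing_records = 777, 34   # whole screened group (from the text)
cases, missing_case_records = 8, 2   # bladder cancer cases (from the text)

overall_loss = percent_missing(missing_records, workers)
case_loss = percent_missing(missing_case_records, cases)
# Assumption: the 2 missing case records are counted among the 34 missing records.
non_case_loss = percent_missing(missing_records - missing_case_records,
                                workers - cases)

print(f"overall {overall_loss:.1f}%, cases {case_loss:.1f}%, "
      f"non-cases {non_case_loss:.1f}%")
```

Under that assumption, records were lost about six times more often for cases than for other workers, which is what makes the loss differential rather than random.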

Differential assessment of exposure in case-control studies

In case-control studies, the disease has already occurred at the start of the study, and information will be sought on exposures in the past. Bias may result from the attitude of either the interviewer or the study participant to the investigation. Information is usually collected by trained interviewers who may or may not be aware of the hypothesis underlying the research. For example, in a population-based case-control study of bladder cancer conducted in a highly industrialized region, study staff may well be aware of the fact that certain chemicals, such as aromatic amines, are risk factors for bladder cancer. If they also know who has developed the disease and who has not, they may be likely to conduct more in-depth interviews with the participants who have bladder cancer than with the controls. They may insist on more detailed information on past occupations, searching systematically for exposure to aromatic amines, whereas for controls they may record occupations in a more routine way. The resulting bias is known as exposure suspicion bias.

The participants themselves may also be responsible for such bias. This is called recall bias to distinguish it from interviewer bias. Both have exposure suspicion as the mechanism for the bias. Persons who are sick may suspect an occupational origin to their disease and therefore will try to remember as accurately as possible all the dangerous agents to which they may have been exposed. In the case of handling undefined products, they may be inclined to recall the names of precise chemicals, particularly if a list of suspected products is made available to them. By contrast, controls may be less likely to go through the same thought process.

Confounding

Confounding exists when the association observed between exposure and disease is in part the result of a mixing of the effect of the exposure under study and another factor. Let us say, for example, that we are finding an increased risk of lung cancer among welders. We are tempted to conclude immediately that there is a causal association between exposure to welding fumes and lung cancer. However, we also know that smoking is by far the main risk factor for lung cancer. Therefore, if information is available, we begin checking the smoking status of welders and other study participants. We may find that welders are more likely to smoke than non-welders. In that situation, smoking is known to be associated with lung cancer and, at the same time, in our study smoking is also found to be associated with being a welder. In epidemiological terms, this means that smoking, linked both to lung cancer and to welding, is confounding the association between welding and lung cancer.

Interaction or effect modification

In contrast to all the issues listed above, namely selection, information and confounding, which are biases, interaction is not a bias due to problems in study design or analysis, but reflects reality and its complexity. An example of this phenomenon is the following: exposure to radon is a risk factor for lung cancer, as is smoking. In addition, smoking and radon exposure have different effects on lung cancer risk depending on whether they act together or in isolation. Most of the occupational studies on this topic have been conducted among underground miners and at times have provided conflicting results. Overall, there seem to be arguments in favour of an interaction of smoking and radon exposure in producing lung cancer. This means that lung cancer risk is increased by exposure to radon, even in non-smokers, but that the size of the risk increase from radon is much greater among smokers than among non-smokers. In epidemiological terms, we say that the effect is multiplicative. In contrast to confounding, described above, interaction needs to be carefully analysed and described in the analysis rather than simply controlled, as it reflects what is happening at the biological level and is not merely a consequence of poor study design. Its explanation leads to a more valid interpretation of the findings from a study.
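The difference between a multiplicative and an additive joint effect can be illustrated numerically. The relative risks used below are hypothetical values chosen for illustration and are not estimates from any study of miners; the function name is likewise an assumption of this sketch.

```python
def expected_joint_rr(rr_a: float, rr_b: float, model: str) -> float:
    """Expected relative risk for joint exposure under a simple interaction model."""
    if model == "multiplicative":
        return rr_a * rr_b
    if model == "additive":
        # Excess relative risks add: (rr_a - 1) + (rr_b - 1) + 1
        return rr_a + rr_b - 1.0
    raise ValueError(f"unknown model: {model}")

# Hypothetical relative risks, each compared with unexposed non-smokers.
rr_radon, rr_smoking = 2.0, 10.0

for model in ("multiplicative", "additive"):
    joint = expected_joint_rr(rr_radon, rr_smoking, model)
    # Excess relative risk attributable to radon, in non-smokers and in smokers.
    excess_nonsmokers = rr_radon - 1.0
    excess_smokers = joint - rr_smoking
    print(model, joint, excess_nonsmokers, excess_smokers)
```

With these illustrative values, radon adds the same excess in smokers and non-smokers under the additive model, whereas under the multiplicative model the excess among smokers is ten times larger.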

External Validity

This issue can be addressed only after ensuring that internal validity is secured. If we are convinced that the results observed in the study reflect associations which are real, we can ask ourselves whether or not we can extrapolate these results to the larger population from which the study participants themselves were drawn, or even to other populations which are identical or at least very similar. The most common question is whether results obtained for men also apply to women. For years, studies and, in particular, occupational epidemiological investigations have been conducted exclusively among men. Studies among chemists carried out in the 1960s and 1970s in the United States, United Kingdom and Sweden all found increased risks of specific cancers—namely leukaemia, lymphoma and pancreatic cancer. Based on what we knew of the effects of exposure to solvents and some other chemicals, we could already have deduced at the time that laboratory work also entailed carcinogenic risk for women. This in fact was shown to be the case when the first study among women chemists was finally published in the mid-1980s, which found results similar to those among men. It is worth noting that other excess cancers found were tumours of the breast and ovary, traditionally considered as being related only to endogenous factors or reproduction, but for which newly suspected environmental factors such as pesticides may play a role. Much more work needs to be done on occupational determinants of female cancers.

Strategies for a Valid Study

A perfectly valid study can never exist, but it is incumbent upon the researcher to try to avoid, or at least to minimize, as many biases as possible. This can often best be done at the study design stage, but can also be carried out during analysis.

Study design

Selection and information bias can be avoided only through the careful design of an epidemiological study and the scrupulous implementation of all the ensuing day-to-day guidelines, including meticulous attention to quality assurance, for the conduct of the study in field conditions. Confounding may be dealt with either at the design or analysis stage.

Selection

Criteria for considering a participant as a case must be explicitly defined. One cannot, or at least should not, attempt to study ill-defined clinical conditions. A way of minimizing the impact that knowledge of the exposure may have on disease assessment is to include only severe cases which would have been diagnosed irrespective of any information on the history of the patient. In the field of cancer, studies often will be limited to cases with histological proof of the disease to avoid the inclusion of borderline lesions. This also will mean that groups under study are well defined. For example, it is well-known in cancer epidemiology that cancers of different histological types within a given organ may have dissimilar risk factors. If the number of cases is sufficient, it is better to separate adenocarcinoma of the lung from squamous cell carcinoma of the lung. Whatever the final criteria for entry into the study, they should always be clearly defined and described. For example, the exact code of the disease should be indicated using the International Classification of Diseases (ICD) and also, for cancer, the International Classification of Diseases-Oncology (ICD-O).

Efforts should be made once the criteria are specified to maximize participation in the study. The decision to refuse to participate is hardly ever made at random and therefore leads to bias. Studies should first of all be presented to the clinicians who are seeing the patients. Their approval is needed to approach patients, and therefore they will have to be convinced to support the study. One argument that is often persuasive is that the study is in the interest of the public health. However, at this stage it is better not to discuss the exact hypothesis being evaluated in order to avoid unduly influencing the clinicians involved. Physicians should not be asked to take on supplementary duties; it is easier to convince health personnel to lend their support to a study if means are provided by the study investigators to carry out any additional tasks, over and above routine care, necessitated by the study. Interviewers and data abstractors ought to be unaware of the disease status of their patients.

Similar attention should be paid to the information provided to participants. The goal of the study must be described in broad, neutral terms, but must also be convincing and persuasive. It is important that issues of confidentiality and interest for public health be fully understood while avoiding medical jargon. In most settings, use of financial or other incentives is not considered appropriate, although compensation should be provided for any expense a participant may incur. Last, but not least, the general population should be sufficiently scientifically literate to understand the importance of such research. Both the benefits and the risks of participation must be explained to each prospective participant where they need to complete questionnaires and/or to provide biological samples for storage and/or analysis. No coercion should be applied in obtaining prior and fully informed consent. Where studies are exclusively records-based, prior approval of the agencies responsible for ensuring the confidentiality of such records must be secured. In these instances, individual participant consent usually can be waived. Instead, approval of union and government officers will suffice. Epidemiological investigations are not a threat to an individual’s private life, but are a potential aid to improve the health of the population. The approval of an institutional review board (or ethics review committee) will be needed prior to the conduct of a study, and much of what is stated above will be expected by them for their review.

Information

In prospective follow-up studies, means for assessment of the disease or mortality status must be identical for exposed and non-exposed participants. In particular, different sources should not be used, such as only checking in a central mortality register for non-exposed participants and using intensive active surveillance for exposed participants. Similarly, the cause of death must be obtained in strictly comparable ways. This means that if a system is used to gain access to official documents for the unexposed population, which is often the general population, one should never plan to get even more precise information through medical records or interviews on the participants themselves or on their families for the exposed subgroup.

In retrospective cohort studies, efforts should be made to determine how closely the population under study compares with the population of interest. One should guard against potential differential losses in exposed and non-exposed groups by drawing on several sources concerning the composition of the population. For example, it may be useful to compare payroll lists with union membership lists or other professional listings. Discrepancies must be reconciled and the protocol adopted for the study must be closely followed.

In case-control studies, other options exist to avoid biases. Interviewers, study staff and study participants need not be aware of the precise hypothesis under study. If they do not know the association being tested, they are less likely to try to provide the expected answer. Keeping study personnel in the dark as to the research hypothesis is in fact often very impractical. The interviewer will almost always know the exposures of greatest potential interest as well as who is a case and who is a control. We therefore have to rely on their honesty and also on their training in basic research methodology, which should be a part of their professional background; objectivity is the hallmark at all stages in science.

It is easier not to inform the study participants of the exact object of the research. Good, basic explanations on the need to collect data in order to have a better understanding of health and disease are usually sufficient and will satisfy the needs of ethics review.

Confounding

Confounding is the only bias which can be dealt with either at the study design stage or, provided adequate information is available, at the analysis stage. If, for example, age is considered to be a potential confounder of the association of interest because age is associated with the risk of disease (i.e., cancer becomes more frequent in older age) and also with exposure (conditions of exposure vary with age or with factors related to age such as qualification, job position and duration of employment), several solutions exist. The simplest is to limit the study to a specified age range, for example by enrolling only Caucasian men aged 40 to 50. This will provide elements for a simple analysis, but will also have the drawback of limiting the application of the results to a single sex, age and racial group. Another solution is matching on age. This means that for each case, a referent of the same age is needed. This is an attractive idea, but one has to keep in mind the possible difficulty of fulfilling this requirement as the number of matching factors increases. In addition, once a factor has been matched on, it becomes impossible to evaluate its role in the occurrence of disease. The last solution is to have sufficient information on potential confounders in the study database in order to control for them in the analysis. This can be done either through a simple stratified analysis, or with more sophisticated tools such as multivariate analysis. However, it should be remembered that analysis will never be able to compensate for a poorly designed or conducted study.
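A simple stratified analysis of the kind mentioned above can be sketched as follows. The counts are entirely hypothetical and were chosen so that welding has no effect within either smoking stratum. The Mantel-Haenszel summary risk ratio is used here as one common way of pooling strata; the text does not name a specific method, so this choice is an assumption of the sketch.

```python
from dataclasses import dataclass

@dataclass
class Stratum:
    exposed_cases: int
    exposed_total: int
    unexposed_cases: int
    unexposed_total: int

def crude_risk_ratio(strata) -> float:
    """Risk ratio obtained by ignoring the stratification variable."""
    a = sum(s.exposed_cases for s in strata)
    n1 = sum(s.exposed_total for s in strata)
    c = sum(s.unexposed_cases for s in strata)
    n0 = sum(s.unexposed_total for s in strata)
    return (a / n1) / (c / n0)

def mantel_haenszel_risk_ratio(strata) -> float:
    """Summary risk ratio adjusted for the stratification variable."""
    num = sum(s.exposed_cases * s.unexposed_total / (s.exposed_total + s.unexposed_total)
              for s in strata)
    den = sum(s.unexposed_cases * s.exposed_total / (s.exposed_total + s.unexposed_total)
              for s in strata)
    return num / den

# Hypothetical cohort: welders (exposed) versus non-welders, stratified by smoking.
smokers = Stratum(exposed_cases=80, exposed_total=800,
                  unexposed_cases=20, unexposed_total=200)
non_smokers = Stratum(exposed_cases=2, exposed_total=200,
                      unexposed_cases=8, unexposed_total=800)
strata = [smokers, non_smokers]

crude = crude_risk_ratio(strata)
adjusted = mantel_haenszel_risk_ratio(strata)
print(f"crude RR = {crude:.2f}, smoking-adjusted RR = {adjusted:.2f}")
```

In this constructed example the crude risk ratio is close to 3, while the smoking-adjusted ratio is 1: the apparent excess among welders arises entirely because welders smoke more often.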

Conclusion

The potential for biases to occur in epidemiological research is long established. This was not too much of a concern when the associations being studied were strong (as is the case for smoking and lung cancer) and therefore some inaccuracy did not cause too severe a problem. However, now that the time has come to evaluate weaker risk factors, the need for better tools becomes paramount. This includes the need for excellent study designs and the possibility of combining the advantages of various traditional designs such as the case-control or cohort studies with more innovative approaches such as case-control studies nested within a cohort. Also, the use of biomarkers may provide the means of obtaining more accurate assessments of current and possibly past exposures, as well as of the early stages of disease.

Fatigue and Recovery

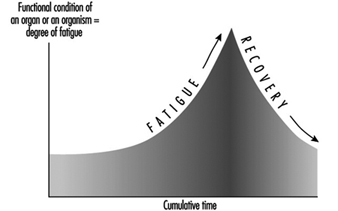

Fatigue and recovery are periodic processes in every living organism. Fatigue can be described as a state which is characterized by a feeling of tiredness combined with a reduction or undesired variation in the performance of the activity (Rohmert 1973).

Not all the functions of the human organism become tired as a result of use. Even when asleep, for example, we breathe and our heart is pumping without pause. Obviously, the basic functions of breathing and heart activity are possible throughout life without fatigue and without pauses for recovery.

On the other hand, we find after fairly prolonged heavy work that there is a reduction in capacity—which we call fatigue. This does not apply to muscular activity alone. The sensory organs or the nerve centres also become tired. It is, however, the aim of every cell to balance out the capacity lost by its activity, a process which we call recovery.

Stress, Strain, Fatigue and Recovery

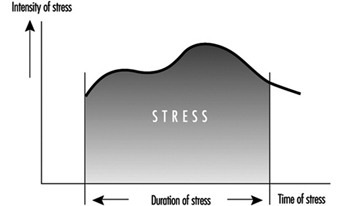

The concepts of fatigue and recovery at human work are closely related to the ergonomic concepts of stress and strain (Rohmert 1984) (figure 1).

Figure 1. Stress, strain and fatigue

Stress means the sum of all parameters of work in the working system influencing people at work, which are perceived or sensed mainly over the receptor system or which put demands on the effector system. The parameters of stress result from the work task (muscular work, non-muscular work—task-oriented dimensions and factors) and from the physical, chemical and social conditions under which the work has to be done (noise, climate, illumination, vibration, shift work, etc.—situation-oriented dimensions and factors).

The intensity/difficulty, the duration and the composition (i.e., the simultaneous and successive distribution of these specific demands) of the stress factors result in combined stress, which all the exogenous effects of a working system exert on the working person. This combined stress can be actively coped with or passively put up with, specifically depending on the behaviour of the working person. The active case will involve activities directed towards the efficiency of the working system, while the passive case will induce reactions (voluntary or involuntary), which are mainly concerned with minimizing stress. The relation between the stress and activity is decisively influenced by the individual characteristics and needs of the working person. The main factors of influence are those that determine performance and are related to motivation and concentration and those related to disposition, which can be referred to as abilities and skills.

The stresses relevant to behaviour, which are manifest in certain activities, cause individually different strains. The strains can be indicated by the reaction of physiological or biochemical indicators (e.g., a raised heart rate) or they can be perceived. Thus, the strains are susceptible to “psycho-physical scaling”, which estimates the strain as experienced by the working person. In a behavioural approach, the existence of strain can also be derived from an activity analysis. The intensity with which indicators of strain (physiological-biochemical, behaviouristic or psycho-physical) react depends on the intensity, duration, and combination of stress factors as well as on the individual characteristics, abilities, skills, and needs of the working person.

Despite constant stresses, the indicators derived from the fields of activity, performance and strain may vary over time (temporal effect). Such temporal variations are to be interpreted as processes of adaptation by the organic systems. The positive effects cause a reduction of strain or an improvement of activity or performance (e.g., through training). In the negative case, however, they will result in increased strain or reduced activity or performance (e.g., fatigue, monotony).

The positive effects may come into action if the available abilities and skills are improved in the working process itself, e.g., when the threshold of training stimulation is slightly exceeded. The negative effects are likely to appear if so-called endurance limits (Rohmert 1984) are exceeded in the course of the working process. This fatigue leads to a reduction of physiological and psychological functions, which can be compensated by recovery.

To restore the original performance, rest allowances or at least periods with less stress are necessary (Luczak 1993).

When the process of adaptation is carried beyond defined thresholds, the employed organic system may be damaged so as to cause a partial or total deficiency of its functions. An irreversible reduction of functions may appear when stress is far too high (acute damage) or when recovery is impossible for a longer time (chronic damage). A typical example of such damage is noise-induced hearing loss.

Models of Fatigue

Fatigue can be many-sided, depending on the form and combination of strain, and a general definition of it is not yet possible. The biological processes of fatigue are in general not directly measurable, so that the definitions are mainly oriented towards the fatigue symptoms. These fatigue symptoms can be divided, for example, into the following three categories.

- Physiological symptoms: fatigue is interpreted as a decrease of functions of organs or of the whole organism. It results in physiological reactions, e.g., in an increase of heart rate or electrical muscle activity (Laurig 1970).

- Behavioural symptoms: fatigue is interpreted mainly as a decrease of performance parameters. Examples are increasing errors when solving certain tasks, or an increasing variability of performance.

- Psycho-physical symptoms: fatigue is interpreted as an increase of the feeling of exertion and deterioration of sensation, depending on the intensity, duration and composition of stress factors.

In the process of fatigue all three of these symptoms may play a role, but they may appear at different points in time.

Physiological reactions in organic systems, particularly those involved in the work, may appear first. Later on, the feelings of exertion may be affected. Changes in performance are manifested generally in a decreasing regularity of work or in an increasing quantity of errors, although the mean of the performance may not yet be affected. On the contrary, with appropriate motivation, the working person may even try to maintain performance through will-power. The next step may be a clear reduction of performance ending with a breakdown of performance. The physiological symptoms may lead to a breakdown of the organism, including changes in the structure of personality, and to exhaustion. The process of fatigue is explained in the theory of successive destabilization (Luczak 1983).

The principal trend of fatigue and recovery is shown in figure 2.

Figure 2. Principal trend of fatigue and recovery

Prognosis of Fatigue and Recovery

In the field of ergonomics there is a special interest in predicting fatigue as a function of the intensity, duration and composition of stress factors, and in determining the necessary recovery time. Table 1 shows the different activity levels and the periods under consideration, together with possible causes of fatigue and the corresponding possibilities for recovery.

Table 1. Fatigue and recovery dependent on activity levels

| Level of activity | Period | Fatigue from | Recovery by |
|---|---|---|---|
| Work life | Decades | Overexertion for | Retirement |
| Phases of work life | Years | Overexertion for | Holidays |
| Sequences of | Months/weeks | Unfavourable shift | Weekend, free |
| One work shift | One day | Stress above | Free time, rest |
| Tasks | Hours | Stress above | Rest period |
| Part of a task | Minutes | Stress above | Change of stress |

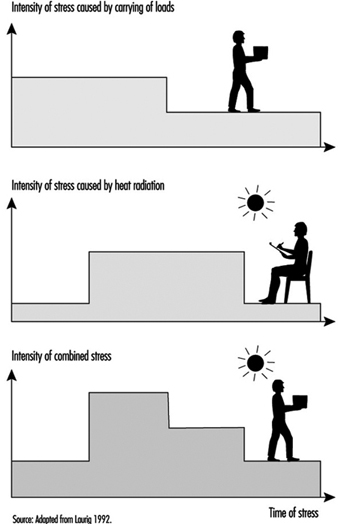

In ergonomic analysis of stress and fatigue for determining the necessary recovery time, considering the period of one working day is the most important. The methods of such analyses start with the determination of the different stress factors as a function of time (Laurig 1992) (figure 3).

Figure 3. Stress as a function of time

The stress factors are determined from the specific work content and from the conditions of work. Work content could be the production of force (e.g., when handling loads), the coordination of motor and sensory functions (e.g., when assembling or crane operating), the conversion of information into reaction (e.g., when controlling), the transformations from input to output information (e.g., when programming, translating) and the production of information (e.g., when designing, problem solving). The conditions of work include physical (e.g., noise, vibration, heat), chemical (chemical agents) and social (e.g., colleagues, shift work) aspects.

In the simplest case there will be a single important stress factor while the others can be neglected. In those cases, especially when the stress factors result from muscular work, it is often possible to calculate the necessary rest allowances, because the basic concepts are known.

For example, the sufficient rest allowance in static muscle work depends on the force and the duration of the muscular contraction, each entering through an exponent, with the terms linked by multiplication according to the formula:

$$\mathrm{R.A.} = 18 \cdot \left(\frac{t}{T}\right)^{1.4} \cdot \left(\frac{f}{F} - 0.15\right)^{0.5} \cdot 100$$

with

R.A. = rest allowance in percentage of t

t = duration of contraction (working period) in minutes

T = maximal possible duration of contraction in minutes

f = the force needed for the static work and

F = maximal force.
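The calculation can be sketched in Python as follows. This is an illustration only: it assumes the form of the formula commonly attributed to Rohmert, R.A. = 18 · (t/T)^1.4 · (f/F − 0.15)^0.5 · 100, and it further assumes that no rest allowance is required when the relative force f/F does not exceed 0.15. The function and parameter names are hypothetical.

```python
def rest_allowance_percent(t: float, T: float, f: float, F: float) -> float:
    """Rest allowance for static muscle work, as a percentage of the working period t.

    t: duration of contraction in minutes
    T: maximal possible duration of contraction in minutes
    f: force needed for the static work
    F: maximal force (same unit as f)
    """
    if not (0 < t <= T):
        raise ValueError("t must be positive and must not exceed T")
    if not (0 <= f <= F) or F <= 0:
        raise ValueError("f must lie between 0 and F, and F must be positive")
    relative_force = f / F
    # Assumption: at or below 15% of maximal force no rest allowance is needed.
    if relative_force <= 0.15:
        return 0.0
    return 18.0 * (t / T) ** 1.4 * (relative_force - 0.15) ** 0.5 * 100.0

# Example: holding half of the maximal force for half of the maximal holding time.
example = rest_allowance_percent(t=1.0, T=2.0, f=50.0, F=100.0)
print(f"Rest allowance: {example:.0f}% of the working period")
```

The allowance grows with both the relative holding time t/T and the relative force f/F, which corresponds to the families of curves usually drawn for this relationship.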

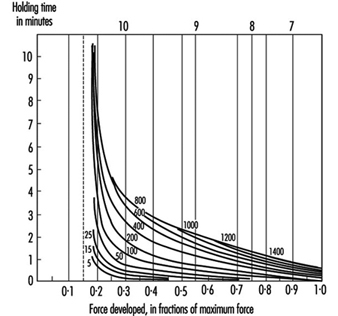

The connection between force, holding time and rest allowances is shown in figure 4.

Figure 4. Percentage rest allowances for various combinations of holding forces and time

Similar laws exist for heavy dynamic muscular work (Rohmert 1962), active light muscular work (Laurig 1974) or different industrial muscular work (Schmidtke 1971). More rarely you find comparable laws for non-physical work, e.g., for computing (Schmidtke 1965). An overview of existing methods for determining rest allowances for mainly isolated muscle and non-muscle work is given by Laurig (1981) and Luczak (1982).

More difficult is the situation where a combination of different stress factors exists, as shown in figure 5, which affect the working person simultaneously (Laurig 1992).

Figure 5. The combination of two stress factors

The combination of two stress factors, for example, can lead to different strain reactions depending on the laws of combination. The combined effect of different stress factors can be indifferent, compensatory or cumulative.

In the case of indifferent combination laws, the different stress factors have an effect on different subsystems of the organism. Each of these subsystems can compensate for the strain without the strain being fed into a common subsystem. The overall strain depends on the highest stress factor, and thus laws of superposition are not needed.

A compensatory effect is given when the combination of different stress factors leads to a lower strain than does each stress factor alone. The combination of muscular work and low temperatures can reduce the overall strain, because low temperatures allow the body to lose heat which is produced by the muscular work.

A cumulative effect arises if several stress factors are superimposed, that is, they must pass through one physiological “bottleneck”. An example is the combination of muscular work and heat stress. Both stress factors affect the circulatory system as a common bottleneck with resultant cumulative strain.

Possible combination effects between muscle work and physical conditions are described in Bruder (1993) (see table 2).

Table 2. Rules of combination effects of two stress factors on strain

| | Cold | Vibration | Illumination | Noise |
|---|---|---|---|---|
| Heavy dynamic work | – | + | 0 | 0 |
| Active light muscle work | + | + | 0 | 0 |
| Static muscle work | + | + | 0 | 0 |

0 indifferent effect; + cumulative effect; – compensatory effect.

Source: Adapted from Bruder 1993.

For the case of the combination of more than two stress factors, which is the normal situation in practice, only limited scientific knowledge is available. The same applies for the successive combination of stress factors (i.e., the strain effect of different stress factors which affect the worker successively). For such cases, in practice, the necessary recovery time is determined by measuring physiological or psychological parameters and using them as integrating values.

Impact of Random Measurement Error

Errors in exposure measurement may have different impacts on the exposure-disease relationship being studied, depending on how the errors are distributed. If an epidemiological study has been conducted blindly (i.e., measurements have been taken with no knowledge of the disease or health status of the study participants) we expect that measurement error will be evenly distributed across the strata of disease or health status.

Table 1 provides an example: suppose we recruit a cohort of people exposed at work to a toxicant, in order to investigate a frequent disease. We determine the exposure status only at recruitment (T0), and not at any further points in time during follow-up. However, let us say that a number of individuals do, in fact, change their exposure status in the following year: at time T1, 250 of the original 1,200 exposed people have ceased being exposed, while 150 of the original 750 non-exposed people have started to be exposed to the toxicant. Therefore, at time T1, 1,100 individuals are exposed and 850 are not exposed. As a consequence, we have “misclassification” of exposure, based on our initial measurement of exposure status at time T0. These individuals are then traced after 20 years (at time T2) and the cumulative risk of disease is evaluated. (The assumption being made in the example is that only exposure of more than one year is a concern.)

Table 1. Hypothetical cohort of 1950 individuals (exposed and unexposed at work), recruited at time T0 and whose disease status is ascertained at time T2

| | Time T0 | Time T1 | Time T2 |
|---|---|---|---|
| Exposed workers | 1200 | 250 quit exposure | 1100 (1200-250+150) |
| Non-exposed workers | 750 | 150 start exposure | 850 (750-150+250) |

Cases of disease at time T2 = 220 among exposed workers

Cases of disease at time T2 = 85 among non-exposed workers

The true risk of disease at time T2 is 20% among exposed workers (220/1100), and 10% in non-exposed workers (85/850) (risk ratio = 2.0).

Estimated risk at T2 of disease among those classified as exposed at T0: [20% (i.e., true risk in those exposed) × 950 (i.e., 1200-250) + 10% (i.e., true risk in non-exposed) × 250] / 1200 = (190+25)/1200 = 17.9%

Estimated risk at T2 of disease among those classified as non-exposed at T0: [20% (i.e., true risk in those exposed) × 150 + 10% (i.e., true risk in non-exposed) × 600 (i.e., 750-150)] / 750 = (30+60)/750 = 12%

Estimated risk ratio = 17.9% / 12% = 1.49

Misclassification depends, in this example, on the study design and the characteristics of the population, rather than on technical limitations of the exposure measurement. The effect of misclassification is such that the “true” ratio of 2.0 between the cumulative risk among exposed people and non-exposed people becomes an “observed” ratio of 1.49 (table 1). This underestimation of the risk ratio arises from a “blurring” of the relationship between exposure and disease, which occurs when the misclassification of exposure, as in this case, is evenly distributed according to the disease or health status (i.e., the exposure measurement is not influenced by whether or not the person suffered from the disease that we are studying).
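The arithmetic behind table 1 can be retraced with a short script. The following is a minimal sketch in Python. The function and argument names are illustrative and not part of the original example, and the sketch assumes, as the table does, that the true risks of 20% and 10% apply according to exposure status at T1.

```python
def observed_risk_ratio(n_exp_t0, n_unexp_t0, quit_exposure, start_exposure,
                        risk_exposed, risk_unexposed):
    """Risks and risk ratio observed when exposure is classified only at T0.

    Hypothetical helper: all argument names are illustrative assumptions.
    """
    # Expected cases among people classified as exposed at T0:
    # those still exposed carry the exposed risk, those who quit carry the other.
    cases_exp_t0 = (risk_exposed * (n_exp_t0 - quit_exposure)
                    + risk_unexposed * quit_exposure)
    # Expected cases among people classified as non-exposed at T0.
    cases_unexp_t0 = (risk_exposed * start_exposure
                      + risk_unexposed * (n_unexp_t0 - start_exposure))
    risk_exp = cases_exp_t0 / n_exp_t0
    risk_unexp = cases_unexp_t0 / n_unexp_t0
    return risk_exp, risk_unexp, risk_exp / risk_unexp


# Figures from table 1: 1200 exposed and 750 non-exposed at T0,
# 250 quit exposure and 150 start exposure by T1.
risk_exp, risk_unexp, rr = observed_risk_ratio(1200, 750, 250, 150, 0.20, 0.10)
print(f"risk (classified exposed):     {risk_exp:.1%}")
print(f"risk (classified non-exposed): {risk_unexp:.1%}")
print(f"observed risk ratio:           {rr:.2f}")
```

Setting `quit_exposure` and `start_exposure` to zero returns the true ratio of 2.0, which makes the attenuation caused by the misclassification easy to see.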

By contrast, either underestimation or overestimation of the association of interest may occur when exposure misclassification is not evenly distributed across the outcome of interest. In the example, we may have bias, and not only a blurring of the aetiologic relationship, if classification of exposure depends on the disease or health status among the workers. This could arise, for example, if we decide to collect biological samples from a group of exposed workers and from a group of unexposed workers, in order to identify early changes related to exposure at work. Samples from the exposed workers might then be analysed in a more accurate way than samples from those unexposed; scientific curiosity might lead the researcher to measure additional biomarkers among the exposed people (including, e.g., DNA adducts in lymphocytes or urinary markers of oxidative damage to DNA), on the assumption that these people are scientifically “more interesting”. This is a rather common attitude which, however, could lead to serious bias.

Statistical Methods

There is much debate on the role of statistics in epidemiological research on causal relationships. In epidemiology, statistics is primarily a collection of methods for assessing data based on human (and also on animal) populations. In particular, statistics is a technique for the quantification and measurement of uncertain phenomena. All scientific investigations which deal with non-deterministic, variable aspects of reality could benefit from statistical methodology. In epidemiology, variability is intrinsic to the unit of observation: a person is not a deterministic entity. Experimental designs would better meet the statistical assumptions about random variation, but for ethical and practical reasons they are seldom used. Instead, epidemiology is engaged in observational research, which carries both random and other sources of variability.

Statistical theory is concerned with how to control unstructured variability in the data in order to make valid inferences from empirical observations. Lacking any explanation for the variable behaviour of the phenomenon studied, statistics treats that variability as random, that is, as non-systematic deviations from some average state of nature (see Greenland 1990 for a criticism of these assumptions).

Science relies on empirical evidence to demonstrate whether its theoretical models of natural events have any validity. Indeed, the methods used from statistical theory determine the degree to which observations in the real world conform to the scientists’ view, in mathematical model form, of a phenomenon. Statistical methods, based in mathematics, have therefore to be carefully selected; there are plenty of examples about “how to lie with statistics”. Therefore, epidemiologists should be aware of the appropriateness of the techniques they apply to measure the risk of disease. In particular, great care is needed when interpreting both statistically significant and statistically non-significant results.

The first meaning of the word statistics relates to any summary quantity computed on a set of values. Descriptive indices or statistics such as the arithmetic average, the median or the mode, are widely used to summarize the information in a series of observations. Historically, these summary descriptors were used for administrative purposes by states, and therefore they were named statistics. In epidemiology, statistics that are commonly seen derive from the comparisons inherent to the nature of epidemiology, which asks questions such as: “Is one population at greater risk of disease than another?” In making such comparisons, the relative risk is a popular measure of the strength of association between an individual characteristic and the probability of becoming ill, and it is most commonly applied in aetiological research; attributable risk is also a measure of association between individual characteristics and disease occurrence, but it emphasizes the gain in terms of number of cases spared by an intervention which removes the factor in question—it is mostly applied in public health and preventive medicine.
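The two measures of association mentioned above can be illustrated with a small calculation. This is a minimal sketch in Python with invented counts. "Attributable risk" has several definitions in the literature, and the sketch assumes the simplest one, the difference between the two risks. The function names are illustrative.

```python
def risk(cases, persons):
    """Cumulative risk: cases divided by persons at risk."""
    return cases / persons


def relative_risk(cases_exp, n_exp, cases_unexp, n_unexp):
    """Ratio of the risk among exposed to the risk among unexposed."""
    return risk(cases_exp, n_exp) / risk(cases_unexp, n_unexp)


def risk_difference(cases_exp, n_exp, cases_unexp, n_unexp):
    """Excess risk among exposed (assumed here as the attributable risk)."""
    return risk(cases_exp, n_exp) - risk(cases_unexp, n_unexp)


# Hypothetical counts: 30 cases per 1,000 exposed, 10 cases per 1,000 unexposed.
rr = relative_risk(30, 1000, 10, 1000)
rd = risk_difference(30, 1000, 10, 1000)
print(f"relative risk   = {rr:.1f}")
print(f"risk difference = {rd * 1000:.0f} cases per 1,000 persons")
```

With these invented figures, the ratio describes the strength of the association, while the difference indicates how many cases per 1,000 exposed persons might be spared if the factor were removed and the association were causal.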

The second meaning of the word statistics relates to the collection of techniques and the underlying theory of statistical inference. This is a particular form of inductive logic which specifies the rules for obtaining a valid generalization from a particular set of empirical observations. This generalization would be valid provided some assumptions are met. This is the second way in which an uneducated use of statistics can deceive us: in observational epidemiology, it is very difficult to be sure of the assumptions implied by statistical techniques. Therefore, sensitivity analysis and robust estimators should be companions of any correctly conducted data analysis. Final conclusions also should be based on overall knowledge, and they should not rely exclusively on the findings from statistical hypothesis testing.

Definitions

A statistical unit is the element on which the empirical observations are made. It could be a person, a biological specimen or a piece of raw material to be analysed. Usually the statistical units are independently chosen by the researcher, but sometimes more complex designs can be set up. For example, in longitudinal studies, a series of determinations is made on a collection of persons over time; the statistical units in this study are the set of determinations, which are not independent, but structured by their respective connections to each person being studied. Lack of independence or correlation among statistical units deserves special attention in statistical analysis.

A variable is an individual characteristic measured on a given statistical unit. It should be contrasted with a constant, a fixed individual characteristic—for example, in a study on human beings, having a head or a thorax are constants, while the gender of a single member of the study is a variable.

Variables are evaluated using different scales of measurement. The first distinction is between qualitative and quantitative scales. Qualitative variables provide different modalities or categories. If each modality cannot be ranked or ordered in relation to others—for example, hair colour, or gender modalities—we denote the variable as nominal. If the categories can be ordered—like degree of severity of an illness—the variable is called ordinal. When a variable consists of a numeric value, we say that the scale is quantitative. A discrete scale denotes that the variable can assume only some definite values—for example, integer values for the number of cases of disease. A continuous scale is used for those measures which result in real numbers. Continuous scales are said to be interval scales when the null value has a purely conventional meaning. That is, a value of zero does not mean zero quantity—for example, a temperature of zero degrees Celsius does not mean zero thermal energy. In this instance, only differences among values make sense (this is the reason for the term “interval” scale). A real null value denotes a ratio scale. For a variable measured on that scale, ratios of values also make sense: indeed, a twofold ratio means double the quantity. For example, to say that a body has a temperature two times greater than a second body means that it has two times the thermal energy of the second body, provided that the temperature is measured on a ratio scale (e.g., in Kelvin degrees). The set of permissible values for a given variable is called the domain of the variable.
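The distinction between interval and ratio scales can be checked numerically. The sketch below, in Python, uses two arbitrary temperatures chosen for illustration. It shows that the difference between them is the same on the Celsius and Kelvin scales, while the ratio changes with the scale and is therefore meaningful only where zero is a real null value.

```python
def celsius_to_kelvin(t_celsius):
    """Convert a temperature from degrees Celsius to kelvins."""
    return t_celsius + 273.15


# Two illustrative temperatures (assumed values, not from the text).
a_c, b_c = 20.0, 10.0
a_k, b_k = celsius_to_kelvin(a_c), celsius_to_kelvin(b_c)

# Differences agree on both scales, so they make sense on an interval scale.
diff_c = a_c - b_c
diff_k = a_k - b_k

# Ratios disagree: the Celsius ratio depends on a conventional zero.
ratio_c = a_c / b_c
ratio_k = a_k / b_k

print(f"difference: {diff_c:.2f} (Celsius) vs {diff_k:.2f} (Kelvin)")
print(f"ratio:      {ratio_c:.3f} (Celsius) vs {ratio_k:.3f} (Kelvin)")
```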

Statistical Paradigms

Statistics deals with the way to generalize from a set of particular observations. This set of empirical measurements is called a sample. From a sample, we calculate some descriptive statistics in order to summarize the information collected.

The basic information that is generally required in order to characterize a set of measures relates to its central tendency and to its variability. The choice between several alternatives depends on the scale used to measure a phenomenon and on the purposes for which the statistics are computed. In table 1 different measures of central tendency and variability (or, dispersion) are described and associated with the appropriate scale of measurement.

Table 1. Indices of central tendency and dispersion by scale of measurement

| Indices | Definition | Nominal (qualitative) | Ordinal (qualitative) | Interval/ratio (quantitative) |
|---|---|---|---|---|
| Arithmetic mean | Sum of the observed values divided by the total number of observations | | | x |
| Median | Midpoint value of the observed distribution | | x | x |
| Mode | Most frequent value | x | x | x |
| Range | Lowest and highest values of the distribution | | x | x |
| Variance | Sum of the squared difference of each value from the mean divided by the total number of observations minus 1 | | | x |
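The indices in table 1 can be computed with Python's standard `statistics` module, as in the minimal sketch below. The data values are invented for illustration. Note that `statistics.variance` divides by the number of observations minus 1, which matches the definition given in the table.

```python
import statistics

# Hypothetical quantitative observations (interval/ratio scale).
values = [2, 3, 3, 5, 7]

mean = statistics.mean(values)          # sum of values / number of values
median = statistics.median(values)      # midpoint of the ordered values
mode = statistics.mode(values)          # most frequent value
value_range = (min(values), max(values))  # lowest and highest values
variance = statistics.variance(values)  # squared deviations / (n - 1)

print(mean, median, mode, value_range, variance)

# On a nominal scale only the mode is defined (hypothetical categories).
hair_colour = ["brown", "black", "brown", "blond"]
print(statistics.mode(hair_colour))
```

For the quantitative data, the squared deviations from the mean of 4 sum to 16, and dividing by 5 - 1 gives a variance of 4.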

The descriptive statistics computed are called estimates when we use them as a substitute for the analogous quantity of the population from which the sample has been selected. The population counterparts of the estimates are constants called parameters. Estimates of the same parameter can be obtained using different statistical methods. An estimate should be both valid and precise.

The population-sample paradigm implies that validity can be assured by the way the sample is selected from the population. Random or probabilistic sampling is the usual strategy: if each member of the population has the same probability of being included in the sample, then, on average, our sample should be representative of the population and, moreover, any deviation from our expectation could be explained by chance. The probability of a given deviation from our expectation also can be computed, provided that random sampling has been performed. The same kind of reasoning applies to the estimates calculated for our sample with regard to the population parameters. We take, for example, the arithmetic average from our sample as an estimate of the mean value for the population. Any difference, if it exists, between the sample average and the population mean is attributed to random fluctuations in the process of selection of the members included in the sample. We can calculate the probability of any value of this difference, provided the sample was randomly selected. If the deviation between the sample estimate and the population parameter cannot be explained by chance, the estimate is said to be biased. The design of the observation or experiment provides validity to the estimates and the fundamental statistical paradigm is that of random sampling.
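The reasoning above can be made concrete with a small simulation. The following is a sketch in Python under stated assumptions: an invented population of the integers 1 to 100, samples of 25 members, and 2,000 repetitions. The function names are illustrative. It compares random sampling, where every member has the same chance of selection, with a selective scheme in which only the upper half of the population can be chosen.

```python
import random
import statistics

random.seed(12345)  # fixed seed so that the sketch is reproducible

population = list(range(1, 101))  # hypothetical population values 1..100
population_mean = statistics.fmean(population)


def mean_of_sample_means(draw_sample, repetitions=2000):
    """Average of the sample means over many repeated samples."""
    return statistics.fmean(
        statistics.fmean(draw_sample()) for _ in range(repetitions)
    )


def random_sample():
    # Every member of the population has the same probability of inclusion.
    return random.sample(population, 25)


def selective_sample():
    # Only members in the upper half of the population can be included.
    return random.sample(population[50:], 25)


random_avg = mean_of_sample_means(random_sample)
selective_avg = mean_of_sample_means(selective_sample)

print(f"population mean:              {population_mean:.1f}")
print(f"random sampling, on average:  {random_avg:.1f}")
print(f"selective sampling, average:  {selective_avg:.1f}")
```

Individual random samples deviate from the population mean by chance, but their average stays close to it. The selective scheme produces a systematic deviation that chance cannot explain, which is what the text calls a biased estimate.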