27. Biological Monitoring

Chapter Editor: Robert Lauwerys

Table of Contents

Tables and Figures

General Principles

Vito Foà and Lorenzo Alessio

Quality Assurance

D. Gompertz

Metals and Organometallic Compounds

P. Hoet and Robert Lauwerys

Organic Solvents

Masayuki Ikeda

Genotoxic Chemicals

Marja Sorsa

Pesticides

Marco Maroni and Adalberto Ferioli

Tables

1. ACGIH, DFG & other limit values for metals

2. Examples of chemicals & biological monitoring

3. Biological monitoring for organic solvents

4. Genotoxicity of chemicals evaluated by IARC

5. Biomarkers & some cell/tissue samples & genotoxicity

6. Human carcinogens, occupational exposure & cytogenetic end points

8. Exposure from production & use of pesticides

9. Acute OP toxicity at different levels of ACHE inhibition

10. Variations of ACHE & PCHE & selected health conditions

11. Cholinesterase activities of unexposed healthy people

12. Urinary alkyl phosphates & OP pesticides

13. Urinary alkyl phosphates measurements & OP

14. Urinary carbamate metabolites

15. Urinary dithiocarbamate metabolites

16. Proposed indices for biological monitoring of pesticides

17. Recommended biological limit values (as of 1996)

28. Epidemiology and Statistics

Chapter Editors: Franco Merletti, Colin L. Soskolne and Paolo Vineis

Table of Contents

Tables and Figures

Epidemiological Method Applied to Occupational Health and Safety

Franco Merletti, Colin L. Soskolne and Paolo Vineis

Exposure Assessment

M. Gerald Ott

Summary Worklife Exposure Measures

Colin L. Soskolne

Measuring Effects of Exposures

Shelia Hoar Zahm

Case Study: Measures

Franco Merletti, Colin L. Soskolne and Paolo Vineis

Options in Study Design

Sven Hernberg

Validity Issues in Study Design

Annie J. Sasco

Impact of Random Measurement Error

Paolo Vineis and Colin L. Soskolne

Statistical Methods

Annibale Biggeri and Mario Braga

Causality Assessment and Ethics in Epidemiological Research

Paolo Vineis

Case Studies Illustrating Methodological Issues in the Surveillance of Occupational Diseases

Jung-Der Wang

Questionnaires in Epidemiological Research

Steven D. Stellman and Colin L. Soskolne

Asbestos Historical Perspective

Lawrence Garfinkel

Tables

1. Five selected summary measures of worklife exposure

2. Measures of disease occurrence

3. Measures of association for a cohort study

4. Measures of association for case-control studies

5. General frequency table layout for cohort data

6. Sample layout of case-control data

7. Layout case-control data - one control per case

8. Hypothetical cohort of 1950 individuals to T2

9. Indices of central tendency & dispersion

10. A binomial experiment & probabilities

11. Possible outcomes of a binomial experiment

12. Binomial distribution, 15 successes/30 trials

13. Binomial distribution, p = 0.25; 30 trials

14. Type II error & power; x = 12, n = 30, a = 0.05

15. Type II error & power; x = 12, n = 40, a = 0.05

16. 632 workers exposed to asbestos 20 years or longer

17. O/E number of deaths among 632 asbestos workers

Figures

Point to a thumbnail to see figure caption, click to see the figure in article context.

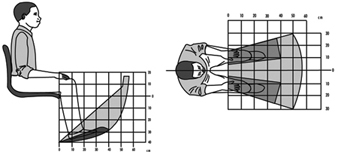

29. Ergonomics

Chapter Editors: Wolfgang Laurig and Joachim Vedder

Table of Contents

Tables and Figures

Overview

Wolfgang Laurig and Joachim Vedder

Goals, Principles and Methods

The Nature and Aims of Ergonomics

William T. Singleton

Analysis of Activities, Tasks and Work Systems

Véronique De Keyser

Ergonomics and Standardization

Friedhelm Nachreiner

Checklists

Pranab Kumar Nag

Physical and Physiological Aspects

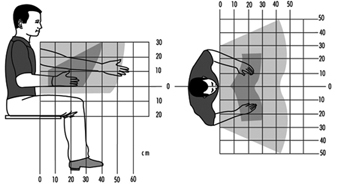

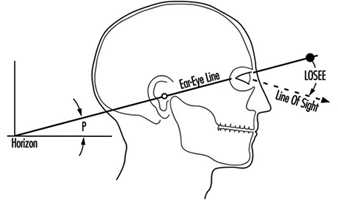

Anthropometry

Melchiorre Masali

Muscular Work

Juhani Smolander and Veikko Louhevaara

Postures at Work

Ilkka Kuorinka

Biomechanics

Frank Darby

General Fatigue

Étienne Grandjean

Fatigue and Recovery

Rolf Helbig and Walter Rohmert

Psychological Aspects

Mental Workload

Winfried Hacker

Vigilance

Herbert Heuer

Mental Fatigue

Peter Richter

Organizational Aspects of Work

Work Organization

Eberhard Ulich and Gudela Grote

Sleep Deprivation

Kazutaka Kogi

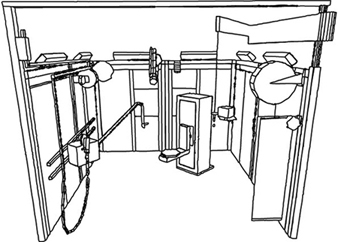

Work Systems Design

Workstations

Roland Kadefors

Tools

T.M. Fraser

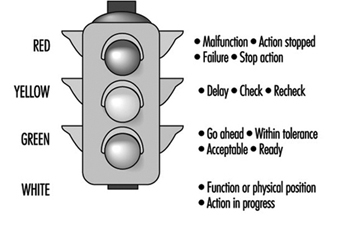

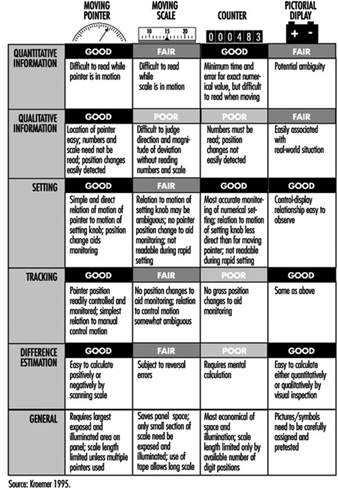

Controls, Indicators and Panels

Karl H. E. Kroemer

Information Processing and Design

Andries F. Sanders

Designing for Everyone

Designing for Specific Groups

Joke H. Grady-van den Nieuwboer

Case Study: The International Classification of Functional Limitation in People

Cultural Differences

Houshang Shahnavaz

Elderly Workers

Antoine Laville and Serge Volkoff

Workers with Special Needs

Joke H. Grady-van den Nieuwboer

Diversity and Importance of Ergonomics--Two Examples

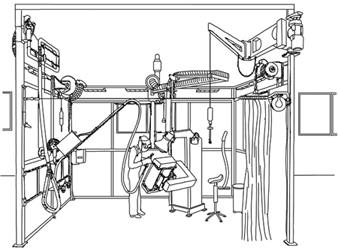

System Design in Diamond Manufacturing

Issachar Gilad

Disregarding Ergonomic Design Principles: Chernobyl

Vladimir M. Munipov

Tables

1. Basic anthropometric core list

2. Fatigue & recovery dependent on activity levels

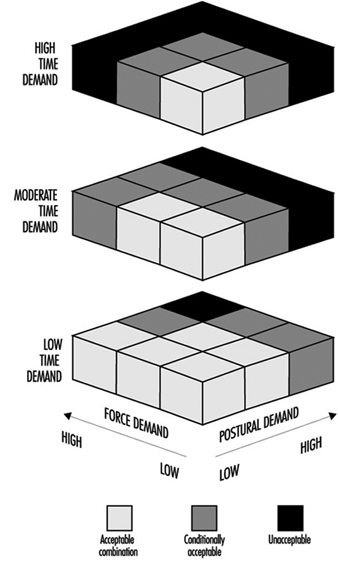

3. Rules of combination effects of two stress factors on strain

4. Differentiating among several negative consequences of mental strain

5. Work-oriented principles for production structuring

6. Participation in organizational context

7. User participation in the technology process

8. Irregular working hours & sleep deprivation

9. Aspects of advance, anchor & retard sleeps

10. Control movements & expected effects

11. Control-effect relations of common hand controls

12. Rules for arrangement of controls

30. Occupational Hygiene

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Goals, Definitions and General Information

Berenice I. Ferrari Goelzer

Recognition of Hazards

Linnéa Lillienberg

Evaluation of the Work Environment

Lori A. Todd

Occupational Hygiene: Control of Exposures Through Intervention

James Stewart

The Biological Basis for Exposure Assessment

Dick Heederik

Occupational Exposure Limits

Dennis J. Paustenbach

Tables

1. Hazards of chemical, biological & physical agents

2. Occupational exposure limits (OELs) - various countries

31. Personal Protection

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Overview and Philosophy of Personal Protection

Robert F. Herrick

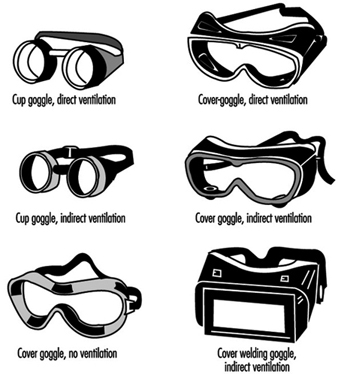

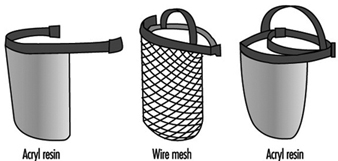

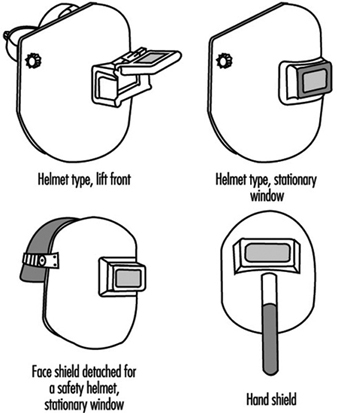

Eye and Face Protectors

Kikuzi Kimura

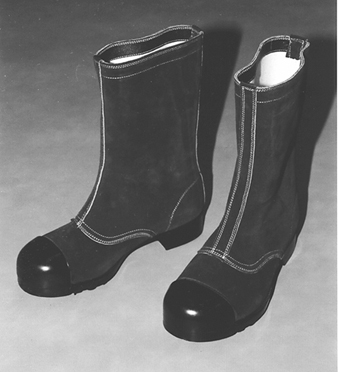

Foot and Leg Protection

Toyohiko Miura

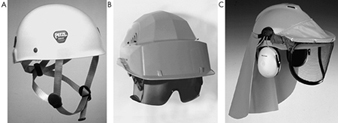

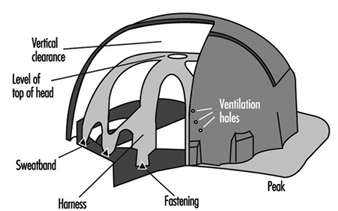

Head Protection

Isabelle Balty and Alain Mayer

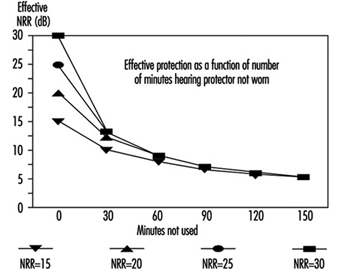

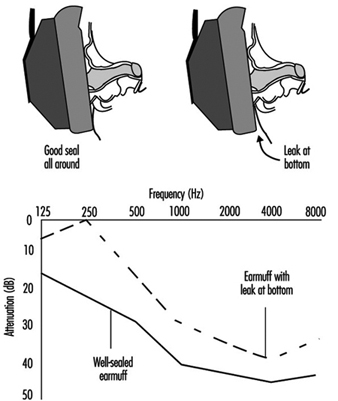

Hearing Protection

John R. Franks and Elliott H. Berger

Protective Clothing

S. Zack Mansdorf

Respiratory Protection

Thomas J. Nelson

Tables

1. Transmittance requirements (ISO 4850-1979)

2. Scales of protection - gas-welding & braze-welding

3. Scales of protection - oxygen cutting

4. Scales of protection - plasma arc cutting

5. Scales of protection - electric arc welding or gouging

6. Scales of protection - plasma direct arc welding

7. Safety helmet: ISO Standard 3873-1977

8. Noise Reduction Rating of a hearing protector

9. Computing the A-weighted noise reduction

10. Examples of dermal hazard categories

11. Physical, chemical & biological performance requirements

12. Material hazards associated with particular activities

13. Assigned protection factors from ANSI Z88.2 (1992)

32. Record Systems and Surveillance

Chapter Editor: Steven D. Stellman

Table of Contents

Tables and Figures

Occupational Disease Surveillance and Reporting Systems

Steven B. Markowitz

Occupational Hazard Surveillance

David H. Wegman and Steven D. Stellman

Surveillance in Developing Countries

David Koh and Kee-Seng Chia

Development and Application of an Occupational Injury and Illness Classification System

Elyce Biddle

Risk Analysis of Nonfatal Workplace Injuries and Illnesses

John W. Ruser

Case Study: Worker Protection and Statistics on Accidents and Occupational Diseases - HVBG, Germany

Martin Butz and Burkhard Hoffmann

Case Study: Wismut - A Uranium Exposure Revisited

Heinz Otten and Horst Schulz

Measurement Strategies and Techniques for Occupational Exposure Assessment in Epidemiology

Frank Bochmann and Helmut Blome

Case Study: Occupational Health Surveys in China

Tables

1. Angiosarcoma of the liver - world register

2. Occupational illness, US, 1986 versus 1992

3. US Deaths from pneumoconiosis & pleural mesothelioma

4. Sample list of notifiable occupational diseases

5. Illness & injury reporting code structure, US

6. Nonfatal occupational injuries & illnesses, US 1993

7. Risk of occupational injuries & illnesses

8. Relative risk for repetitive motion conditions

9. Workplace accidents, Germany, 1981-93

10. Grinders in metalworking accidents, Germany, 1984-93

11. Occupational disease, Germany, 1980-93

12. Infectious diseases, Germany, 1980-93

13. Radiation exposure in the Wismut mines

14. Occupational diseases in Wismut uranium mines 1952-90

33. Toxicology

Chapter Editor: Ellen K. Silbergeld

Table of Contents

Tables and Figures

Introduction

Ellen K. Silbergeld, Chapter Editor

General Principles of Toxicology

Definitions and Concepts

Bo Holmberg, Johan Hogberg and Gunnar Johanson

Toxicokinetics

Dušan Djurić

Target Organ And Critical Effects

Marek Jakubowski

Effects Of Age, Sex And Other Factors

Spomenka Telišman

Genetic Determinants Of Toxic Response

Daniel W. Nebert and Ross A. McKinnon

Mechanisms of Toxicity

Introduction And Concepts

Philip G. Watanabe

Cellular Injury And Cellular Death

Benjamin F. Trump and Irene K. Berezesky

Genetic Toxicology

R. Rita Misra and Michael P. Waalkes

Immunotoxicology

Joseph G. Vos and Henk van Loveren

Target Organ Toxicology

Ellen K. Silbergeld

Toxicology Test Methods

Biomarkers

Philippe Grandjean

Genetic Toxicity Assessment

David M. DeMarini and James Huff

In Vitro Toxicity Testing

Joanne Zurlo

Structure Activity Relationships

Ellen K. Silbergeld

Regulatory Toxicology

Toxicology In Health And Safety Regulation

Ellen K. Silbergeld

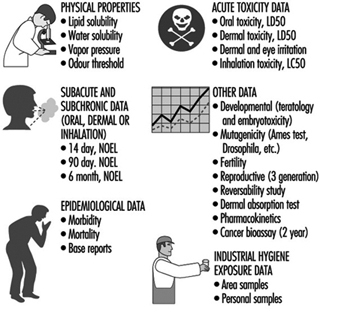

Principles Of Hazard Identification - The Japanese Approach

Masayuki Ikeda

The United States Approach to Risk Assessment Of Reproductive Toxicants and Neurotoxic Agents

Ellen K. Silbergeld

Approaches To Hazard Identification - IARC

Harri Vainio and Julian Wilbourn

Appendix - Overall Evaluations of Carcinogenicity to Humans: IARC Monographs Volumes 1-69 (836)

Carcinogen Risk Assessment: Other Approaches

Cees A. van der Heijden

Tables

- Examples of critical organs & critical effects

- Basic effects of possible multiple interactions of metals

- Haemoglobin adducts in workers exposed to aniline & acetanilide

- Hereditary, cancer-prone disorders & defects in DNA repair

- Examples of chemicals that exhibit genotoxicity in human cells

- Classification of tests for immune markers

- Examples of biomarkers of exposure

- Pros & cons of methods for identifying human cancer risks

- Comparison of in vitro systems for hepatotoxicity studies

- Comparison of SAR & test data: OECD/NTP analyses

- Regulation of chemical substances by laws, Japan

- Test items under the Chemical Substance Control Law, Japan

- Chemical substances & the Chemical Substances Control Law

- Selected major neurotoxicity incidents

- Examples of specialized tests to measure neurotoxicity

- Endpoints in reproductive toxicology

- Comparison of low-dose extrapolations procedures

- Frequently cited models in carcinogen risk characterization

Approaches to Hazard Identification: IARC

The identification of carcinogenic risks to humans has been the objective of the IARC Monographs on the Evaluation of Carcinogenic Risks to Humans since 1971. To date, 69 volumes of monographs have been published or are in press, with evaluations of carcinogenicity of 836 agents or exposure circumstances (see Appendix).

These qualitative evaluations of carcinogenic risk to humans are equivalent to the hazard identification phase in the now generally accepted scheme of risk assessment, which involves identification of hazard, dose-response assessment (including extrapolation outside the limits of observations), exposure assessment and risk characterization.

The aim of the IARC Monographs programme has been to publish critical qualitative evaluations on the carcinogenicity to humans of agents (chemicals, groups of chemicals, complex mixtures, physical or biological factors) or exposure circumstances (occupational exposures, cultural habits) through international cooperation in the form of expert working groups. The working groups prepare monographs on a series of individual agents or exposures and each volume is published and widely distributed. Each monograph consists of a brief description of the physical and chemical properties of the agent; methods for its analysis; a description of how it is produced, how much is produced, and how it is used; data on occurrence and human exposure; summaries of case reports and epidemiological studies of cancer in humans; summaries of experimental carcinogenicity tests; a brief description of other relevant biological data, such as toxicity and genetic effects, that may indicate its possible mechanism of action; and an evaluation of its carcinogenicity. The first part of this general scheme is adjusted appropriately when dealing with agents other than chemicals or chemical mixtures.

The guiding principles for evaluating carcinogens have been drawn up by various ad-hoc groups of experts and are laid down in the Preamble to the Monographs (IARC 1994a).

Tools for Qualitative Carcinogenic Risk (Hazard) Identification

Associations are established by examining the available data from studies of exposed humans, the results of bioassays in experimental animals and studies of exposure, metabolism, toxicity and genetic effects in both humans and animals.

Studies of cancer in humans

Three types of epidemiological studies contribute to an assessment of carcinogenicity: cohort studies, case-control studies and correlation (or ecological) studies. Case reports of cancer may also be reviewed.

Cohort and case-control studies relate individual exposures under study to the occurrence of cancer in individuals and provide an estimate of relative risk (ratio of the incidence in those exposed to the incidence in those not exposed) as the main measure of association.
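The relative risk defined above can be sketched as a short Python function. This is a minimal illustration only: the function name `relative_risk` and the counts in the usage lines are hypothetical and are not taken from any IARC evaluation.

```python
def relative_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Ratio of the incidence in the exposed to the incidence in the unexposed."""
    if exposed_total <= 0 or unexposed_total <= 0:
        raise ValueError("group sizes must be positive")
    if unexposed_cases == 0:
        raise ValueError("undefined when the incidence in the unexposed is zero")
    incidence_exposed = exposed_cases / exposed_total
    incidence_unexposed = unexposed_cases / unexposed_total
    return incidence_exposed / incidence_unexposed


# Hypothetical cohort: 30 cases among 1,000 exposed workers,
# 10 cases among 1,000 unexposed workers.
rr = relative_risk(30, 1000, 10, 1000)
print(f"relative risk = {rr:.2f}")
```

In a case-control study incidences are not observed directly, so the odds ratio is commonly used as the estimate of relative risk instead of the ratio computed here.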

In correlation studies, the unit of investigation is usually whole populations (e.g., particular geographical areas) and cancer frequency is related to a summary measure of the exposure of the population to the agent. Because individual exposure is not documented, a causal relationship is less easy to infer from such studies than from cohort and case-control studies. Case reports generally arise from a suspicion, based on clinical experience, that the concurrence of two events—that is, a particular exposure and occurrence of a cancer—has happened rather more frequently than would be expected by chance. The uncertainties surrounding interpretation of case reports and correlation studies make them inadequate, except in rare cases, to form the sole basis for inferring a causal relationship.

In the interpretation of epidemiological studies, it is necessary to take into account the possible roles of bias and confounding. By bias is meant the operation of factors in study design or execution that lead erroneously to a stronger or weaker association than in fact exists between disease and an agent. By confounding is meant a situation in which the relationship with disease is made to appear stronger or weaker than it truly is as a result of an association between the apparent causal factor and another factor that is associated with either an increase or decrease in the incidence of the disease.
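To make the idea of confounding concrete, the following Python sketch uses entirely hypothetical counts in which a third factor (labelled smoking here purely for illustration) is more common among the exposed and also raises the incidence of disease. Within each stratum of that factor the exposure has no effect, yet the pooled (crude) relative risk appears well above 1.

```python
def risk_ratio(cases_exp, n_exp, cases_unexp, n_unexp):
    """Incidence in the exposed divided by incidence in the unexposed."""
    return (cases_exp / n_exp) / (cases_unexp / n_unexp)


# Hypothetical counts per stratum of the confounder:
# (exposed cases, exposed total, unexposed cases, unexposed total)
strata = {
    "smokers": (80, 800, 20, 200),       # 10% incidence in both groups
    "non-smokers": (2, 200, 8, 800),     # 1% incidence in both groups
}

# Relative risk within each stratum (exposure has no effect here).
stratum_rr = {name: risk_ratio(*counts) for name, counts in strata.items()}

# Crude relative risk after pooling the strata and ignoring the confounder.
pooled = [sum(column) for column in zip(*strata.values())]
crude_rr = risk_ratio(*pooled)

for name, value in stratum_rr.items():
    print(f"{name}: RR = {value:.2f}")
print(f"crude (pooled): RR = {crude_rr:.2f}")
```

The crude estimate is inflated only because most exposed subjects in this invented data set are smokers; stratifying on the confounder removes the apparent association.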

In the assessment of the epidemiological studies, a strong association (i.e., a large relative risk) is more likely to indicate causality than a weak association, although it is recognized that relative risks of small magnitude do not imply lack of causality and may be important if the disease is common. Associations that are replicated in several studies of the same design or using different epidemiological approaches or under different circumstances of exposure are more likely to represent a causal relationship than isolated observations from single studies. An increase in risk of cancer with increasing amounts of exposure is considered to be a strong indication of causality, although the absence of a graded response is not necessarily evidence against a causal relationship. Demonstration of a decline in risk after cessation of or reduction in exposure in individuals or in whole populations also supports a causal interpretation of the findings.

When several epidemiological studies show little or no indication of an association between an exposure and cancer, the judgement may be made that, in the aggregate, they show evidence suggesting lack of carcinogenicity. The possibility that bias, confounding or misclassification of exposure or outcome could explain the observed results must be considered and excluded with reasonable certainty. Evidence suggesting lack of carcinogenicity obtained from several epidemiological studies can apply only to those type(s) of cancer, dose levels and intervals between first exposure and observation of disease that were studied. For some human cancers, the period between first exposure and the development of clinical disease is seldom less than 20 years; latent periods substantially shorter than 30 years cannot provide evidence suggesting lack of carcinogenicity.

The evidence relevant to carcinogenicity from studies in humans is classified into one of the following categories:

Sufficient evidence of carcinogenicity. A causal relationship has been established between exposure to the agent, mixture or exposure circumstance and human cancer. That is, a positive relationship has been observed between the exposure and cancer in studies in which chance, bias and confounding could be ruled out with reasonable confidence.

Limited evidence of carcinogenicity. A positive association has been observed between exposure to the agent, mixture or exposure circumstance and cancer for which a causal interpretation is considered to be credible, but chance, bias or confounding cannot be ruled out with reasonable confidence.

Inadequate evidence of carcinogenicity. The available studies are of insufficient quality, consistency or statistical power to permit a conclusion regarding the presence or absence of a causal association, or no data on cancer in humans are available.

Evidence suggesting lack of carcinogenicity. There are several adequate studies covering the full range of levels of exposure that human beings are known to encounter, which are mutually consistent in not showing a positive association between exposure to the agent and the studied cancer at any observed level of exposure. A conclusion of “evidence suggesting lack of carcinogenicity” is inevitably limited to the cancer sites, conditions and levels of exposure and length of observation covered by the available studies.

The applicability of an evaluation of the carcinogenicity of a mixture, process, occupation or industry on the basis of evidence from epidemiological studies depends on time and place. The specific exposure, process or activity considered most likely to be responsible for any excess risk should be sought and the evaluation focused as narrowly as possible. The long latent period of human cancer complicates the interpretation of epidemiological studies. A further complication is the fact that humans are exposed simultaneously to a variety of chemicals, which can interact either to increase or decrease the risk for neoplasia.

Studies on carcinogenicity in experimental animals

Studies in which experimental animals (usually mice and rats) are exposed to potential carcinogens and examined for evidence of cancer were introduced about 50 years ago to bring a scientific approach to the study of chemical carcinogenesis and to avoid some of the disadvantages of relying only on epidemiological data in humans. In the IARC Monographs, all available published studies of carcinogenicity in animals are summarized, and the degree of evidence of carcinogenicity is then classified into one of the following categories:

Sufficient evidence of carcinogenicity. A causal relationship has been established between the agent or mixture and an increased incidence of malignant neoplasms or of an appropriate combination of benign and malignant neoplasms in two or more species of animals or in two or more independent studies in one species carried out at different times or in different laboratories or under different protocols. Exceptionally, a single study in one species might be considered to provide sufficient evidence of carcinogenicity when malignant neoplasms occur to an unusual degree with regard to incidence, site, type of tumour or age at onset.

Limited evidence of carcinogenicity. The data suggest a carcinogenic effect but are limited for making a definitive evaluation because, for example, (a) the evidence of carcinogenicity is restricted to a single experiment; or (b) there are some unresolved questions regarding the adequacy of the design, conduct or interpretation of the study; or (c) the agent or mixture increases the incidence only of benign neoplasms or lesions of uncertain neoplastic potential, or of certain neoplasms which may occur spontaneously in high incidences in certain strains.

Inadequate evidence of carcinogenicity. The studies cannot be interpreted as showing either the presence or absence of a carcinogenic effect because of major qualitative or quantitative limitations, or no data on cancer in experimental animals are available.

Evidence suggesting lack of carcinogenicity. Adequate studies involving at least two species are available which show that, within the limits of the tests used, the agent or mixture is not carcinogenic. A conclusion of evidence suggesting lack of carcinogenicity is inevitably limited to the species, tumour sites and levels of exposure studied.

Other data relevant to an evaluation of carcinogenicity

Data on biological effects in humans that are of particular relevance include toxicological, kinetic and metabolic considerations and evidence of DNA binding, persistence of DNA lesions or genetic damage in exposed humans. Toxicological information, such as that on cytotoxicity and regeneration, receptor binding and hormonal and immunological effects, and data on kinetics and metabolism in experimental animals are summarized when considered relevant to the possible mechanism of the carcinogenic action of the agent. The results of tests for genetic and related effects are summarized for whole mammals including man, cultured mammalian cells and nonmammalian systems. Structure-activity relationships are mentioned when relevant.

For the agent, mixture or exposure circumstance being evaluated, the available data on end-points or other phenomena relevant to mechanisms of carcinogenesis from studies in humans, experimental animals and tissue and cell test systems are summarized within one or more of the following descriptive dimensions:

- evidence of genotoxicity (i.e., structural changes at the level of the gene): for example, structure-activity considerations, adduct formation, mutagenicity (effect on specific genes), chromosomal mutation or aneuploidy

- evidence of effects on the expression of relevant genes (i.e., functional changes at the intracellular level): for example, alterations to the structure or quantity of the product of a proto-oncogene or tumour suppressor gene, alterations to metabolic activation, inactivation or DNA repair

- evidence of relevant effects on cell behaviour (i.e., morphological or behavioural changes at the cellular or tissue level): for example, induction of mitogenesis, compensatory cell proliferation, preneoplasia and hyperplasia, survival of premalignant or malignant cells (immortalization, immunosuppression), effects on metastatic potential

- evidence from dose and time relationships of carcinogenic effects and interactions between agents: for example, early versus late stage, as inferred from epidemiological studies; initiation, promotion, progression or malignant conversion, as defined in animal carcinogenicity experiments; toxicokinetics.

These dimensions are not mutually exclusive, and an agent may fall within more than one. Thus, for example, the action of an agent on the expression of relevant genes could be summarized under both the first and second dimension, even if it were known with reasonable certainty that those effects resulted from genotoxicity.

Overall evaluations

Finally, the body of evidence is considered as a whole, in order to reach an overall evaluation of the carcinogenicity to humans of an agent, mixture or circumstance of exposure. An evaluation may be made for a group of chemicals. When supporting data indicate that other, related compounds for which there is no direct evidence of capacity to induce cancer in humans or in animals may also be carcinogenic, a statement describing the rationale for this conclusion is added to the evaluation narrative.

The agent, mixture or exposure circumstance is described according to the wording of one of the following categories, and the designated group is given. The categorization of an agent, mixture or exposure circumstance is a matter of scientific judgement, reflecting the strength of the evidence derived from studies in humans and in experimental animals and from other relevant data.

Group 1

The agent (mixture) is carcinogenic to humans. The exposure circumstance entails exposures that are carcinogenic to humans.

This category is used when there is sufficient evidence of carcinogenicity in humans. Exceptionally, an agent (mixture) may be placed in this category when evidence in humans is less than sufficient but there is sufficient evidence of carcinogenicity in experimental animals and strong evidence in exposed humans that the agent (mixture) acts through a relevant mechanism of carcinogenicity.

Group 2

This category includes agents, mixtures and exposure circumstances for which, at one extreme, the degree of evidence of carcinogenicity in humans is almost sufficient, as well as those for which, at the other extreme, there are no human data but for which there is evidence of carcinogenicity in experimental animals. Agents, mixtures and exposure circumstances are assigned to either group 2A (probably carcinogenic to humans) or group 2B (possibly carcinogenic to humans) on the basis of epidemiological and experimental evidence of carcinogenicity and other relevant data.

Group 2A. The agent (mixture) is probably carcinogenic to humans. The exposure circumstance entails exposures that are probably carcinogenic to humans. This category is used when there is limited evidence of carcinogenicity in humans and sufficient evidence of carcinogenicity in experimental animals. In some cases, an agent (mixture) may be classified in this category when there is inadequate evidence of carcinogenicity in humans and sufficient evidence of carcinogenicity in experimental animals and strong evidence that the carcinogenesis is mediated by a mechanism that also operates in humans. Exceptionally, an agent, mixture or exposure circumstance may be classified in this category solely on the basis of limited evidence of carcinogenicity in humans.

Group 2B. The agent (mixture) is possibly carcinogenic to humans. The exposure circumstance entails exposures that are possibly carcinogenic to humans. This category is used for agents, mixtures and exposure circumstances for which there is limited evidence of carcinogenicity in humans and less than sufficient evidence of carcinogenicity in experimental animals. It may also be used when there is inadequate evidence of carcinogenicity in humans but there is sufficient evidence of carcinogenicity in experimental animals. In some instances, an agent, mixture or exposure circumstance for which there is inadequate evidence of carcinogenicity in humans but limited evidence of carcinogenicity in experimental animals together with supporting evidence from other relevant data may be placed in this group.

Group 3

The agent (mixture or exposure circumstance) is not classifiable as to its carcinogenicity to humans. This category is used most commonly for agents, mixtures and exposure circumstances for which the evidence of carcinogenicity is inadequate in humans and inadequate or limited in experimental animals.

Exceptionally, agents (mixtures) for which the evidence of carcinogenicity is inadequate in humans but sufficient in experimental animals may be placed in this category when there is strong evidence that the mechanism of carcinogenicity in experimental animals does not operate in humans.

Group 4

The agent (mixture) is probably not carcinogenic to humans. This category is used for agents or mixtures for which there is evidence suggesting lack of carcinogenicity in humans and in experimental animals. In some instances, agents or mixtures for which there is inadequate evidence of carcinogenicity in humans but evidence suggesting lack of carcinogenicity in experimental animals, consistently and strongly supported by a broad range of other relevant data, may be classified in this group.

Classification systems made by humans are not sufficiently perfect to encompass all the complex entities of biology. They are, however, useful as guiding principles and may be modified as new knowledge of carcinogenesis becomes more firmly established. In the categorization of an agent, mixture or exposure circumstance, it is essential to rely on scientific judgements formulated by the group of experts.
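The usual combinations of evidence described for Groups 1 to 4 can be summarized as a lookup, sketched below in Python. This is an illustrative reading of the categories above, not an IARC tool: the names `Evidence`, `Mechanism` and `default_group` are hypothetical, the exceptional Group 1 route is assumed to apply only when human evidence is limited or inadequate, and the function returns `None` for combinations the text does not address. The discretionary option of placing an agent in Group 2A on limited human evidence alone is deliberately left to expert judgement and is not encoded.

```python
from enum import Enum


class Evidence(Enum):
    SUFFICIENT = "sufficient"
    LIMITED = "limited"
    INADEQUATE = "inadequate"
    LACK = "evidence suggesting lack of carcinogenicity"


class Mechanism(Enum):
    NONE = "no decisive mechanistic data"
    RELEVANT_IN_EXPOSED_HUMANS = "strong evidence in exposed humans of a relevant mechanism"
    OPERATES_IN_HUMANS = "strong evidence that the mechanism also operates in humans"
    NOT_IN_HUMANS = "strong evidence that the animal mechanism does not operate in humans"
    SUPPORTING = "supporting evidence from other relevant data"
    SUPPORTS_LACK = "other data consistently and strongly support lack of carcinogenicity"


def default_group(human, animal, mechanism=Mechanism.NONE):
    """Return the group usually assigned to a combination of evidence, or None."""
    if human is Evidence.SUFFICIENT:
        return "1"
    if human in (Evidence.LIMITED, Evidence.INADEQUATE):
        # Exceptional route to Group 1 (assumed not to apply to human LACK).
        if animal is Evidence.SUFFICIENT and mechanism is Mechanism.RELEVANT_IN_EXPOSED_HUMANS:
            return "1"
    if human is Evidence.LIMITED:
        return "2A" if animal is Evidence.SUFFICIENT else "2B"
    if human is Evidence.INADEQUATE:
        if animal is Evidence.SUFFICIENT:
            if mechanism is Mechanism.OPERATES_IN_HUMANS:
                return "2A"
            if mechanism is Mechanism.NOT_IN_HUMANS:
                return "3"
            return "2B"
        if animal is Evidence.LIMITED:
            return "2B" if mechanism is Mechanism.SUPPORTING else "3"
        if animal is Evidence.INADEQUATE:
            return "3"
        # Animal evidence suggests lack of carcinogenicity.
        return "4" if mechanism is Mechanism.SUPPORTS_LACK else None
    # Human evidence suggests lack of carcinogenicity.
    return "4" if animal is Evidence.LACK else None


# Limited human evidence, sufficient animal evidence, strong mechanistic
# evidence in exposed humans: the exceptional Group 1 route.
print(default_group(Evidence.LIMITED, Evidence.SUFFICIENT,
                    Mechanism.RELEVANT_IN_EXPOSED_HUMANS))
```

Because categorization remains a matter of scientific judgement by the working group, a lookup of this kind can at most indicate the starting point of a discussion, never its outcome.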

Results to Date

To date, 69 volumes of IARC Monographs have been published or are in press, in which evaluations of carcinogenicity to humans have been made for 836 agents or exposure circumstances. Seventy-four agents or exposures have been evaluated as carcinogenic to humans (Group 1), 56 as probably carcinogenic to humans (Group 2A), 225 as possibly carcinogenic to humans (Group 2B) and one as probably not carcinogenic to humans (Group 4). For 480 agents or exposures, the available epidemiological and experimental data did not allow an evaluation of their carcinogenicity to humans (Group 3).
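As a quick consistency check on the figures quoted above, the group counts can be tallied in a few lines of Python. The counts are those given in the text; the variable names are arbitrary.

```python
# Number of agents or exposure circumstances per group, as stated in the text.
counts = {
    "Group 1": 74,
    "Group 2A": 56,
    "Group 2B": 225,
    "Group 3": 480,
    "Group 4": 1,
}

total = sum(counts.values())
# Share of each group as a percentage of all evaluations, to one decimal place.
shares = {group: round(100 * n / total, 1) for group, n in counts.items()}

print(f"total evaluations: {total}")
for group, share in shares.items():
    print(f"{group}: {counts[group]} ({share}%)")
```

The total agrees with the 836 evaluations reported, and more than half of all evaluated agents fall into Group 3.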

Importance of Mechanistic Data

The revised Preamble, which first appeared in volume 54 of the IARC Monographs, allows for the possibility that an agent for which epidemiological evidence of cancer is less than sufficient can be placed in Group 1 when there is sufficient evidence of carcinogenicity in experimental animals and strong evidence in exposed humans that the agent acts through a relevant mechanism of carcinogenicity. Conversely, an agent for which there is inadequate evidence of carcinogenicity in humans together with sufficient evidence in experimental animals and strong evidence that the mechanism of carcinogenesis does not operate in humans may be placed in Group 3 instead of the normally assigned Group 2B—possibly carcinogenic to humans—category.

The use of such data on mechanisms has been discussed on three recent occasions:

While it is generally accepted that solar radiation is carcinogenic to humans (Group 1), epidemiological studies on cancer in humans for UVA and UVB radiation from sun lamps provide only limited evidence of carcinogenicity. Special tandem base substitutions (CC→TT) have been observed in p53 tumour suppressor genes in squamous-cell tumours at sun-exposed sites in humans. Although UVR can induce similar transitions in some experimental systems and UVB, UVA and UVC are carcinogenic in experimental animals, the available mechanistic data were not considered strong enough to allow the working group to classify UVB, UVA and UVC higher than Group 2A (IARC 1992). In a study published after the meeting (Kress et al. 1992), CC→TT transitions in p53 have been demonstrated in UVB-induced skin tumours in mice, which might suggest that UVB should also be classified as carcinogenic to humans (Group 1).

The second case in which the possibility of placing an agent in Group 1 in the absence of sufficient epidemiological evidence was considered was 4,4´-methylene-bis(2-chloroaniline) (MOCA). MOCA is carcinogenic in dogs and rodents and is comprehensively genotoxic. It binds to DNA through reaction with N-hydroxy MOCA and the same adducts that are formed in target tissues for carcinogenicity in animals have been found in urothelial cells from a small number of exposed humans. After lengthy discussions on the possibility of an upgrading, the working group finally made an overall evaluation of Group 2A, probably carcinogenic to humans (IARC 1993).

During a recent evaluation of ethylene oxide (IARC 1994b), the available epidemiological studies provided limited evidence of carcinogenicity in humans, and studies in experimental animals provided sufficient evidence of carcinogenicity. Ethylene oxide was nevertheless classified as carcinogenic to humans (Group 1), taking into account the other relevant data: (1) ethylene oxide induces a sensitive, persistent, dose-related increase in the frequency of chromosomal aberrations and sister chromatid exchanges in peripheral lymphocytes and micronuclei in bone-marrow cells from exposed workers; (2) it has been associated with malignancies of the lymphatic and haematopoietic system in both humans and experimental animals; (3) it induces a dose-related increase in the frequency of haemoglobin adducts in exposed humans and dose-related increases in the numbers of adducts in both DNA and haemoglobin in exposed rodents; (4) it induces gene mutations and heritable translocations in germ cells of exposed rodents; and (5) it is a powerful mutagen and clastogen at all phylogenetic levels.

The Preamble also allows an agent for which there is sufficient evidence of carcinogenicity in animals to be placed in Group 3 (instead of Group 2B, in which it would normally be categorized) when there is strong evidence that the mechanism of carcinogenicity in animals does not operate in humans. This possibility has not yet been used by any working group. It could have been envisaged in the case of d-limonene had there been sufficient evidence of its carcinogenicity in animals, since there are data suggesting that α2u-globulin production in male rat kidney is linked to the renal tumours observed.

Among the many chemicals nominated as priorities by an ad-hoc working group in December 1993, some shared postulated intrinsic mechanisms of action, and certain classes of agents could be identified on the basis of their biological properties. The working group recommended that before evaluations are made on such agents as peroxisome proliferators, fibres, dusts and thyrostatic agents within the Monographs programme, special ad-hoc groups should be convened to discuss the latest state of the art on their particular mechanisms of action.

The Biological Basis for Exposure Assessment

Workplace exposure assessment is concerned with identifying and evaluating agents with which a worker may come in contact, and exposure indices can be constructed to reflect the amount of an agent present in the general environment or in inhaled air, as well as to reflect the amount of agent that is actually inhaled, swallowed or otherwise absorbed (the intake). Other indices include the amount of agent that is resorbed (the uptake) and the exposure at the target organ. Dose is a pharmacological or toxicological term used to indicate the amount of a substance administered to a subject. Dose rate is the amount administered per unit of time. The dose of a workplace exposure is difficult to determine in a practical situation, since physical and biological processes, like inhalation, uptake and distribution of an agent in the human body cause exposure and dose to have complex, non-linear relationships. The uncertainty about the actual level of exposure to agents also makes it difficult to quantify relationships between exposure and health effects.

For many occupational exposures there exists a time window during which the exposure or dose is most relevant to the development of a particular health-related problem or symptom. Hence, the biologically relevant exposure, or dose, would be that exposure which occurs during the relevant time window. Some exposures to occupational carcinogens are believed to have such a relevant time window of exposure. Cancer is a disease with a long latency period, and hence it could be that the exposure which is related to the ultimate development of the disease took place many years before the cancer actually manifested itself. This phenomenon is counter-intuitive, since one would have expected that cumulative exposure over a working lifetime would have been the relevant parameter. The exposure at the time of manifestation of disease may not be of particular importance.

The pattern of exposure—continuous exposure, intermittent exposure and exposure with or without sharp peaks—may be relevant as well. Taking exposure patterns into account is important for both epidemiological studies and for environmental measurements which may be used to monitor compliance with health standards or for environmental control as part of control and prevention programmes. For example, if a health effect is caused by peak exposures, such peak levels must be monitorable in order to be controlled. Monitoring which provides data only about long-term average exposures is not useful since the peak excursion values may well be masked by averaging, and certainly cannot be controlled as they occur.

The biologically relevant exposure or dose for a certain endpoint is often not known because the patterns of intake, uptake, distribution and elimination, or the mechanisms of biotransformation, are not understood in sufficient detail. Both the rate at which an agent enters and leaves the body (the kinetics) and the biochemical processes for handling the substance (biotransformation) will help determine the relationships between exposure, dose and effect.

Environmental monitoring is the measurement and assessment of agents at the workplace to evaluate ambient exposure and related health risks. Biological monitoring is the measurement and assessment of workplace agents or their metabolites in tissue, secreta or excreta to evaluate exposure and assess health risks. Sometimes biomarkers, such as DNA-adducts, are used as measures of exposure. Biomarkers may also be indicative of the mechanisms of the disease process itself, but this is a complex subject, which is covered more fully in the chapter Biological Monitoring and later in the discussion here.

A simplification of the basic model in exposure-response modelling is as follows:

exposure → uptake → distribution, elimination, transformation → target dose → physiopathology → effect

Depending on the agent, exposure-uptake and exposure-intake relationships can be complex. For many gases, simple approximations can be made, based on the concentration of the agent in the air during the course of a working day and on the amount of air that is inhaled. For dust sampling, deposition patterns are also related to particle size. Size considerations may also lead to a more complex relationship. The chapter Respiratory System provides more detail on the aspect of respiratory toxicity.
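The simple approximation for gases mentioned above can be sketched as the product of air concentration, breathing rate and duration. This is only an illustrative sketch: the function name and the numerical values (including a breathing rate of 1.25 m3 per hour, roughly 10 m3 per eight-hour shift) are assumptions chosen for the example, and the result is the amount inhaled, not the amount taken up.

```python
def inhaled_intake_mg(concentration_mg_m3, ventilation_m3_per_h, hours):
    """Approximate inhaled amount (mg) as concentration x volume of air breathed."""
    return concentration_mg_m3 * ventilation_m3_per_h * hours


# Hypothetical shift: 2 mg/m3 in air, 1.25 m3/h breathed, 8 hours worked.
intake = inhaled_intake_mg(2.0, 1.25, 8.0)
print(f"Approximate inhaled intake: {intake:.1f} mg")
```

For particles, such a calculation would need to be extended with size-dependent deposition fractions, which are not modelled here.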

Exposure and dose assessment are elements of quantitative risk assessment. Health risk assessment methods often form the basis upon which exposure limits are established for emission levels of toxic agents in the air for environmental as well as for occupational standards. Health risk analysis provides an estimate of the probability (risk) of occurrence of specific health effects or an estimate of the number of cases with these health effects. By means of health risk analysis an acceptable concentration of a toxicant in air, water or food can be provided, given an a priori chosen acceptable magnitude of risk. Quantitative risk analysis has found an application in cancer epidemiology, which explains the strong emphasis on retrospective exposure assessment. But applications of more elaborate exposure assessment strategies can be found in both retrospective as well as prospective exposure assessment, and exposure assessment principles have found applications in studies focused on other endpoints as well, such as benign respiratory disease (Wegman et al. 1992; Post et al. 1994). Two directions in research predominate at this moment. One uses dose estimates obtained from exposure monitoring information, and the other relies on biomarkers as measures of exposure.

Exposure Monitoring and Prediction of Dose

Unfortunately, for many exposures few quantitative data are available for predicting the risk for developing a certain endpoint. As early as 1924, Haber postulated that the severity of the health effect (H) is proportional to the product of exposure concentration (X) and time of exposure (T):

H = X × T

Haber’s law, as it is called, formed the basis for development of the concept that time-weighted average (TWA) exposure measurements, that is, measurements taken and averaged over a certain period of time, would be a useful measure for the exposure. This assumption about the adequacy of the time-weighted average has been questioned for many years. In 1952, Adams and co-workers stated that “there is no scientific basis for the use of the time-weighted average to integrate varying exposures …” (in Atherly 1985). The problem is that many relations are more complex than the relationship that Haber’s law represents. There are many examples of agents where the effect is more strongly determined by concentration than by length of time. For example, evidence from laboratory studies has shown that in rats exposed to carbon tetrachloride, the pattern of exposure (continuous versus intermittent and with or without peaks) as well as the dose can modify the observed risk of the rats developing liver enzyme level changes (Bogers et al. 1987). Another example is bio-aerosols, such as α-amylase enzyme, a dough improver, which can cause allergic diseases in people who work in the bakery industry (Houba et al. 1996). It is unknown whether the risk of developing such a disease is mainly determined by peak exposures, average exposure, or cumulative level of exposure (Wong 1987; Checkoway and Rice 1992). Information on temporal patterns is not available for most agents, especially not for agents that have chronic effects.
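A short sketch may help to show why the time-weighted average can hide the exposure pattern. The two shifts below are hypothetical (the concentrations and durations are invented for illustration); they have the same eight-hour TWA, and therefore the same product of concentration and time, but very different peak levels.

```python
def time_weighted_average(segments):
    """Return the time-weighted average of (concentration, hours) segments."""
    total_hours = sum(hours for _, hours in segments)
    weighted_sum = sum(conc * hours for conc, hours in segments)
    return weighted_sum / total_hours


def peak_concentration(segments):
    """Return the highest concentration reached in any segment."""
    return max(conc for conc, _ in segments)


# Hypothetical shifts: concentrations in mg/m3, durations in hours.
steady_shift = [(10.0, 8.0)]
peaky_shift = [(2.0, 7.0), (66.0, 1.0)]

for name, shift in [("steady", steady_shift), ("peaky", peaky_shift)]:
    twa = time_weighted_average(shift)
    peak = peak_concentration(shift)
    print(f"{name}: TWA = {twa:.1f} mg/m3, peak = {peak:.1f} mg/m3")
```

If the health effect depends on concentration more than on duration, the two shifts would not carry the same risk even though a TWA-based measurement strategy cannot tell them apart.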

The first attempts to model exposure patterns and estimate dose were published in the 1960s and 1970s by Roach (1966; 1977). He showed that the concentration of an agent reaches an equilibrium value at the receptor after an exposure of infinite duration because elimination counterbalances the uptake of the agent. In an eight-hour exposure, a value of 90% of this equilibrium level can be reached if the half-life of the agent at the target organ is smaller than approximately two-and-a-half hours. This illustrates that for agents with a short half-life, the dose at the target organ is determined by an exposure shorter than an eight-hour period. Dose at the target organ is a function of the product of exposure time and concentration for agents with a long half-life. A similar but more elaborate approach has been applied by Rappaport (1985). He showed that intra-day variability in exposure has a limited influence when dealing with agents with long half-lives. He introduced the term dampening at the receptor.
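The figure of about two-and-a-half hours can be reproduced with a minimal sketch, assuming a single compartment with first-order elimination and a constant exposure starting from zero. This simplified model is an assumption made for illustration and is not necessarily the exact formulation used by Roach; the function names are hypothetical.

```python
import math


def fraction_of_equilibrium(hours, half_life_h):
    """Fraction of the equilibrium level reached after constant exposure.

    Assumes one compartment with first-order elimination, starting from zero.
    """
    return 1.0 - 0.5 ** (hours / half_life_h)


def half_life_for_fraction(hours, fraction):
    """Half-life (h) at which `fraction` of equilibrium is reached after `hours`."""
    return hours * math.log(2) / -math.log(1.0 - fraction)


print(f"Half-life for 90% in 8 h: {half_life_for_fraction(8.0, 0.9):.2f} h")
for half_life in (0.5, 2.5, 40.0):
    reached = fraction_of_equilibrium(8.0, half_life)
    print(f"half-life {half_life:>4} h: {reached:.0%} of equilibrium after 8 h")
```

Under these assumptions an agent with a half-life of 40 hours reaches only a small fraction of its equilibrium level within one shift, which is consistent with the point that the dose then depends on exposure accumulated over much longer periods.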

The information presented above has mainly been used to draw conclusions on appropriate averaging times for exposure measurements for compliance purposes. Since Roach’s papers it is common knowledge that for irritants, grab samples with short averaging times have to be taken, while for agents with long half-lives, such as asbestos, long-term average of cumulative exposure has to be approximated. One should however realize that the dichotomization into grab sample strategies and eight-hour time average exposure strategies as adopted in many countries for compliance purposes is an extremely crude translation of the biological principles discussed above.

An example of improving an exposure assessment strategy based on pharmacokinetic principles in epidemiology can be found in a paper of Wegman et al. (1992). They applied an interesting exposure assessment strategy by using continuous monitoring devices to measure personal dust exposure peak levels and relating these to acute reversible respiratory symptoms occurring every 15 minutes. A conceptual problem in this kind of study, extensively discussed in their paper, is the definition of a health-relevant peak exposure. The definition of a peak will, again, depend on biological considerations. Rappaport (1991) gives two requirements for peak exposures to be of aetiological relevance in the disease process: (1) the agent is eliminated rapidly from the body and (2) there is a non-linear rate of biological damage during a peak exposure. Non-linear rates of biological damage may be related to changes in uptake, which in turn are related to exposure levels, host susceptibility, synergy with other exposures, involvement of other disease mechanisms at higher exposures or threshold levels for disease processes.

These examples also show that pharmacokinetic approaches can lead elsewhere than to dose estimates. The results of pharmacokinetic modelling can also be used to explore the biological relevance of existing indices of exposure and to design new health-relevant exposure assessment strategies.

Pharmacokinetic modelling of the exposure may also generate estimates of the actual dose at the target organ. For instance in the case of ozone, an acute irritant gas, models have been developed which predict the tissue concentration in the airways as a function of the average ozone concentration in the airspace of the lung at a certain distance from the trachea, the radius of the airways, the average air velocity, the effective dispersion, and the ozone flux from air to lung surface (Menzel 1987; Miller and Overton 1989). Such models can be used to predict ozone dose in a particular region of the airways, dependent on environmental ozone concentrations and breathing patterns.

In most cases estimates of target dose are based on information on the exposure pattern over time, job history and pharmacokinetic information on uptake, distribution, elimination and transformation of the agent. The whole process can be described by a set of equations which can be mathematically solved. Often information on pharmacokinetic parameters is not available for humans, and parameter estimates based on animal experiments have to be used. There are several examples by now of the use of pharmacokinetic modelling of exposure in order to generate dose estimates. The first references to modelling of exposure data into dose estimates in the literature go back to the paper of Jahr (1974).

Although dose estimates have generally not been validated and have found limited application in epidemiological studies, the new generation of exposure or dose indices is expected to result in optimal exposure-response analyses in epidemiological studies (Smith 1985, 1987). A problem not yet tackled in pharmacokinetic modelling is that large interspecies differences exist in kinetics of toxic agents, and therefore effects of intra-individual variation in pharmacokinetic parameters are of interest (Droz 1992).

Biomonitoring and Biomarkers of Exposure

Biological monitoring offers an estimate of dose and therefore is often considered superior to environmental monitoring. However, the intra-individual variability of biomonitoring indices can be considerable. In order to derive an acceptable estimate of a worker’s dose, repeated measurements have to be taken, and sometimes the measurement effort can become larger than for environmental monitoring.

This is illustrated by an interesting study on workers producing boats made of plastic reinforced with glass fibre (Rappaport et al. 1995). The variability of styrene exposure was assessed by measuring styrene in air repeatedly. Styrene in exhaled air of exposed workers was monitored, as well as sister chromatid exchanges (SCEs). They showed that an epidemiological study using styrene in the air as a measure of exposure would be more efficient, in terms of numbers of measurements required, than a study using the other indices of exposure. For styrene in air three repeats were required to estimate the long-term average exposure with a given precision. For styrene in exhaled air, four repeats per worker were necessary, while for the SCEs 20 repeats were necessary. The explanation for this observation is the signal-to-noise ratio, determined by the day-to-day and between-worker variability in exposure, which was more favourable for styrene in air than for the two biomarkers of exposure. Thus, although the biological relevance of a certain exposure surrogate might be optimal, the performance in an exposure-response analysis can still be poor because of a limited signal-to-noise ratio, leading to misclassification error.
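The link between the signal-to-noise ratio and the number of repeats can be sketched with a standard measurement-error result, used here as an assumption and not necessarily the calculation made by Rappaport et al. (1995). If each worker's long-term exposure is estimated from the mean of n repeats, the observed exposure-response slope is attenuated by the factor 1 / (1 + λ/n), where λ is the ratio of within-worker to between-worker variance. The variance ratios below are hypothetical and are not taken from the study.

```python
import math


def slope_attenuation(variance_ratio, n_repeats):
    """Ratio of observed to true slope when using the mean of n repeats.

    variance_ratio is the within-worker / between-worker variance ratio.
    """
    return 1.0 / (1.0 + variance_ratio / n_repeats)


def repeats_needed(variance_ratio, min_slope_ratio=0.9):
    """Smallest n that keeps the attenuation factor at or above min_slope_ratio."""
    n = variance_ratio * min_slope_ratio / (1.0 - min_slope_ratio)
    # Small tolerance guards against floating-point overshoot at exact integers.
    return max(1, math.ceil(n - 1e-9))


# Hypothetical variance ratios for three exposure measures.
for label, ratio in [("low noise", 0.25), ("moderate noise", 0.5), ("high noise", 2.5)]:
    n = repeats_needed(ratio)
    print(f"{label}: ratio {ratio}, repeats needed {n}, "
          f"attenuation {slope_attenuation(ratio, n):.2f}")
```

The sketch shows the general pattern described above: the noisier a measure is relative to the true differences between workers, the more repeats are needed before it performs well in an exposure-response analysis.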

Droz (1991) applied pharmacokinetic modelling to study advantages of exposure assessment strategies based on air sampling compared to biomonitoring strategies dependent on the half-life of the agent. He showed that biological monitoring is also greatly affected by biological variability, which is not related to variability of the toxicological test. He suggested that no statistical advantage exists in using biological indicators when the half-life of the agent considered is smaller than about ten hours.

Although one might tend to decide to measure the environmental exposure instead of a biological indicator of an effect because of variability in the variable measured, additional arguments can be found for choosing a biomarker, even when this would lead to a greater measurement effort, such as when a considerable dermal exposure is present. For agents like pesticides and some organic solvents, dermal exposure can be of greater relevance than the exposure through the air. A biomarker of exposure would include this route of exposure, while measuring of dermal exposure is complex and results are not easily interpretable (Boleij et al. 1995). Early studies among agricultural workers using “pads” to assess dermal exposure showed remarkable distributions of pesticides over the body surface, depending on the tasks of the worker. However, because little information is available on skin uptake, exposure profiles cannot yet be used to estimate an internal dose.

Biomarkers can also have considerable advantages in cancer epidemiology. When a biomarker is an early marker of the effect, its use could result in reduction of the follow-up period. Although validation studies are required, biomarkers of exposure or individual susceptibility could result in more powerful epidemiological studies and more precise risk estimates.

Time Window Analysis

Parallel to the development of pharmacokinetic modelling, epidemiologists have explored new approaches in the data analysis phase such as “time frame analysis” to relate relevant exposure periods to endpoints, and to implement effects of temporal patterns in the exposure or peak exposures in occupational cancer epidemiology (Checkoway and Rice 1992). Conceptually this technique is related to pharmacokinetic modelling since the relationship between exposure and outcome is optimized by putting weights on different exposure periods, exposure patterns and exposure levels. In pharmacokinetic modelling these weights are believed to have a physiological meaning and are estimated beforehand. In time frame analysis the weights are estimated from the data on the basis of statistical criteria. Examples of this approach are given by Hodgson and Jones (1990), who analysed the relationship between radon gas exposure and lung cancer in a cohort of UK tin miners, and by Seixas, Robins and Becker (1993), who analysed the relationship between dust exposure and respiratory health in a cohort of US coal miners. A very interesting study underlining the relevance of time window analysis is the one by Peto et al. (1982).

They showed that mesothelioma death rates appeared to be proportional to some function of time since first exposure and cumulative exposure in a cohort of insulation workers. Time since first exposure was of particular relevance because this variable was an approximation of the time required for a fibre to migrate from its place of deposition in the lungs to the pleura. This example shows how kinetics of deposition and migration determine the risk function to a large extent. A potential problem with time frame analysis is that it requires detailed information on exposure periods and exposure levels, which hampers its application in many studies of chronic disease outcomes.
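The idea of putting weights on different exposure periods can be illustrated with the simplest possible weighting: exposure inside a chosen time window counts fully and exposure outside it counts as zero. The sketch below is illustrative only; the exposure history, the window boundaries and the function name are hypothetical, and in a real time frame analysis the window or weights would be estimated from the data.

```python
def window_exposure(history, event_year, start_lag, end_lag):
    """Cumulative exposure received between start_lag and end_lag years before the event.

    history maps calendar year -> annual mean exposure (arbitrary units).
    start_lag is the older boundary, end_lag the more recent one.
    """
    first_year = event_year - start_lag
    last_year = event_year - end_lag
    return sum(level for year, level in history.items()
               if first_year <= year <= last_year)


# Hypothetical job history: high exposure in the 1960s, low exposure afterwards.
history = {year: 4.0 for year in range(1960, 1970)}
history.update({year: 1.0 for year in range(1970, 1990)})

total = sum(history.values())
early = window_exposure(history, event_year=1995, start_lag=35, end_lag=20)
recent = window_exposure(history, event_year=1995, start_lag=10, end_lag=0)
print(f"total: {total}, 20-35 years before: {early}, last 10 years: {recent}")
```

Comparing how well the total, early-window and recent-window measures predict the outcome is one way to explore which exposure period is most relevant, provided the exposure history is known in enough detail.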

Concluding Remarks

In conclusion, the underlying principles of pharmacokinetic modelling and time frame or time window analysis are widely recognized. Knowledge in this area has mainly been used to develop exposure assessment strategies. More elaborate use of these approaches, however, requires a considerable research effort and still has to be developed. The number of applications is therefore still limited. Relatively simple applications, such as the development of better exposure assessment strategies dependent on the endpoint, have found wider use. An important issue in the development of biomarkers of exposure or effect is validation of these indices. It is often assumed that a measurable biomarker can predict health risk better than traditional methods. Unfortunately, very few validation studies substantiate this assumption.

Appendix: Overall Evaluations of Carcinogenicity to Humans: IARC Monographs Volumes 1-69 (836)

Group 1—Carcinogenic to Humans (74)

Agents and groups of agents

Aflatoxins [1402-68-2] (1993)

4-Aminobiphenyl [92-67-1]

Arsenic [7440-38-2] and arsenic compounds2

Asbestos [1332-21-4]

Azathioprine [446-86-6]

Benzene [71-43-2]

Benzidine [92-87-5]

Beryllium [7440-41-7] and beryllium compounds (1993)3

Bis(2-chloroethyl)-2-naphthylamine (Chlornaphazine) [494-03-1]

Bis(chloromethyl)ether [542-88-1] and chloromethyl methyl ether [107-30-2] (technical-grade)

1,4-Butanediol dimethanesulphonate (Myleran) [55-98-1]

Cadmium [7440-43-9] and cadmium compounds (1993)3

Chlorambucil [305-03-3]

1-(2-Chloroethyl)-3-(4-methylcyclohexyl)-1-nitrosourea (Methyl-CCNU; Semustine) [13909-09-6]

Chromium[VI] compounds (1990)3

Ciclosporin [79217-60-0] (1990)

Cyclophosphamide [50-18-0] [6055-19-2]

Diethylstilboestrol [56-53-1]

Erionite [66733-21-9]

Ethylene oxide4 [75-21-8] (1994)

Helicobacter pylori (infection with) (1994)

Hepatitis B virus (chronic infection with) (1993)

Hepatitis C virus (chronic infection with) (1993)

Human papillomavirus type 16 (1995)

Human papillomavirus type 18 (1995)

Human T-cell lymphotropic virus type I (1996)

Melphalan [148-82-3]

8-Methoxypsoralen (Methoxsalen) [298-81-7] plus ultraviolet A radiation

MOPP and other combined chemotherapy including alkylating agents

Mustard gas (Sulphur mustard) [505-60-2]

2-Naphthylamine [91-59-8]

Nickel compounds (1990)3

Oestrogen replacement therapy

Oestrogens, nonsteroidal2

Oestrogens, steroidal2

Opisthorchis viverrini (infection with) (1994)

Oral contraceptives, combined5

Oral contraceptives, sequential

Radon [10043-92-2] and its decay products (1988)

Schistosoma haematobium (infection with) (1994)

Silica [14808-60-7] crystalline (inhaled in the form of quartz or cristobalite from occupational sources)

Solar radiation (1992)

Talc containing asbestiform fibres

Tamoxifen [10540-29-1]6

Thiotepa [52-24-4] (1990)

Treosulphan [299-75-2]

Vinyl chloride [75-01-4]

Mixtures

Alcoholic beverages (1988)

Analgesic mixtures containing phenacetin

Betel quid with tobacco

Coal-tar pitches [65996-93-2]

Coal-tars [8007-45-2]

Mineral oils, untreated and mildly treated

Salted fish (Chinese-style) (1993)

Shale oils [68308-34-9]

Soots

Tobacco products, smokeless

Tobacco smoke

Wood dust

Exposure circumstances

Aluminium production

Auramine, manufacture of

Boot and shoe manufacture and repair

Coal gasification

Coke production

Furniture and cabinet making

Haematite mining (underground) with exposure to radon

Iron and steel founding

Isopropanol manufacture (strong-acid process)

Magenta, manufacture of (1993)

Painter (occupational exposure as a) (1989)

Rubber industry

Strong-inorganic-acid mists containing sulphuric acid (occupational exposure to) (1992)

Group 2A—Probably carcinogenic to humans (56)

Agents and groups of agents

Acrylamide [79-06-1] (1994)8

Acrylonitrile [107-13-1]

Adriamycin8 [23214-92-8]

Androgenic (anabolic) steroids

Azacitidine8 [320-67-2] (1990)

Benz[a]anthracene8 [56-55-3]

Benzidine-based dyes8

Benzo[a]pyrene8 [50-32-8]

Bischloroethyl nitrosourea (BCNU) [154-93-8]

1,3-Butadiene [106-99-0] (1992)

Captafol [2425-06-1] (1991)

Chloramphenicol [56-75-7] (1990)

1-(2-Chloroethyl)-3-cyclohexyl-1-nitrosourea8 (CCNU) [13010-47-4]

p-Chloro-o-toluidine [95-69-2] and its strong acid salts (1990)3

Chlorozotocin8 [54749-90-5] (1990)

Cisplatin8 [15663-27-1]

Clonorchis sinensis (infection with)8 (1994)

Dibenz[a,h]anthracene8 [53-70-3]

Diethyl sulphate [64-67-5] (1992)

Dimethylcarbamoyl chloride8 [79-44-7]

Dimethyl sulphate8 [77-78-1]

Epichlorohydrin8 [106-89-8]

Ethylene dibromide8 [106-93-4]

N-Ethyl-N-nitrosourea8 [759-73-9]

Formaldehyde [50-00-0]

IQ8 (2-Amino-3-methylimidazo[4,5-f]quinoline) [76180-96-6] (1993)

5-Methoxypsoralen8 [484-20-8]

4,4´-Methylene bis(2-chloroaniline) (MOCA)8 [101-14-4] (1993)

N-Methyl-N´-nitro-N-nitrosoguanidine8 (MNNG) [70-25-7]

N-Methyl-N-nitrosourea8 [684-93-5]

Nitrogen mustard [51-75-2]

N-Nitrosodiethylamine8 [55-18-5]

N-Nitrosodimethylamine8 [62-75-9]

Phenacetin [62-44-2]

Procarbazine hydrochloride8 [366-70-1]

Tetrachloroethylene [127-18-4]

Trichloroethylene [79-01-6]

Styrene-7,8-oxide8 [96-09-3] (1994)

Tris(2,3-dibromopropyl)phosphate8 [126-72-7]

Ultraviolet radiation A8 (1992)

Ultraviolet radiation B8 (1992)

Ultraviolet radiation C8 (1992)

Vinyl bromide6 [593-60-2]

Vinyl fluoride [75-02-5]

Mixtures

Creosotes [8001-58-9]

Diesel engine exhaust (1989)

Hot mate (1991)

Non-arsenical insecticides (occupational exposures in spraying and application of) (1991)

Polychlorinated biphenyls [1336-36-3]

Exposure circumstances

Art glass, glass containers and pressed ware (manufacture of) (1993)

Hairdresser or barber (occupational exposure as a) (1993)

Petroleum refining (occupational exposures in) (1989)

Sunlamps and sunbeds (use of) (1992)

Group 2B—Possibly carcinogenic to humans (225)

Agents and groups of agents

A–α–C (2-Amino-9H-pyrido[2,3-b]indole) [26148-68-5]

Acetaldehyde [75-07-0]

Acetamide [60-35-5]

AF-2 [2-(2-Furyl)-3-(5-nitro-2-furyl)acrylamide] [3688-53-7]

Aflatoxin M1 [6795-23-9] (1993)

p-Aminoazobenzene [60-09-3]

o-Aminoazotoluene [97-56-3]

2-Amino-5-(5-nitro-2-furyl)-1,3,4-thiadiazole [712-68-5]

Amitrole [61-82-5]

o-Anisidine [90-04-0]

Antimony trioxide [1309-64-4] (1989)

Aramite [140-57-8]

Atrazine9 [1912-24-9] (1991)

Auramine [492-80-8] (technical-grade)

Azaserine [115-02-6]

Benzo[b]fluoranthene [205-99-2]

Benzo[j]fluoranthene [205-82-3]

Benzo[k]fluoranthene [207-08-9]

Benzyl violet 4B [1694-09-3]

Bleomycins [11056-06-7]

Bracken fern

Bromodichloromethane [75-27-4] (1991)

Butylated hydroxyanisole (BHA) [25013-16-5]

β-Butyrolactone [3068-88-0]

Caffeic acid [331-39-5] (1993)

Carbon-black extracts

Carbon tetrachloride [56-23-5]

Ceramic fibres

Chlordane [57-74-9] (1991)

Chlordecone (Kepone) [143-50-0]

Chlorendic acid [115-28-6] (1990)

α-Chlorinated toluenes (benzyl chloride, benzal chloride, benzotrichloride)

p-Chloroaniline [106-47-8] (1993)

Chloroform [67-66-3]

1-Chloro-2-methylpropene [513-37-1]

Chlorophenols

Chlorophenoxy herbicides

4-Chloro-o-phenylenediamine [95-83-0]

CI Acid Red 114 [6459-94-5] (1993)

CI Basic Red 9 [569-61-9] (1993)

CI Direct Blue 15 [2429-74-5] (1993)

Citrus Red No. 2 [6358-53-8]

Cobalt [7440-48-4] and cobalt compounds3 (1991)

p-Cresidine [120-71-8]

Cycasin [14901-08-7]

Dacarbazine [4342-03-4]

Dantron (Chrysazin; 1,8-Dihydroxyanthraquinone) [117-10-2] (1990)

Daunomycin [20830-81-3]

DDT [p,p´-DDT, 50-29-3] (1991)

N,N´-Diacetylbenzidine [613-35-4]

2,4-Diaminoanisole [615-05-4]

4,4´-Diaminodiphenyl ether [101-80-4]

2,4-Diaminotoluene [95-80-7]

Dibenz[a,h]acridine [226-36-8]

Dibenz[a,j]acridine [224-42-0]

7H-Dibenzo[c,g]carbazole [194-59-2]

Dibenzo[a,e]pyrene [192-65-4]

Dibenzo[a,h]pyrene [189-64-0]

Dibenzo[a,i]pyrene [189-55-9]

Dibenzo[a,l]pyrene [191-30-0]

1,2-Dibromo-3-chloropropane [96-12-8]

p-Dichlorobenzene [106-46-7]

3,3´-Dichlorobenzidine [91-94-1]

3,3´-Dichloro-4,4´-diaminodiphenyl ether [28434-86-8]

1,2-Dichloroethane [107-06-2]

Dichloromethane (methylene chloride) [75-09-2]

1,3-Dichloropropene [542-75-6] (technical grade)

Dichlorvos [62-73-7] (1991)

Diepoxybutane [1464-53-5]

Di(2-ethylhexyl)phthalate [117-81-7]

1,2-Diethylhydrazine [1615-80-1]

Diglycidyl resorcinol ether [101-90-6]

Dihydrosafrole [94-58-6]

Diisopropyl sulphate [2973-10-6] (1992)

3,3´-Dimethoxybenzidine (o-Dianisidine) [119-90-4]

p-Dimethylaminoazobenzene [60-11-7]

trans-2-[(Dimethylamino)methylimino]-5-[2-(5-nitro-2-furyl)-vinyl]-1,3,4-oxadiazole [25962-77-0]

2,6-Dimethylaniline (2,6-xylidine) [87-62-7] (1993)

3,3´-Dimethylbenzidine (o-tolidine) [119-93-7]

Dimethylformamide [68-12-2] (1989)

1,1-Dimethylhydrazine [57-14-7]

1,2-Dimethylhydrazine [540-73-8]

3,7-Dinitrofluoranthene [105735-71-5]

3,9-Dinitrofluoranthene [22506-53-2]

1,6-Dinitropyrene [42397-64-8] (1989)

1,8-Dinitropyrene [42397-65-9] (1989)

2,4-Dinitrotoluene [121-14-2]

2,6-Dinitrotoluene [606-20-2]

1,4-Dioxane [123-91-1]

Disperse Blue 1 [2475-45-8] (1990)

Ethyl acrylate [140-88-5]

Ethylene thiourea [96-45-7]

Ethyl methanesulphonate [62-50-0]

2-(2-Formylhydrazino)-4-(5-nitro-2-furyl)thiazole [3570-75-0]

Glass wool (1988)

Glu-P-1 (2-amino-6-methyldipyrido[1,2-a:3´,2´-d]imidazole) [67730-11-4]

Glu-P-2 (2-aminodipyrido[1,2-a:3´,2´-d]imidazole) [67730-10-3]

Glycidaldehyde [765-34-4]

Griseofulvin [126-07-8]

HC Blue No. 1 [2784-94-3] (1993)

Heptachlor [76-44-8] (1991)

Hexachlorobenzene [118-74-1]

Hexachlorocyclohexanes

Hexamethylphosphoramide [680-31-9]

Human immunodeficiency virus type 2 (infection with) (1996)

Human papillomaviruses: some types other than 16, 18, 31 and 33 (1995)

Hydrazine [302-01-2]

Indeno[1,2,3-cd]pyrene [193-39-5]

Iron-dextran complex [9004-66-4]

Isoprene [78-79-5] (1994)

Lasiocarpine [303-34-4]

Lead [7439-92-1] and lead compounds, inorganic3

Magenta [632-99-5] (containing CI Basic Red 9) (1993)

MeA-α-C (2-Amino-3-methyl-9H-pyrido[2,3-b]indole) [68006-83-7]

Medroxyprogesterone acetate [71-58-9]

MeIQ (2-Amino-3,4-dimethylimidazo[4,5-f]quinoline) [77094-11-2] (1993)

MeIQx (2-Amino-3,8-dimethylimidazo[4,5-f]quinoxaline) [77500-04-0] (1993)

Merphalan [531-76-0]

2-Methylaziridine (propyleneimine) [75-55-8]

Methylazoxymethanol acetate [592-62-1]

5-Methylchrysene [3697-24-3]

4,4´-Methylene bis(2-methylaniline) [838-88-0]

4,4´-Methylenedianiline [101-77-9]

Methylmercury compounds (1993)³

Methyl methanesulphonate [66-27-3]

2-Methyl-1-nitroanthraquinone [129-15-7] (uncertain purity)

N-Methyl-N-nitrosourethane [615-53-2]

Methylthiouracil [56-04-2]

Metronidazole [443-48-1]

Mirex [2385-85-5]

Mitomycin C [50-07-7]

Monocrotaline [315-22-0]

5-(Morpholinomethyl)-3-[(5-nitrofurfurylidene)amino]-2-oxazolidinone [3795-88-8]

Nafenopin [3771-19-5]

Nickel, metallic [7440-02-0] (1990)

Niridazole [61-57-4]

Nitrilotriacetic acid [139-13-9] and its salts (1990)³

5-Nitroacenaphthene [602-87-9]

2-Nitroanisole [91-23-6] (1996)

Nitrobenzene [98-95-3] (1996)

6-Nitrochrysene [7496-02-8] (1989)

Nitrofen [1836-75-5], technical-grade

2-Nitrofluorene [607-57-8] (1989)

1-[(5-Nitrofurfurylidene)amino]-2-imidazolidinone [555-84-0]

N-[4-(5-Nitro-2-furyl)-2-thiazolyl]acetamide [531-82-8]

Nitrogen mustard N-oxide [126-85-2]

2-Nitropropane [79-46-9]

1-Nitropyrene [5522-43-0] (1989)

4-Nitropyrene [57835-92-4] (1989)

N-Nitrosodi-n-butylamine [924-16-3]

N-Nitrosodiethanolamine [1116-54-7]

N-Nitrosodi-n-propylamine [621-64-7]

3-(N-Nitrosomethylamino)propionitrile [60153-49-3]

4-(N-Nitrosomethylamino)-1-(3-pyridyl)-1-butanone (NNK) [64091-91-4]

N-Nitrosomethylethylamine [10595-95-6]

N-Nitrosomethylvinylamine [4549-40-0]

N-Nitrosomorpholine [59-89-2]

N′-Nitrosonornicotine [16543-55-8]

N-Nitrosopiperidine [100-75-4]

N-Nitrosopyrrolidine [930-55-2]

N-Nitrososarcosine [13256-22-9]

Ochratoxin A [303-47-9] (1993)

Oil Orange SS [2646-17-5]

Oxazepam [604-75-1] (1996)

Palygorskite (attapulgite) [12174-11-7] (long fibres, > 5 micrometres) (1997)

Panfuran S (containing dihydroxymethylfuratrizine [794-93-4])

Pentachlorophenol [87-86-5] (1991)

Phenazopyridine hydrochloride [136-40-3]

Phenobarbital [50-06-6]

Phenoxybenzamine hydrochloride [63-92-3]

Phenyl glycidyl ether [122-60-1] (1989)

Phenytoin [57-41-0]

PhIP (2-Amino-1-methyl-6-phenylimidazo[4,5-b]pyridine) [105650-23-5] (1993)

Ponceau MX [3761-53-3]

Ponceau 3R [3564-09-8]

Potassium bromate [7758-01-2]

Progestins

1,3-Propane sultone [1120-71-4]

β-Propiolactone [57-57-8]

Propylene oxide [75-56-9] (1994)

Propylthiouracil [51-52-5]

Rockwool (1988)

Saccharin [81-07-2]

Safrole [94-59-7]

Schistosoma japonicum (infection with) (1994)

Slagwool (1988)

Sodium o-phenylphenate [132-27-4]

Sterigmatocystin [10048-13-2]

Streptozotocin [18883-66-4]

Styrene [100-42-5] (1994)

Sulfallate [95-06-7]

Tetranitromethane [509-14-8] (1996)

Thioacetamide [62-55-5]

4,4´-Thiodianiline [139-65-1]

Thiourea [62-56-6]

Toluene diisocyanates [26471-62-5]

o-Toluidine [95-53-4]

Trichlormethine (Trimustine hydrochloride) [817-09-4] (1990)

Trp-P-1 (3-Amino-1,4-dimethyl-5H-pyrido[4,3-b]indole) [62450-06-0]

Trp-P-2 (3-Amino-1-methyl-5H-pyrido[4,3-b]indole) [62450-07-1]

Trypan blue [72-57-1]

Uracil mustard [66-75-1]

Urethane [51-79-6]

Vinyl acetate [108-05-4] (1995)

4-Vinylcyclohexene [100-40-3] (1994)

4-Vinylcyclohexene diepoxide [107-87-6] (1994)

Mixtures

Bitumens [8052-42-4], extracts of steam-refined and air-refined

Carrageenan [9000-07-1], degraded

Chlorinated paraffins of average carbon chain length C12 and average degree of chlorination approximately 60% (1990)

Coffee (urinary bladder)⁹ (1991)

Diesel fuel, marine (1989)

Engine exhaust, gasoline (1989)

Fuel oils, residual (heavy) (1989)

Gasoline (1989)

Pickled vegetables (traditional in Asia) (1993)

Polybrominated biphenyls [Firemaster BP-6, 59536-65-1]

Toxaphene (Polychlorinated camphenes) [8001-35-2]

Toxins derived from Fusarium moniliforme (1993)

Welding fumes (1990)

Exposure circumstances

Carpentry and joinery

Dry cleaning (occupational exposures in) (1995)

Printing processes (occupational exposures in) (1996)

Textile manufacturing industry (work in) (1990)

Group 3—Unclassifiable as to carcinogenicity to humans (480)

Agents and groups of agents

Acridine orange [494-38-2]

Acriflavinium chloride [8018-07-3]

Acrolein [107-02-8]

Acrylic acid [79-10-7]

Acrylic fibres

Acrylonitrile-butadiene-styrene copolymers

Actinomycin D [50-76-0]

Aldicarb [116-06-3] (1991)

Aldrin [309-00-2]

Allyl chloride [107-05-1]

Allyl isothiocyanate [57-06-7]

Allyl isovalerate [2835-39-4]

Amaranth [915-67-3]

5-Aminoacenaphthene [4657-93-6]

2-Aminoanthraquinone [117-79-3]

p-Aminobenzoic acid [150-13-0]

1-Amino-2-methylanthraquinone [82-28-0]

2-Amino-4-nitrophenol [99-57-0] (1993)

2-Amino-5-nitrophenol [121-88-0] (1993)

4-Amino-2-nitrophenol [119-34-6]

2-Amino-5-nitrothiazole [121-66-4]

11-Aminoundecanoic acid [2432-99-7]

Ampicillin [69-53-4] (1990)

Anaesthetics, volatile

Angelicin [523-50-2] plus ultraviolet A radiation

Aniline [62-53-3]

p-Anisidine [104-94-9]

Anthanthrene [191-26-4]

Anthracene [120-12-7]

Anthranilic acid [118-92-3]

Antimony trisulphide [1345-04-6] (1989)

Apholate [52-46-0]

p-Aramid fibrils [24938-64-5] (1997)

Aurothioglucose [12192-57-3]

Aziridine [151-56-4]

2-(1-Aziridinyl)ethanol [1072-52-2]

Aziridyl benzoquinone [800-24-8]

Azobenzene [103-33-3]

Benz[a]acridine [225-11-6]

Benz[c]acridine [225-51-4]

Benzo[ghi]fluoranthene [203-12-3]

Benzo[a]fluorene [238-84-6]

Benzo[b]fluorene [243-17-4]

Benzo[c]fluorene [205-12-9]

Benzo[ghi]perylene [191-24-2]

Benzo[c]phenanthrene [195-19-7]

Benzo[e]pyrene [192-97-2]

p-Benzoquinone dioxime [105-11-3]

Benzoyl chloride [98-88-4]

Benzoyl peroxide [94-36-0]

Benzyl acetate [140-11-4]

Bis(1-aziridinyl)morpholinophosphine sulphide [2168-68-5]

Bis(2-chloroethyl)ether [111-44-4]

1,2-Bis(chloromethoxy)ethane [13483-18-6]

1,4-Bis(chloromethoxymethyl)benzene [56894-91-8]

Bis(2-chloro-1-methylethyl)ether [108-60-1]

Bis(2,3-epoxycyclopentyl)ether [2386-90-5] (1989)

Bisphenol A diglycidyl ether [1675-54-3] (1989)

Bisulphites (1992)

Blue VRS [129-17-9]

Brilliant Blue FCF, disodium salt [3844-45-9]

Bromochloroacetonitrile [83463-62-1] (1991)

Bromoethane [74-96-4] (1991)

Bromoform [75-25-2] (1991)

n-Butyl acrylate [141-32-2]

Butylated hydroxytoluene (BHT) [128-37-0]

Butyl benzyl phthalate [85-68-7]

γ-Butyrolactone [96-48-0]

Caffeine [58-08-2] (1991)

Cantharidin [56-25-7]

Captan [133-06-2]

Carbaryl [63-25-2]

Carbazole [86-74-8]

3-Carbethoxypsoralen [20073-24-9]

Carmoisine [3567-69-9]

Carrageenan [9000-07-1], native

Catechol [120-80-9]

Chloral [75-87-6] (1995)

Chloral hydrate [302-17-0] (1995)

Chlordimeform [6164-98-3]

Chlorinated dibenzodioxins (other than TCDD)

Chlorinated drinking-water (1991)

Chloroacetonitrile [107-14-2] (1991)

Chlorobenzilate [510-15-6]

Chlorodibromomethane [124-48-1] (1991)

Chlorodifluoromethane [75-45-6]

Chloroethane [75-00-3] (1991)

Chlorofluoromethane [593-70-4]

3-Chloro-2-methylpropene [563-47-3] (1995)

4-Chloro-m-phenylenediamine [5131-60-2]

Chloronitrobenzenes [88-73-3; 121-73-3; 100-00-5] (1996)

Chloroprene [126-99-8]

Chloropropham [101-21-3]

Chloroquine [54-05-7]

Chlorothalonil [1897-45-6]

2-Chloro-1,1,1-trifluoroethane [75-88-7]

Cholesterol [57-88-5]

Chromium[III] compounds (1990)

Chromium [7440-47-3], metallic (1990)

Chrysene [218-01-9]

Chrysoidine [532-82-1]

CI Acid Orange 3 [6373-74-6] (1993)

Cimetidine [51481-61-9] (1990)

Cinnamyl anthranilate [87-29-6]

CI Pigment Red 3 [2425-85-6] (1993)

Citrinin [518-75-2]

Clofibrate [637-07-0]

Clomiphene citrate [50-41-9]

Coal dust (1997)

Copper 8-hydroxyquinoline [10380-28-6]

Coronene [191-07-1]

Coumarin [91-64-5]

m-Cresidine [102-50-1]

Crotonaldehyde [4170-30-3] (1995)

Cyclamates [sodium cyclamate, 139-05-9]

Cyclochlorotine [12663-46-6]

Cyclohexanone [108-94-1] (1989)

Cyclopenta[cd]pyrene [27208-37-3]

D & C Red No. 9 [5160-02-1] (1993)

Dapsone [80-08-0]

Decabromodiphenyl oxide [1163-19-5] (1990)

Deltamethrin [52918-63-5] (1991)

Diacetylaminoazotoluene [83-63-6]

Diallate [2303-16-4]

1,2-Diamino-4-nitrobenzene [99-56-9]

1,4-Diamino-2-nitrobenzene [5307-14-2] (1993)

2,5-Diaminotoluene [95-70-5]

Diazepam [439-14-5]

Diazomethane [334-88-3]

Dibenz[a,c]anthracene [215-58-7]

Dibenz[a,j]anthracene [224-41-9]

Dibenzo-p-dioxin (1997)

Dibenzo[a,e]fluoranthene [5385-75-1]

Dibenzo[h,rst]pentaphene [192-47-2]

Dibromoacetonitrile [3252-43-5] (1991)

Dichloroacetic acid [79-43-6] (1995)

Dichloroacetonitrile [3018-12-0] (1991)

Dichloroacetylene [7572-29-4]

o-Dichlorobenzene [95-50-1]

trans-1,4-Dichlorobutene [110-57-6]

2,6-Dichloro-para-phenylenediamine [609-20-1]

1,2-Dichloropropane [78-87-5]

Dicofol [115-32-2]

Dieldrin [60-57-1]

Di(2-ethylhexyl)adipate [103-23-1]

Dihydroxymethylfuratrizine [794-93-4]

Dimethoxane [828-00-2]

3,3´-Dimethoxybenzidine-4,4´-diisocyanate [91-93-0]

p-Dimethylaminoazobenzenediazo sodium sulphonate [140-56-7]

4,4´-Dimethylangelicin [22975-76-4] plus ultraviolet A radiation

4,5´-Dimethylangelicin [4063-41-6] plus ultraviolet A radiation

N,N-Dimethylaniline [121-69-7] (1993)

Dimethyl hydrogen phosphite [868-85-9] (1990)

1,4-Dimethylphenanthrene [22349-59-3]

1,3-Dinitropyrene [75321-20-9] (1989)

Dinitrosopentamethylenetetramine [101-25-7]

2,4´-Diphenyldiamine [492-17-1]

Disperse Yellow 3 [2832-40-8] (1990)

Disulfiram [97-77-8]

Dithranol [1143-38-0]

Doxefazepam [40762-15-0] (1996)

Droloxifene [82413-20-5] (1996)

Dulcin [150-69-6]

Endrin [72-20-8]

Eosin [15086-94-9]

1,2-Epoxybutane [106-88-7] (1989)

3,4-Epoxy-6-methylcyclohexylmethyl-3,4-epoxy-6-methylcyclohexane carboxylate [141-37-7]

cis-9,10-Epoxystearic acid [2443-39-2]

Estazolam [29975-16-4] (1996)

Ethionamide [536-33-4]

Ethylene [74-85-1] (1994)

Ethylene sulphide [420-12-2]

2-Ethylhexyl acrylate [103-11-7] (1994)

Ethyl selenac [5456-28-0]

Ethyl tellurac [20941-65-5]

Eugenol [97-53-0]

Evans blue [314-13-6]

Fast Green FCF [2353-45-9]

Fenvalerate [51630-58-1] (1991)

Ferbam [14484-64-1]

Ferric oxide [1309-37-1]

Fluometuron [2164-17-2]

Fluoranthene [206-44-0]

Fluorene [86-73-7]

Fluorescent lighting (1992)

Fluorides (inorganic, used in drinking-water)

5-Fluorouracil [51-21-8]

Furazolidone [67-45-8]

Furfural [98-01-1] (1995)

Furosemide (Frusemide) [54-31-9] (1990)

Gemfibrozil [25812-30-0] (1996)

Glass filaments (1988)

Glycidyl oleate [5431-33-4]

Glycidyl stearate [7460-84-6]

Guinea Green B [4680-78-8]

Gyromitrin [16568-02-8]

Haematite [1317-60-8]

HC Blue No. 2 [33229-34-4] (1993)

HC Red No. 3 [2871-01-4] (1993)

HC Yellow No. 4 [59820-43-8] (1993)

Hepatitis D virus (1993)

Hexachlorobutadiene [87-68-3]

Hexachloroethane [67-72-1]

Hexachlorophene [70-30-4]

Human T-cell lymphotropic virus type II (1996)

Hycanthone mesylate [23255-93-8]

Hydralazine [86-54-4]