Children categories

27. Biological Monitoring (6)

27. Biological Monitoring

Chapter Editor: Robert Lauwerys

Table of Contents

Tables and Figures

General Principles

Vito Foà and Lorenzo Alessio

Quality Assurance

D. Gompertz

Metals and Organometallic Compounds

P. Hoet and Robert Lauwerys

Organic Solvents

Masayuki Ikeda

Genotoxic Chemicals

Marja Sorsa

Pesticides

Marco Maroni and Adalberto Ferioli

Tables

Click a link below to view table in article context.

1. ACGIH, DFG & other limit values for metals

2. Examples of chemicals & biological monitoring

3. Biological monitoring for organic solvents

4. Genotoxicity of chemicals evaluated by IARC

5. Biomarkers & some cell/tissue samples & genotoxicity

6. Human carcinogens, occupational exposure & cytogenetic end points

8. Exposure from production & use of pesticides

9. Acute OP toxicity at different levels of ACHE inhibition

10. Variations of ACHE & PCHE & selected health conditions

11. Cholinesterase activities of unexposed healthy people

12. Urinary alkyl phosphates & OP pesticides

13. Urinary alkyl phosphates measurements & OP

14. Urinary carbamate metabolites

15. Urinary dithiocarbamate metabolites

16. Proposed indices for biological monitoring of pesticides

17. Recommended biological limit values (as of 1996)

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

28. Epidemiology and Statistics (12)

28. Epidemiology and Statistics

Chapter Editors: Franco Merletti, Colin L. Soskolne and Paolo Vineis

Table of Contents

Tables and Figures

Epidemiological Method Applied to Occupational Health and Safety

Franco Merletti, Colin L. Soskolne and Paolo Vineis

Exposure Assessment

M. Gerald Ott

Summary Worklife Exposure Measures

Colin L. Soskolne

Measuring Effects of Exposures

Shelia Hoar Zahm

Case Study: Measures

Franco Merletti, Colin L. Soskolne and Paola Vineis

Options in Study Design

Sven Hernberg

Validity Issues in Study Design

Annie J. Sasco

Impact of Random Measurement Error

Paolo Vineis and Colin L. Soskolne

Statistical Methods

Annibale Biggeri and Mario Braga

Causality Assessment and Ethics in Epidemiological Research

Paolo Vineis

Case Studies Illustrating Methodological Issues in the Surveillance of Occupational Diseases

Jung-Der Wang

Questionnaires in Epidemiological Research

Steven D. Stellman and Colin L. Soskolne

Asbestos Historical Perspective

Lawrence Garfinkel

Tables

Click a link below to view table in article context.

1. Five selected summary measures of worklife exposure

2. Measures of disease occurrence

3. Measures of association for a cohort study

4. Measures of association for case-control studies

5. General frequency table layout for cohort data

6. Sample layout of case-control data

7. Layout case-control data - one control per case

8. Hypothetical cohort of 1950 individuals to T2

9. Indices of central tendency & dispersion

10. A binomial experiment & probabilities

11. Possible outcomes of a binomial experiment

12. Binomial distribution, 15 successes/30 trials

13. Binomial distribution, p = 0.25; 30 trials

14. Type II error & power; x = 12, n = 30, a = 0.05

15. Type II error & power; x = 12, n = 40, a = 0.05

16. 632 workers exposed to asbestos 20 years or longer

17. O/E number of deaths among 632 asbestos workers

Figures

Point to a thumbnail to see figure caption, click to see the figure in article context.

29. Ergonomics (27)

29. Ergonomics

Chapter Editors: Wolfgang Laurig and Joachim Vedder

Table of Contents

Tables and Figures

Overview

Wolfgang Laurig and Joachim Vedder

Goals, Principles and Methods

The Nature and Aims of Ergonomics

William T. Singleton

Analysis of Activities, Tasks and Work Systems

Véronique De Keyser

Ergonomics and Standardization

Friedhelm Nachreiner

Checklists

Pranab Kumar Nag

Physical and Physiological Aspects

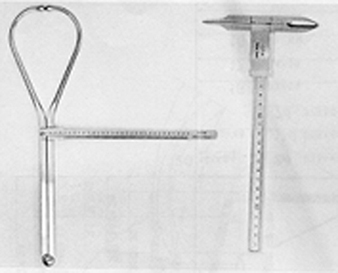

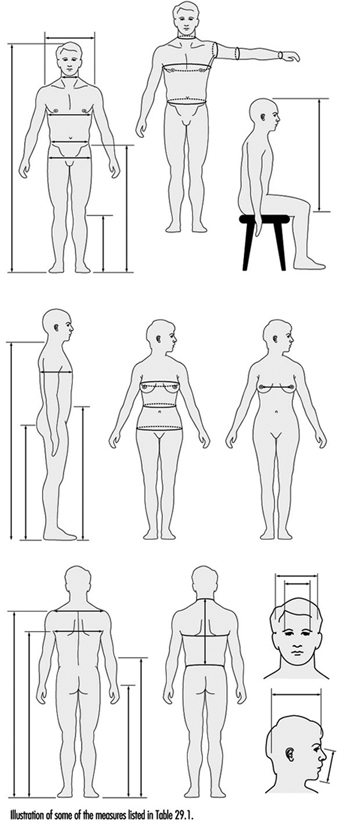

Anthropometry

Melchiorre Masali

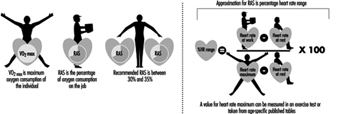

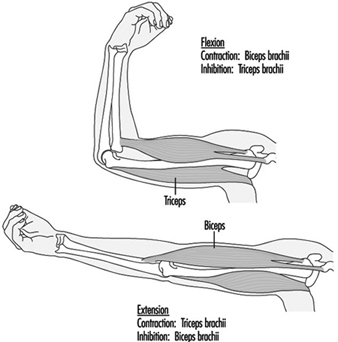

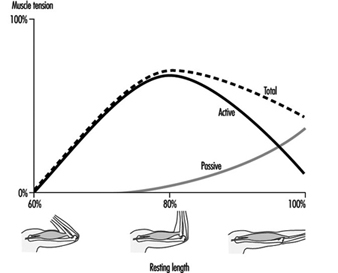

Muscular Work

Juhani Smolander and Veikko Louhevaara

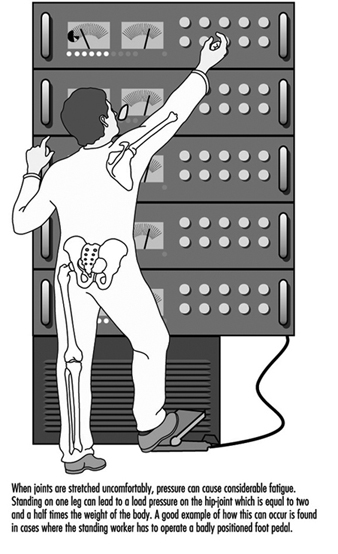

Postures at Work

Ilkka Kuorinka

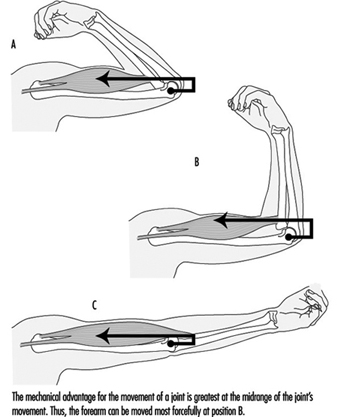

Biomechanics

Frank Darby

General Fatigue

Étienne Grandjean

Fatigue and Recovery

Rolf Helbig and Walter Rohmert

Psychological Aspects

Mental Workload

Winfried Hacker

Vigilance

Herbert Heuer

Mental Fatigue

Peter Richter

Organizational Aspects of Work

Work Organization

Eberhard Ulich and Gudela Grote

Sleep Deprivation

Kazutaka Kogi

Work Systems Design

Workstations

Roland Kadefors

Tools

T.M. Fraser

Controls, Indicators and Panels

Karl H. E. Kroemer

Information Processing and Design

Andries F. Sanders

Designing for Everyone

Designing for Specific Groups

Joke H. Grady-van den Nieuwboer

Case Study: The International Classification of Functional Limitation in People

Cultural Differences

Houshang Shahnavaz

Elderly Workers

Antoine Laville and Serge Volkoff

Workers with Special Needs

Joke H. Grady-van den Nieuwboer

Diversity and Importance of Ergonomics--Two Examples

System Design in Diamond Manufacturing

Issachar Gilad

Disregarding Ergonomic Design Principles: Chernobyl

Vladimir M. Munipov

Tables

Click a link below to view table in article context.

1. Basic anthropometric core list

2. Fatigue & recovery dependent on activity levels

3. Rules of combination effects of two stress factors on strain

4. Differenting among several negative consequences of mental strain

5. Work-oriented principles for production structuring

6. Participation in organizational context

7. User participation in the technology process

8. Irregular working hours & sleep deprivation

9. Aspects of advance, anchor & retard sleeps

10. Control movements & expected effects

11. Control-effect relations of common hand controls

12. Rules for arrangement of controls

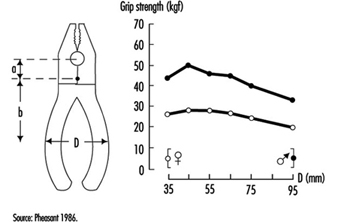

Figures

Point to a thumbnail to see figure caption, click to see the figure in the article context.

30. Occupational Hygiene (6)

30. Occupational Hygiene

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Goals, Definitions and General Information

Berenice I. Ferrari Goelzer

Recognition of Hazards

Linnéa Lillienberg

Evaluation of the Work Environment

Lori A. Todd

Occupational Hygiene: Control of Exposures Through Intervention

James Stewart

The Biological Basis for Exposure Assessment

Dick Heederik

Occupational Exposure Limits

Dennis J. Paustenbach

Tables

1. Hazards of chemical; biological & physical agents

2. Occupational exposure limits (OELs) - various countries

Figures

31. Personal Protection (7)

31. Personal Protection

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Overview and Philosophy of Personal Protection

Robert F. Herrick

Eye and Face Protectors

Kikuzi Kimura

Foot and Leg Protection

Toyohiko Miura

Head Protection

Isabelle Balty and Alain Mayer

Hearing Protection

John R. Franks and Elliott H. Berger

Protective Clothing

S. Zack Mansdorf

Respiratory Protection

Thomas J. Nelson

Tables

Click a link below to view table in article context.

1. Transmittance requirements (ISO 4850-1979)

2. Scales of protection - gas-welding & braze-welding

3. Scales of protection - oxygen cutting

4. Scales of protection - plasma arc cutting

5. Scales of protection - electric arc welding or gouging

6. Scales of protection - plasma direct arc welding

7. Safety helmet: ISO Standard 3873-1977

8. Noise Reduction Rating of a hearing protector

9. Computing the A-weighted noise reduction

10. Examples of dermal hazard categories

11. Physical, chemical & biological performance requirements

12. Material hazards associated with particular activities

13. Assigned protection factors from ANSI Z88 2 (1992)

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

32. Record Systems and Surveillance (9)

32. Record Systems and Surveillance

Chapter Editor: Steven D. Stellman

Table of Contents

Tables and Figures

Occupational Disease Surveillance and Reporting Systems

Steven B. Markowitz

Occupational Hazard Surveillance

David H. Wegman and Steven D. Stellman

Surveillance in Developing Countries

David Koh and Kee-Seng Chia

Development and Application of an Occupational Injury and Illness Classification System

Elyce Biddle

Risk Analysis of Nonfatal Workplace Injuries and Illnesses

John W. Ruser

Case Study: Worker Protection and Statistics on Accidents and Occupational Diseases - HVBG, Germany

Martin Butz and Burkhard Hoffmann

Case Study: Wismut - A Uranium Exposure Revisited

Heinz Otten and Horst Schulz

Measurement Strategies and Techniques for Occupational Exposure Assessment in Epidemiology

Frank Bochmann and Helmut Blome

Case Study: Occupational Health Surveys in China

Tables

Click a link below to view the table in article context.

1. Angiosarcoma of the liver - world register

2. Occupational illness, US, 1986 versus 1992

3. US Deaths from pneumoconiosis & pleural mesothelioma

4. Sample list of notifiable occupational diseases

5. Illness & injury reporting code structure, US

6. Nonfatal occupational injuries & illnesses, US 1993

7. Risk of occupational injuries & illnesses

8. Relative risk for repetitive motion conditions

9. Workplace accidents, Germany, 1981-93

10. Grinders in metalworking accidents, Germany, 1984-93

11. Occupational disease, Germany, 1980-93

12. Infectious diseases, Germany, 1980-93

13. Radiation exposure in the Wismut mines

14. Occupational diseases in Wismut uranium mines 1952-90

Figures

Point to a thumbnail to see figure caption, click to see the figure in article context.

33. Toxicology (21)

33. Toxicology

Chapter Editor: Ellen K. Silbergeld

Table of Contents

Tables and Figures

Introduction

Ellen K. Silbergeld, Chapter Editor

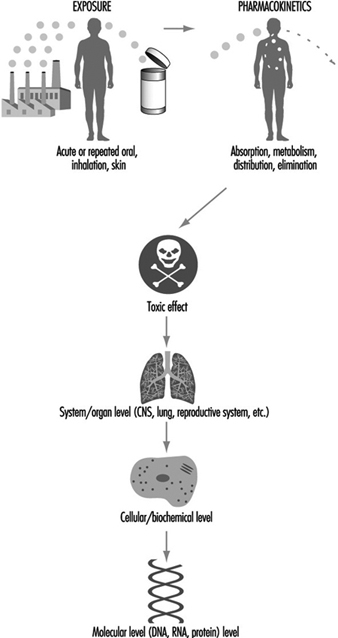

General Principles of Toxicology

Definitions and Concepts

Bo Holmberg, Johan Hogberg and Gunnar Johanson

Toxicokinetics

Dušan Djuríc

Target Organ And Critical Effects

Marek Jakubowski

Effects Of Age, Sex And Other Factors

Spomenka Telišman

Genetic Determinants Of Toxic Response

Daniel W. Nebert and Ross A. McKinnon

Mechanisms of Toxicity

Introduction And Concepts

Philip G. Watanabe

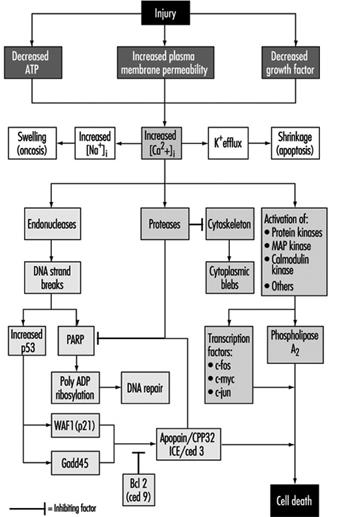

Cellular Injury And Cellular Death

Benjamin F. Trump and Irene K. Berezesky

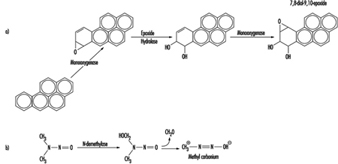

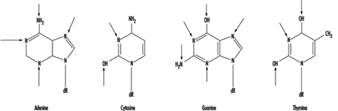

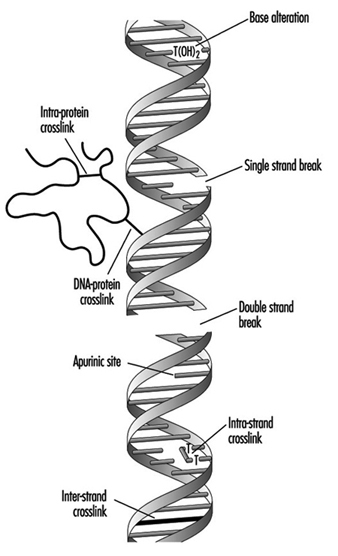

Genetic Toxicology

R. Rita Misra and Michael P. Waalkes

Immunotoxicology

Joseph G. Vos and Henk van Loveren

Target Organ Toxicology

Ellen K. Silbergeld

Toxicology Test Methods

Biomarkers

Philippe Grandjean

Genetic Toxicity Assessment

David M. DeMarini and James Huff

In Vitro Toxicity Testing

Joanne Zurlo

Structure Activity Relationships

Ellen K. Silbergeld

Regulatory Toxicology

Toxicology In Health And Safety Regulation

Ellen K. Silbergeld

Principles Of Hazard Identification - The Japanese Approach

Masayuki Ikeda

The United States Approach to Risk Assessment Of Reproductive Toxicants and Neurotoxic Agents

Ellen K. Silbergeld

Approaches To Hazard Identification - IARC

Harri Vainio and Julian Wilbourn

Appendix - Overall Evaluations of Carcinogenicity to Humans: IARC Monographs Volumes 1-69 (836)

Carcinogen Risk Assessment: Other Approaches

Cees A. van der Heijden

Tables

Click a link below to view table in article context.

- Examples of critical organs & critical effects

- Basic effects of possible multiple interactions of metals

- Haemoglobin adducts in workers exposed to aniline & acetanilide

- Hereditary, cancer-prone disorders & defects in DNA repair

- Examples of chemicals that exhibit genotoxicity in human cells

- Classification of tests for immune markers

- Examples of biomarkers of exposure

- Pros & cons of methods for identifying human cancer risks

- Comparison of in vitro systems for hepatotoxicity studies

- Comparison of SAR & test data: OECD/NTP analyses

- Regulation of chemical substances by laws, Japan

- Test items under the Chemical Substance Control Law, Japan

- Chemical substances & the Chemical Substances Control Law

- Selected major neurotoxicity incidents

- Examples of specialized tests to measure neurotoxicity

- Endpoints in reproductive toxicology

- Comparison of low-dose extrapolations procedures

- Frequently cited models in carcinogen risk characterization

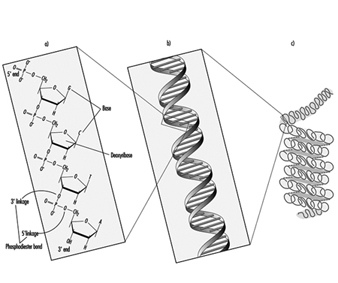

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

Pesticides

Introduction

Human exposure to pesticides has different characteristics according to whether it occurs during industrial production or use (table 1). The formulation of commercial products (by mixing active ingredients with other coformulants) has some exposure characteristics in common with pesticide use in agriculture. In fact, since formulation is typically performed by small industries which manufacture many different products in successive operations, the workers are exposed to each of several pesticides for a short time. In public health and agriculture, the use of a variety of compounds is generally the rule, although in some specific applications (for example, cotton defoliation or malaria control programmes) a single product may be used.

Table 1. Comparison of exposure characteristics during production and use of pesticides

|

Exposure on production |

Exposure on use |

|

|

Duration of exposure |

Continuous and prolonged |

Variable and intermittent |

|

Degree of exposure |

Fairly constant |

Extremely variable |

|

Type of exposure |

To one or few compounds |

To numerous compounds either in sequence or concomitantly |

|

Skin absorption |

Easy to control |

Variable according to work procedures |

|

Ambient monitoring |

Useful |

Seldom informative |

|

Biological monitoring |

Complementary to ambient monitoring |

Very useful when available |

Source: WHO 1982a, modified.

The measurement of biological indicators of exposure is particularly useful for pesticide users where the conventional techniques of exposure assessment through ambient air monitoring are scarcely applicable. Most pesticides are lipid-soluble substances that penetrate the skin. The occurrence of percutaneous (skin) absorption makes the use of biological indicators very important in assessing the level of exposure in these circumstances.

Organophosphate Insecticides

Biological indicators of effect:

Cholinesterases are the target enzymes accounting for organophosphate (OP) toxicity to insect and mammalian species. There are two principal types of cholinesterases in the human organism: acetylcholinesterase (ACHE) and plasma cholinesterase (PCHE). OP causes toxic effects in humans through the inhibition of synaptic acetylcholinesterase in the nervous system. Acetylcholinesterase is also present in red blood cells, where its function is unknown. Plasma cholinesterase is a generic term covering an inhomogeneous group of enzymes present in glial cells, plasma, liver and some other organs. PCHE is inhibited by OPs, but its inhibition does not produce known functional derangements.

Inhibition of blood ACHE and PCHE activity is highly correlated with intensity and duration of OP exposure. Blood ACHE, being the same molecular target as that responsible for acute OP toxicity in the nervous system, is a more specific indicator than PCHE. However, sensitivity of blood ACHE and PCHE to OP inhibition varies among the individual OP compounds: at the same blood concentration, some inhibit more ACHE and others more PCHE.

A reasonable correlation exists between blood ACHE activity and the clinical signs of acute toxicity (table 2). The correlation tends to be better as the rate of inhibition is faster. When inhibition occurs slowly, as with chronic low-level exposures, the correlation with illness may be low or totally non-existent. It must be noted that blood ACHE inhibition is not predictive for chronic or delayed effects.

Table 2. Severity and prognosis of acute OP toxicity at different levels of ACHE inhibition

|

ACHE inhibition (%) |

Level of poisoning |

Clinical symptoms |

Prognosis |

|

50–60 |

Mild |

Weakness, headache, dizziness, nausea, salivation, lacrimation, miosis, moderate bronchial spasm |

Convalescence in 1-3 days |

|

60–90 |

Moderate |

Abrupt weakness, visual disturbance, excess salivation, sweating, vomiting, diarrhoea, bradycardia, hypertonia, tremors of hands and head, disturbed gait, miosis, pain in the chest, cyanosis of the mucous membranes |

Convalescence in 1-2 weeks |

|

90–100 |

Severe |

Abrupt tremor, generalized convulsions, psychic disturbance, intensive cyanosis, lung oedema, coma |

Death from respiratory or cardiac failure |

Variations of ACHE and PCHE activities have been observed in healthy people and in specific physiopathological conditions (table 3). Thus, the sensitivity of these tests in monitoring OP exposure can be increased by adopting individual pre-exposure values as a reference. Cholinesterase activities after exposure are then compared with the individual baseline values. One should make use of population cholinesterase activity reference values only when pre-exposure cholinesterase levels are not known (table 4).

Table 3. Variations of ACHE and PCHE activities in healthy people and in selected physiopathological conditions

|

Condition |

ACHE activity |

PCHE activity |

|

Healthy people |

||

|

Interindividual variation1 |

10–18 % |

15–25 % |

|

Intraindividual variation1 |

3–7 % |

6% |

|

Sex differences |

No |

10–15 % higher in male |

|

Age |

Reduced up to 6 months old |

|

|

Body mass |

Positive correlation |

|

|

Serum cholesterol |

Positive correlation |

|

|

Seasonal variation |

No |

No |

|

Circadian variation |

No |

No |

|

Menstruation |

Decreased |

|

|

Pregnancy |

Decreased |

|

|

Pathological conditions |

||

|

Reduced activity |

Leukaemia, neoplasm |

Liver disease; uraemia; cancer; heart failure; allergic reactions |

|

Increased activity |

Polycythaemia; thalassaemia; other congenital blood dyscrasias |

Hyperthyroidism; other conditions of high metabolic rate |

1 Source: Augustinsson 1955 and Gage 1967.

Table 4. Cholinesterase activities of healthy people without exposure to OP measured with selected methods

|

Method |

Sex |

ACHE* |

PCHE* |

|

Michel1 (DpH/h) |

male female |

0.77±0.08 0.75±0.08 |

0.95±0.19 0.82±0.19 |

|

Titrimetric1 (mmol/min ml) |

male/female |

13.2±0.31 |

4.90±0.02 |

|

Ellman’s modified2 (UI/ml) |

male female |

4.01±0.65 3.45±0.61 |

3.03±0.66 3.03±0.68 |

* mean result, ± standard deviation.

Source: 1 Laws 1991. 2 Alcini et al. 1988.

Blood should preferably be sampled within two hours after exposure. Venipuncture is preferred to extracting capillary blood from a finger or earlobe because the sampling point can be contaminated with pesticide residing on the skin in exposed subjects. Three sequential samples are recommended to establish a normal baseline for each worker before exposure (WHO 1982b).

Several analytical methods are available for the determination of blood ACHE and PCHE. According to WHO, the Ellman spectrophotometric method (Ellman et al. 1961) should serve as a reference method.

Biological indicators of exposure.

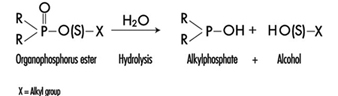

The determination in urine of metabolites that are derived from the alkyl phosphate moiety of the OP molecule or of the residues generated by the hydrolysis of the P–X bond (figure 1) has been used to monitor OP exposure.

Figure 1. Hydrolysis of OP insecticides

Alkyl phosphate metabolites.

The alkyl phosphate metabolites detectable in urine and the main parent compound from which they can originate are listed in table 5. Urinary alkyl phosphates are sensitive indicators of exposure to OP compounds: the excretion of these metabolites in urine is usually detectable at an exposure level at which plasma or erythrocyte cholinesterase inhibition cannot be detected. The urinary excretion of alkyl phosphates has been measured for different conditions of exposure and for various OP compounds (table 6). The existence of a relationship between external doses of OP and alkyl phosphate urinary concentrations has been established in a few studies. In some studies a significant relationship between cholinesterase activity and levels of alkyl phosphates in urine has also been demonstrated.

Table 5. Alkyl phosphates detectable in urine as metabolites of OP pesticides

|

Metabolite |

Abbreviation |

Principal parent compounds |

|

Monomethylphosphate |

MMP |

Malathion, parathion |

|

Dimethylphosphate |

DMP |

Dichlorvos, trichlorfon, mevinphos, malaoxon, dimethoate, fenchlorphos |

|

Diethylphosphate |

DEP |

Paraoxon, demeton-oxon, diazinon-oxon, dichlorfenthion |

|

Dimethylthiophosphate |

DMTP |

Fenitrothion, fenchlorphos, malathion, dimethoate |

|

Diethylthiophosphate |

DETP |

Diazinon, demethon, parathion,fenchlorphos |

|

Dimethyldithiophosphate |

DMDTP |

Malathion, dimethoate, azinphos-methyl |

|

Diethyldithiophosphate |

DEDTP |

Disulfoton, phorate |

|

Phenylphosphoric acid |

Leptophos, EPN |

Table 6. Examples of levels of urinary alkyl phosphates measured in various conditions of exposure to OP

|

Compound |

Condition of exposure |

Route of exposure |

Metabolite concentrations1 (mg/l) |

|

Parathion2 |

Nonfatal poisoning |

Oral |

DEP = 0.5 DETP = 3.9 |

|

Disulfoton2 |

Formulators |

Dermal/inhalation |

DEP = 0.01-4.40 DETP = 0.01-1.57 DEDTP = <0.01-.05 |

|

Phorate2 |

Formulators |

Dermal/inhalation |

DEP = 0.02-5.14 DETP = 0.08-4.08 DEDTP = <0.01-0.43 |

|

Malathion3 |

Sprayers |

Dermal |

DMDTP = <0.01 |

|

Fenitrothion3 |

Sprayers |

Dermal |

DMP = 0.01-0.42 DMTP = 0.02-0.49 |

|

Monocrotophos4 |

Sprayers |

Dermal/inhalation |

DMP = <0.04-6.3/24 h |

1 For abbreviations see table 27.12 [BMO12TE].

2 Dillon and Ho 1987.

3 Richter 1993.

4 van Sittert and Dumas 1990.

Alkyl phosphates are usually excreted in urine within a short time. Samples collected soon after the end of the workday are suitable for metabolite determination.

The measurement of alkyl phosphates in urine requires a rather sophisticated analytical method, based on derivatization of the compounds and detection by gas-liquid chromatography (Shafik et al. 1973a; Reid and Watts 1981).

Hydrolytic residues.

p-Nitrophenol (PNP) is the phenolic metabolite of parathion, methylparathion and ethyl parathion, EPN. The measurement of PNP in urine (Cranmer 1970) has been widely used and has proven to be successful in evaluating exposure to parathion. Urinary PNP correlates well with the absorbed dose of parathion. With PNP urinary levels up to 2 mg/l, the absorption of parathion does not cause symptoms, and little or no reduction of cholinesterase activities is observed. PNP excretion occurs rapidly and urinary levels of PNP become insignificant 48 hours after exposure. Thus, urine samples should be collected soon after exposure.

Carbamates

Biological indicators of effect.

Carbamate pesticides include insecticides, fungicides and herbicides. Insecticidal carbamate toxicity is due to the inhibition of synaptic ACHE, while other mechanisms of toxicity are involved for herbicidal and fungicidal carbamates. Thus, only exposure to carbamate insecticides can be monitored through the assay of cholinesterase activity in red blood cells (ACHE) or plasma (PCHE). ACHE is usually more sensitive to carbamate inhibitors than PCHE. Cholinergic symptoms have been usually observed in carbamate-exposed workers with a blood ACHE activity lower than 70% of the individual baseline level (WHO 1982a).

Inhibition of cholinesterases by carbamates is rapidly reversible. Therefore, false negative results can be obtained if too much time elapses between exposure and biological sampling or between sampling and analysis. In order to avoid such problems, it is recommended that blood samples be collected and analysed within four hours after exposure. Preference should be given to the analytical methods that allow the determination of cholinesterase activity immediately after blood sampling, as discussed for organophosphates.

Biological indicators of exposure.

The measurement of urinary excretion of carbamate metabolites as a method to monitor human exposure has so far been applied only to few compounds and in limited studies. Table 7 summarizes the relevant data. Since carbamates are promptly excreted in the urine, samples collected soon after the end of exposure are suitable for metabolite determination. Analytical methods for the measurements of carbamate metabolites in urine have been reported by Dawson et al. (1964); DeBernardinis and Wargin (1982) and Verberk et al. (1990).

Table 7. Levels of urinary carbamate metabolites measured in field studies

|

Compound |

Biological index |

Condition of exposure |

Environmental concentrations |

Results |

References |

|

Carbaryl |

a-naphthol a-naphthol a-naphthol |

formulators mixer/applicators unexposed population |

0.23–0.31 mg/m3 |

x=18.5 mg/l1 , max. excretion rate = 80 mg/day x=8.9 mg/l, range = 0.2–65 mg/l range = 1.5–4 mg/l |

WHO 1982a |

|

Pirimicarb |

metabolites I2 and V3 |

applicators |

range = 1–100 mg/l |

Verberk et al. 1990 |

1 Systemic poisonings have been occasionally reported.

2 2-dimethylamino-4-hydroxy-5,6-dimethylpyrimidine.

3 2-methylamino-4-hydroxy-5,6-dimethylpyrimidine.

x = standard deviation.

Dithiocarbamates

Biological indicators of exposure.

Dithiocarbamates (DTC) are widely used fungicides, chemically grouped in three classes: thiurams, dimethyldithiocarbamates and ethylene-bis-dithiocarbamates.

Carbon disulphide (CS2) and its main metabolite 2-thiothiazolidine-4-carboxylic acid (TTCA) are metabolites common to almost all DTC. A significant increase in urinary concentrations of these compounds has been observed for different conditions of exposure and for various DTC pesticides. Ethylene thiourea (ETU) is an important urinary metabolite of ethylene-bis-dithiocarbamates. It may also be present as an impurity in market formulations. Since ETU has been determined to be a teratogen and a carcinogen in rats and in other species and has been associated with thyroid toxicity, it has been widely applied to monitor ethylene-bis-dithiocarbamate exposure. ETU is not compound-specific, as it may be derived from maneb, mancozeb or zineb.

Measurement of the metals present in the DTC has been proposed as an alternative approach in monitoring DTC exposure. Increased urinary excretion of manganese has been observed in workers exposed to mancozeb (table 8).

Table 8. Levels of urinary dithiocarbamate metabolites measured in field studies

|

Compound |

Biological index |

Condition of exposure |

Environmental concentrations* ± standard deviation |

Results ± standard deviation |

References |

|

Ziram |

Carbon disulphide (CS2) TTCA1 |

formulators formulators |

1.03 ± 0.62 mg/m3 |

3.80 ± 3.70 mg/l 0.45 ± 0.37 mg/l |

Maroni et al. 1992 |

|

Maneb/Mancozeb |

ETU2 |

applicators |

range = < 0.2–11.8 mg/l |

Kurttio et al. 1990 |

|

|

Mancozeb |

Manganese |

applicators |

57.2 mg/m3 |

pre-exposure: 0.32 ± 0.23 mg/g creatinine; post-exposure: 0.53 ± 0.34 mg/g creatinine |

Canossa et al. 1993 |

* Mean result according to Maroni et al. 1992.

1 TTCA = 2-thiothiazolidine-4-carbonylic acid.

2 ETU = ethylene thiourea.

CS2, TTCA, and manganese are commonly found in urine of non-exposed subjects. Thus, the measurement of urinary levels of these compounds prior to exposure is recommended. Urine samples should be collected in the morning following the cessation of exposure. Analytical methods for the measurements of CS2, TTCA and ETU have been reported by Maroni et al. (1992).

Synthetic Pyrethroids

Biological indicators of exposure.

Synthetic pyrethroids are insecticides similar to natural pyrethrins. Urinary metabolites suitable for application in biological monitoring of exposure have been identified through studies with human volunteers. The acidic metabolite 3-(2,2’-dichloro-vinyl)-2,2’-dimethyl-cyclopropane carboxylic acid (Cl2CA) is excreted both by subjects orally dosed with permethrin and cypermethrin and the bromo-analogue (Br2CA) by subjects treated with deltamethrin. In the volunteers treated with cypermethrin, a phenoxy metabolite, 4-hydroxy-phenoxy benzoic acid (4-HPBA), has also been identified. These tests, however, have not often been applied in monitoring occupational exposures because of the complex analytical techniques required (Eadsforth, Bragt and van Sittert 1988; Kolmodin-Hedman, Swensson and Akerblom 1982). In applicators exposed to cypermethrin, urinary levels of Cl2CA have been found to range from 0.05 to 0.18 mg/l, while in formulators exposed to a-cypermethrin, urinary levels of 4-HPBA have been found to be lower than 0.02 mg/l.

A 24-hour urine collection period started after the end of exposure is recommended for metabolite determinations.

Organochlorines

Biological indicators of exposure.

Organochlorine (OC) insecticides were widely used in the 1950s and 1960s. Subsequently, the use of many of these compounds was discontinued in many countries because of their persistence and consequent contamination of the environment.

Biological monitoring of OC exposure can be carried out through the determination of intact pesticides or their metabolites in blood or serum (Dale, Curley and Cueto 1966; Barquet, Morgade and Pfaffenberger 1981). After absorption, aldrin is rapidly metabolized to dieldrin and can be measured as dieldrin in blood. Endrin has a very short half-life in blood. Therefore, endrin blood concentration is of use only in determining recent exposure levels. The determination of the urinary metabolite anti-12-hydroxy-endrin has also proven to be useful in monitoring endrin exposure (van Sittert and Tordoir 1987) .

Significant correlations between the concentration of biological indicators and the onset of toxic effects have been demonstrated for some OC compounds. Instances of toxicity due to aldrin and dieldrin exposure have been related to levels of dieldrin in blood above 200 μg/l. A blood lindane concentration of 20 μg/l has been indicated as the upper critical level as far as neurological signs and symptoms are concerned. No acute adverse effects have been reported in workers with blood endrin concentrations below 50 μg/l. Absence of early adverse effects (induction of liver microsomal enzymes) has been shown on repeated exposures to endrin at urinary anti-12-hydroxy-endrin concentrations below 130 μg/g creatinine and on repeated exposures to DDT at DDT or DDE serum concentrations below 250 μg/l.

OC may be found in low concentrations in the blood or urine of the general population. Examples of observed values are as follows: lindane blood concentrations up to 1 μg/l, dieldrin up to 10 μg/l, DDT or DDE up to 100 μg/l, and anti-12-hydroxy-endrin up to 1 μg/g creatinine. Thus, a baseline assessment prior to exposure is recommended.

For exposed subjects, blood samples should be taken immediately after the end of a single exposure. For conditions of long-term exposure, the time of collection of the blood sample is not critical. Urine spot samples for urinary metabolite determination should be collected at the end of exposure.

Triazines

Biological indicators of exposure.

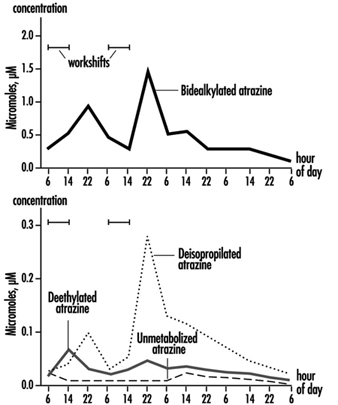

The measurement of urinary excretion of triazinic metabolites and the unmodified parent compound has been applied to subjects exposed to atrazine in limited studies. Figure 2 shows the urinary excretion profiles of atrazine metabolites of a manufacturing worker with dermal exposure to atrazine ranging from 174 to 275 μmol/workshift (Catenacci et al. 1993). Since other chlorotriazines (simazine, propazine, terbuthylazine) follow the same biotransformation pathway of atrazine, levels of dealkylated triazinic metabolites may be determined to monitor exposure to all chlorotriazine herbicides.

Figure 2. Urinary excretion profiles of atrazine metabolites

The determination of unmodified compounds in urine may be useful as a qualitative confirmation of the nature of the compound that has generated the exposure. A 24–hour urine collection period started at the beginning of exposure is recommended for metabolite determination.

Recently, by using an enzyme-linked immunosorbent assay (ELISA test), a mercapturic acid conjugate of atrazine has been identified as its major urinary metabolite in exposed workers. This compound has been found in concentrations at least 10 times higher than those of any dealkylated products. A relationship between cumulative dermal and inhalation exposure and total amount of the mercapturic acid conjugate excreted over a 10-day period has been observed (Lucas et al. 1993).

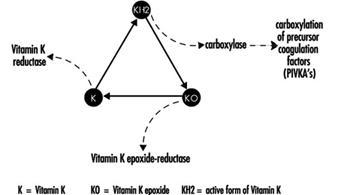

Coumarin Derivatives

Biological indicators of effect.

Coumarin rodenticides inhibit the activity of the enzymes of the vitamin K cycle in the liver of mammals, humans included (figure 3), thus causing a dose-related reduction of the synthesis of vitamin K-dependent clotting factors, namely factor II (prothrombin), VII, IX, and X. Anticoagulant effects appear when plasma levels of clotting factors have dropped below approximately 20% of normal.

Figure 3. Vitamin K cycle

These vitamin K antagonists have been grouped into so-called “first generation” (e.g., warfarin) and “second generation” compounds (e.g., brodifacoum, difenacoum), the latter characterized by a very long biological half-life (100 to 200 days).

The determination of prothrombin time is widely used in monitoring exposure to coumarins. However, this test is sensitive only to a clotting factor decrease of approximately 20% of normal plasma levels. The test is not suitable for detection of early effects of exposure. For this purpose, the determination of the prothrombin concentration in plasma is recommended.

In the future, these tests might be replaced by the determination of coagulation factor precursors (PIVKA), which are substances detectable in blood only in the case of blockage of the vitamin K cycle by coumarins.

With conditions of prolonged exposure, the time of blood collection is not critical. In cases of acute overexposure, biological monitoring should be carried out for at least five days after the event, in view of the latency of the anticoagulant effect. To increase the sensitivity of these tests, the measurement of baseline values prior to exposure is recommended.

Biological indicators of exposure.

The measurement of unmodified coumarins in blood has been proposed as a test to monitor human exposure. However, experience in applying these indices is very limited mainly because the analytical techniques are much more complex (and less standardized) in comparison with those required to monitor the effects on the coagulation system (Chalermchaikit, Felice and Murphy 1993).

Phenoxy Herbicides

Biological indicators of exposure.

Phenoxy herbicides are scarcely biotransformed in mammals. In humans, more than 95% of a 2,4-dichlorophenoxyacetic acid (2,4-D) dose is excreted unchanged in urine within five days, and 2,4,5-trichlorophenoxyacetic acid (2,4,5-T) and 4-chloro-2-methylphenoxyacetic acid (MCPA) are also excreted mostly unchanged via urine within a few days after oral absorption. The measurement of unchanged compounds in urine has been applied in monitoring occupational exposure to these herbicides. In field studies, urinary levels of exposed workers have been found to range from 0.10 to 8 μg/l for 2,4-D, from 0.05 to 4.5 μg/l for 2,4,5-T and from below 0.1 μg/l to 15 μg/l for MCPA. A 24-hour period of urine collection starting at the end of exposure is recommended for the determination of unchanged compounds. Analytical methods for the measurements of phenoxy herbicides in urine have been reported by Draper (1982).

Quaternary Ammonium Compounds

Biological indicators of exposure.

Diquat and paraquat are herbicides scarcely biotransformed by the human organism. Because of their high water solubility, they are readily excreted unchanged in urine. Urine concentrations below the analytical detection limit (0.01 μg/l) have been often observed in paraquat exposed workers; while in tropical countries, concentrations up to 0.73 μg/l have been measured after improper paraquat handling. Urinary diquat concentrations lower than the analytical detection limit (0.047 μg/l) have been reported for subjects with dermal exposures from 0.17 to 1.82 μg/h and inhalation exposures lower than 0.01 μg/h. Ideally, 24 hours sampling of urine collected at the end of exposure should be used for analysis. When this is impractical, a spot sample at the end of the workday can be used.

Determination of paraquat levels in serum is useful for prognostic purposes in case of acute poisoning: patients with serum paraquat levels up to 0.1 μg/l twenty-four hours after ingestion are likely to survive.

The analytical methods for paraquat and diquat determination have been reviewed by Summers (1980).

Miscellaneous Pesticides

4,6-Dinitro-o-cresol (DNOC).

DNOC is an herbicide introduced in 1925, but the use of this compound has been progressively decreased due to its high toxicity to plants and to humans. Since blood DNOC concentrations correlate to a certain extent with the severity of adverse health effects, the measure of unchanged DNOC in blood has been proposed for monitoring occupational exposures and for the evaluation of the clinical course of poisonings.

Pentachlorophenol.

Pentachlorophenol (PCP) is a wide-spectrum biocide with pesticidal action against weeds, insects, and fungi. Measurements of blood or urinary unchanged PCP have been recommended as suitable indices in monitoring occupational exposures (Colosio et al. 1993), because these parameters are significantly correlated with PCP body burden. In workers with prolonged exposure to PCP the time of blood collection is not critical, while urine spot samples should be collected on the morning after exposure.

A multiresidue method for the measurement of halogenated and nitrophenolic pesticides has been described by Shafik et al.(1973b).

Other tests proposed for the biological monitoring of pesticide exposure are listed in table 9.

Table 9. Other indices proposed in the literature for the biological monitoring of pesticide exposure

|

Compound |

Biological index |

|

|

Urine |

Blood |

|

|

Bromophos |

Bromophos |

Bromophos |

|

Captan |

Tetrahydrophtalimide |

|

|

Carbofuran |

3-Hydroxycarbofuran |

|

|

Chlordimeform |

4-Chloro-o-toluidine derivatives |

|

|

Chlorobenzilate |

p,p-1-Dichlorobenzophenone |

|

|

Dichloropropene |

Mercapturic acid metabolites |

|

|

Fenitrothion |

p-Nitrocresol |

|

|

Ferbam |

Thiram |

|

|

Fluazifop-Butyl |

Fluazifop |

|

|

Flufenoxuron |

Flufenoxuron |

|

|

Glyphosate |

Glyphosate |

|

|

Malathion |

Malathion |

Malathion |

|

Organotin compounds |

Tin |

Tin |

|

Trifenomorph |

Morpholine, triphenylcarbinol |

|

|

Ziram |

Thiram |

|

Conclusions

Biological indicators for monitoring pesticide exposure have been applied in a number of experimental and field studies.

Some tests, such as those for cholinesterase in blood or for selected unmodified pesticides in urine or blood, have been validated by extensive experience. Biological exposure limits have been proposed for these tests (table 10). Other tests, in particular those for blood or urinary metabolites, suffer from greater limitations because of analytical difficulties or because of limitations in interpretation of results.

Table 10. Recommended biological limit values (as of 1996)

|

Compound |

Biological index |

BEI1 |

BAT2 |

HBBL3 |

BLV4 |

|

ACHE inhibitors |

ACHE in blood |

70% |

70% |

70%, |

|

|

DNOC |

DNOC in blood |

20 mg/l, |

|||

|

Lindane |

Lindane in blood |

0.02mg/l |

0.02mg/l |

||

|

Parathion |

PNP in urine |

0.5mg/l |

0.5mg/l |

||

|

Pentachlorophenol (PCP) |

PCP in urine PCP in plasma |

2 mg/l 5 mg/l |

0.3mg/l 1 mg/l |

||

|

Dieldrin/Aldrin |

Dieldrin in blood |

100 mg/l |

|||

|

Endrin |

Anti-12-hydroxy-endrin in urine |

130 mg/l |

|||

|

DDT |

DDT and DDEin serum |

250 mg/l |

|||

|

Coumarins |

Prothrombin time in plasma Prothrombin concentration in plasma |

10% above baseline 60% of baseline |

|||

|

MCPA |

MCPA in urine |

0.5 mg/l |

|||

|

2,4-D |

2,4-D in urine |

0.5 mg/l |

1 Biological exposure indices (BEIs) are recommended by the American Conference of Governmental Industrial Hygienists (ACGIH 1995).

2 Biological tolerance values (BATs) are recommended by the German Commission for the Investigation of Health Hazards of Chemical Compounds in the Work Area (DFG 1992).

3 Health-based biological limits (HBBLs) are recommended by a WHO Study Group (WHO 1982a).

4 Biological limit values (BLVs) are proposed by a Study Group of the Scientific Committee on Pesticides of the International Commission on Occupational Health (Tordoir et al. 1994). Assessment of working conditions is called for if this value is exceeded.

This field is in rapid development and, given the enormous importance of using biological indicators to assess exposure to these substances, new tests will be continuously developed and validated.

Epidemiological Method Applied to Occupational Health and Safety

Epidemiology

Epidemiology is recognized both as the science basic to preventive medicine and one that informs the public health policy process. Several operational definitions of epidemiology have been suggested. The simplest is that epidemiology is the study of the occurrence of disease or other health-related characteristics in human and in animal populations. Epidemiologists study not only the frequency of disease, but whether the frequency differs across groups of people; i.e., they study the cause-effect relationship between exposure and illness. Diseases do not occur at random; they have causes—quite often man-made causes—which are avoidable. Thus, many diseases could be prevented if the causes were known. The methods of epidemiology have been crucial to identifying many causative factors which, in turn, have led to health policies designed to prevent disease, injury and premature death.

What is the task of epidemiology and what are its strengths and weaknesses when definitions and concepts of epidemiology are applied to occupational health? This chapter addresses these questions and the ways in which occupational health hazards can be investigated using epidemiological techniques. This article introduces the ideas found in successive articles in this chapter.

Occupational Epidemiology

Occupational epidemiology has been defined as the study of the effects of workplace exposures on the frequency and distribution of diseases and injuries in the population. Thus it is an exposure-oriented discipline with links to both epidemiology and occupational health (Checkoway et al. 1989). As such, it uses methods similar to those employed by epidemiology in general.

The main objective of occupational epidemiology is prevention through identifying the consequences of workplace exposures on health. This underscores the preventive focus of occupational epidemiology. Indeed, all research in the field of occupational health and safety should serve preventive purposes. Hence, epidemiological knowledge can and should be readily implementable. While the public health interest always should be the primary concern of epidemiological research, vested interests can exercise influence, and care must be taken to minimize such influence in the formulation, conduct and/or interpretation of studies (Soskolne 1985; Soskolne 1989).

A second objective of occupational epidemiology is to use results from specific settings to reduce or to eliminate hazards in the population at large. Thus, apart from providing information on the health effects of exposures in the workplace, the results from occupational epidemiology studies also play a role in the estimation of risk associated with the same exposures but at the lower levels generally experienced by the general population. Environmental contamination from industrial processes and products usually would result in lower levels of exposure than those experienced in the workplace.

The levels of application of occupational epidemiology are:

- surveillance to describe the occurrence of illness in different categories of workers and so provide early warning signals of unrecognized occupational hazards

- generation and testing of an hypothesis that a given exposure may be harmful, and the quantification of an effect

- evaluation of an intervention (for example, a preventive action such as reduction in exposure levels) by measuring changes in the health status of a population over time.

The causal role that occupational exposures can play in the development of disease, injury and premature death had been identified long ago and is part of the history of epidemiology. Reference has to be made to Bernardino Ramazzini, founder of occupational medicine and one of the first to revive and add to the Hippocratic tradition of the dependence of health on identifiable natural external factors. In the year 1700, he wrote in his “De Morbis Artificum Diatriba” (Ramazzini 1705; Saracci 1995):

The physician has to ask many questions of the patients. Hippocrates states in De Affectionibus: “When you face a sick person you should ask him from what he is suffering, for what reason, for how many days, what he eats, and what are his bowel movements. To all these questions one should be added: ‘What work does he do?’.”

This reawakening of clinical observation and of the attention to the circumstances surrounding the occurrence of disease, brought Ramazzini to identify and describe many of the occupational diseases that were later studied by occupational physicians and epidemiologists.

Using this approach, Pott was first to report in 1775 (Pott 1775) the possible connection between cancer and occupation (Clayson 1962). His observations on cancer of the scrotum among chimney-sweeps began with a description of the disease and continued:

The fate of these people seems singularly hard: in their early infancy, they are most frequently treated with great brutality, and almost starved with cold and hunger; they are thrust up narrow, and sometimes hot chimneys, where they are bruised, burned and almost suffocated; and when they get to puberty, become peculiarly liable to a most noisome, painful, and fatal disease.

Of this last circumstance there is not the least doubt, though perhaps it may not have been sufficiently attended to, to make it generally known. Other people have cancer of the same parts; and so have others, besides lead-workers, the Poitou colic, and the consequent paralysis; but it is nevertheless a disease to which they are peculiarly liable; and so are chimney-sweeps to cancer of the scrotum and testicles.

The disease, in these people, seems to derive its origin from a lodgement of soot in the rugae of the scrotum, and at first not to be a disease of the habit … but here the subjects are young, in general good health, at least at first; the disease brought on them by their occupation, and in all probability local; which last circumstance may, I think, be fairly presumed from its always seizing the same parts; all this makes it (at first) a very different case from a cancer which appears in an elderly man.

This first account of an occupational cancer still remains a model of lucidity. The nature of the disease, the occupation concerned and the probable causal agent are all clearly defined. An increased incidence of scrotal cancer among chimney-sweeps is noted although no quantitative data are given to substantiate the claim.

Another fifty years passed before Ayrton-Paris noticed in 1822 (Ayrton-Paris 1822) the frequent development of scrotal cancers among the copper and tin smelters of Cornwall, and surmised that arsenic fumes might be the causal agent. Von Volkmann reported in 1874 skin tumours in paraffin workers in Saxony, and shortly afterwards, Bell suggested in 1876 that shale oil was responsible for cutaneous cancer (Von Volkmann 1874; Bell 1876). Reports of the occupational origin of cancer then became relatively more frequent (Clayson 1962).

Among the early observations of occupational diseases was the increased occurrence of lung cancer among Schneeberg miners (Harting and Hesse 1879). It is noteworthy (and tragic) that a recent case study shows that the epidemic of lung cancer in Schneeberg is still a huge public health problem, more than a century after the first observation in 1879. An approach to identify an “increase” in disease and even to quantify it had been present in the history of occupational medicine. For example, as Axelson (1994) has pointed out, W.A. Guy in 1843 studied “pulmonary consumption” in letter press printers and found a higher risk among compositors than among pressmen; this was done by applying a design similar to the case-control approach (Lilienfeld and Lilienfeld 1979). Nevertheless, it was not until perhaps the early 1950s that modern occupational epidemiology and its methodology began to develop. Major contributions marking this development were the studies on bladder cancer in dye workers (Case and Hosker 1954) and lung cancer among gas workers (Doll 1952).

Issues in Occupational Epidemiology

The articles in this chapter introduce both the philosophy and the tools of epidemiological investigation. They focus on assessing the exposure experience of workers and on the diseases that arise in these populations. Issues in drawing valid conclusions about possible causative links in the pathway from exposures to hazardous substances to the development of diseases are addressed in this chapter.

Ascertainment of an individual’s work life exposure experience constitutes the core of occupational epidemiology. The informativeness of an epidemiological study depends, in the first instance, on the quality and extent of available exposure data. Secondly, the health effects (or, the diseases) of concern to the occupational epidemiologist must be accurately determinable among a well-defined and accessible group of workers. Finally, data about other potential influences on the disease of interest should be available to the epidemiologist so that any occupational exposure effects that are established from the study can be attributed to the occupational exposure per se rather than to other known causes of the disease in question. For example, in a group of workers who may work with a chemical that is suspected of causing lung cancer, some workers may also have a history of tobacco smoking, a further cause of lung cancer. In the latter situation, occupational epidemiologists must determine which exposure (or, which risk factor—the chemical or the tobacco, or, indeed, the two in combination) is responsible for any increase in the risk of lung cancer in the group of workers being studied.

Exposure assessment

If a study has access only to the fact that a worker was employed in a particular industry, then the results from such a study can link health effects only to that industry. Likewise, if knowledge about exposure exists for the occupations of the workers, conclusions can be directly drawn only in so far as occupations are concerned. Indirect inferences on chemical exposures can be made, but their reliability has to be evaluated situation by situation. If a study has access, however, to information about the department and/or job title of each worker, then conclusions will be able to be made to that finer level of workplace experience. Where information about the actual substances with which a person works is known to the epidemiologist (in collaboration with an industrial hygienist), then this would be the finest level of exposure information available in the absence of rarely available dosimetry. Furthermore, the findings from such studies can provide more useful information to industry for creating safer workplaces.

Epidemiology has been a sort of “black box” discipline until now, because it has studied the relationship between exposure and disease (the two extremes of the causal chain), without considering the intermediate mechanistic steps. This approach, despite its apparent lack of refinement, has been extremely useful: in fact, all the known causes of cancer in humans, for instance, have been discovered with the tools of epidemiology.

The epidemiological method is based on available records —questionnaires, job titles or other “proxies” of exposure; this makes the conduct of epidemiological studies and the interpretation of their findings relatively simple.

Limitations of the more crude approach to exposure assessment, however, have become evident in recent years, with epidemiologists facing more complex problems. Limiting our consideration to occupational cancer epidemiology, most well-known risk factors have been discovered because of high levels of exposure in the past; a limited number of exposures for each job; large populations of exposed workers; and a clear-cut correspondence between “proxy” information and chemical exposures (e.g., shoe workers and benzene, shipyards and asbestos, and so on). Nowadays, the situation is substantially different: levels of exposure are considerably lower in Western countries (this qualification should always be stressed); workers are exposed to many different chemicals and mixtures in the same job title (e.g., agricultural workers); homogeneous populations of exposed workers are more difficult to find and are usually small in number; and, the correspondence between “proxy” information and actual exposure grows progressively weaker. In this context, the tools of epidemiology have reduced sensitivity owing to the misclassification of exposure.

In addition, epidemiology has relied on “hard” end points, such as death in most cohort studies. However, workers might prefer to see something different from “body counts” when the potential health effects of occupational exposures are studied. Therefore, the use of more direct indicators of both exposure and early response would have some advantages. Biological markers may provide just a tool.

Biological markers

The use of biological markers, such as lead levels in blood or liver function tests, is not new in occupational epidemiology. However, the utilization of molecular techniques in epidemiological studies has made possible the use of biomarkers for assessing target organ exposures, for determining susceptibility and for establishing early disease.

Potential uses of biomarkers in the context of occupational epidemiology are:

- exposure assessment in cases in which traditional epidemiological tools are insufficient (particularly for low doses and low risks)

- to disentangle the causative role of single chemical agents or substances in multiple exposures or mixtures

- estimation of the total burden of exposure to chemicals having the same mechanistic target

- investigation of pathogenetic mechanisms

- study of individual susceptibility (e.g., metabolic polymorphisms, DNA repair) (Vineis 1992)

- to classify exposure and/or disease more accurately, thereby increasing statistical power.

Great enthusiasm has arisen in the scientific community about these uses, but, as noted above, methodological complexity of the use of these new “molecular tools” should serve to caution against excessive optimism. Biomarkers of chemical exposures (such as DNA adducts) have several shortcomings:

- They usually reflect recent exposures and, therefore, are of limited use in case-control studies, whereas they require repeated samplings over prolonged periods for utilization in cohort investigations.

- While they can be highly specific and thus improve exposure misclassification, findings often remain difficult to interpret.

- When complex chemical exposures are investigated (e.g., air pollution or environmental tobacco smoke) it is possible that the biomarker would reflect one particular component of the mixture, whereas the biological effect could be due to another.

- In many situations, it is not clear whether a biomarker reflects a relevant exposure, a correlate of the relevant exposure, individual susceptibility, or an early disease stage, thus limiting causal inference.

- The determination of most biomarkers requires an expensive test or an invasive procedure or both, thus creating constraints for adequate study size and statistical power.

- A biomarker of exposure is no more than a proxy for the real objective of an epidemiological investigation, which, as a rule, focuses on an avoidable environmental exposure (Trichopoulos 1995; Pearce et al. 1995).

Even more important than the methodological shortcomings is the consideration that molecular techniques might cause us to redirect our focus from identifying risks in the exogenous environment, to identifying high-risk individuals and then making personalized risk assessments by measuring phenotype, adduct load and acquired mutations. This would direct our focus, as noted by McMichael, to a form of clinical evaluation, rather than one of public health epidemiology. Focusing on individuals could distract us from the important public health goal of creating a less hazardous environment (McMichael 1994).

Two further important issues emerge regarding the use of biomarkers:

- The use of biomarkers in occupational epidemiology must be accompanied by a clear policy as far as informed consent is concerned. The worker may have several reasons to refuse cooperation. One very practical reason is that the identification of, say, an alteration in an early response marker such as sister chromatid exchange implies the possibility of discrimination by health and life insurers and by employers who might shun the worker because he or she may be more prone to disease. A second reason concerns genetic screening: since the distributions of genotypes and phenotypes vary according to ethnic group, occupational opportunities for minorities might be hampered by genetic screening. Third, doubts can be raised about the predictability of genetic tests: since the predictive value depends on the prevalence of the condition which the test aims to identify, if the latter is rare, the predictive value will be low and the practical use of the screening test will be questionable. Until now, none of the genetic screening tests have been judged applicable in the field (Ashford et al. 1990).

- Ethical principles must be applied prior to the use of biomarkers. These principles have been evaluated for biomarkers used for identifying individual susceptibility to disease by an interdisciplinary Working Group of the Technical Office of the European Trade Unions, with the support of the Commission of the European Communities (Van Damme et al. 1995); their report has reinforced the view that tests can be conducted only with the objective of preventing disease in a workforce. Among other considerations, use of tests must never.

- serve as a means for “selection of the fittest”

- be used to avoid implementing effective preventive measures, such as the identification and substitution of risk factors or improvements in conditions in the workplace

- create, confirm or reinforce social inequality

- create a gap between the ethical principles followed in the workplace and the ethical principles that must be upheld in a democratic society

- oblige a person seeking employment to disclose personal details other than those strictly necessary for obtaining the job.

Finally, evidence is accumulating that the metabolic activation or inactivation of hazardous substances (and of carcinogens in particular) varies considerably in human populations, and is partly genetically determined. Furthermore, inter-individual variability in the susceptibility to carcinogens may be particularly important at low levels of occupational and environmental exposure (Vineis et al. 1994). Such findings may strongly affect regulatory decisions that focus the risk assessment process on the most susceptible (Vineis and Martone 1995).

Study design and validity

Hernberg’s article on epidemiological study designs and their applications in occupational medicine concentrates on the concept of “study base”, defined as the morbidity experience (in relation to some exposure) of a population while it is followed over time. Thus, the study base is not only a population (i.e., a group of people), but the experience of disease occurrence of this population during a certain period of time (Miettinen 1985, Hernberg 1992). If this unifying concept of a study base is adopted, then it is important to recognize that the different study designs (e.g., case-control and cohort designs) are simply different ways of “harvesting” information on both exposure and disease from the same study base; they are not diametrically different approaches.

The article on validity in study design by Sasco addresses definitions and the importance of confounding. Study investigators must always consider the possibility of confounding in occupational studies, and it can never be sufficiently stressed that the identification of potentially confounding variables is an integral part of any study design and analysis. Two aspects of confounding must be addressed in occupational epidemiology:

- Negative confounding should be explored: for example, some industrial populations have low exposure to lifestyle-associated risk factors because of a smoke-free workplace; glass blowers tend to smoke less than the general population.

- When confounding is considered, an estimate of its direction and its potential impact ought to be assessed. This is particularly true when data to control confounding are scanty. For example, smoking is an important confounder in occupational epidemiology and it always should be considered. Nevertheless, when data on smoking are not available (as is often the case in cohort studies), it is unlikely that smoking can explain a large excess of risk found in an occupational group. This is nicely described in a paper by Axelson (1978) and further discussed by Greenland (1987). When detailed data on both occupation and smoking have been available in the literature, confounding did not seem to heavily distort the estimates concerning the association between lung cancer and occupation (Vineis and Simonato 1991). Furthermore, suspected confounding does not always introduce non-valid associations. Since investigators also are at risk of being led astray by other undetected observation and selection biases, these should receive as much emphasis as the issue of confounding in designing a study (Stellman 1987).

Time and time-related variables such as age at risk, calendar period, time since hire, time since first exposure, duration of exposure and their treatment at the analysis stage, are among the most complex methodological issues in occupational epidemiology. They are not covered in this chapter, but two relevant and recent methodological references are noted (Pearce 1992; Robins et al. 1992).

Statistics

The article on statistics by Biggeri and Braga, as well as the title of this chapter, indicate that statistical methods cannot be separated from epidemiological research. This is because: (a) a sound understanding of statistics may provide valuable insights into the proper design of an investigation and (b) statistics and epidemiology share a common heritage, and the entire quantitative basis of epidemiology is grounded in the notion of probability (Clayton 1992; Clayton and Hills 1993). In many of the articles that follow, empirical evidence and proof of hypothesized causal relationships are evaluated using probabilistic arguments and appropriate study designs. For example, emphasis is placed on estimating the risk measure of interest, like rates or relative risks, and on the construction of confidence intervals around these estimates instead of the execution of statistical tests of probability (Poole 1987; Gardner and Altman 1989; Greenland 1990). A brief introduction to statistical reasoning using the binomial distribution is provided. Statistics should be a companion to scientific reasoning. But it is worthless in the absence of properly designed and conducted research. Statisticians and epidemiologists are aware that the choice of methods determines what and the extent to which we make observations. The thoughtful choice of design options is therefore of fundamental importance in order to ensure valid observations.

Ethics

The last article, by Vineis, addresses ethical issues in epidemiological research. Points to be mentioned in this introduction refer to epidemiology as a discipline that implies preventive action by definition. Specific ethical aspects with regard to the protection of workers and of the population at large require recognition that:

- Epidemiological studies in occupational settings should in no way delay preventive measures in the workplace.

- Occupational epidemiology does not refer to lifestyle factors, but to situations where usually little or no personal role is played in the choice of exposure. This implies a particular commitment to effective prevention and to the immediate transmission of information to workers and the public.

- Research uncovers health hazards and provides the knowledge for preventive action. The ethical problems of not carrying out research, when it is feasible, should be considered.

- Notification to workers of the results of epidemiological studies is both an ethical and methodological issue in risk communication. Research in evaluating the potential impact and effectiveness of notification should be given high priority (Schulte et al. 1993).

Training in occupational epidemiology

People with a diverse range of backgrounds can find their way into the specialization of occupational epidemiology. Medicine, nursing and statistics are some of the more likely backgrounds seen among those specializing in this area. In North America, about half of all trained epidemiologists have science backgrounds, while the other half will have proceeded along the doctor of medicine path. In countries outside North America, most specialists in occupational epidemiology will have advanced through the doctor of medicine ranks. In North America, those with medical training tend to be considered “content experts”, while those who are trained through the science route are deemed “methodological experts”. It is often advantageous for a content expert to team up with a methodological expert in order to design and conduct the best possible study.

Not only is knowledge of epidemiological methods, statistics and computers needed for the occupational epidemiology speciality, but so is knowledge of toxicology, industrial hygiene and disease registries (Merletti and Comba 1992). Because large studies can require linkage to disease registries, knowledge of sources of population data is useful. Knowledge of labour and corporate organization also is important. Theses at the masters level and dissertations at the doctoral level of training equip students with the knowledge needed for conducting large record-based and interview-based studies among workers.

Proportion of disease attributable to occupation

The proportion of disease which is attributable to occupational exposures either in a group of exposed workers or in the general population is covered at least with respect to cancer in another part of this Encyclopaedia. Here we should remember that if an estimate is computed, it should be for a specific disease (and a specific site in the case of cancer), a specific time period and a specific geographic area. Furthermore, it should be based on accurate measures of the proportion of exposed people and the degree of exposure. This implies that the proportion of disease attributable to occupation may vary from very low or zero in certain populations to very high in others located in industrial areas where, for example, as much as 40% of lung cancer can be attributable to occupational exposures (Vineis and Simonato 1991). Estimates which are not based on a detailed review of well-designed epidemiological studies can, at the very best, be considered as informed guesses, and are of limited value.

Transfer of hazardous industries

Most epidemiological research is carried out in the developed world, where regulation and control of known occupational hazards has reduced the risk of disease over the past several decades. At the same time, however, there has been a large transfer of hazardous industries to the developing world (Jeyaratnam 1994). Chemicals previously banned in the United States or Europe now are produced in developing countries. For example, asbestos milling has been transferred from the US to Mexico, and benzidine production from European countries to the former Yugoslavia and Korea (Simonato 1986; LaDou 1991; Pearce et al. 1994).

An indirect sign of the level of occupational risk and of the working conditions in the developing world is the epidemic of acute poisoning taking place in some of these countries. According to one assessment, there are about 20,000 deaths each year in the world from acute pesticide intoxication, but this is likely to be a substantial underestimate (Kogevinas et al. 1994). It has been estimated that 99% of all deaths from acute pesticide poisoning occur in developing countries, where only 20% of the world’s agrochemicals are used (Kogevinas et al. 1994). This is to say that even if the epidemiological research seems to point to a reduction of occupational hazards, this might simply be due to the fact that most of this research is being conducted in the developed world. The occupational hazards may simply have been transferred to the developing world and the total world occupational exposure burden might have increased (Vineis et al. 1995).

Veterinary epidemiology

For obvious reasons, veterinary epidemiology is not directly pertinent to occupational health and occupational epidemiology. Nevertheless, clues to environmental and occupational causes of diseases may come from epidemiological studies on animals for several reasons:

- The life span of animals is relatively short compared with that of humans, and the latency period for diseases (e.g., most cancers) is shorter in animals than in humans. This implies that a disease that occurs in a wild or pet animal can serve as a sentinel event to alert us to the presence of a potential environmental toxicant or carcinogen for humans before it would have been identified by other means (Glickman 1993).

- Markers of exposures, such as haemoglobin adducts or levels of absorption and excretion of toxins, may be measured in wild and pet animals to assess environmental contamination from industrial sources (Blondin and Viau 1992; Reynolds et al. 1994; Hungerford et al. 1995).

- Animals are not exposed to some factors which may act as confounders in human studies, and investigations in animal populations therefore can be conducted without regard to these potential confounders. For example, a study of lung cancer in pet dogs might detect significant associations between the disease and exposure to asbestos (e.g., via owners’ asbestos-related occupations and proximity to industrial sources of asbestos). Clearly, such a study would remove the effect of active smoking as a confounder.

Veterinarians talk about an epidemiological revolution in veterinary medicine (Schwabe 1993) and textbooks about the discipline have appeared (Thrusfield 1986; Martin et al. 1987). Certainly, clues to environmental and occupational hazards have come from the joint efforts of human and animal epidemiologists. Among others, the effect of phenoxyherbicides in sheep and dogs (Newell et al. 1984; Hayes et al. 1990), of magnetic fields (Reif et al. 1995) and pesticides (notably flea preparations) contaminated with asbestos-like compounds in dogs (Glickman et al. 1983) are notable contributions.

Participatory research, communicating results and prevention

It is important to recognize that many epidemiological studies in the field of occupational health are initiated through the experience and concern of workers themselves (Olsen et al. 1991). Often, the workers—those historically and/or presently exposed—believed that something was wrong long before this was confirmed by research. Occupational epidemiology can be thought of as a way of “making sense” of the workers’ experience, of collecting and grouping the data in a systematic way, and allowing inferences to be made about the occupational causes of their ill health. Furthermore, the workers themselves, their representatives and the people in charge of workers’ health are the most appropriate persons to interpret the data which are collected. They therefore should always be active participants in any investigation conducted in the workplace. Only their direct involvement will guarantee that the workplace will remain safe after the researchers have left. The aim of any study is the use of the results in the prevention of disease and disability, and the success of this depends to a large extent on ensuring that the exposed participate in obtaining and interpreting the results of the study. The role and use of research findings in the litigation process as workers seek compensation for damages caused through workplace exposure is beyond the scope of this chapter. For some insight on this, the reader is referred elsewhere (Soskolne, Lilienfeld and Black 1994).

Participatory approaches to ensuring the conduct of occupational epidemiological research have in some places become standard practice in the form of steering committees established to oversee the research initiative from its inception to its completion. These committees are multipartite in their structure, including labour, science, management and/or government. With representatives of all stakeholder groups in the research process, the communication of results will be made more effective by virtue of their enhanced credibility because “one of their own” would have been overseeing the research and would be communicating the findings to his or her respective constituency. In this way, the greatest level of effective prevention is likely.

These and other participatory approaches in occupational health research are undertaken with the involvement of those who experience or are otherwise affected by the exposure-related problem of concern. This should be seen more commonly in all epidemiological research (Laurell et al. 1992). It is relevant to remember that while in epidemiological work the objective of analysis is estimation of the magnitude and distribution of risk, in participatory research, the preventability of the risk is also an objective (Loewenson and Biocca 1995). This complementarity of epidemiology and effective prevention is part of the message of this Encyclopaedia and of this chapter.

Maintaining public health relevance

Although new developments in epidemiological methodology, in data analysis and in exposure assessment and measurement (such as new molecular biological techniques) are welcome and important, they can also contribute to a reductionist approach focusing on individuals, rather than on populations. It has been said that: