27. Biological Monitoring

Chapter Editor: Robert Lauwerys

Table of Contents

Tables and Figures

General Principles

Vito Foà and Lorenzo Alessio

Quality Assurance

D. Gompertz

Metals and Organometallic Compounds

P. Hoet and Robert Lauwerys

Organic Solvents

Masayuki Ikeda

Genotoxic Chemicals

Marja Sorsa

Pesticides

Marco Maroni and Adalberto Ferioli

28. Epidemiology and Statistics (12)

28. Epidemiology and Statistics

Chapter Editors: Franco Merletti, Colin L. Soskolne and Paolo Vineis

Table of Contents

Tables and Figures

Epidemiological Method Applied to Occupational Health and Safety

Franco Merletti, Colin L. Soskolne and Paolo Vineis

Exposure Assessment

M. Gerald Ott

Summary Worklife Exposure Measures

Colin L. Soskolne

Measuring Effects of Exposures

Shelia Hoar Zahm

Case Study: Measures

Franco Merletti, Colin L. Soskolne and Paola Vineis

Options in Study Design

Sven Hernberg

Validity Issues in Study Design

Annie J. Sasco

Impact of Random Measurement Error

Paolo Vineis and Colin L. Soskolne

Statistical Methods

Annibale Biggeri and Mario Braga

Causality Assessment and Ethics in Epidemiological Research

Paolo Vineis

Case Studies Illustrating Methodological Issues in the Surveillance of Occupational Diseases

Jung-Der Wang

Questionnaires in Epidemiological Research

Steven D. Stellman and Colin L. Soskolne

Asbestos Historical Perspective

Lawrence Garfinkel

Tables

Click a link below to view table in article context.

1. Five selected summary measures of worklife exposure

2. Measures of disease occurrence

3. Measures of association for a cohort study

4. Measures of association for case-control studies

5. General frequency table layout for cohort data

6. Sample layout of case-control data

7. Layout case-control data - one control per case

8. Hypothetical cohort of 1950 individuals to T2

9. Indices of central tendency & dispersion

10. A binomial experiment & probabilities

11. Possible outcomes of a binomial experiment

12. Binomial distribution, 15 successes/30 trials

13. Binomial distribution, p = 0.25; 30 trials

14. Type II error & power; x = 12, n = 30, a = 0.05

15. Type II error & power; x = 12, n = 40, a = 0.05

16. 632 workers exposed to asbestos 20 years or longer

17. O/E number of deaths among 632 asbestos workers

Figures

Point to a thumbnail to see figure caption, click to see the figure in article context.

29. Ergonomics (27)

29. Ergonomics

Chapter Editors: Wolfgang Laurig and Joachim Vedder

Table of Contents

Tables and Figures

Overview

Wolfgang Laurig and Joachim Vedder

Goals, Principles and Methods

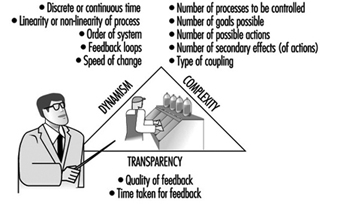

The Nature and Aims of Ergonomics

William T. Singleton

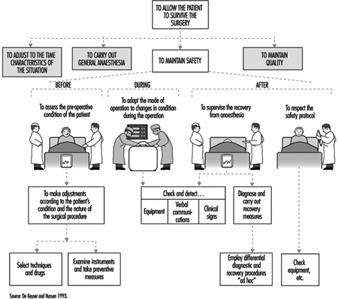

Analysis of Activities, Tasks and Work Systems

Véronique De Keyser

Ergonomics and Standardization

Friedhelm Nachreiner

Checklists

Pranab Kumar Nag

Physical and Physiological Aspects

Anthropometry

Melchiorre Masali

Muscular Work

Juhani Smolander and Veikko Louhevaara

Postures at Work

Ilkka Kuorinka

Biomechanics

Frank Darby

General Fatigue

Étienne Grandjean

Fatigue and Recovery

Rolf Helbig and Walter Rohmert

Psychological Aspects

Mental Workload

Winfried Hacker

Vigilance

Herbert Heuer

Mental Fatigue

Peter Richter

Organizational Aspects of Work

Work Organization

Eberhard Ulich and Gudela Grote

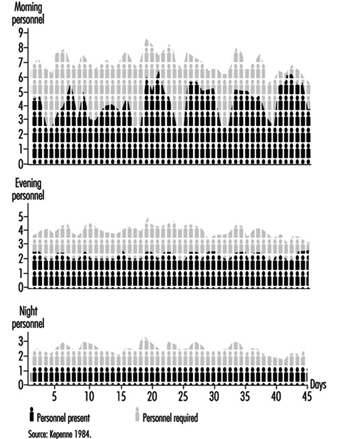

Sleep Deprivation

Kazutaka Kogi

Work Systems Design

Workstations

Roland Kadefors

Tools

T.M. Fraser

Controls, Indicators and Panels

Karl H. E. Kroemer

Information Processing and Design

Andries F. Sanders

Designing for Everyone

Designing for Specific Groups

Joke H. Grady-van den Nieuwboer

Case Study: The International Classification of Functional Limitation in People

Cultural Differences

Houshang Shahnavaz

Elderly Workers

Antoine Laville and Serge Volkoff

Workers with Special Needs

Joke H. Grady-van den Nieuwboer

Diversity and Importance of Ergonomics--Two Examples

System Design in Diamond Manufacturing

Issachar Gilad

Disregarding Ergonomic Design Principles: Chernobyl

Vladimir M. Munipov

Tables

Click a link below to view table in article context.

1. Basic anthropometric core list

2. Fatigue & recovery dependent on activity levels

3. Rules of combination effects of two stress factors on strain

4. Differenting among several negative consequences of mental strain

5. Work-oriented principles for production structuring

6. Participation in organizational context

7. User participation in the technology process

8. Irregular working hours & sleep deprivation

9. Aspects of advance, anchor & retard sleeps

10. Control movements & expected effects

11. Control-effect relations of common hand controls

12. Rules for arrangement of controls

Figures

Point to a thumbnail to see figure caption, click to see the figure in the article context.

30. Occupational Hygiene (6)

30. Occupational Hygiene

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Goals, Definitions and General Information

Berenice I. Ferrari Goelzer

Recognition of Hazards

Linnéa Lillienberg

Evaluation of the Work Environment

Lori A. Todd

Occupational Hygiene: Control of Exposures Through Intervention

James Stewart

The Biological Basis for Exposure Assessment

Dick Heederik

Occupational Exposure Limits

Dennis J. Paustenbach

Tables

1. Hazards of chemical; biological & physical agents

2. Occupational exposure limits (OELs) - various countries

Figures

31. Personal Protection (7)

31. Personal Protection

Chapter Editor: Robert F. Herrick

Table of Contents

Tables and Figures

Overview and Philosophy of Personal Protection

Robert F. Herrick

Eye and Face Protectors

Kikuzi Kimura

Foot and Leg Protection

Toyohiko Miura

Head Protection

Isabelle Balty and Alain Mayer

Hearing Protection

John R. Franks and Elliott H. Berger

Protective Clothing

S. Zack Mansdorf

Respiratory Protection

Thomas J. Nelson

Tables

Click a link below to view table in article context.

1. Transmittance requirements (ISO 4850-1979)

2. Scales of protection - gas-welding & braze-welding

3. Scales of protection - oxygen cutting

4. Scales of protection - plasma arc cutting

5. Scales of protection - electric arc welding or gouging

6. Scales of protection - plasma direct arc welding

7. Safety helmet: ISO Standard 3873-1977

8. Noise Reduction Rating of a hearing protector

9. Computing the A-weighted noise reduction

10. Examples of dermal hazard categories

11. Physical, chemical & biological performance requirements

12. Material hazards associated with particular activities

13. Assigned protection factors from ANSI Z88 2 (1992)

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

32. Record Systems and Surveillance (9)

32. Record Systems and Surveillance

Chapter Editor: Steven D. Stellman

Table of Contents

Tables and Figures

Occupational Disease Surveillance and Reporting Systems

Steven B. Markowitz

Occupational Hazard Surveillance

David H. Wegman and Steven D. Stellman

Surveillance in Developing Countries

David Koh and Kee-Seng Chia

Development and Application of an Occupational Injury and Illness Classification System

Elyce Biddle

Risk Analysis of Nonfatal Workplace Injuries and Illnesses

John W. Ruser

Case Study: Worker Protection and Statistics on Accidents and Occupational Diseases - HVBG, Germany

Martin Butz and Burkhard Hoffmann

Case Study: Wismut - A Uranium Exposure Revisited

Heinz Otten and Horst Schulz

Measurement Strategies and Techniques for Occupational Exposure Assessment in Epidemiology

Frank Bochmann and Helmut Blome

Case Study: Occupational Health Surveys in China

Tables

Click a link below to view the table in article context.

1. Angiosarcoma of the liver - world register

2. Occupational illness, US, 1986 versus 1992

3. US Deaths from pneumoconiosis & pleural mesothelioma

4. Sample list of notifiable occupational diseases

5. Illness & injury reporting code structure, US

6. Nonfatal occupational injuries & illnesses, US 1993

7. Risk of occupational injuries & illnesses

8. Relative risk for repetitive motion conditions

9. Workplace accidents, Germany, 1981-93

10. Grinders in metalworking accidents, Germany, 1984-93

11. Occupational disease, Germany, 1980-93

12. Infectious diseases, Germany, 1980-93

13. Radiation exposure in the Wismut mines

14. Occupational diseases in Wismut uranium mines 1952-90

Figures

Point to a thumbnail to see figure caption, click to see the figure in article context.

33. Toxicology (21)

33. Toxicology

Chapter Editor: Ellen K. Silbergeld

Table of Contents

Tables and Figures

Introduction

Ellen K. Silbergeld, Chapter Editor

General Principles of Toxicology

Definitions and Concepts

Bo Holmberg, Johan Hogberg and Gunnar Johanson

Toxicokinetics

Dušan Djuríc

Target Organ And Critical Effects

Marek Jakubowski

Effects Of Age, Sex And Other Factors

Spomenka Telišman

Genetic Determinants Of Toxic Response

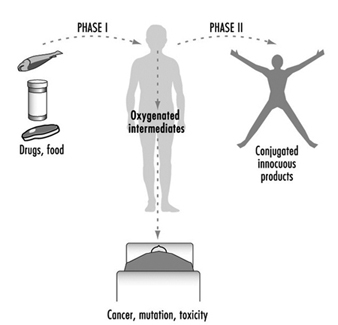

Daniel W. Nebert and Ross A. McKinnon

Mechanisms of Toxicity

Introduction And Concepts

Philip G. Watanabe

Cellular Injury And Cellular Death

Benjamin F. Trump and Irene K. Berezesky

Genetic Toxicology

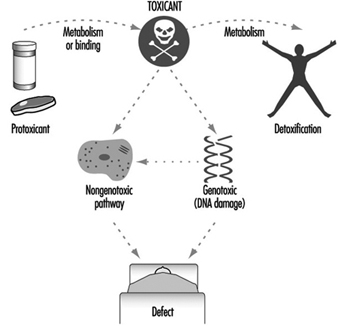

R. Rita Misra and Michael P. Waalkes

Immunotoxicology

Joseph G. Vos and Henk van Loveren

Target Organ Toxicology

Ellen K. Silbergeld

Toxicology Test Methods

Biomarkers

Philippe Grandjean

Genetic Toxicity Assessment

David M. DeMarini and James Huff

In Vitro Toxicity Testing

Joanne Zurlo

Structure Activity Relationships

Ellen K. Silbergeld

Regulatory Toxicology

Toxicology In Health And Safety Regulation

Ellen K. Silbergeld

Principles Of Hazard Identification - The Japanese Approach

Masayuki Ikeda

The United States Approach to Risk Assessment Of Reproductive Toxicants and Neurotoxic Agents

Ellen K. Silbergeld

Approaches To Hazard Identification - IARC

Harri Vainio and Julian Wilbourn

Appendix - Overall Evaluations of Carcinogenicity to Humans: IARC Monographs Volumes 1-69 (836)

Carcinogen Risk Assessment: Other Approaches

Cees A. van der Heijden

Tables

Click a link below to view table in article context.

- Examples of critical organs & critical effects

- Basic effects of possible multiple interactions of metals

- Haemoglobin adducts in workers exposed to aniline & acetanilide

- Hereditary, cancer-prone disorders & defects in DNA repair

- Examples of chemicals that exhibit genotoxicity in human cells

- Classification of tests for immune markers

- Examples of biomarkers of exposure

- Pros & cons of methods for identifying human cancer risks

- Comparison of in vitro systems for hepatotoxicity studies

- Comparison of SAR & test data: OECD/NTP analyses

- Regulation of chemical substances by laws, Japan

- Test items under the Chemical Substance Control Law, Japan

- Chemical substances & the Chemical Substances Control Law

- Selected major neurotoxicity incidents

- Examples of specialized tests to measure neurotoxicity

- Endpoints in reproductive toxicology

- Comparison of low-dose extrapolations procedures

- Frequently cited models in carcinogen risk characterization

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

General Principles

Basic Concepts and Definitions

At the worksite, industrial hygiene methodologies can measure and control only airborne chemicals, while other aspects of the problem of possible harmful agents in the environment of workers, such as skin absorption, ingestion, and non-work-related exposure, remain undetected and therefore uncontrolled. Biological monitoring helps fill this gap.

Biological monitoring was defined at a 1980 seminar in Luxembourg, jointly sponsored by the European Economic Community (EEC), the National Institute for Occupational Safety and Health (NIOSH) and the Occupational Safety and Health Administration (OSHA) (Berlin, Yodaiken and Henman 1984), as “the measurement and assessment of agents or their metabolites either in tissues, secreta, excreta, expired air or any combination of these to evaluate exposure and health risk compared to an appropriate reference”. Monitoring is a repetitive, regular and preventive activity designed to lead, if necessary, to corrective actions; it should not be confused with diagnostic procedures.

Biological monitoring is one of the three important tools in the prevention of diseases due to toxic agents in the general or occupational environment, the other two being environmental monitoring and health surveillance.

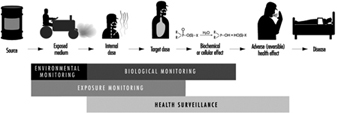

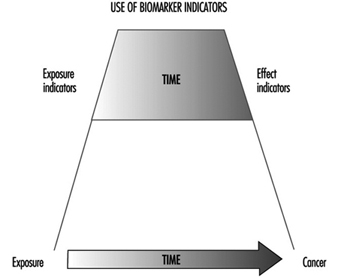

The sequence in the possible development of such disease may be schematically represented as follows: source-exposed chemical agent → internal dose → biochemical or cellular effect (reversible) → health effects → disease. The relationships among environmental, biological, and exposure monitoring, and health surveillance, are shown in figure 1.

Figure 1. The relationship between environmental, biological and exposure monitoring, and health surveillance

When a toxic substance (an industrial chemical, for example) is present in the environment, it contaminates air, water, food, or surfaces in contact with the skin; the amount of toxic agent in these media is evaluated via environmental monitoring.

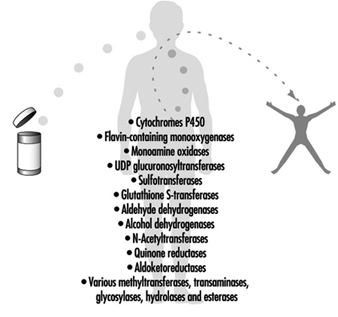

As a result of absorption, distribution, metabolism, and excretion, a certain internal dose of the toxic agent (the net amount of a pollutant absorbed in or passed through the organism over a specific time interval) is effectively delivered to the body, and becomes detectable in body fluids. As a result of its interaction with a receptor in the critical organ (the organ which, under specific conditions of exposure, exhibits the first or the most important adverse effect), biochemical and cellular events occur. Both the internal dose and the elicited biochemical and cellular effects may be measured through biological monitoring.

Health surveillance was defined at the above-mentioned 1980 EEC/NIOSH/OSHA seminar as “the periodic medico-physiological examination of exposed workers with the objective of protecting health and preventing disease”.

Biological monitoring and health surveillance are parts of a continuum that can range from the measurement of agents or their metabolites in the body via evaluation of biochemical and cellular effects, to the detection of signs of early reversible impairment of the critical organ. The detection of established disease is outside the scope of these evaluations.

Goals of Biological Monitoring

Biological monitoring can be divided into (a) monitoring of exposure, and (b) monitoring of effect, for which indicators of internal dose and of effect are used respectively.

The purpose of biological monitoring of exposure is to assess health risk through the evaluation of internal dose, achieving an estimate of the biologically active body burden of the chemical in question. Its rationale is to ensure that worker exposure does not reach levels capable of eliciting adverse effects. An effect is termed “adverse” if there is an impairment of functional capacity, a decreased ability to compensate for additional stress, a decreased ability to maintain homeostasis (a stable state of equilibrium), or an enhanced susceptibility to other environmental influences.

Depending on the chemical and the analysed biological parameter, the term internal dose may have different meanings (Bernard and Lauwerys 1987). First, it may mean the amount of a chemical recently absorbed, for example, during a single workshift. A determination of the pollutant’s concentration in alveolar air or in the blood may be made during the workshift itself, or as late as the next day (samples of blood or alveolar air may be taken up to 16 hours after the end of the exposure period). Second, when the chemical has a long biological half-life (for example, metals in the bloodstream), the internal dose could reflect the amount absorbed over a period of a few months.

Third, the term may also mean the amount of chemical stored. In this case it represents an indicator of accumulation which can provide an estimate of the concentration of the chemical in organs and/or tissues from which, once deposited, it is only slowly released. For example, measurements of DDT or PCB in blood could provide such an estimate.

Finally, an internal dose value may indicate the quantity of the chemical at the site where it exerts its effects, thus providing information about the biologically effective dose. One of the most promising and important uses of this capability, for example, is the determination of adducts formed by toxic chemicals with protein in haemoglobin or with DNA.

Biological monitoring of effects is aimed at identifying early and reversible alterations which develop in the critical organ, and which, at the same time, can identify individuals with signs of adverse health effects. In this sense, biological monitoring of effects represents the principal tool for the health surveillance of workers.

Principal Monitoring Methods

Biological monitoring of exposure is based on the determination of indicators of internal dose by measuring:

- the amount of the chemical to which the worker is exposed, measured in blood or urine (rarely in milk, saliva, or fat)

- the amount of one or more metabolites of the chemical involved in the same body fluids

- the concentration of volatile organic compounds (solvents) in alveolar air

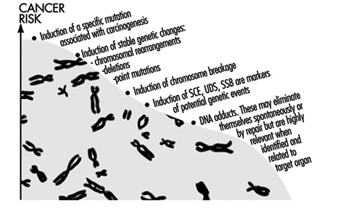

- the biologically effective dose of compounds which have formed adducts to DNA or other large molecules and which thus have a potential genotoxic effect.

Factors affecting the concentration of the chemical and its metabolites in blood or urine will be discussed below.

As far as the concentration in alveolar air is concerned, besides the level of environmental exposure, the most important factors involved are solubility and metabolism of the inhaled substance, alveolar ventilation, cardiac output, and length of exposure (Brugnone et al. 1980).

The use of DNA and haemoglobin adducts in monitoring human exposure to substances with carcinogenic potential is a very promising technique for measurement of low level exposures. (It should be noted, however, that not all chemicals that bind to macromolecules in the human organism are genotoxic, i.e., potentially carcinogenic.) Adduct formation is only one step in the complex process of carcinogenesis. Other cellular events, such as DNA repair, promotion and progression, undoubtedly modify the risk of developing a disease such as cancer. Thus, at the present time, the measurement of adducts should be seen as being confined only to monitoring exposure to chemicals. This is discussed more fully in the article “Genotoxic chemicals” later in this chapter.

Biological monitoring of effects is performed through the determination of indicators of effect, that is, those that can identify early and reversible alterations. This approach may provide an indirect estimate of the amount of chemical bound to the sites of action and offers the possibility of assessing functional alterations in the critical organ in an early phase.

Unfortunately, we can list only a few examples of the application of this approach, namely, (1) the inhibition of pseudocholinesterase by organophosphate insecticides, (2) the inhibition of δ-aminolaevulinic acid dehydratase (ALA-D) by inorganic lead, and (3) the increased urinary excretion of D-glucaric acid and porphyrins in subjects exposed to chemicals inducing microsomal enzymes and/or to porphyrogenic agents (e.g., chlorinated hydrocarbons).

Advantages and Limitations of Biological Monitoring

For substances that exert their toxicity after entering the human organism, biological monitoring provides a more focused and targeted assessment of health risk than does environmental monitoring. A biological parameter reflecting the internal dose brings us one step closer to understanding systemic adverse effects than does any environmental measurement.

Biological monitoring offers numerous advantages over environmental monitoring and in particular permits assessment of:

- exposure over an extended time period

- exposure as a result of worker mobility in the working environment

- absorption of a substance via various routes, including the skin

- overall exposure as a result of different sources of pollution, both occupational and non-occupational

- the quantity of a substance absorbed by the subject depending on factors other than the degree of exposure, such as the physical effort required by the job, ventilation, or climate

- the quantity of a substance absorbed by a subject depending on individual factors that can influence the toxicokinetics of the toxic agent in the organism; for example, age, sex, genetic features, or functional state of the organs where the toxic substance undergoes biotransformation and elimination.

In spite of these advantages, biological monitoring still suffers today from considerable limitations, the most significant of which are the following:

- The number of possible substances which can be monitored biologically is at present still rather small.

- In the case of acute exposure, biological monitoring supplies useful information only for exposure to substances that are rapidly metabolized, for example, aromatic solvents.

- The significance of biological indicators has not been clearly defined; for example, it is not always known whether the levels of a substance measured in biological material reflect current or cumulative exposure (e.g., urinary cadmium and mercury).

- Generally, biological indicators of internal dose allow assessment of the degree of exposure, but do not furnish data that will measure the actual amount present in the critical organ.

- Often there is no knowledge of possible interference in the metabolism of the substances being monitored by other exogenous substances to which the organism is simultaneously exposed in the working and general environment.

- There is not always sufficient knowledge on the relationships existing between the levels of environmental exposure and the levels of the biological indicators on the one hand, and between the levels of the biological indicators and possible health effects on the other.

- The number of biological indicators for which biological exposure indices (BEIs) exist at present is rather limited. Follow-up information is needed to determine whether a substance, presently identified as not capable of causing an adverse effect, may at a later time be shown to be harmful.

- A BEI usually represents a level of an agent that is most likely to be observed in a specimen collected from a healthy worker who has been exposed to the chemical to the same extent as a worker with an inhalation exposure to the TLV (threshold limit value) time-weighted average (TWA).

Information Required for the Development of Methods and Criteria for Selecting Biological Tests

Programming biological monitoring requires the following basic conditions:

- knowledge of the metabolism of an exogenous substance in the human organism (toxicokinetics)

- knowledge of the alterations that occur in the critical organ (toxicodynamics)

- existence of indicators

- existence of sufficiently accurate analytical methods

- possibility of using readily obtainable biological samples on which the indicators can be measured

- existence of dose-effect and dose-response relationships and knowledge of these relationships

- predictive validity of the indicators.

In this context, the validity of a test is the degree to which the parameter under consideration predicts the situation as it really is (i.e., as more accurate measuring instruments would show it to be). Validity is determined by the combination of two properties: sensitivity and specificity. If a test possesses a high sensitivity, this means that it will give few false negatives; if it possesses high specificity, it will give few false positives (CEC 1985-1989).
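The two properties can be made concrete with a small calculation. The following is a minimal sketch, assuming the test results have already been compared against a more accurate reference measurement; the function names and all counts are hypothetical and chosen only for illustration.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of truly affected subjects that the test flags.

    A high value means few false negatives.
    """
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of truly unaffected subjects that the test clears.

    A high value means few false positives.
    """
    return true_neg / (true_neg + false_pos)


# Hypothetical counts, invented for illustration only.
tp, fn, tn, fp = 45, 5, 90, 10
sens = sensitivity(tp, fn)  # 45 of 50 affected subjects detected
spec = specificity(tn, fp)  # 90 of 100 unaffected subjects cleared
```

With these invented counts, five affected subjects are missed (false negatives) and ten unaffected subjects are wrongly flagged (false positives).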

Relationship between exposure, internal dose and effects

The study of the concentration of a substance in the working environment and the simultaneous determination of the indicators of dose and effect in exposed subjects allows information to be obtained on the relationship between occupational exposure and the concentration of the substance in biological samples, and between the latter and the early effects of exposure.

Knowledge of the relationships between the dose of a substance and the effect it produces is an essential requirement if a programme of biological monitoring is to be put into effect. The evaluation of this dose-effect relationship is based on the analysis of the degree of association existing between the indicator of dose and the indicator of effect and on the study of the quantitative variations of the indicator of effect with every variation of indicator of dose. (See also the chapter Toxicology for further discussion of dose-related relationships.)

With the study of the dose-effect relationship it is possible to identify the concentration of the toxic substance at which the indicator of effect exceeds the values currently considered not harmful. Furthermore, in this way it may also be possible to examine what the no-effect level might be.

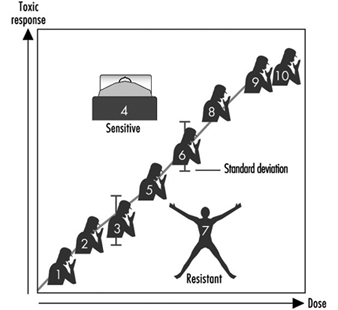

Since not all the individuals of a group react in the same manner, it is necessary to examine the dose-response relationship, in other words, to study how the group responds to exposure by evaluating the appearance of the effect compared to the internal dose. The term response denotes the percentage of subjects in the group who show a specific quantitative variation of an effect indicator at each dose level.
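The definition of response as a percentage of subjects per dose level can be sketched as follows. This is an illustrative example only: the dose groups, the effect values and the threshold that defines "a specific quantitative variation" are all hypothetical.

```python
from collections import defaultdict


def response_by_dose(records, effect_threshold):
    """Return {dose_group: percentage of subjects whose effect
    indicator is at or above effect_threshold}.

    records is an iterable of (dose_group, effect_value) pairs.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [responders, total]
    for dose_group, effect_value in records:
        counts[dose_group][1] += 1
        if effect_value >= effect_threshold:
            counts[dose_group][0] += 1
    return {group: 100.0 * r / n for group, (r, n) in counts.items()}


# Hypothetical data: (internal dose group, effect indicator value).
records = [
    ("low", 1.0), ("low", 1.2), ("low", 2.6), ("low", 0.9),
    ("high", 2.8), ("high", 3.1), ("high", 1.4), ("high", 2.5),
]
response = response_by_dose(records, effect_threshold=2.5)
```

In this invented data set one of four subjects responds in the low-dose group and three of four in the high-dose group, which is the kind of group-level pattern a dose-response analysis looks for.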

Practical Applications of Biological Monitoring

The practical application of a biological monitoring programme requires information on (1) the behaviour of the indicators used in relation to exposure, especially those relating to degree, continuity and duration of exposure, (2) the time interval between end of exposure and measurement of the indicators, and (3) all physiological and pathological factors other than exposure that can alter the indicator levels.

In the following articles the behaviour of a number of biological indicators of dose and effect that are used for monitoring occupational exposure to substances widely used in industry will be presented. The practical usefulness and limits will be assessed for each substance, with particular emphasis on time of sampling and interfering factors. Such considerations will be helpful in establishing criteria for selecting a biological test.

Time of sampling

In selecting the time of sampling, the different kinetic aspects of the chemical must be kept in mind; in particular it is essential to know how the substance is absorbed via the lung, the gastrointestinal tract and the skin, subsequently distributed to the different compartments of the body, biotransformed, and finally eliminated. It is also important to know whether the chemical may accumulate in the body.

With respect to exposure to organic substances, the collection time of biological samples becomes all the more important because the metabolic processes involved proceed at different rates, so that the absorbed dose is excreted more or less rapidly.

Interfering Factors

Correct use of biological indicators requires a thorough knowledge of those factors which, although independent of exposure, may nevertheless affect the biological indicator levels. The following are the most important types of interfering factors (Alessio, Berlin and Foà 1987).

Physiological factors including diet, sex and age, for example, can affect results. Consumption of fish and crustaceans may increase the levels of urinary arsenic and blood mercury. At the same blood lead levels, erythrocyte protoporphyrin values are significantly higher in female than in male subjects. The levels of urinary cadmium increase with age.

Among the personal habits that can distort indicator levels, smoking and alcohol consumption are particularly important. Smoking may cause direct absorption of substances naturally present in tobacco leaves (e.g., cadmium), or of pollutants present in the working environment that have been deposited on the cigarettes (e.g., lead), or of combustion products (e.g., carbon monoxide).

Alcohol consumption may influence biological indicator levels, since substances such as lead are naturally present in alcoholic beverages. Heavy drinkers, for example, show higher blood lead levels than control subjects. Ingestion of alcohol can interfere with the biotransformation and elimination of toxic industrial compounds: with a single dose, alcohol can inhibit the metabolism of many solvents, for example, trichloroethylene, xylene, styrene and toluene, because they compete with ethyl alcohol for enzymes which are essential for the breakdown of both ethanol and solvents. Regular alcohol ingestion can also affect the metabolism of solvents in a totally different manner by accelerating solvent metabolism, presumably due to induction of the microsomal oxidizing system. Since ethanol is the most important substance capable of inducing metabolic interference, it is advisable to determine indicators of exposure for solvents only on days when alcohol has not been consumed.

Less information is available on the possible effects of drugs on the levels of biological indicators. It has been demonstrated that aspirin can interfere with the biological transformation of xylene to methylhippuric acid, and phenylsalicylate, a drug widely used as an analgesic, can significantly increase the levels of urinary phenols. The consumption of aluminium-based antacid preparations can give rise to increased levels of aluminium in plasma and urine.

Marked differences have been observed in different ethnic groups in the metabolism of widely used solvents such as toluene, xylene, trichloroethylene, tetrachloroethylene, and methylchloroform.

Acquired pathological states can influence the levels of biological indicators. The critical organ can behave anomalously with respect to biological monitoring tests because of the specific action of the toxic agent as well as for other reasons. An example of situations of the first type is the behaviour of urinary cadmium levels: when tubular disease due to cadmium sets in, urinary excretion increases markedly and the levels of the test no longer reflect the degree of exposure. An example of the second type of situation is the increase in erythrocyte protoporphyrin levels observed in iron-deficient subjects who show no abnormal lead absorption.

Physiological changes in the biological media—urine, for example—on which determinations of the biological indicators are based, can influence the test values. For practical purposes, only spot urinary samples can be obtained from individuals during work, and the varying density of these samples means that the levels of the indicator can fluctuate widely in the course of a single day.

In order to overcome this difficulty, it is advisable to eliminate over-diluted or over-concentrated samples according to selected specific gravity or creatinine values. In particular, urine with a specific gravity below 1010 or higher than 1030 or with a creatinine concentration lower than 0.5 g/l or greater than 3.0 g/l should be discarded. Several authors also suggest adjusting the values of the indicators according to specific gravity or expressing the values according to urinary creatinine content.
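The screening rule and the creatinine-based expression described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class and function names are hypothetical, the indicator unit (µg/l) is chosen arbitrarily, and specific gravity is entered on the same scale as in the text (for example, 1020). Adjustment by specific gravity is not shown, because the text does not give a formula for it.

```python
from dataclasses import dataclass
from typing import Optional

# Acceptance limits quoted in the text above.
SG_MIN, SG_MAX = 1010, 1030        # specific gravity, scale as used in the text
CREAT_MIN, CREAT_MAX = 0.5, 3.0    # urinary creatinine, g/l


@dataclass
class UrineSample:
    """Hypothetical record for one spot urine sample."""
    indicator_ug_per_l: float                   # measured indicator (unit is an assumption)
    specific_gravity: Optional[float] = None
    creatinine_g_per_l: Optional[float] = None


def is_acceptable(sample: UrineSample) -> bool:
    """Return False for over-diluted or over-concentrated samples."""
    sg = sample.specific_gravity
    if sg is not None and not (SG_MIN <= sg <= SG_MAX):
        return False
    cr = sample.creatinine_g_per_l
    if cr is not None and not (CREAT_MIN <= cr <= CREAT_MAX):
        return False
    return True


def per_gram_creatinine(sample: UrineSample) -> float:
    """Express the indicator per gram of creatinine (here, ug/g creatinine)."""
    if sample.creatinine_g_per_l is None:
        raise ValueError("creatinine concentration is required")
    return sample.indicator_ug_per_l / sample.creatinine_g_per_l
```

For instance, a sample with an indicator level of 30 µg/l, a specific gravity of 1020 and a creatinine concentration of 1.5 g/l passes the screening and corresponds to 20 µg/g creatinine.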

Pathological changes in the biological media can also considerably influence the values of the biological indicators. For example, in anaemic subjects exposed to metals (mercury, cadmium, lead, etc.) the blood levels of the metal may be lower than would be expected on the basis of exposure; this is due to the low level of red blood cells that transport the toxic metal in the blood circulation.

Therefore, when determinations of toxic substances or metabolites bound to red blood cells are made on whole blood, it is always advisable to determine the haematocrit, which gives a measure of the percentage of blood cells in whole blood.

Multiple exposure to toxic substances present in the workplace

In the case of combined exposure to more than one toxic substance present at the workplace, metabolic interferences may occur that can alter the behaviour of the biological indicators and thus create serious problems in interpretation. In human studies, interferences have been demonstrated, for example, in combined exposure to toluene and xylene, xylene and ethylbenzene, toluene and benzene, hexane and methyl ethyl ketone, tetrachloroethylene and trichloroethylene.

In particular, it should be noted that when biotransformation of a solvent is inhibited, the urinary excretion of its metabolite is reduced (possible underestimation of risk) whereas the levels of the solvent in blood and expired air increase (possible overestimation of risk).

Thus, in situations in which it is possible to measure simultaneously the substances and their metabolites in order to interpret the degree of inhibitory interference, it would be useful to check whether the levels of the urinary metabolites are lower than expected and at the same time whether the concentration of the solvents in blood and/or expired air is higher.
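The cross-check described above can be written as a simple rule. The following is a minimal sketch, not a validated procedure: the function name is hypothetical, and the "expected" values are assumed to be supplied by the user (for example, the levels anticipated for the same ambient concentration in the absence of co-exposure).

```python
def suggests_inhibitory_interference(
    metabolite_measured: float,
    metabolite_expected: float,
    solvent_measured: float,
    solvent_expected: float,
) -> bool:
    """Flag the pattern described in the text.

    Returns True only when the urinary metabolite is lower than expected
    AND the solvent level in blood or expired air is higher than expected.
    Units are arbitrary but must match within each measured/expected pair.
    """
    metabolite_low = metabolite_measured < metabolite_expected
    solvent_high = solvent_measured > solvent_expected
    return metabolite_low and solvent_high
```

A result of True indicates only that the two findings coincide; it does not by itself establish that metabolic interference has occurred.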

Metabolic interferences have been described for exposures where the single substances are present in levels close to and sometimes below the currently accepted limit values. Interferences, however, do not usually occur when exposure to each substance present in the workplace is low.

Practical Use of Biological Indicators

Biological indicators can be used for various purposes in occupational health practice, in particular for (1) periodic control of individual workers, (2) analysis of the exposure of a group of workers, and (3) epidemiological assessments. The tests used should possess the features of precision, accuracy, good sensitivity, and specificity in order to minimize the possible number of false classifications.
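The link between sensitivity, specificity and false classifications can be made concrete with a small calculation. The Python sketch below is illustrative only: the function name `screening_metrics` and the counts in the usage note are hypothetical, and the formulas are the standard textbook definitions rather than anything prescribed in this chapter.

```python
def screening_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute test-performance measures from a 2x2 classification table.

    tp: exposed subjects correctly classified as exposed
    fp: unexposed subjects wrongly classified as exposed
    fn: exposed subjects wrongly classified as unexposed
    tn: unexposed subjects correctly classified as unexposed
    """
    if min(tp, fp, fn, tn) < 0:
        raise ValueError("counts must be non-negative")
    if 0 in (tp + fn, tn + fp, tp + fp, tn + fn):
        raise ValueError("each row and column of the table must be non-empty")
    return {
        "sensitivity": tp / (tp + fn),                # share of exposed subjects detected
        "specificity": tn / (tn + fp),                # share of unexposed subjects cleared
        "positive_predictive_value": tp / (tp + fp),  # share of positives that are true
        "negative_predictive_value": tn / (tn + fn),  # share of negatives that are true
    }
```

With hypothetical counts of tp=45, fn=5, fp=95 and tn=855, sensitivity and specificity are both 0.90, yet only 45 of the 140 positive results (about 32%) are true positives. This shows why a test with apparently good sensitivity and specificity can still produce many false classifications when few of the subjects tested are actually exposed.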

Reference values and reference groups

A reference value is the level of a biological indicator in the general population not occupationally exposed to the toxic substance under study. It is necessary to refer to these values in order to compare the data obtained through biological monitoring programmes in a population which is presumed to be exposed. Reference values should not be confused with limit values, which generally are the legal limits or guidelines for occupational and environmental exposure (Alessio et al. 1992).

When it is necessary to compare the results of group analyses, the distribution of the values in the reference group and in the group under study must be known because only then can a statistical comparison be made. In these cases, it is essential to attempt to match the general population (reference group) with the exposed group for similar characteristics such as sex, age, lifestyle and eating habits.

To obtain reliable reference values one must make sure that the subjects making up the reference group have never been exposed to the toxic substances, either occupationally or due to particular conditions of environmental pollution.

In assessing exposure to toxic substances one must be careful not to include subjects who, although not directly exposed to the toxic substance in question, work in the same workplace; if these subjects are in fact indirectly exposed, the exposure of the group may consequently be underestimated.

Another practice to avoid, although it is still widespread, is the use for reference purposes of values reported in the literature that are derived from case lists from other countries and may often have been collected in regions where different environmental pollution situations exist.

Periodic monitoring of individual workers

Periodic monitoring of individual workers is mandatory when the levels of the toxic substance in the atmosphere of the working environment approach the limit value. Where possible, it is advisable to simultaneously check an indicator of exposure and an indicator of effect. The data thus obtained should be compared with the reference values and the limit values suggested for the substance under study (ACGIH 1993).

Analysis of a group of workers

Analysis of a group becomes mandatory when the results of the biological indicators used can be markedly influenced by factors independent of exposure (diet, concentration or dilution of urine, etc.) and for which a wide range of “normal” values exists.

In order to ensure that the group study will furnish useful results, the group must be sufficiently numerous and homogeneous as regards exposure, sex, and, in the case of some toxic agents, work seniority. The more the exposure levels are constant over time, the more reliable the data will be. An investigation carried out in a workplace where the workers frequently change department or job will have little value. For a correct assessment of a group study it is not sufficient to express the data only as mean values and range. The frequency distribution of the values of the biological indicator in question must also be taken into account.
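Why mean and range alone are insufficient can be shown with a short Python sketch. The function names and the two data sets are hypothetical and chosen purely for illustration; bins are labelled by their lower edge, and integer values are used to avoid rounding issues.

```python
from collections import Counter
from statistics import mean


def frequency_distribution(values, bin_width):
    """Count values per bin; each bin is labelled by its lower edge."""
    if bin_width <= 0:
        raise ValueError("bin_width must be positive")
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))


def summarize(values, bin_width):
    """Return mean, range and frequency distribution for one group."""
    return {
        "mean": mean(values),
        "range": (min(values), max(values)),
        "distribution": frequency_distribution(values, bin_width),
    }


# Two hypothetical groups with identical mean and range.
group_a = [10, 20, 30, 40, 50]
group_b = [10, 10, 30, 50, 50]
summary_a = summarize(group_a, bin_width=20)
summary_b = summarize(group_b, bin_width=20)
```

Both groups have a mean of 30 and a range of 10 to 50, but the distributions differ: group A has most values in the middle bins, whereas group B is split between the lowest and highest values.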

Epidemiological assessments

Data obtained from biological monitoring of groups of workers can also be used in cross-sectional or prospective epidemiological studies.

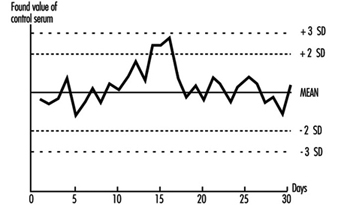

Cross-sectional studies can be used to compare the situations existing in different departments of the factory or in different industries in order to set up risk maps for manufacturing processes. A difficulty that may be encountered in this type of application depends on the fact that inter-laboratory quality controls are not yet sufficiently widespread; thus it cannot be guaranteed that different laboratories will produce comparable results.

Prospective studies serve to assess the behaviour over time of the exposure levels so as to check, for example, the efficacy of environmental improvements or to correlate the behaviour of biological indicators over the years with the health status of the subjects being monitored. The results of such long-term studies are very useful in solving problems involving changes over time. At present, biological monitoring is mainly used as a suitable procedure for assessing whether current exposure is judged to be “safe,” but it is as yet not valid for assessing situations over time. A given level of exposure considered safe today may no longer be regarded as such at some point in the future.

Ethical Aspects

Some ethical considerations arise in connection with the use of biological monitoring as a tool to assess potential toxicity. One goal of such monitoring is to assemble enough information to decide what level of any given effect constitutes an undesirable effect; in the absence of sufficient data, any perturbation will be considered undesirable. The regulatory and legal implications of this type of information need to be evaluated. Therefore, we should seek societal discussion and consensus as to the ways in which biological indicators should best be used. In other words, education is required of workers, employers, communities and regulatory authorities as to the meaning of the results obtained by biological monitoring so that no one is either unduly alarmed or complacent.

There must be appropriate communication with the individual upon whom the test has been performed concerning the results and their interpretation. Further, whether or not the use of some indicators is experimental should be clearly conveyed to all participants.

The International Code of Ethics for Occupational Health Professionals, issued by the International Commission on Occupational Health in 1992, stated that “biological tests and other investigations must be chosen from the point of view of their validity for protection of the health of the worker concerned, with due regard to their sensitivity, their specificity and their predictive value”. Use must not be made of tests “which are not reliable or which do not have a sufficient predictive value in relation to the requirements of the work assignment”. (See the chapter Ethical Issues for further discussion and the text of the Code.)

Trends in Regulation and Application

Biological monitoring can be carried out for only a limited number of environmental pollutants on account of the limited availability of appropriate reference data. This imposes important limitations on the use of biological monitoring in evaluating exposure.

The World Health Organization (WHO), for example, has proposed health-based reference values for lead, mercury, and cadmium only. These values are defined as levels in blood and urine not linked to any detectable adverse effect. The American Conference of Governmental Industrial Hygienists (ACGIH) has established biological exposure indices (BEIs) for about 26 compounds; BEIs are defined as “values for determinants which are indicators of the degree of integrated exposure to industrial chemicals” (ACGIH 1995).

The Nature and Aims of Ergonomics

Definition and Scope

Ergonomics means literally the study or measurement of work. In this context, the term work signifies purposeful human function; it extends beyond the more restricted concept of work as labour for monetary gain to incorporate all activities whereby a rational human operator systematically pursues an objective. Thus it includes sports and other leisure activities, domestic work such as child care and home maintenance, education and training, health and social service, and either controlling engineered systems or adapting to them, for example, as a passenger in a vehicle.

The human operator, the focus of study, may be a skilled professional operating a complex machine in an artificial environment, a customer who has casually purchased a new piece of equipment for personal use, a child sitting in a classroom or a disabled person in a wheelchair. The human being is highly adaptable but not infinitely so. There are ranges of optimum conditions for any activity. One of the tasks of ergonomics is to define what these ranges are and to explore the undesirable effects which occur if the limits are transgressed—for example if a person is expected to work in conditions of excessive heat, noise or vibration, or if the physical or mental workload is too high or too low.

Ergonomics examines not only the passive ambient situation but also the unique advantages of the human operator and the contributions that can be made if a work situation is designed to permit and encourage the person to make the best use of his or her abilities. Human abilities may be characterized not only with reference to the generic human operator but also with respect to those more particular abilities that are called upon in specific situations where high performance is essential. For example, an automobile manufacturer will consider the range of physical size and strength of the population of drivers who are expected to use a particular model to ensure that the seats are comfortable, that the controls are readily identifiable and within reach, that there is clear visibility to the front and the rear, and that the internal instruments are easy to read. Ease of entry and egress will also be taken into account. By contrast, the designer of a racing car will assume that the driver is athletic so that ease of getting in and out, for example, is not important and, in fact, design features as a whole as they relate to the driver may well be tailored to the dimensions and preferences of a particular driver to ensure that he or she can exercise his or her full potential and skill as a driver.

In all situations, activities and tasks the focus is the person or persons involved. It is assumed that the structure, the engineering and any other technology is there to serve the operator, not the other way round.

History and Status

About a century ago it was recognized that working hours and conditions in some mines and factories were not tolerable in terms of safety and health, and the need was evident to pass laws to set permissible limits in these respects. The determination and statement of those limits can be regarded as the beginning of ergonomics. They were, incidentally, the beginning of all the activities which now find expression through the work of the International Labour Organization (ILO).

Research, development and application proceeded slowly until the Second World War. This triggered greatly accelerated development of machines and instrumentation such as vehicles, aircraft, tanks, guns and vastly improved sensing and navigation devices. As technology advanced, greater flexibility was available to allow adaptation to the operator, an adaptation that became the more necessary because human performance was limiting the performance of the system. If a powered vehicle can travel at a speed of only a few kilometres per hour there is no need to worry about the performance of the driver, but when the vehicle’s maximum speed is increased by a factor of ten or a hundred, then the driver has to react more quickly and there is no time to correct mistakes to avert disaster. Similarly, as technology is improved there is less need to worry about mechanical or electrical failure (for instance) and attention is freed to think about the needs of the driver.

Thus ergonomics, in the sense of adapting engineering technology to the needs of the operator, becomes simultaneously both more necessary and more feasible as engineering advances.

The term ergonomics came into use about 1950 when the priorities of developing industry were taking over from the priorities of the military. The development of research and application for the following thirty years is described in detail in Singleton (1982). The United Nations agencies, particularly the ILO and the World Health Organization (WHO), became active in this field in the 1960s.

In immediate postwar industry the overriding objective, shared by ergonomics, was greater productivity. This was a feasible objective for ergonomics because so much industrial productivity was determined directly by the physical effort of the workers involved—speed of assembly and rate of lifting and movement determined the extent of output. Gradually, mechanical power replaced human muscle power. More power, however, leads to more accidents on the simple principle that an accident is the consequence of power in the wrong place at the wrong time. When things are happening faster, the potential for accidents is further increased. Thus the concern of industry and the aim of ergonomics gradually shifted from productivity to safety. This occurred in the 1960s and early 1970s. About and after this time, much of manufacturing industry shifted from batch production to flow and process production. The role of the operator shifted correspondingly from direct participation to monitoring and inspection. This resulted in a lower frequency of accidents because the operator was more remote from the scene of action but sometimes in a greater severity of accidents because of the speed and power inherent in the process.

When output is determined by the speed at which machines function then productivity becomes a matter of keeping the system running: in other words, reliability is the objective. Thus the operator becomes a monitor, a trouble-shooter and a maintainer rather than a direct manipulator.

This historical sketch of the postwar changes in manufacturing industry might suggest that the ergonomist has regularly dropped one set of problems and taken up another set but this is not the case for several reasons. As explained earlier, the concerns of ergonomics are much wider than those of manufacturing industry. In addition to production ergonomics, there is product or design ergonomics, that is, adapting the machine or product to the user. In the car industry, for example, ergonomics is important not only to component manufacturing and the production lines but also to the eventual driver, passenger and maintainer. It is now routine in the marketing of cars and in their critical appraisal by others to review the quality of the ergonomics, considering ride, seat comfort, handling, noise and vibration levels, ease of use of controls, visibility inside and outside, and so on.

It was suggested above that human performance is usually optimized within a tolerance range of a relevant variable. Much of the early ergonomics attempted to reduce both muscle power output and the extent and variety of movement by way of ensuring that such tolerances were not exceeded. The greatest change in the work situation, the advent of computers, has created the opposite problem. Unless it is well designed ergonomically, a computer workspace can induce too fixed a posture, too little bodily movement and too much repetition of particular combinations of joint movements.

This brief historical review is intended to indicate that, although there has been continuous development of ergonomics, it has taken the form of adding more and more problems rather than changing the problems. However, the corpus of knowledge grows and becomes more reliable and valid: energy expenditure norms do not depend on how or why the energy is expended; postural issues are the same in aircraft seats and in front of computer screens; and much human activity now involves using videoscreens, for which there are well-established principles based on a mix of laboratory evidence and field studies.

Ergonomics and Related Disciplines

The development of a science-based application which is intermediate between the well-established technologies of engineering and medicine inevitably overlaps into many related disciplines. In terms of its scientific basis, much of ergonomic knowledge derives from the human sciences: anatomy, physiology and psychology. The physical sciences also make a contribution, for example, to solving problems of lighting, heating, noise and vibration.

Most of the European pioneers in ergonomics were workers among the human sciences and it is for this reason that ergonomics is well-balanced between physiology and psychology. A physiological orientation is required as a background to problems such as energy expenditure, posture and application of forces, including lifting. A psychological orientation is required to study problems such as information presentation and job satisfaction. There are of course many problems which require a mixed human sciences approach such as stress, fatigue and shift work.

Most of the American pioneers in this field were involved in either experimental psychology or engineering and it is for this reason that their typical occupational titles, human engineering and human factors, reflect a difference in emphasis (but not in core interests) from European ergonomics. This also explains why occupational hygiene, from its close relationship to medicine, particularly occupational medicine, is regarded in the United States as quite different from human factors or ergonomics. The difference in other parts of the world is less marked. Ergonomics concentrates on the human operator in action; occupational hygiene concentrates on the hazards to the human operator present in the ambient environment. Thus the central interest of the occupational hygienist is toxic hazards, which are outside the scope of the ergonomist. The occupational hygienist is concerned about effects on health, either long-term or short-term; the ergonomist is, of course, concerned about health but he or she is also concerned about other consequences, such as productivity, work design and workspace design. Safety and health are the generic issues which run through ergonomics, occupational hygiene, occupational health and occupational medicine. It is, therefore, not surprising to find that in a large institution of a research, design or production kind, these subjects are often grouped together. This makes possible an approach based on a team of experts in these separate subjects, each making a specialist contribution to the general problem of health, not only of the workers in the institution but also of those affected by its activities and products. By contrast, in institutions concerned with design or provision of services, the ergonomist might be closer to the engineers and other technologists.

It will be clear from this discussion that because ergonomics is interdisciplinary and still quite new there is an important problem of how it should best be fitted into an existing organization. It overlaps onto so many other fields because it is concerned with people and people are the basic and all-pervading resource of every organization. There are many ways in which it can be fitted in, depending on the history and objectives of the particular organization. The main criteria are that ergonomics objectives are understood and appreciated and that mechanisms for implementation of recommendations are built into the organization.

Aims of Ergonomics

It will be clear already that the benefits of ergonomics can appear in many different forms, in productivity and quality, in safety and health, in reliability, in job satisfaction and in personal development.

The reason for this breadth of scope is that its basic aim is efficiency in purposeful activity—efficiency in the widest sense of achieving the desired result without wasteful input, without error and without damage to the person involved or to others. It is not efficient to expend unnecessary energy or time because insufficient thought has been given to the design of the work, the workspace, the working environment and the working conditions. It is not efficient to achieve the desired result in spite of the situation design rather than with support from it.

The aim of ergonomics is to ensure that the working situation is in harmony with the activities of the worker. This aim is self-evidently valid but attaining it is far from easy for a variety of reasons. The human operator is flexible and adaptable and there is continuous learning, but there are quite large individual differences. Some differences, such as physical size and strength, are obvious, but others, such as cultural differences and differences in style and in level of skill, are less easy to identify.

In view of these complexities it might seem that the solution is to provide a flexible situation where the human operator can optimize a specifically appropriate way of doing things. Unfortunately such an approach is sometimes impracticable because the more efficient way is often not obvious, with the result that a worker can go on doing something the wrong way or in the wrong conditions for years.

Thus it is necessary to adopt a systematic approach: to start from a sound theory, to set measurable objectives and to check success against these objectives. The various possible objectives are considered below.

Safety and health

There can be no disagreement about the desirability of safety and health objectives. The difficulty stems from the fact that neither is directly measurable: their achievement is assessed by their absence rather than their presence. The data in question always pertain to departures from safety and health.

In the case of health, much of the evidence is long-term as it is based on populations rather than individuals. It is, therefore, necessary to maintain careful records over long periods and to adopt an epidemiological approach through which risk factors can be identified and measured. For example, what should be the maximum hours per day or per year required of a worker at a computer workstation? It depends on the design of the workstation, the kind of work and the kind of person (age, vision, abilities and so on). The effects on health can be diverse, from wrist problems to mental apathy, so it is necessary to carry out comprehensive studies covering quite large populations while simultaneously keeping track of differences within the populations.

Safety is more directly measurable in a negative sense in terms of kinds and frequencies of accidents and damage. There are problems in defining different kinds of accidents and identifying the often multiple causal factors and there is often a distant relationship between the kind of accident and the degree of harm, from none to fatality.

Nevertheless, an enormous body of evidence concerning safety and health has been accumulated over the past fifty years and consistencies have been discovered which can be related back to theory, to laws and standards and to principles operative in particular kinds of situations.

Productivity and efficiency

Productivity is usually defined in terms of output per unit of time, whereas efficiency incorporates other variables, particularly the ratio of output to input. Efficiency incorporates the cost of what is done in relation to achievement, and in human terms this requires the consideration of the penalties to the human operator.
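The distinction between the two measures can be illustrated with a short Python sketch. The function names, the choice of energy as the input, and all figures are hypothetical; in practice the relevant input (and any penalty to the operator) has to be defined for the situation being studied.

```python
def productivity(output_units: float, hours: float) -> float:
    """Output per unit of time."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return output_units / hours


def efficiency(output_units: float, input_units: float) -> float:
    """Ratio of output to input (the input measure is an assumption here)."""
    if input_units <= 0:
        raise ValueError("input_units must be positive")
    return output_units / input_units


# Hypothetical comparison of two working methods over an 8-hour shift.
method_a = {"output": 120, "hours": 8, "energy_kwh": 60}
method_b = {"output": 160, "hours": 8, "energy_kwh": 100}

prod_a = productivity(method_a["output"], method_a["hours"])    # 15.0 units/h
prod_b = productivity(method_b["output"], method_b["hours"])    # 20.0 units/h
eff_a = efficiency(method_a["output"], method_a["energy_kwh"])  # 2.0 units/kWh
eff_b = efficiency(method_b["output"], method_b["energy_kwh"])  # 1.6 units/kWh
```

In this invented case method B is the more productive but the less efficient of the two, which is why the measures should not be treated as interchangeable.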

In industrial situations, productivity is relatively easy to measure: the amount produced can be counted and the time taken to produce it is simple to record. Productivity data are often used in before/after comparisons of working methods, situations or conditions. Such comparisons involve assumptions about equivalence of effort and other costs because they are based on the principle that the human operator will perform as well as is feasible in the circumstances. If the productivity is higher then the circumstances must be better. There is much to recommend this simple approach provided that it is used with due regard to the many possible complicating factors which can disguise what is really happening. The best safeguard is to try to make sure that nothing has changed between the before and after situations except the aspects being studied.

Efficiency is a more comprehensive but always a more difficult measure. It usually has to be specifically defined for a particular situation and in assessing the results of any studies the definition should be checked for its relevance and validity in terms of the conclusions being drawn. For example, is bicycling more efficient than walking? Bicycling is much more productive in terms of the distance that can be covered on a road in a given time, and it is more efficient in terms of energy expenditure per unit of distance or, for indoor exercise, because the apparatus required is cheaper and simpler. On the other hand, the purpose of the exercise might be energy expenditure for health reasons or to climb a mountain over difficult terrain; in these circumstances walking will be more efficient. Thus, an efficiency measure has meaning only in a well-defined context.

Reliability and quality

As explained above, reliability rather than productivity becomes the key measure in high technology systems (for instance, transport aircraft, oil refining and power generation). The controllers of such systems monitor performance and make their contribution to productivity and to safety by making tuning adjustments to ensure that the automatic machines stay on line and function within limits. All these systems are in their safest states either when they are quiescent or when they are functioning steadily within the designed performance envelope. They become more dangerous when moving or being moved between equilibrium states, for example, when an aircraft is taking off or a process system is being shut down. High reliability is the key characteristic not only for safety reasons but also because unplanned shut-down or stoppage is extremely expensive. Reliability is straightforward to measure after performance but is extremely difficult to predict except by reference to the past performance of similar systems. When or if something goes wrong human error is invariably a contributing cause, but it is not necessarily an error on the part of the controller: human errors can originate at the design stage and during setting up and maintenance. It is now accepted that such complex high-technology systems require a considerable and continuous ergonomics input from design to the assessment of any failures that occur.

Quality is related to reliability but is very difficult if not impossible to measure. Traditionally, in batch and flow production systems, quality has been checked by inspection after output, but the current established principle is to combine production and quality maintenance. Thus each operator has parallel responsibility as an inspector. This usually proves to be more effective, but it may mean abandoning work incentives based simply on rate of production. In ergonomic terms it makes sense to treat the operator as a responsible person rather than as a kind of robot programmed for repetitive performance.

Job satisfaction and personal development

From the principle that the worker or human operator should be recognized as a person and not a robot it follows that consideration should be given to responsibilities, attitudes, beliefs and values. This is not easy because there are many variables, mostly detectable but not quantifiable, and there are large individual and cultural differences. Nevertheless a great deal of effort now goes into the design and management of work with the aim of ensuring that the situation is as satisfactory as is reasonably practicable from the operator’s viewpoint. Some measurement is possible by using survey techniques and some principles are available based on such working features as autonomy and empowerment.

Even accepting that these efforts take time and cost money, there can still be considerable dividends from listening to the suggestions, opinions and attitudes of the people actually doing the work. Their approach may not be the same as that of the external work designer and not the same as the assumptions made by the work designer or manager. These differences of view are important and can provide a refreshing change in strategy on the part of everyone involved.

It is well established that the human being is a continuous learner or can be, given the appropriate conditions. The key condition is to provide feedback about past and present performance which can be used to improve future performance. Moreover, such feedback itself acts as an incentive to performance. Thus everyone gains, the performer and those responsible in a wider sense for the performance. It follows that there is much to be gained from performance improvement, including self-development. The principle that personal development should be an aspect of the application of ergonomics requires greater designer and manager skills but, if it can be applied successfully, can improve all the aspects of human performance discussed above.

Successful application of ergonomics often follows from doing no more than developing the appropriate attitude or point of view. The people involved are inevitably the central factor in any human effort and the systematic consideration of their advantages, limitations, needs and aspirations is inherently important.

Conclusion

Ergonomics is the systematic study of people at work with the objective of improving the work situation, the working conditions and the tasks performed. The emphasis is on acquiring relevant and reliable evidence on which to base recommendations for changes in specific situations and on developing more general theories, concepts, guidelines and procedures which will contribute to the continually developing expertise available from ergonomics.

Definitions and Concepts

Exposure, Dose and Response

Toxicity is the intrinsic capacity of a chemical agent to affect an organism adversely.

Xenobiotics is a term for “foreign substances”, that is, foreign to the organism. Its opposite is endogenous compounds. Xenobiotics include drugs, industrial chemicals, naturally occurring poisons and environmental pollutants.

Hazard is the potential for the toxicity to be realized in a specific setting or situation.

Risk is the probability that a specific adverse effect will occur. It is often expressed as the percentage of cases in a given population during a specific time period. A risk estimate can be based upon actual cases or upon a projection of future cases derived from extrapolations.

Toxicity rating and toxicity classification can be used for regulatory purposes. Toxicity rating is an arbitrary grading of doses or exposure levels causing toxic effects. The grading can be “supertoxic,” “highly toxic,” “moderately toxic” and so on. The most common ratings concern acute toxicity. Toxicity classification concerns the grouping of chemicals into general categories according to their most important toxic effect. Such categories can include allergenic, neurotoxic, carcinogenic and so on. This classification can be of administrative value as a warning and as information.

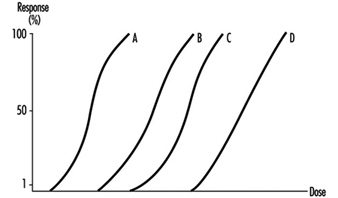

The dose-effect relationship is the relationship between dose and effect on the individual level. An increase in dose may increase the intensity of an effect, or a more severe effect may result. A dose-effect curve may be obtained at the level of the whole organism, the cell or the target molecule. Some toxic effects, such as death or cancer, are not graded but are “all or none” effects.

The dose-response relationship is the relationship between dose and the percentage of individuals showing a specific effect. With increasing dose a greater number of individuals in the exposed population will usually be affected.

It is essential to toxicology to establish dose-effect and dose-response relationships. In medical (epidemiological) studies a criterion often used for accepting a causal relationship between an agent and a disease is that effect or response is proportional to dose.

Several dose-response curves can be drawn for a chemical—one for each type of effect. The dose-response curve for most toxic effects (when studied in large populations) has a sigmoid shape. There is usually a low-dose range where there is no response detected; as dose increases, the response follows an ascending curve that will usually reach a plateau at a 100% response. The dose-response curve reflects the variations among individuals in a population. The slope of the curve varies from chemical to chemical and between different types of effects. For some chemicals with specific effects (carcinogens, initiators, mutagens) the dose-response curve might be linear from dose zero within a certain dose range. This means that no threshold exists and that even small doses represent a risk. Above that dose range, the risk may increase at greater than a linear rate.
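The two curve shapes described above can be contrasted in a minimal sketch. The log-logistic (Hill-type) form, the function names and all parameter values are assumptions chosen for illustration; they do not describe any particular chemical or data set.

```python
def sigmoid_response(dose, ed50, slope):
    """Fraction of a population responding, assuming a log-logistic model.

    ed50  -- dose at which half the population responds (hypothetical value)
    slope -- steepness of the curve (hypothetical value)
    """
    if dose <= 0:
        return 0.0
    return 1.0 / (1.0 + (ed50 / dose) ** slope)


def linear_no_threshold_response(dose, risk_per_unit_dose):
    """Fraction responding when risk rises linearly from dose zero."""
    return min(1.0, max(0.0, risk_per_unit_dose * dose))


if __name__ == "__main__":
    # Hypothetical doses in arbitrary units.
    for dose in (0.1, 1, 5, 10, 20, 100):
        s = sigmoid_response(dose, ed50=10, slope=3)
        lin = linear_no_threshold_response(dose, risk_per_unit_dose=0.002)
        print(f"dose={dose:>5}: sigmoid={s:.4f}  linear={lin:.4f}")
```

In the sigmoid case the response at low doses is so small that it would probably go undetected, whereas the linear model assigns a non-zero risk to every dose above zero.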

Variation in exposure during the day and the total length of exposure during one’s lifetime may be as important for the outcome (response) as mean or average or even integrated dose level. High peak exposures may be more harmful than a more even exposure level. This is the case for some organic solvents. On the other hand, for some carcinogens, it has been experimentally shown that the fractionation of a single dose into several exposures with the same total dose may be more effective in producing tumours.

A dose is often expressed as the amount of a xenobiotic entering an organism (in units such as mg/kg body weight). The dose may be expressed in different (more or less informative) ways: exposure dose, which is the air concentration of pollutant inhaled during a certain time period (in work hygiene usually eight hours), or the retained or absorbed dose (in industrial hygiene also called the body burden), which is the amount present in the body at a certain time during or after exposure. The tissue dose is the amount of substance in a specific tissue and the target dose is the amount of substance (usually a metabolite) bound to the critical molecule. The target dose can be expressed as mg chemical bound per mg of a specific macromolecule in the tissue. To apply this concept, information on the mechanism of toxic action on the molecular level is needed. The target dose is more exactly associated with the toxic effect. The exposure dose or body burden may be more easily available, but these are less precisely related to the effect.

In the dose concept a time aspect is often included, even if it is not always expressed. The theoretical dose according to Haber’s law is D = ct, where D is dose, c is concentration of the xenobiotic in the air and t the duration of exposure to the chemical. If this concept is used at the target organ or molecular level, the amount per mg tissue or molecule over a certain time may be used. The time aspect is usually more important for understanding repeated exposures and chronic effects than for single exposures and acute effects.
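As a small worked example of D = ct, the sketch below computes the theoretical dose for two exposure patterns. The function name, the units (mg/m³ and hours) and the numbers are illustrative assumptions.

```python
def haber_dose(concentration_mg_m3, duration_h):
    """Theoretical dose D = c * t (here in mg/m3 x h)."""
    if concentration_mg_m3 < 0 or duration_h < 0:
        raise ValueError("concentration and duration must be non-negative")
    return concentration_mg_m3 * duration_h


if __name__ == "__main__":
    # Two hypothetical exposure patterns with the same c*t product.
    full_shift = haber_dose(50, 8)    # 50 mg/m3 over an eight-hour shift
    short_peak = haber_dose(100, 4)   # 100 mg/m3 over four hours
    print(full_shift, short_peak)
```

Both patterns give the same theoretical dose. An equal product does not guarantee an equal effect, since high peak exposures may be more harmful than a more even exposure level.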

Additive effects occur as a result of exposure to a combination of chemicals, where the individual toxicities are simply added to each other (1+1 = 2). When chemicals act via the same mechanism, additivity of their effects is assumed, although this is not always the case in reality. Interaction between chemicals may result in an inhibition (antagonism), with a smaller effect than that expected from addition of the effects of the individual chemicals (1+1 < 2). Alternatively, a combination of chemicals may produce a more pronounced effect than would be expected by addition (increased response among individuals or an increase in frequency of response in a population); this is called synergism (1+1 > 2).
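The three cases can be expressed as a simple comparison between the observed combined effect and the sum of the individual effects. This is only a sketch: the function name and the 5% tolerance used to decide what counts as "equal" are assumptions, and in practice the comparison would rest on a statistical analysis of experimental data.

```python
import math


def classify_interaction(effect_a, effect_b, observed_combined, rel_tol=0.05):
    """Compare an observed combined effect with simple addition.

    rel_tol is a hypothetical tolerance within which the observed
    effect is treated as equal to the expected (additive) effect.
    """
    expected = effect_a + effect_b
    if math.isclose(observed_combined, expected, rel_tol=rel_tol):
        return "additive"
    if observed_combined < expected:
        return "antagonism"
    return "synergism"


if __name__ == "__main__":
    print(classify_interaction(1, 1, 2.0))   # 1+1 = 2
    print(classify_interaction(1, 1, 1.2))   # 1+1 < 2
    print(classify_interaction(1, 1, 3.5))   # 1+1 > 2
```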

Latency time is the time between first exposure and the appearance of a detectable effect or response. The term is often used for carcinogenic effects, where tumours may appear a long time after the start of exposure and sometimes long after the cessation of exposure.

A dose threshold is a dose level below which no observable effect occurs. Thresholds are thought to exist for certain effects, such as acute toxic effects, but not for others, such as carcinogenic effects (by DNA-adduct-forming initiators). The mere absence of a response in a given population should not, however, be taken as evidence for the existence of a threshold. Absence of response could be due to simple statistical phenomena: an adverse effect occurring at low frequency may not be detectable in a small population.
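The statistical point can be made concrete with a short calculation. Assuming that each individual is affected independently with the same probability (a simplifying assumption, as are the function name and the numbers), the chance of observing no cases at all in a group of n individuals is (1 − p)ⁿ.

```python
def prob_no_cases(true_frequency, population_size):
    """Probability of seeing zero affected individuals.

    Assumes independent individuals, each affected with probability
    true_frequency (a simple binomial model).
    """
    if not 0.0 <= true_frequency <= 1.0:
        raise ValueError("true_frequency must lie between 0 and 1")
    return (1.0 - true_frequency) ** population_size


if __name__ == "__main__":
    # Hypothetical effect that truly occurs in 1% of exposed individuals.
    for n in (50, 300, 1000):
        print(f"n={n:>4}: P(no cases) = {prob_no_cases(0.01, n):.4f}")
```

With a true frequency of 1%, a group of 50 individuals would show no cases at all in roughly six out of ten studies, while in a group of 1,000 a complete absence of cases would be very unlikely.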

LD50 (lethal dose) is the dose causing 50% lethality in an animal population. The LD50 is often given in older literature as a measure of the acute toxicity of chemicals. The higher the LD50, the lower the acute toxicity. A highly toxic chemical (with a low LD50) is said to be potent. There is no necessary correlation between acute and chronic toxicity. ED50 (effective dose) is the dose causing a specific effect other than lethality in 50% of the animals.

NOEL (NOAEL) means the no observed (adverse) effect level, or the highest dose that does not cause a toxic effect. Establishing a NOEL requires multiple doses, a large population and additional information to make sure that absence of a response is not merely a statistical phenomenon. LOEL is the lowest observed effect level, that is, the lowest dose on a dose-response curve that causes an effect.

A safety factor is a formal, arbitrary number by which one divides the NOEL or LOEL derived from animal experiments to obtain a tentative permissible dose for humans. This is often used in the area of food toxicology, but may also be used in occupational toxicology. A safety factor may also be used for extrapolation of data from small populations to larger populations. Safety factors range from 10⁰ to 10³ (that is, from 1 to 1,000). A safety factor of two may typically be sufficient to protect from a less serious effect (such as irritation) and a factor as large as 1,000 may be used for very serious effects (such as cancer). The term safety factor could be better replaced by the term protection factor or, even, uncertainty factor. The use of the latter term reflects scientific uncertainties, such as whether exact dose-response data can be translated from animals to humans for the particular chemical, toxic effect or exposure situation.
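The arithmetic is a single division, sketched below. The function name and the NOEL value are hypothetical; the safety factors shown are simply the illustrative extremes mentioned above, not recommendations for any substance.

```python
def tentative_permissible_dose(noel_mg_per_kg, safety_factor):
    """Divide an animal-derived NOEL (or LOEL) by a safety factor."""
    if safety_factor < 1:
        raise ValueError("safety factor is expected to be at least 1")
    return noel_mg_per_kg / safety_factor


if __name__ == "__main__":
    noel = 50.0  # hypothetical NOEL from an animal study, mg/kg body weight
    for factor in (2, 100, 1000):
        dose = tentative_permissible_dose(noel, factor)
        print(f"safety factor {factor:>4}: {dose} mg/kg body weight")
```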

Extrapolations are theoretical qualitative or quantitative estimates of toxicity (risk extrapolations) derived from translation of data from one species to another or from one set of dose-response data (typically in the high dose range) to regions of dose-response where no data exist. Extrapolations usually must be made to predict toxic responses outside the observation range. Mathematical modelling is used for extrapolations based upon an understanding of the behaviour of the chemical in the organism (toxicokinetic modelling) or based upon the understanding of statistical probabilities that specific biological events will occur (biologically or mechanistically based models). Some national agencies have developed sophisticated extrapolation models as a formalized method to predict risks for regulatory purposes. (See discussion of risk assessment later in the chapter.)

Systemic effects are toxic effects in tissues distant from the route of absorption.

Target organ is the primary or most sensitive organ affected after exposure. The same chemical may affect different target organs depending on the route of exposure, dose, dose rate, sex and species. Interactions between chemicals, or between chemicals and other factors, may also change which target organ is affected.

Acute effects occur after limited exposure and shortly (hours, days) after exposure and may be reversible or irreversible.

Chronic effects occur after prolonged exposure (months, years, decades) and/or persist after exposure has ceased.

Acute exposure is an exposure of short duration, while chronic exposure is long-term (sometimes life-long) exposure.

Tolerance to a chemical may occur when repeated exposures result in a lower response than would have been expected without pretreatment.

Uptake and Disposition

Transport processes

Diffusion. In order to enter the organism and reach a site where damage is produced, a foreign substance has to pass several barriers, including cells and their membranes. Most toxic substances pass through membranes passively by diffusion. This may occur for small water-soluble molecules by passage through aqueous channels or, for fat-soluble ones, by dissolution into and diffusion through the lipid part of the membrane. Ethanol, a small molecule that is both water and fat soluble, diffuses rapidly through cell membranes.

Diffusion of weak acids and bases. Weak acids and bases may readily pass membranes in their non-ionized, fat-soluble form while ionized forms are too polar to pass. The degree of ionization of these substances depends on pH. If a pH gradient exists across a membrane they will therefore accumulate on one side. The urinary excretion of weak acids and bases is highly dependent on urinary pH. Foetal or embryonic pH is somewhat higher than maternal pH, causing a slight accumulation of weak acids in the foetus or embryo.
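The dependence of ionization on pH is commonly described by the Henderson-Hasselbalch relationship, which the text does not name explicitly. The sketch below uses it to estimate the non-ionized fraction and the resulting accumulation across a membrane; it assumes that only the non-ionized form crosses and that this form reaches the same concentration on both sides. The function names, pKa values and pH values are hypothetical.

```python
def nonionized_fraction(ph, pka, kind):
    """Fraction of a weak acid or base present in non-ionized form."""
    if kind == "acid":
        return 1.0 / (1.0 + 10 ** (ph - pka))
    if kind == "base":
        return 1.0 / (1.0 + 10 ** (pka - ph))
    raise ValueError("kind must be 'acid' or 'base'")


def accumulation_ratio(ph_side_1, ph_side_2, pka, kind):
    """Total concentration on side 1 divided by that on side 2 at equilibrium.

    Assumes only the non-ionized form passes the membrane and that its
    concentration is equal on both sides.
    """
    return (nonionized_fraction(ph_side_2, pka, kind)
            / nonionized_fraction(ph_side_1, pka, kind))


if __name__ == "__main__":
    # Hypothetical weak acid (pKa 4) and weak base (pKa 9);
    # side 1 is slightly more alkaline (pH 7.4) than side 2 (pH 7.3).
    print(accumulation_ratio(7.4, 7.3, pka=4.0, kind="acid"))
    print(accumulation_ratio(7.4, 7.3, pka=9.0, kind="base"))
```

Under these assumptions a weak acid accumulates on the more alkaline side (ratio above 1) and a weak base on the more acidic side (ratio below 1).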

Facilitated diffusion. The passage of a substance may be facilitated by carriers in the membrane. Facilitated diffusion is similar to enzyme processes in that it is protein mediated, highly selective, and saturable. Other substances may inhibit the facilitated transport of xenobiotics.

Active transport. Some substances are actively transported across cell membranes. This transport is mediated by carrier proteins in a process analogous to that of enzymes. Active transport is similar to facilitated diffusion, but it may occur against a concentration gradient. It requires energy input and a metabolic inhibitor can block the process. Most environmental pollutants are not transported actively. One exception is the active tubular secretion and reabsorption of acid metabolites in the kidneys.

Phagocytosis is a process where specialized cells such as macrophages engulf particles for subsequent digestion. This transport process is important, for example, for the removal of particles in the alveoli.

Bulk flow. Substances are also transported in the body along with the movement of air in the respiratory system during breathing, and the movements of blood, lymph or urine.