Children categories

36. Barometric Pressure Increased (2)

36. Barometric Pressure Increased

Chapter Editor: T.J.R. Francis

Table of Contents

Working under Increased Barometric Pressure

Eric Kindwall

Dees F. Gorman

Tables

Click a link below to view table in article context.

1. Instructions for compressed-air workers

2. Decompression illness: Revised classification

37. Barometric Pressure Reduced (4)

37. Barometric Pressure Reduced

Chapter Editor: Walter Dümmer

Table of Contents

Figures and Tables

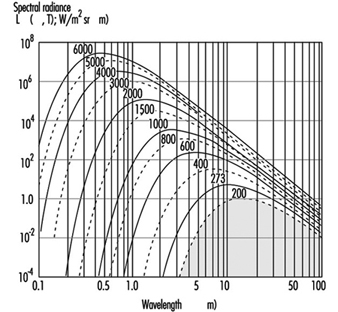

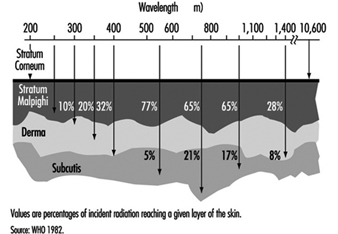

Ventilatory Acclimatization to High Altitude

John T. Reeves and John V. Weil

Physiological Effects of Reduced Barometric Pressure

Kenneth I. Berger and William N. Rom

Health Considerations for Managing Work at High Altitudes

John B. West

Prevention of Occupational Hazards at High Altitudes

Walter Dümmer

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

38. Biological Hazards (4)

38. Biological Hazards

Chapter Editor: Zuheir Ibrahim Fakhri

Table of Contents

Tables

Workplace Biohazards

Zuheir I. Fakhri

Aquatic Animals

D. Zannini

Terrestrial Venomous Animals

J.A. Rioux and B. Juminer

Clinical Features of Snakebite

David A. Warrell

Tables

Click a link below to view table in article context.

1. Occupational settings with biological agents

2. Viruses, bacteria, fungi & plants in the workplace

3. Animals as a source of occupational hazards

39. Disasters, Natural and Technological (12)

39. Disasters, Natural and Technological

Chapter Editor: Pier Alberto Bertazzi

Table of Contents

Tables and Figures

Disasters and Major Accidents

Pier Alberto Bertazzi

ILO Convention concerning the Prevention of Major Industrial Accidents, 1993 (No. 174)

Disaster Preparedness

Peter J. Baxter

Post-Disaster Activities

Benedetto Terracini and Ursula Ackermann-Liebrich

Weather-Related Problems

Jean French

Avalanches: Hazards and Protective Measures

Gustav Poinstingl

Transportation of Hazardous Material: Chemical and Radioactive

Donald M. Campbell

Radiation Accidents

Pierre Verger and Denis Winter

Case Study: What does dose mean?

Occupational Health and Safety Measures in Agricultural Areas Contaminated by Radionuclides: The Chernobyl Experience

Yuri Kundiev, Leonard Dobrovolsky and V.I. Chernyuk

Case Study: The Kader Toy Factory Fire

Casey Cavanaugh Grant

Impacts of Disasters: Lessons from a Medical Perspective

José Luis Zeballos

Tables

Click a link below to view table in article context.

1. Definitions of disaster types

2. 25-yr average # victims by type & region-natural trigger

3. 25-yr average # victims by type & region-non-natural trigger

4. 25-yr average # victims by type-natural trigger (1969-1993)

5. 25-yr average # victims by type-non-natural trigger (1969-1993)

6. Natural trigger from 1969 to 1993: Events over 25 years

7. Non-natural trigger from 1969 to 1993: Events over 25 years

8. Natural trigger: Number by global region & type in 1994

9. Non-natural trigger: Number by global region & type in 1994

10. Examples of industrial explosions

11. Examples of major fires

12. Examples of major toxic releases

13. Role of major hazard installations management in hazard control

14. Working methods for hazard assessment

15. EC Directive criteria for major hazard installations

16. Priority chemicals used in identifying major hazard installations

17. Weather-related occupational risks

18. Typical radionuclides, with their radioactive half-lives

19. Comparison of different nuclear accidents

20. Contamination in Ukraine, Byelorussia & Russia after Chernobyl

21. Contamination strontium-90 after the Khyshtym accident (Urals 1957)

22. Radioactive sources that involved the general public

23. Main accidents involving industrial irradiators

24. Oak Ridge (US) radiation accident registry (worldwide, 1944-88)

25. Pattern of occupational exposure to ionizing radiation worldwide

26. Deterministic effects: thresholds for selected organs

27. Patients with acute irradiation syndrome (AIS) after Chernobyl

28. Epidemiological cancer studies of high dose external irradiation

29. Thyroid cancers in children in Belarus, Ukraine & Russia, 1981-94

30. International scale of nuclear incidents

31. Generic protective measures for general population

32. Criteria for contamination zones

33. Major disasters in Latin America & the Caribbean, 1970-93

34. Losses due to six natural disasters

35. Hospitals & hospital beds damaged/ destroyed by 3 major disasters

36. Victims in 2 hospitals collapsed by the 1985 earthquake in Mexico

37. Hospital beds lost resulting from the March 1985 Chilean earthquake

38. Risk factors for earthquake damage to hospital infrastructure

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

Click to return to top of page

40. Electricity (3)

40. Electricity

Chapter Editor: Dominique Folliot

Table of Contents

Figures and Tables

Electricity—Physiological Effects

Dominique Folliot

Static Electricity

Claude Menguy

Prevention And Standards

Renzo Comini

Tables

Click a link below to view table in article context.

1. Estimates of the rate of electrocution-1988

2. Basic relationships in electrostatics-Collection of equations

3. Electron affinities of selected polymers

4. Typical lower flammability limits

5. Specific charge associated with selected industrial operations

6. Examples of equipment sensitive to electrostatic discharges

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

41. Fire (6)

41. Fire

Chapter Editor: Casey C. Grant

Table of Contents

Figures and Tables

Basic Concepts

Dougal Drysdale

Sources of Fire Hazards

Tamás Bánky

Fire Prevention Measures

Peter F. Johnson

Passive Fire Protection Measures

Yngve Anderberg

Active Fire Protection Measures

Gary Taylor

Organizing for Fire Protection

S. Dheri

Tables

Click a link below to view table in article context.

1. Lower & upper flammability limits in air

2. Flashpoints & firepoints of liquid & solid fuels

3. Ignition sources

4. Comparison of concentrations of different gases required for inerting

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

42. Heat and Cold (12)

42. Heat and Cold

Chapter Editor: Jean-Jacques Vogt

Table of Contents

Figures and Tables

Physiological Responses to the Thermal Environment

W. Larry Kenney

Effects of Heat Stress and Work in the Heat

Bodil Nielsen

Heat Disorders

Tokuo Ogawa

Prevention of Heat Stress

Sarah A. Nunneley

The Physical Basis of Work in Heat

Jacques Malchaire

Assessment of Heat Stress and Heat Stress Indices

Kenneth C. Parsons

Case Study: Heat Indices: Formulae and Definitions

Heat Exchange through Clothing

Wouter A. Lotens

Cold Environments and Cold Work

Ingvar Holmér, Per-Ola Granberg and Goran Dahlstrom

Prevention of Cold Stress in Extreme Outdoor Conditions

Jacques Bittel and Gustave Savourey

Cold Indices and Standards

Ingvar Holmér

Tables

Click a link below to view table in article context.

1. Electrolyte concentration in blood plasma & sweat

2. Heat Stress Index & Allowable Exposure Times: calculations

3. Interpretation of Heat Stress Index values

4. Reference values for criteria of thermal stress & strain

5. Model using heart rate to assess heat stress

6. WBGT reference values

7. Working practices for hot environments

8. Calculation of the SWreq index & assessment method: equations

9. Description of terms used in ISO 7933 (1989b)

10. WBGT values for four work phases

11. Basic data for the analytical assessment using ISO 7933

12. Analytical assessment using ISO 7933

13. Air temperatures of various cold occupational environments

14. Duration of uncompensated cold stress & associated reactions

15. Indication of anticipated effects of mild & severe cold exposure

16. Body tissue temperature & human physical performance

17. Human responses to cooling: Indicative reactions to hypothermia

18. Health recommendations for personnel exposed to cold stress

19. Conditioning programmes for workers exposed to cold

20. Prevention & alleviation of cold stress: strategies

21. Strategies & measures related to specific factors & equipment

22. General adaptational mechanisms to cold

23. Number of days when water temperature is below 15 ºC

24. Air temperatures of various cold occupational environments

25. Schematic classification of cold work

26. Classification of levels of metabolic rate

27. Examples of basic insulation values of clothing

28. Classification of thermal resistance to cooling of handwear

29. Classification of contact thermal resistance of handwear

30. Wind Chill Index, temperature & freezing time of exposed flesh

31. Cooling power of wind on exposed flesh

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

43. Hours of Work (1)

43. Hours of Work

Chapter Editor: Peter Knauth

Table of Contents

Hours of Work

Peter Knauth

Tables

Click a link below to view table in article context.

1. Time intervals from beginning shiftwork until three illnesses

2. Shiftwork & incidence of cardiovascular disorders

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

44. Indoor Air Quality (8)

44. Indoor Air Quality

Chapter Editor: Xavier Guardino Solá

Table of Contents

Figures and Tables

Indoor Air Quality: Introduction

Xavier Guardino Solá

Nature and Sources of Indoor Chemical Contaminants

Derrick Crump

Radon

María José Berenguer

Tobacco Smoke

Dietrich Hoffmann and Ernst L. Wynder

Smoking Regulations

Xavier Guardino Solá

Measuring and Assessing Chemical Pollutants

M. Gracia Rosell Farrás

Biological Contamination

Brian Flannigan

Regulations, Recommendations, Guidelines and Standards

María José Berenguer

Tables

Click a link below to view table in article context.

1. Classification of indoor organic pollutants

2. Formaldehyde emission from a variety of materials

3. Ttl. volatile organic comp’ds concs, wall/floor coverings

4. Consumer prods & other sources of volatile organic comp’ds

5. Major types & concentrations in the urban United Kingdom

6. Field measurements of nitrogen oxides & carbon monoxide

7. Toxic & tumorigenic agents in cigarette sidestream smoke

8. Toxic & tumorigenic agents from tobacco smoke

9. Urinary cotinine in non-smokers

10. Methodology for taking samples

11. Detection methods for gases in indoor air

12. Methods used for the analysis of chemical pollutants

13. Lower detection limits for some gases

14. Types of fungus which can cause rhinitis and/or asthma

15. Micro-organisms and extrinsic allergic alveolitis

16. Micro-organisms in nonindustrial indoor air & dust

17. Standards of air quality established by the US EPA

18. WHO guidelines for non-cancer and non-odour annoyance

19. WHO guideline values based on sensory effects or annoyance

20. Reference values for radon of three organizations

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

45. Indoor Environmental Control (6)

45. Indoor Environmental Control

Chapter Editor: Juan Guasch Farrás

Table of Contents

Figures and Tables

Control of Indoor Environments: General Principles

A. Hernández Calleja

Indoor Air: Methods for Control and Cleaning

E. Adán Liébana and A. Hernández Calleja

Aims and Principles of General and Dilution Ventilation

Emilio Castejón

Ventilation Criteria for Nonindustrial Buildings

A. Hernández Calleja

Heating and Air-Conditioning Systems

F. Ramos Pérez and J. Guasch Farrás

Indoor Air: Ionization

E. Adán Liébana and J. Guasch Farrás

Tables

Click a link below to view table in article context.

1. Most common indoor pollutants & their sources

2. Basic requirements-dilution ventilation system

3. Control measures & their effects

4. Adjustments to working environment & effects

5. Effectiveness of filters (ASHRAE standard 52-76)

6. Reagents used as absorbents for contaminents

7. Levels of quality of indoor air

8. Contamination due to the occupants of a building

9. Degree of occupancy of different buildings

10. Contamination due to the building

11. Quality levels of outside air

12. Proposed norms for environmental factors

13. Temperatures of thermal comfort (based on Fanger)

14. Characteristics of ions

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

46. Lighting (3)

46. Lighting

Chapter Editor: Juan Guasch Farrás

Table of Contents

Figures and Tables

Types of Lamps and Lighting

Richard Forster

Conditions Required for Visual

Fernando Ramos Pérez and Ana Hernández Calleja

General Lighting Conditions

N. Alan Smith

Tables

Click a link below to view table in article context.

1. Improved output & wattage of some 1,500 mm fluorescent tube lamps

2. Typical lamp efficacies

3. International Lamp Coding System (ILCOS) for some lamp types

4. Common colours & shapes of incandescent lamps & ILCOS codes

5. Types of high-pressure sodium lamp

6. Colour contrasts

7. Reflection factors of different colours & materials

8. Recommended levels of maintained illuminance for locations/tasks

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

47. Noise (5)

47. Noise

Chapter Editor: Alice H. Suter

Table of Contents

Figures and Tables

The Nature and Effects of Noise

Alice H. Suter

Noise Measurement and Exposure Evaluation

Eduard I. Denisov and German A. Suvorov

Engineering Noise Control

Dennis P. Driscoll

Hearing Conservation Programmes

Larry H. Royster and Julia Doswell Royster

Standards and Regulations

Alice H. Suter

Tables

Click a link below to view table in article context.

1. Permissible exposure limits (PEL)for noise exposure, by nation

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

48. Radiation: Ionizing

Chapter Editor: Robert N. Cherry, Jr.

Table of Contents

Introduction

Robert N. Cherry, Jr.

Radiation Biology and Biological Effects

Arthur C. Upton

Sources of Ionizing Radiation

Robert N. Cherry, Jr.

Workplace Design for Radiation Safety

Gordon M. Lodde

Radiation Safety

Robert N. Cherry, Jr.

Planning for and Management of Radiation Accidents

Sydney W. Porter, Jr.

49. Radiation, Non-Ionizing (9)

49. Radiation, Non-Ionizing

Chapter Editor: Bengt Knave

Table of Contents

Tables and Figures

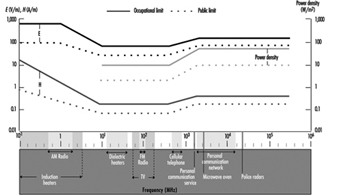

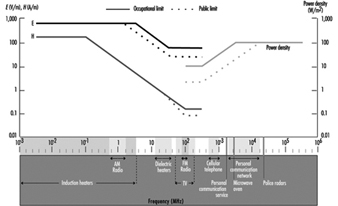

Electric and Magnetic Fields and Health Outcomes

Bengt Knave

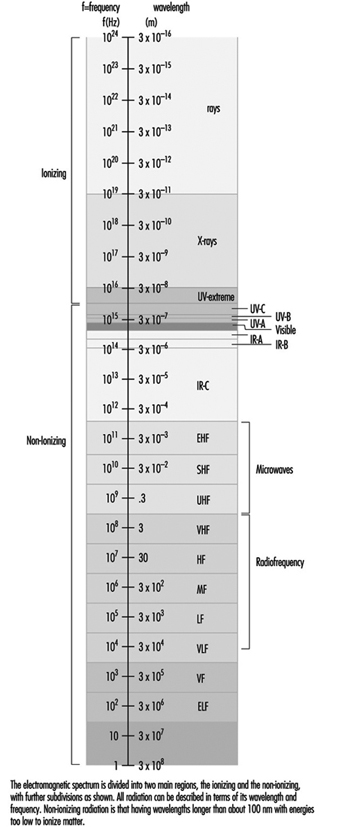

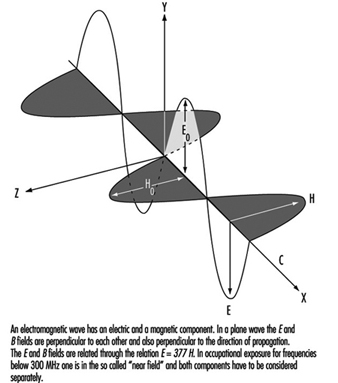

The Electromagnetic Spectrum: Basic Physical Characteristics

Kjell Hansson Mild

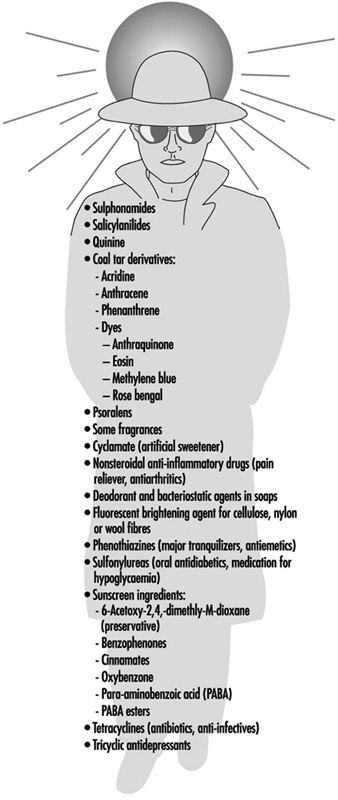

Ultraviolet Radiation

David H. Sliney

Infrared Radiation

R. Matthes

Light and Infrared Radiation

David H. Sliney

Lasers

David H. Sliney

Radiofrequency Fields and Microwaves

Kjell Hansson Mild

VLF and ELF Electric and Magnetic Fields

Michael H. Repacholi

Static Electric and Magnetic Fields

Martino Grandolfo

Tables

Click a link below to view table in article context.

1. Sources and exposures for IR

2. Retinal thermal hazard function

3. Exposure limits for typical lasers

4. Applications of equipment using range >0 to 30 kHz

5. Occupational sources of exposure to magnetic fields

6. Effects of currents passing through the human body

7. Biological effects of various current density ranges

8. Occupational exposure limits-electric/magnetic fields

9. Studies on animals exposed to static electric fields

10. Major technologies and large static magnetic fields

11. ICNIRP recommendations for static magnetic fields

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

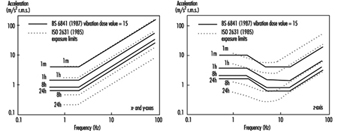

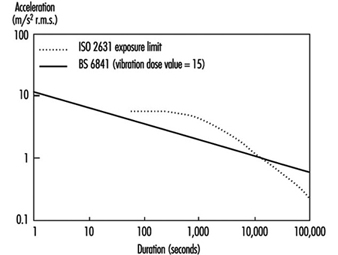

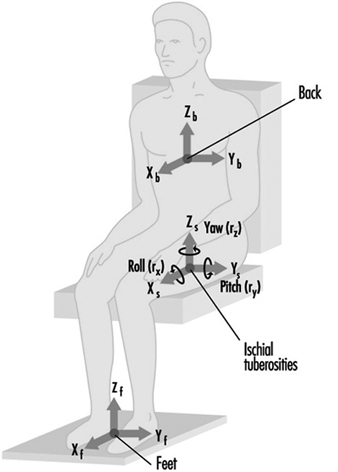

50. Vibration (4)

50. Vibration

Chapter Editor: Michael J. Griffin

Table of Contents

Table and Figures

Vibration

Michael J. Griffin

Whole-body Vibration

Helmut Seidel and Michael J. Griffin

Hand-transmitted Vibration

Massimo Bovenzi

Motion Sickness

Alan J. Benson

Tables

Click a link below to view table in article context.

1. Activities with adverse effects of whole-body vibration

2. Preventive measures for whole-body vibration

3. Hand-transmitted vibration exposures

4. Stages, Stockholm Workshop scale, hand-arm vibration syndrome

5. Raynaud’s phenomenon & hand-arm vibration syndrome

6. Threshold limit values for hand-transmitted vibration

7. European Union Council Directive: Hand-transmitted vibration (1994)

8. Vibration magnitudes for finger blanching

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

51. Violence (1)

51. Violence

Chapter Editor: Leon J. Warshaw

Table of Contents

Violence in the Workplace

Leon J. Warshaw

Tables

Click a link below to view table in article context.

1. Highest rates of occupational homicide, US workplaces, 1980-1989

2. Highest rates of occupational homicide US occupations, 1980-1989

3. Risk factors for workplace homicides

4. Guides for programmes to prevent workplace violence

52. Visual Display Units (11)

52. Visual Display Units

Chapter Editor: Diane Berthelette

Table of Contents

Tables and Figures

Overview

Diane Berthelette

Characteristics of Visual Display Workstations

Ahmet Çakir

Ocular and Visual Problems

Paule Rey and Jean-Jacques Meyer

Reproductive Hazards - Experimental Data

Ulf Bergqvist

Reproductive Effects - Human Evidence

Claire Infante-Rivard

Case Study: A Summary of Studies of Reproductive Outcomes

Musculoskeletal Disorders

Gabriele Bammer

Skin Problems

Mats Berg and Sture Lidén

Psychosocial Aspects of VDU Work

Michael J. Smith and Pascale Carayon

Ergonomic Aspects of Human - Computer Interaction

Jean-Marc Robert

Ergonomics Standards

Tom F.M. Stewart

Tables

Click a link below to view table in article context.

1. Distribution of computers in various regions

2. Frequency & importance of elements of equipment

3. Prevalence of ocular symptoms

4. Teratological studies with rats or mice

5. Teratological studies with rats or mice

6. VDU use as a factor in adverse pregnancy outcomes

7. Analyses to study causes musculoskeletal problems

8. Factors thought to cause musculoskeletal problems

Figures

Point to a thumbnail to see figure caption, click to see figure in article context.

Radiation Safety

This article describes aspects of radiation safety programmes. The objective of radiation safety is to eliminate or minimize harmful effects of ionizing radiation and radioactive material on workers, the public and the environment while allowing their beneficial uses.

Most radiation safety programmes will not have to implement every one of the elements described below. The design of a radiation safety programme depends on the types of ionizing radiation sources involved and how they are used.

Radiation Safety Principles

The International Commission on Radiological Protection (ICRP) has proposed that the following principles should guide the use of ionizing radiation and the application of radiation safety standards:

- No practice involving exposures to radiation should be adopted unless it produces sufficient benefit to the exposed individuals or to society to offset the radiation detriment it causes (the justification of a practice).

- In relation to any particular source within a practice, the magnitude of individual doses, the number of people exposed, and the likelihood of incurring exposures where these are not certain to be received should all be kept as low as reasonably achievable (ALARA), economic and social factors being taken into account. This procedure should be constrained by restrictions on the doses to individuals (dose constraints), so as to limit the inequity likely to result from the inherent economic and social judgements (the optimization of protection).

- The exposure of individuals resulting from the combination of all the relevant practices should be subject to dose limits, or to some control of risk in the case of potential exposures. These are aimed at ensuring that no individual is exposed to radiation risks that are judged to be unacceptable from these practices in any normal circumstances. Not all sources are susceptible of control by action at the source and it is necessary to specify the sources to be included as relevant before selecting a dose limit (individual dose and risk limits).

Radiation Safety Standards

Standards exist for radiation exposure of workers and the general public and for annual limits on intake (ALI) of radionuclides. Standards for concentrations of radionuclides in air and in water can be derived from the ALIs.
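Deriving an air concentration from an ALI is simple arithmetic. The sketch below assumes the ICRP reference-worker convention of 2,000 working hours per year at a breathing rate of 1.2 m³ per hour, so that breathing air at the derived air concentration (DAC) for a full working year delivers exactly one ALI. The function name and the ALI used in the example are hypothetical.

```python
# A minimal sketch: derived air concentration (DAC) from an inhalation ALI.
# Assumed reference-worker values: 2,000 working hours per year at a
# breathing rate of 1.2 m^3 per hour, i.e. 2,400 m^3 of air inhaled per year.
WORK_HOURS_PER_YEAR = 2000.0
BREATHING_RATE_M3_PER_HOUR = 1.2


def derived_air_concentration(ali_bq: float) -> float:
    """Return the DAC in Bq/m^3 for an inhalation ALI given in Bq.

    Breathing air at the DAC for a full working year delivers one ALI.
    """
    if ali_bq <= 0:
        raise ValueError("ALI must be positive")
    annual_air_volume_m3 = WORK_HOURS_PER_YEAR * BREATHING_RATE_M3_PER_HOUR
    return ali_bq / annual_air_volume_m3


# Hypothetical ALI, chosen only to illustrate the arithmetic.
print(derived_air_concentration(2.4e6))  # 1000.0
```

A derived water concentration would follow the same pattern, dividing an ingestion ALI by an assumed annual volume of water consumed instead of the inhaled air volume.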

The ICRP has published extensive tabulations of ALIs and derived air and water concentrations. A summary of its recommended dose limits is in table 1.

Table 1. Recommended dose limits of the International Commission on Radiological Protection¹

| Application | Dose limit: Occupational | Dose limit: Public |
|---|---|---|
| Effective dose | 20 mSv per year, averaged over defined periods of 5 years² | 1 mSv in a year³ |
| Annual equivalent dose in: | | |
| Lens of the eye | 150 mSv | 15 mSv |
| Skin⁴ | 500 mSv | 50 mSv |
| Hands and feet | 500 mSv | - |

¹ The limits apply to the sum of the relevant doses from external exposure in the specified period and the 50-year committed dose (to age 70 years for children) from intakes in the same period.

² With the further provision that the effective dose should not exceed 50 mSv in any single year. Additional restrictions apply to the occupational exposure of pregnant women.

³ In special circumstances, a higher value of effective dose could be allowed in a single year, provided that the average over 5 years does not exceed 1 mSv per year.

⁴ The limitation on the effective dose provides sufficient protection for the skin against stochastic effects. An additional limit is needed for localized exposures in order to prevent deterministic effects.
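Footnotes 1 and 2 of Table 1 combine into a two-part test of occupational effective dose: each year's dose is external dose plus committed dose from that year's intakes, no single year may exceed 50 mSv, and the average over the defined 5-year period may not exceed 20 mSv. The sketch below assumes annual doses are already in mSv and treats the averaging period as a fixed block of five years; the function names are illustrative, and the equivalent-dose limits for the lens, skin and extremities would need separate checks.

```python
from typing import Sequence

# Occupational effective-dose limits taken from Table 1 and its footnotes.
AVERAGE_LIMIT_MSV = 20.0      # per year, averaged over a defined 5-year period
SINGLE_YEAR_LIMIT_MSV = 50.0  # footnote 2: never more than this in any one year
PERIOD_YEARS = 5


def annual_effective_dose(external_msv: float, committed_msv: float) -> float:
    """Footnote 1: external dose plus committed dose from intakes in the same year."""
    return external_msv + committed_msv


def within_occupational_limits(annual_doses_msv: Sequence[float]) -> bool:
    """Check the annual effective doses (mSv) of one defined 5-year period."""
    if len(annual_doses_msv) != PERIOD_YEARS:
        raise ValueError("expected one effective dose per year of the period")
    if max(annual_doses_msv) > SINGLE_YEAR_LIMIT_MSV:
        return False
    # Comparing the sum with 5 x 20 mSv is equivalent to averaging.
    return sum(annual_doses_msv) <= AVERAGE_LIMIT_MSV * PERIOD_YEARS


# Hypothetical record: year 1 combines 12 mSv external and 3 mSv committed dose.
record = [annual_effective_dose(12.0, 3.0), 30.0, 18.0, 10.0, 22.0]
print(within_occupational_limits(record))  # True (95 mSv over 5 years)
```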

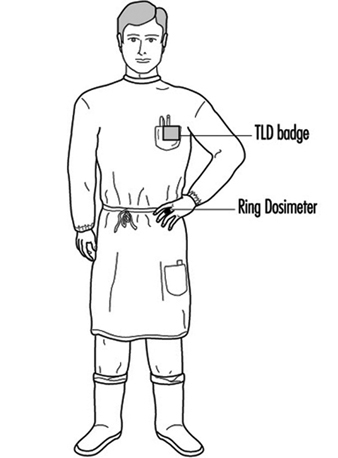

Dosimetry

Dosimetry is used to indicate dose equivalents that workers receive from external radiation fields to which they may be exposed. Dosimeters are characterized by the type of device, the type of radiation they measure and the portion of the body for which the absorbed dose is to be indicated.

Three types of dosimeter are most commonly employed: thermoluminescent dosimeters, film dosimeters and ionization chambers. Other types (not discussed here) include fission foils, track-etch devices and plastic “bubble” dosimeters.

Thermoluminescent dosimeters are the most commonly used type of personnel dosimeter. They exploit the fact that some materials, when they absorb energy from ionizing radiation, store it in a form that can later be recovered as light when the materials are heated. To a good approximation, the amount of light released is directly proportional to the energy absorbed from the ionizing radiation and hence to the absorbed dose the material received. This proportionality holds over a very wide range of radiation energies and absorbed dose rates.
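Because light output is proportional to absorbed dose, a thermoluminescent reading can be converted to dose with a single calibration factor obtained from chips irradiated to a known reference dose. The sketch below illustrates only that linear step; the counts are hypothetical, and the function names are illustrative. Real readers also apply individual chip sensitivity and energy corrections that are not shown here.

```python
# A minimal sketch of linear TLD calibration and readout.
# Assumption: the reader's light output (in arbitrary counts) is proportional
# to absorbed dose, so one calibration factor converts counts to dose.


def calibration_factor(reading_counts: float, background_counts: float,
                       known_dose_mgy: float) -> float:
    """Counts per mGy, from a chip irradiated to a known reference dose."""
    if known_dose_mgy <= 0:
        raise ValueError("reference dose must be positive")
    return (reading_counts - background_counts) / known_dose_mgy


def tld_dose_mgy(reading_counts: float, background_counts: float,
                 counts_per_mgy: float) -> float:
    """Absorbed dose (mGy) inferred from a field dosimeter's glow reading."""
    if counts_per_mgy <= 0:
        raise ValueError("calibration factor must be positive")
    net_counts = max(reading_counts - background_counts, 0.0)
    return net_counts / counts_per_mgy


# Hypothetical numbers: a reference chip given 2.0 mGy reads 20,200 counts,
# unirradiated chips read 200 counts, and a worker's chip reads 5,200 counts.
factor = calibration_factor(20_200, 200, 2.0)  # 10,000 counts per mGy
print(tld_dose_mgy(5_200, 200, factor))        # 0.5
```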

Special equipment is necessary to process thermoluminescent dosimeters accurately. Reading the thermoluminescent dosimeter destroys the dose information contained in it. However, after appropriate processing, thermoluminescent dosimeters are reusable.

The material used for thermoluminescent dosimeters must be transparent to the light it emits. The most common materials used for thermoluminescent dosimeters are lithium fluoride (LiF) and calcium fluoride (CaF₂). The materials may be doped with other materials or made with a specific isotopic composition for specialized purposes such as neutron dosimetry.

Many dosimeters contain several thermoluminescent chips with different filters in front of them to allow discrimination between energies and types of radiation.

Film was the most popular material for personnel dosimetry before thermoluminescent dosimetry became common. The degree of film darkening depends on the energy absorbed from the ionizing radiation, but the relationship is not linear. Dependence of film response on total absorbed dose, absorbed dose rate and radiation energy is greater than that for thermoluminescent dosimeters and can limit film’s range of applicability. However, film has the advantage of providing a permanent record of the absorbed dose to which it was exposed.
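Since film darkening is not linear in dose, a film reading is usually converted through a calibration curve rather than a single factor. The sketch below assumes piecewise-linear interpolation between films exposed to known reference doses and rejects readings outside the calibrated range instead of extrapolating; the calibration points are hypothetical and would in practice depend on film batch, radiation energy and processing.

```python
from bisect import bisect_left
from typing import Sequence

# A minimal sketch of reading film dose from a calibration curve by
# piecewise-linear interpolation between reference exposures.


def film_dose_mgy(net_density: float,
                  calib_density: Sequence[float],
                  calib_dose_mgy: Sequence[float]) -> float:
    """Interpolate dose (mGy) from net optical density.

    calib_density must be strictly increasing and pair one-to-one with
    calib_dose_mgy. Readings outside the calibrated range are rejected.
    """
    if len(calib_density) != len(calib_dose_mgy) or len(calib_density) < 2:
        raise ValueError("need at least two matched calibration points")
    if not calib_density[0] <= net_density <= calib_density[-1]:
        raise ValueError("density outside calibrated range")
    i = bisect_left(calib_density, net_density)
    if calib_density[i] == net_density:
        return calib_dose_mgy[i]
    d0, d1 = calib_density[i - 1], calib_density[i]
    y0, y1 = calib_dose_mgy[i - 1], calib_dose_mgy[i]
    return y0 + (y1 - y0) * (net_density - d0) / (d1 - d0)


# Hypothetical calibration for one film batch, energy and processing run.
DENSITY = [0.0, 0.2, 0.5, 0.9, 1.2]
DOSE_MGY = [0.0, 0.5, 2.0, 5.0, 10.0]
print(film_dose_mgy(0.35, DENSITY, DOSE_MGY))
```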

Various film formulations and filter arrangements may be used for special purposes, such as neutron dosimetry. As with thermoluminescent dosimeters, special equipment is needed for proper analysis.

Film is generally much more sensitive to ambient humidity and temperature than thermoluminescent materials, and can give falsely high readings under adverse conditions. On the other hand, dose equivalents indicated by thermoluminescent dosimeters may be affected by the shock of dropping them on a hard surface.

Only the largest organizations operate their own dosimetry services; most obtain such services from companies that specialize in providing them. It is important that such companies be licensed or accredited by appropriate independent authorities so that accurate dosimetry results are assured.

Small self-reading ionization chambers, also called pocket chambers, are used to obtain immediate dosimetry information. Their use is often required when personnel must enter high or very high radiation areas, where a large absorbed dose could be received in a short period of time. Pocket chambers are often calibrated locally, and they are very sensitive to shock. Consequently, they should always be supplemented by thermoluminescent or film dosimeters, which are more accurate and dependable but do not provide immediate results.

Dosimetry is required for a worker when he or she has a reasonable probability of accumulating a certain percentage, usually 5 or 10%, of the maximum permissible dose equivalent for the whole body or for certain parts of the body.
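The 5 or 10% rule can be applied region by region, which also indicates when extremity or eye dosimeters are worth issuing. The sketch below assumes the occupational limits in Table 1, treats the whole-body limit as the 20 mSv effective-dose limit, and uses 10% as a default threshold; the region names and the projected doses are hypothetical.

```python
from typing import List, Mapping

# Occupational limits (mSv per year) from Table 1; whole body = effective dose.
OCCUPATIONAL_LIMITS_MSV = {
    "whole_body": 20.0,
    "lens_of_eye": 150.0,
    "skin": 500.0,
    "hands_and_feet": 500.0,
}


def dosimeters_needed(projected_annual_msv: Mapping[str, float],
                      fraction: float = 0.10) -> List[str]:
    """Body regions whose projected annual dose reaches `fraction` of the limit.

    `fraction` stands in for the regulator's monitoring threshold
    (usually 5 or 10 %); 10 % is an assumed default here.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be between 0 and 1")
    return sorted(
        region
        for region, dose in projected_annual_msv.items()
        if dose >= fraction * OCCUPATIONAL_LIMITS_MSV[region]
    )


# Hypothetical projection for a worker who handles sources by hand.
projection = {"whole_body": 1.5, "hands_and_feet": 80.0}
print(dosimeters_needed(projection))                 # ['hands_and_feet']
print(dosimeters_needed(projection, fraction=0.05))  # ['hands_and_feet', 'whole_body']
```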

A whole-body dosimeter should be worn somewhere between the shoulders and the waist, at a point where the highest exposure is anticipated. When conditions of exposure warrant, other dosimeters may be worn on fingers or wrists, at the abdomen, on a band or hat at the forehead, or on a collar, to assess localized exposure to extremities, a foetus or embryo, the thyroid or the lenses of the eyes. Refer to appropriate regulatory guidelines about whether dosimeters should be worn inside or outside protective garments such as lead aprons, gloves and collars.

Personnel dosimeters indicate only the radiation to which the dosimeter was exposed. Assigning the dosimeter dose equivalent to the person or organs of the person is acceptable for small, trivial doses, but large dosimeter doses, especially those greatly exceeding regulatory standards, should be analysed carefully with respect to dosimeter placement and the actual radiation fields to which the worker was exposed when estimating the dose that the worker actually received. A statement should be obtained from the worker as part of the investigation and included in the record. However, much more often than not, very large dosimeter doses are the result of deliberate radiation exposure of the dosimeter while it was not being worn.

Bioassay

Bioassay (also called radiobioassay) means the determination of kinds, quantities or concentrations, and, in some cases, the locations of radioactive material in the human body, whether by direct measurement (in vivo counting) or by analysis and evaluation of materials excreted or removed from the human body.

Bioassay is usually used to assess worker dose equivalent due to radioactive material taken into the body. It also can provide an indication of the effectiveness of active measures taken to prevent such intake. More rarely it may be used to estimate the dose a worker received from a massive external radiation exposure (for example, by counting white blood cells or chromosomal defects).

Bioassay must be performed when a reasonable possibility exists that a worker may take or has taken into his or her body more than a certain percentage (usually 5 or 10%) of the ALI for a radionuclide. The chemical and physical form of the radionuclide sought in the body determines the type of bioassay necessary to detect it.

Bioassay can consist of analysing samples taken from the body (for example, urine, faeces, blood or hair) for radioactive isotopes. In this case, the amount of radioactivity in the sample can be related to the radioactivity in the person’s body and subsequently to the radiation dose that the person’s body or certain organs have received or are committed to receive. Urine bioassay for tritium is an example of this type of bioassay.
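Relating a sample result to an intake and a dose usually means dividing the measured activity by m(t), the fraction of a unit intake predicted to appear in that sample t days after intake, and then multiplying the intake by a committed effective dose coefficient. Both quantities come from biokinetic models for the specific radionuclide and chemical form; the values below are placeholders chosen for illustration, not published coefficients, and the function names are illustrative. The last function compares the intake with the ALI, as in the 5 or 10% criterion above.

```python
# A minimal sketch of turning a bioassay result into an intake and a dose.
# Assumptions: m(t) and the dose coefficient come from a biokinetic model for
# the radionuclide and chemical form; the numbers below are placeholders.


def estimate_intake_bq(sample_activity_bq: float, m_t: float) -> float:
    """Intake (Bq) = measured activity / predicted fraction m(t)."""
    if not 0 < m_t <= 1:
        raise ValueError("m(t) must be a fraction in (0, 1]")
    return sample_activity_bq / m_t


def committed_dose_msv(intake_bq: float, dose_coeff_sv_per_bq: float) -> float:
    """Committed effective dose (mSv) = intake x dose coefficient."""
    return intake_bq * dose_coeff_sv_per_bq * 1000.0


def fraction_of_ali(intake_bq: float, ali_bq: float) -> float:
    """Intake as a fraction of the ALI, for comparison with a 5 or 10 % trigger."""
    if ali_bq <= 0:
        raise ValueError("ALI must be positive")
    return intake_bq / ali_bq


# Placeholder example: 500 Bq in a 24-hour urine sample, m(t) = 0.01.
intake = estimate_intake_bq(500.0, 0.01)    # about 50,000 Bq
print(committed_dose_msv(intake, 2.0e-11))  # about 0.001 mSv
print(fraction_of_ali(intake, 1.0e9))       # well below a 5 % trigger
```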

Whole-body or partial-body scanning can be used to detect radionuclides that emit x or gamma rays energetic enough to be detected outside the body. Thyroid bioassay for iodine-131 (¹³¹I) is an example of this type of bioassay.

Bioassay can be performed in-house, or samples or personnel can be sent to a facility or organization that specializes in the bioassay to be performed. In either case, proper calibration of equipment and accreditation of laboratory procedures are essential to ensure accurate, precise and defensible bioassay results.

Protective Clothing

Protective clothing is supplied by the employer to the worker to reduce the possibility of radioactive contamination of the worker or his or her clothing or to partially shield the worker from beta, x, or gamma radiation. Examples of the former are anti-contamination clothing, gloves, hoods and boots. Examples of the latter are leaded aprons, gloves and eyeglasses.

Respiratory Protection

A respiratory protection device is an apparatus, such as a respirator, used to reduce a worker’s intake of airborne radioactive materials.

Employers must use, to the extent practical, process or other engineering controls (for example, containment or ventilation) to limit the concentrations of radioactive materials in air. When such controls cannot keep the concentrations of radioactive material in air below the values that define an airborne radioactivity area, the employer, consistent with maintaining the total effective dose equivalent ALARA, must increase monitoring and limit intakes by one or more of the following means:

- control of access

- limitation of exposure times

- use of respiratory protection equipment

- other controls.
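Limiting exposure time and using respirators can both be expressed in DAC-hours. The sketch below assumes the reference-worker convention in which 2,000 DAC-hours correspond to one ALI, and models a respirator as dividing the inhaled concentration by an assigned protection factor; the protection factor, the 40 DAC-hour administrative allowance and the function names are hypothetical.

```python
import math

# A minimal sketch of limiting intake by exposure time and respirator use.
# Assumptions: 2,000 DAC-hours correspond to one ALI, and a respirator divides
# the inhaled concentration by a hypothetical assigned protection factor.
DAC_HOURS_PER_ALI = 2000.0


def dac_hours_per_hour(concentration_bq_m3: float, dac_bq_m3: float,
                       protection_factor: float = 1.0) -> float:
    """Exposure rate in DAC-hours per hour spent in the area."""
    if dac_bq_m3 <= 0 or protection_factor < 1:
        raise ValueError("DAC must be positive and protection factor >= 1")
    return concentration_bq_m3 / protection_factor / dac_bq_m3


def max_stay_hours(concentration_bq_m3: float, dac_bq_m3: float,
                   allowed_dac_hours: float, protection_factor: float = 1.0) -> float:
    """Longest stay that keeps exposure within an allowed number of DAC-hours."""
    rate = dac_hours_per_hour(concentration_bq_m3, dac_bq_m3, protection_factor)
    return math.inf if rate == 0 else allowed_dac_hours / rate


def fraction_of_ali(dac_hours: float) -> float:
    """Intake, as a fraction of one ALI, implied by an exposure in DAC-hours."""
    return dac_hours / DAC_HOURS_PER_ALI


# Air at 5,000 Bq/m^3 where the DAC is 1,000 Bq/m^3, with a 40 DAC-hour allowance.
print(max_stay_hours(5000.0, 1000.0, 40.0))                          # 8.0 (no respirator)
print(max_stay_hours(5000.0, 1000.0, 40.0, protection_factor=50.0))  # about 400 hours
```

Note that the protection factor only reduces what one wearer inhales, whereas engineering controls and access control reduce the concentration or the number of people exposed.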

Respiratory protection equipment issued to workers must comply with applicable national standards for such equipment.

The employer must implement and maintain a respiratory protection programme that includes:

- air sampling sufficient to identify the potential hazard, permit proper equipment selection and estimate exposures

- surveys and bioassays, as appropriate, to evaluate actual intakes

- testing of respirators for operability immediately prior to each use

- written procedures regarding selection, fitting, issuance, maintenance and testing of respirators, including testing for operability immediately prior to each use; supervision and training of personnel; monitoring, including air sampling and bioassays; and record-keeping

- determination by a physician prior to the initial fitting of respirators, and periodically at a frequency determined by a physician, that the individual user is medically fit to use the respiratory protection equipment.

The employer must advise each respirator user that the user may leave the work area at any time for relief from respirator use in the event of equipment malfunction, physical or psychological distress, procedural or communication failure, significant deterioration of operating conditions, or any other conditions that might require such relief.

Even though circumstances may not require routine use of respirators, credible emergency conditions may mandate their availability. In such cases, the respirators also must be certified for such use by an appropriate accrediting organization and maintained in a condition ready for use.

Occupational Health Surveillance

Workers exposed to ionizing radiation should receive occupational health services to the same extent as workers exposed to other occupational hazards.

General preplacement examinations assess the overall health of the prospective employee and establish baseline data. Previous medical and exposure history should always be obtained. Specialized examinations, such as examination of the lens of the eye and blood cell counts, may be necessary depending on the nature of the expected radiation exposure; the choice should be left to the discretion of the attending physician.

Contamination Surveys

A contamination survey is an evaluation of the radiological conditions incident to the production, use, release, disposal or presence of radioactive materials or other sources of radiation. When appropriate, such an evaluation includes a physical survey of the location of radioactive material and measurements or calculations of levels of radiation, or concentrations or quantities of radioactive material present.

Contamination surveys are performed to demonstrate compliance with national regulations and to evaluate the extent of radiation levels, concentrations or quantities of radioactive material, and the potential radiological hazards that could be present.

The frequency of contamination surveys is determined by the degree of potential hazard present. Weekly surveys should be performed in radioactive waste storage areas and in laboratories and clinics where relatively large amounts of unsealed radioactive sources are used. Monthly surveys suffice for laboratories that work with small amounts of radioactive sources, such as laboratories that perform in vitro testing using isotopes such as tritium, carbon-14 (¹⁴C) and iodine-125 (¹²⁵I) with activities less than a few kBq.

Radiation safety equipment and survey meters must be appropriate for the types of radioactive material and radiations involved, and must be properly calibrated.

Contamination surveys consist of measurements of ambient radiation levels with a Geiger-Mueller (G-M) counter, ionization chamber or scintillation counter; measurements of possible α or βγ surface contamination with appropriate thin-window G-M or zinc sulphide (ZnS) scintillation counters; and wipe tests of surfaces, to be counted later in a sodium iodide (NaI) scintillation well counter, a germanium (Ge) counter or a liquid scintillation counter, as appropriate.

Appropriate action levels must be established for ambient radiation and contamination measurement results. When an action level is exceeded, steps must be taken immediately to mitigate the detected levels, restore them to acceptable conditions and prevent unnecessary personnel exposure to radiation and the uptake and spread of radioactive material.
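Comparing each survey result against its action level is simple bookkeeping, but it helps to make the check explicit so that no measurement is silently skipped. The sketch below assumes hypothetical quantity labels and action-level values (2.5 μSv/h ambient, 20 Bq per 100 cm² on wipes); real values must come from the organization's radiation safety programme and its regulatory authority.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SurveyResult:
    location: str
    quantity: str  # key into the action-level table (hypothetical labels)
    value: float   # measured value, in the units implied by the key


# Hypothetical action levels for illustration only; real values are set by
# the radiation safety programme and the regulatory authority.
ACTION_LEVELS: Dict[str, float] = {
    "ambient_uSv_per_h": 2.5,
    "wipe_Bq_per_100cm2": 20.0,
}


def exceedances(results: List[SurveyResult],
                action_levels: Dict[str, float]) -> List[SurveyResult]:
    """Return every result whose value exceeds the action level for its quantity."""
    flagged = []
    for result in results:
        if result.quantity not in action_levels:
            # Refuse to pass a measurement that has no action level defined.
            raise KeyError(f"no action level defined for {result.quantity!r}")
        if result.value > action_levels[result.quantity]:
            flagged.append(result)
    return flagged


results = [
    SurveyResult("bench 1 wipe", "wipe_Bq_per_100cm2", 35.0),
    SurveyResult("corridor", "ambient_uSv_per_h", 0.3),
]
for r in exceedances(results, ACTION_LEVELS):
    print(f"ACTION LEVEL EXCEEDED at {r.location}: {r.value}")
```

Raising an error for an unknown quantity, rather than ignoring it, reflects the requirement that action levels be established for every type of measurement result.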

Environmental Monitoring

Environmental monitoring refers to collecting and measuring environmental samples for radioactive materials and monitoring areas outside the environs of the workplace for radiation levels. Purposes of environmental monitoring include estimating consequences to humans resulting from the release of radionuclides to the biosphere, detecting releases of radioactive material to the environment before they become serious and demonstrating compliance with regulations.

A complete description of environmental monitoring techniques is beyond the scope of this article. However, general principles will be discussed.

Environmental samples must be taken that monitor the most likely pathway for radionuclides from the environment to man. For example, soil, water, grass and milk samples in agricultural regions around a nuclear power plant should be taken routinely and analysed for iodine-131 (¹³¹I) and strontium-90 (⁹⁰Sr) content.

Environmental monitoring can include taking samples of air, ground water, surface water, soil, foliage, fish, milk, game animals and so on. The choices of which samples to take and how often to take them should be based on the purposes of the monitoring, although a small number of random samples may sometimes identify a previously unknown problem.

The first step in designing an environmental monitoring programme is to characterize the radionuclides being released or having the potential for being accidentally released, with respect to type and quantity and physical and chemical form.

The possibility of transport of these radionuclides through the air, ground water and surface water is the next consideration. The objective is to predict the concentrations of radionuclides reaching humans directly through air and water or indirectly through food.

The bioaccumulation of radionuclides resulting from deposition in aquatic and terrestrial environments is the next item of concern. The goal is to predict the concentration of radionuclides once they enter the food chain.

Finally, the rate of human consumption of these potentially contaminated foodstuffs and how this consumption contributes to human radiation dose and resultant health risk are examined. The results of this analysis are used to determine the best approach to environmental sampling and to ensure that the goals of the environmental monitoring programme are met.
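The last step combines predicted concentrations in foodstuffs with consumption rates and dose coefficients. A minimal sketch, assuming the usual product of concentration (Bq/kg or Bq/L), annual consumption (kg or L per year) and an ingestion dose coefficient (Sv/Bq), shows which pathway dominates and therefore which samples deserve the most attention. The pathway names and all numbers are placeholders, not measured data or official coefficients; real coefficients must be taken from the applicable ICRP tables.

```python
from typing import Dict, Tuple

# (concentration in Bq per kg or per litre,
#  annual consumption in kg or litres per year,
#  ingestion dose coefficient in Sv per Bq)
Pathway = Tuple[float, float, float]


def ingestion_dose_by_pathway(pathways: Dict[str, Pathway]) -> Dict[str, float]:
    """Committed effective dose (Sv) from one year of intake, per food pathway."""
    doses = {}
    for name, (concentration, consumption, coefficient) in pathways.items():
        annual_intake_bq = concentration * consumption  # Bq ingested per year
        doses[name] = annual_intake_bq * coefficient    # Sv
    return doses


# Placeholder numbers: not measured data and not official dose coefficients.
example = {
    "milk": (10.0, 200.0, 1e-8),
    "leafy vegetables": (1.0, 50.0, 1e-8),
}
doses = ingestion_dose_by_pathway(example)
total = sum(doses.values())
dominant = max(doses, key=doses.get)
print(f"total {total:.2e} Sv; dominant pathway: {dominant}")
```

A real assessment would also account for transport and bioaccumulation factors when predicting the concentrations themselves; the sketch covers only the final consumption step.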

Leak Tests of Sealed Sources

A sealed source means radioactive material that is encased in a capsule designed to prevent leakage or escape of the material. Such sources must be tested periodically to verify that the source is not leaking radioactive material.

Each sealed source must be tested for leakage before its first use unless the supplier has provided a certificate indicating that the source was tested within six months (three months for α emitters) before transfer to the present owner. Each sealed source must be tested for leakage at least once every six months (three months for α emitters) or at an interval specified by the regulatory authority.
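The scheduling rules above can be sketched as two small date calculations, assuming the default intervals of six months (three months for α emitters) and calendar-month arithmetic that clamps to the end of shorter months. The function names are hypothetical, and an interval specified by the regulatory authority can be passed in to override the default.

```python
import calendar
from datetime import date
from typing import Optional


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of a shorter month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def default_interval_months(alpha_emitter: bool) -> int:
    """Six months in general, three months for alpha emitters."""
    return 3 if alpha_emitter else 6


def next_leak_test_due(last_test: date, alpha_emitter: bool,
                       interval_months: Optional[int] = None) -> date:
    """Date by which the next periodic leak test must be done."""
    if interval_months is None:
        interval_months = default_interval_months(alpha_emitter)
    return add_months(last_test, interval_months)


def needs_test_before_first_use(certificate_test: Optional[date],
                                transfer: date, alpha_emitter: bool) -> bool:
    """True unless the supplier's test falls within the interval before transfer."""
    if certificate_test is None:
        return True
    limit = add_months(certificate_test, default_interval_months(alpha_emitter))
    return transfer > limit


print(next_leak_test_due(date(2024, 1, 31), alpha_emitter=True))
```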

Generally, leak tests on the following sources are not required:

- sources containing only radioactive material with a half-life of less than 30 days

- sources containing only radioactive material as a gas

- sources containing 4 MBq or less of βγ-emitting material or 0.4 MBq or less of α-emitting material

- sources stored and not being used; however, each such source must be tested for leakage before any use or transfer unless it has been leakage-tested within six months before the date of use or transfer

- seeds of iridium-192 (¹⁹²Ir) encased in nylon ribbon.
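The general exemptions listed above can be expressed as an ordered series of checks. This is a sketch under stated assumptions: the `SealedSource` record and its field names are hypothetical, each source is assumed to contain a single radionuclide, and the regulatory authority may apply different or additional criteria.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SealedSource:
    nuclide: str
    half_life_days: float
    activity_MBq: float
    alpha_emitter: bool
    gaseous_only: bool = False
    in_storage: bool = False                 # stored and not being used
    ir192_seeds_in_nylon_ribbon: bool = False


def leak_test_exemption(src: SealedSource) -> Optional[str]:
    """Return the reason a periodic leak test is generally not required, or None."""
    if src.half_life_days < 30:
        return "half-life less than 30 days"
    if src.gaseous_only:
        return "radioactive material only as a gas"
    limit_MBq = 0.4 if src.alpha_emitter else 4.0
    if src.activity_MBq <= limit_MBq:
        return f"activity at or below {limit_MBq} MBq"
    if src.in_storage:
        # Still must be tested before any use or transfer.
        return "stored and not in use (test before use or transfer)"
    if src.ir192_seeds_in_nylon_ribbon:
        return "Ir-192 seeds encased in nylon ribbon"
    return None


cs137 = SealedSource("Cs-137", 30.17 * 365.25, 370.0, alpha_emitter=False)
print(leak_test_exemption(cs137))  # None: periodic testing applies
```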

A leak test is performed either by taking a wipe sample from the sealed source, or from those surfaces of the device in which the source is mounted or stored on which radioactive contamination might be expected to accumulate, or by washing the source in a small volume of detergent solution and treating the entire volume as the sample.

The sample should be measured in a way that allows the leak test to detect as little as 200 Bq of radioactive material on the sample.
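Whether a given counting set-up is sensitive enough can be checked with a minimum detectable activity (MDA) estimate. The sketch below swaps in the widely used Currie expression, in which the detection limit in counts is 2.71 + 4.65√B for B background counts, assuming equal sample and background counting times and a counting efficiency expressed as counts per disintegration. The function names and example parameters are hypothetical.

```python
import math


def currie_mda_bq(background_counts: float, count_time_s: float,
                  efficiency: float) -> float:
    """Minimum detectable activity (Bq), Currie paired-blank formula.

    Assumes sample and background are counted for the same time, and
    efficiency is counts recorded per disintegration in the sample.
    """
    if count_time_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("count time must be positive and 0 < efficiency <= 1")
    detection_limit_counts = 2.71 + 4.65 * math.sqrt(background_counts)
    return detection_limit_counts / (efficiency * count_time_s)


def meets_leak_test_sensitivity(background_counts: float, count_time_s: float,
                                efficiency: float,
                                required_bq: float = 200.0) -> bool:
    """True if the set-up can detect the required activity on the wipe."""
    return currie_mda_bq(background_counts, count_time_s, efficiency) <= required_bq


# Hypothetical set-up: 100 background counts in 60 s at 10% efficiency.
print(round(currie_mda_bq(100, 60, 0.1), 2))
```

Counting longer or using a more efficient detector lowers the MDA, which is the practical lever when a set-up fails the 200 Bq criterion.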

Sealed radium sources require special leak test procedures to detect leaking radon (Rn) gas. For example, one procedure involves keeping the sealed source in a jar with cotton fibres for at least 24 hours. At the end of the period, the cotton fibres are analysed for the presence of Rn progeny.

A sealed source found to be leaking in excess of allowable limits must be removed from service. If the source is not repairable, it should be handled as radioactive waste. The regulatory authority may require that leaking sources be reported in case the leakage is a result of a manufacturing defect worthy of further investigation.

Inventory

Radiation safety personnel must maintain an up-to-date inventory of all radioactive material and other sources of ionizing radiation for which the employer is responsible. The organization’s procedures must ensure that radiation safety personnel are aware of the receipt, use, transfer and disposal of all such material and sources so that the inventory can be kept current. A physical inventory of all sealed sources should be done at least once every three months. The complete inventory of ionizing radiation sources should be verified during the annual audit of the radiation safety programme.
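The quarterly physical inventory amounts to reconciling the recorded inventory against what is actually found. A minimal sketch, assuming each source carries a unique identifier (the identifiers below are hypothetical), reports sources that are recorded but not found and sources found but not recorded; both lists call for investigation.

```python
from typing import Dict, Set


def reconcile_inventory(recorded: Set[str], found: Set[str]) -> Dict[str, Set[str]]:
    """Compare the inventory records with a physical inventory of sources."""
    return {
        "missing": recorded - found,     # on record but not located
        "unrecorded": found - recorded,  # located but not on record
    }


recorded = {"SRC-001", "SRC-002", "SRC-003"}
found = {"SRC-001", "SRC-003", "SRC-004"}
print(reconcile_inventory(recorded, found))
```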

Posting of Areas

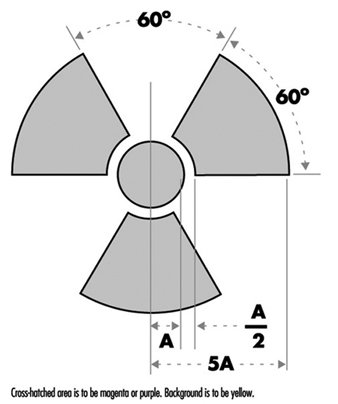

Figure 1 shows the international standard radiation symbol. This must appear prominently on all signs denoting areas controlled for the purposes of radiation safety and on container labels indicating the presence of radioactive materials.

Figure 1. Radiation symbol

Areas controlled for the purposes of radiation safety are often designated in terms of increasing dose rate levels. Such areas must be conspicuously posted with a sign or signs bearing the radiation symbol and the words “CAUTION, RADIATION AREA,” “CAUTION (or DANGER), HIGH RADIATION AREA,” or “GRAVE DANGER, VERY HIGH RADIATION AREA,” as appropriate.

- A radiation area is an area, accessible to personnel, in which radiation levels could result in an individual receiving a dose equivalent in excess of 0.05 mSv in 1 h at 30 cm from the radiation source or from any surface that the radiation penetrates.

- A high radiation area is an area, accessible to personnel, in which radiation levels could result in an individual receiving a dose equivalent in excess of 1 mSv in 1 h at 30 cm from the radiation source or from any surface that the radiation penetrates.

- A very high radiation area is an area, accessible to personnel, in which radiation levels could result in an individual receiving an absorbed dose in excess of 5 Gy in 1 h at 1 m from a radiation source or from any surface that the radiation penetrates.
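The three definitions can be turned into a posting decision. Note that the radiation area and high radiation area thresholds are dose equivalent rates at 30 cm, whereas the very high radiation area threshold is an absorbed dose rate at 1 m, so the sketch takes both values. The function and constant names are hypothetical, and "in excess of" is read as strictly greater than.

```python
from typing import Optional

RADIATION_AREA_mSv_per_h = 0.05      # dose equivalent rate at 30 cm
HIGH_RADIATION_AREA_mSv_per_h = 1.0  # dose equivalent rate at 30 cm
VERY_HIGH_AREA_Gy_per_h = 5.0        # absorbed dose rate at 1 m


def posting_for(dose_equiv_rate_30cm_mSv_h: float,
                absorbed_dose_rate_1m_Gy_h: float = 0.0) -> Optional[str]:
    """Return the sign wording for the most restrictive class that applies, or None."""
    if absorbed_dose_rate_1m_Gy_h > VERY_HIGH_AREA_Gy_per_h:
        return "GRAVE DANGER, VERY HIGH RADIATION AREA"
    if dose_equiv_rate_30cm_mSv_h > HIGH_RADIATION_AREA_mSv_per_h:
        return "CAUTION (or DANGER), HIGH RADIATION AREA"
    if dose_equiv_rate_30cm_mSv_h > RADIATION_AREA_mSv_per_h:
        return "CAUTION, RADIATION AREA"
    return None


print(posting_for(0.2))
```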

If an area or room contains a significant amount of radioactive material (as defined by the regulatory authority), the entrance to such area or room must be conspicuously posted with a sign bearing the radiation symbol and the words “CAUTION (or DANGER), RADIOACTIVE MATERIALS”.

An airborne radioactivity area is a room or area in which airborne radioactivity exceeds certain levels defined by the regulatory authority. Each airborne radioactivity area must be posted with a conspicuous sign or signs bearing the radiation symbol and the words “CAUTION, AIRBORNE RADIOACTIVITY AREA” or “DANGER, AIRBORNE RADIOACTIVITY AREA”.

Exceptions to these posting requirements may be granted for patients’ rooms in hospitals where such rooms are otherwise under adequate control. Areas or rooms in which sources of radiation are to be located for periods of eight hours or less, and which are otherwise constantly attended under adequate control by qualified personnel, need not be posted.

Access Control

The degree to which access to an area must be controlled is determined by the degree of the potential radiation hazard in the area.

Control of access to high radiation areas

Each entrance or access point to a high radiation area must have one or more of the following features:

- a control device that, upon entry into the area, causes the level of radiation to be reduced below that level at which an individual might receive a dose of 1 mSv in 1 h at 30 cm from the radiation source or from any surface that the radiation penetrates

- a control device that energizes a conspicuous visible or audible alarm signal so that the individual entering the high radiation area and the supervisor of the activity are made aware of the entry

- entryways that are locked, except during periods when access to the area is required, with positive control over each individual entry.

In place of the controls required for a high radiation area, continuous direct or electronic surveillance that is capable of preventing unauthorized entry may be substituted.

The controls must be established in a way that does not prevent individuals from leaving the high radiation area.

Control of access to very high radiation areas

In addition to the requirements for a high radiation area, additional measures must be instituted to ensure that an individual is not able to gain unauthorized or inadvertent access to areas in which radiation levels could be encountered at 5 Gy or more in 1 h at 1 m from a radiation source or any surface through which the radiation penetrates.

Markings on Containers and Equipment

Each container of radioactive material above an amount determined by the regulatory authority must bear a durable, clearly visible label bearing the radiation symbol and the words “CAUTION, RADIOACTIVE MATERIAL” or “DANGER, RADIOACTIVE MATERIAL”. The label must also provide sufficient information - such as the radionuclide(s) present, an estimate of the quantity of radioactivity, the date for which the activity is estimated, radiation levels, kinds of materials and mass enrichment - to permit individuals handling or using the containers, or working in the vicinity of the containers, to take precautions to avoid or minimize exposures.

Prior to removal or disposal of empty uncontaminated containers to unrestricted areas, the radioactive material label must be removed or defaced, or it must be clearly indicated that the container no longer contains radioactive materials.

Containers need not be labelled if:

- the containers are attended by an individual who takes the precautions necessary to prevent the exposure of individuals in excess of the regulatory limits

- containers, when they are in transport, are packaged and labelled in accordance with appropriate transportation regulations

- containers are accessible only to individuals authorized to handle or use them, or to work in the vicinity of the containers, if the contents are identified to these individuals by a readily available written record (examples of containers of this type are containers in locations such as water-filled canals, storage vaults or hot cells); the record must be retained as long as the containers are in use for the purpose indicated on the record; or

- the containers are installed in manufacturing or process equipment, such as reactor components, piping and tanks.

Warning Devices and Alarms

High radiation areas and very high radiation areas must be equipped with warning devices and alarms as discussed above. These devices and alarms can be visible or audible or both. Devices and alarms for systems such as particle accelerators should be automatically energized as part of the start-up procedure so that personnel will have time to vacate the area or turn off the system with a “scram” button before radiation is produced. “Scram” buttons (buttons in the controlled area that, when pressed, cause radiation levels to drop immediately to safe levels) must be easily accessible and prominently marked and displayed.

Monitor devices, such as continuous air monitors (CAMs), can be preset to emit audible and visible alarms or to turn off a system when certain action levels are exceeded.

Instrumentation

The employer must make available instrumentation appropriate for the degree and kinds of radiation and radioactive material present in the workplace. This instrumentation may be used to detect, monitor or measure the levels of radiation or radioactivity.

The instrumentation must be calibrated at appropriate intervals using accredited methods and calibration sources. The calibration sources should resemble as closely as possible the sources to be detected or measured.

Types of instrumentation include hand-held survey instruments, continuous air monitors, hand-and-feet portal monitors, liquid scintillation counters, detectors containing Ge or NaI crystals and so on.

Radioactive Material Transportation

The International Atomic Energy Agency (IAEA) has established regulations for the transportation of radioactive material. Most countries have adopted regulations compatible with IAEA radioactive shipment regulations.

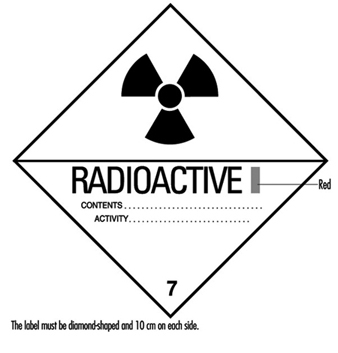

Figure 2. Category I - WHITE label

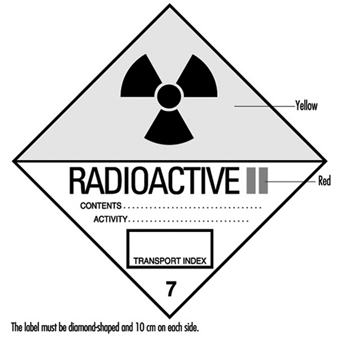

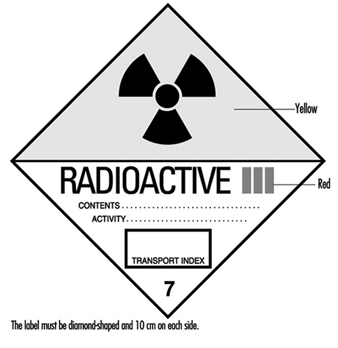

Figure 2, figure 3 and figure 4 are examples of shipping labels that IAEA regulations require on the exterior of packages containing radioactive materials that are presented for shipment. The transport index on the labels shown in figure 3 and figure 4 is the highest effective dose rate at 1 m from any surface of the package, in mSv/h, multiplied by 100 and then rounded up to the nearest tenth. (For example, if the highest effective dose rate at 1 m from any surface of a package is 0.0233 mSv/h, then the transport index is 2.4.)
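The transport index calculation is easy to get wrong with binary floating point, because rounding up can turn a value such as 2.4 into 2.5 when it is stored as 2.4000000000000004. The sketch below therefore uses Python's `decimal` module; the function name is hypothetical, and the input is the highest dose rate at 1 m in mSv/h as described above.

```python
from decimal import Decimal, ROUND_CEILING


def transport_index(max_dose_rate_1m_mSv_h) -> Decimal:
    """Highest dose rate at 1 m (mSv/h) x 100, rounded up to the nearest tenth."""
    rate = Decimal(str(max_dose_rate_1m_mSv_h))  # avoid binary float artefacts
    if rate < 0:
        raise ValueError("dose rate cannot be negative")
    return (rate * 100).quantize(Decimal("0.1"), rounding=ROUND_CEILING)


print(transport_index(0.0233))  # prints 2.4, matching the worked example
```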

Figure 3. Category II - YELLOW label

Figure 5 shows an example of a placard that ground vehicles must prominently display when carrying packages containing radioactive materials above certain amounts.

Figure 5. Vehicle placard

Packaging intended for use in shipping radioactive materials must comply with stringent testing and documentation requirements. The type and quantity of radioactive material being shipped determines what specifications the packaging must meet.

Radioactive material transportation regulations are complicated. Persons who do not routinely ship radioactive materials should always consult experts experienced with such shipments.

Radioactive Waste

Various radioactive waste disposal methods are available, but all are controlled by regulatory authorities. Therefore, an organization must always confer with its regulatory authority to ensure that a disposal method is permissible. Radioactive waste disposal methods include holding the material for radioactive decay and subsequent disposal without regard to radioactivity, incineration, disposal in the sanitary sewerage system, land burial and burial at sea. Burial at sea is often not permitted by national policy or international treaty and will not be discussed further.

Radioactive waste from reactor cores (high-level radioactive waste) presents special problems with regard to disposal. Handling and disposal of such wastes are controlled by national and international regulatory authorities.

Often radioactive waste may have a property other than radioactivity that by itself would make the waste hazardous. Such wastes are called mixed wastes. Examples include radioactive waste that is also a biohazard or is toxic. Mixed wastes require special handling. Refer to regulatory authorities for proper disposition of such wastes.

Holding for radioactive decay

If the half-life of the radioactive material is short (generally less than 65 days) and if the organization has enough storage space, the radioactive waste can be held for decay with subsequent disposal without regard to its radioactivity. A holding period of at least ten half-lives usually is sufficient to make radiation levels indistinguishable from background.
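The ten-half-lives rule follows from exponential decay: after n half-lives the remaining fraction is 2⁻ⁿ, so ten half-lives leave 2⁻¹⁰, or about 0.1%, of the initial activity. The sketch below, with hypothetical function names, computes the holding period and remaining fraction; the example uses ¹³¹I, whose half-life is about 8.02 days. The survey described next is still required before disposal, since the rule of thumb assumes the initial activity was modest.

```python
def remaining_fraction(elapsed_days: float, half_life_days: float) -> float:
    """Fraction of the initial activity left after elapsed_days of decay."""
    return 0.5 ** (elapsed_days / half_life_days)


def holding_period_days(half_life_days: float, n_half_lives: int = 10) -> float:
    """Minimum storage time suggested by the ten-half-lives rule of thumb."""
    return n_half_lives * half_life_days


def suitable_for_decay_in_storage(half_life_days: float,
                                  max_half_life_days: float = 65.0) -> bool:
    """Short-lived material only (generally less than 65 days)."""
    return half_life_days < max_half_life_days


I131_HALF_LIFE_DAYS = 8.02
hold = holding_period_days(I131_HALF_LIFE_DAYS)       # about 80 days
left = remaining_fraction(hold, I131_HALF_LIFE_DAYS)  # about 0.001 of the start
print(f"hold {hold:.1f} d, remaining fraction {left:.5f}")
```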

The waste must be surveyed before it may be disposed of. The survey should employ instrumentation appropriate for the radiation to be detected and demonstrate that radiation levels are indistinguishable from background.

Incineration

If the regulatory authority allows incineration, then usually it must be demonstrated that such incineration does not cause the concentration of radionuclides in air to exceed permissible levels. The ash must be surveyed periodically to verify that it is not radioactive. In some circumstances it may be necessary to monitor the stack to ensure that permissible air concentrations are not being exceeded.

Disposal in the sanitary sewerage system

If the regulatory authority allows such disposal, then usually it must be demonstrated that such disposal does not cause the concentration of radionuclides in water to exceed permissible levels. Material to be disposed of must be soluble or otherwise readily dispersible in water. The regulatory authority often sets specific annual limits to such disposal by radionuclide.

Land burial

Radioactive waste not disposable by any other means will be disposed of by land burial at sites licensed by national or local regulatory authorities. Regulatory authorities control such disposal tightly. Waste generators usually are not allowed to dispose of radioactive waste on their own land. Costs associated with land burial include packaging, shipping and storage expenses. These costs are in addition to the cost of the burial space itself and can often be reduced by compacting the waste. Land burial costs for radioactive waste disposal are rapidly escalating.

Programme Audits

Radiation safety programmes should be audited periodically for effectiveness, completeness and compliance with regulatory authority requirements. The audit should be comprehensive and done at least once a year. Self-audits are usually permissible, but audits by independent outside agencies are desirable. Outside agency audits tend to be more objective and have a more global point of view than local audits. An auditing agency not associated with the day-to-day operations of a radiation safety programme often can identify problems not seen by the local operators, who may have become accustomed to overlooking them.

Training

Employers must provide radiation safety training to all workers exposed or potentially exposed to ionizing radiation or radioactive materials. They must provide initial training before a worker begins work and annual refresher training. In addition, each female worker of child-bearing age must be provided special training and information about the effects of ionizing radiation on the unborn child and about appropriate precautions she should take. This special training must be given when she is first employed, at annual refresher training, and if she notifies her employer that she is pregnant.

All individuals working in or frequenting any portion of an area to which access is restricted for the purposes of radiation safety:

- must be kept informed of the storage, transfer or use of radioactive materials or of radiation in such portions of the restricted area

- must be instructed in the health protection problems associated with exposure to such radioactive materials or radiation, in precautions or procedures to minimize exposure, and in the purposes and functions of protective devices employed

- must be instructed in, and instructed to observe, to the extent within the worker’s control, the applicable provisions of national and employer regulations for the protection of personnel from exposures to radiation or radioactive materials occurring in such areas

- must be informed of their responsibility to report promptly to the employer any condition which may lead to or cause a violation of national or employer regulations or unnecessary exposure to radiation or to radioactive material

- must be instructed in the appropriate response to warnings made in the event of any unusual occurrence or malfunction that may involve exposure to radiation or radioactive material

- must be advised as to the radiation exposure reports that workers may request.

The extent of radiation safety instructions must be commensurate with potential radiological health protection problems in the controlled area. Instructions must be extended as appropriate to ancillary personnel, such as nurses who attend radioactive patients in hospitals and fire-fighters and police officers who might respond to emergencies.

Worker Qualifications

Employers must ensure that workers using ionizing radiation are qualified to perform the work for which they are employed. The workers must have the background and experience to perform their jobs safely, particularly with reference to exposure to and use of ionizing radiation and radioactive materials.

Radiation safety personnel must have the appropriate knowledge and qualifications to implement and operate a good radiation safety programme. Their knowledge and qualifications must be at least commensurate with the potential radiological health protection problems that they and the workers are reasonably likely to encounter.

Emergency Planning

All but the smallest operations that use ionizing radiation or radioactive materials must have emergency plans in place. These plans must be kept current and exercised on a periodic basis.

Emergency plans should address all credible emergency situations. The plans for a large nuclear power plant will be much more extensive and involve a much larger area and number of people than the plans for a small radioisotope laboratory.

All hospitals, especially in large metropolitan areas, should have plans for receiving and caring for radioactively contaminated patients. Police and fire-fighting organizations should have plans for dealing with transportation accidents involving radioactive material.

Record Keeping

The radiation safety activities of an organization must be fully documented and appropriately retained. Such records are essential if the need arises to reconstruct past radiation exposures or radioactivity releases, and for demonstrating compliance with regulatory authority requirements. Consistent, accurate and comprehensive record keeping must receive high priority.

Organizational Considerations

The position of the person primarily responsible for radiation safety must be placed in the organization so that he or she has immediate access to all echelons of workers and management. He or she must have free access to areas to which access is restricted for purposes of radiation safety and the authority to halt unsafe or illegal practices immediately.

Planning for and Management of Radiation Accidents

This article describes several significant radiation accidents, their causes and the responses to them. A review of the events leading up to, during and following these accidents can provide planners with information to preclude future occurrences of such accidents and to enhance an appropriate, rapid response in the event a similar accident occurs again.

Acute Radiation Death Resulting from an Accidental Nuclear Critical Excursion on 30 December 1958

This report is noteworthy because it involved the largest accidental dose of radiation received by humans (to date) and because of the extremely professional and thorough work-up of the case. It represents one of the best, if not the best, documented descriptions of the acute radiation syndrome that exist (JOM 1961).

At 4:35 p.m. on 30 December 1958, an accidental critical excursion resulting in fatal radiation injury to an employee (K) took place in the plutonium recovery plant at the Los Alamos National Laboratory (New Mexico, United States).

The time of the accident is important because six other workers had been in the same room with K thirty minutes earlier. The date of the accident is important because the normal flow of fissionable material into the system was interrupted for year-end physical inventory. This interruption caused a routine procedure to become non-routine and led to an accidental “criticality” of the plutonium-rich solids that were accidentally introduced into the system.

Summary of estimates of K’s radiation exposure

The best estimate of K’s average total-body exposure was between 39 and 49 Gy, of which about 9 Gy was due to fission neutrons. A considerably greater portion of the dose was delivered to the upper half of the body than to the lower half. Table 1 shows an estimate of K’s radiation exposure.

Table 1. Estimates of K’s radiation exposure

| Region and conditions | Fast neutron (Gy) | Gamma (Gy) | Total (Gy) |
|---|---|---|---|
| Head (incident) | 26 | 78 | 104 |
| Upper abdomen | 30 | 90 | 124 |
| Total body (average) | 9 | 30-40 | 39-49 |

Clinical course of patient

In retrospect, the clinical course of patient K can be divided into four distinct periods. These periods differed in duration, symptoms and response to supportive therapy.

The first period, lasting from 20 to 30 minutes, was characterized by his immediate physical collapse and mental incapacitation. His condition progressed to semi-consciousness and severe prostration.

The second period lasted about 1.5 hours and began with his arrival by stretcher at the emergency room of the hospital and ended with his transfer from the emergency room to the ward for further supportive therapy. This interval was characterized by such severe cardiovascular shock that death seemed imminent during the whole time. He seemed to be suffering severe abdominal pain.

The third period was about 28 hours long and was characterized by enough subjective improvement to encourage continued attempts to alleviate his anoxia, hypotension and circulatory failure.

The fourth period began with the unheralded onset of rapidly increasing irritability and antagonism, bordering on mania, followed by coma and death in approximately 2 hours. The entire clinical course lasted 35 hours from the time of radiation exposure to death.

The most dramatic clinicopathological changes were observed in the haemopoietic and urinary systems. Lymphocytes were not found in the circulating blood after the eighth hour, and there was virtually complete urinary shutdown despite administration of large amounts of fluids.

K’s rectal temperature varied between 39.4 and 39.7°C for the first 6 hours and then fell precipitously to normal, where it remained for the duration of his life. This high initial temperature and its maintenance for 6 hours were considered in keeping with his suspected massive dose of radiation. His prognosis was grave.

Of all the various determinations made during the course of the illness, changes in white cell count were found to be the simplest and best prognostic indicator of severe irradiation. The virtual disappearance of lymphocytes from the peripheral circulation within 6 hours of exposure was considered a grave sign.

Sixteen different therapeutic agents were employed in the symptomatic treatment of K over about a 30-hour period. In spite of this and continued oxygen administration, his heart tones became very distant, slow and irregular about 32 hours after irradiation. His heart then became progressively weaker and suddenly stopped 34 hours 45 minutes after irradiation.

Windscale Reactor No. 1 Accident of 9-12 October 1957

Windscale reactor No. 1 was an air-cooled, graphite-moderated, natural-uranium-fuelled plutonium production reactor. The core was partially ruined by a fire that began on 10 October 1957. This fire resulted in a release of approximately 0.74 PBq (1 PBq = 10¹⁵ Bq) of iodine-131 (¹³¹I) to the downwind environment.

According to a US Atomic Energy Commission accident information report about the Windscale incident, the accident was caused by operator errors of judgement concerning thermocouple data and was made worse by faulty handling of the reactor that allowed the graphite temperature to rise too rapidly. A further contributing factor was that the fuel temperature thermocouples were located in the parts of the reactor that were hottest during normal operations (that is, where the highest dose rates occurred), rather than in the parts that were hottest during an abnormal release. A second equipment deficiency was the reactor power meter, which was calibrated for normal operations and read low during the annealing. As a result of the second heating cycle, the graphite temperature rose on 9 October, especially in the lower front part of the reactor, where some cladding had failed because of the earlier rapid temperature rise. Although there were a number of small iodine releases on 9 October, they were not recognized until 10 October, when the stack activity meter showed a marked increase (which was not regarded as highly significant). Finally, on the afternoon of 10 October, monitoring at the Calder site indicated the release of radioactivity. Efforts to cool the reactor by forcing air through it not only failed but actually increased the amount of radioactivity released.

The estimated releases from the Windscale accident were 0.74 PBq of ¹³¹I, 0.22 PBq of caesium-137 (¹³⁷Cs), 3.0 TBq (1 TBq = 10¹² Bq) of strontium-89 (⁸⁹Sr) and 0.33 TBq of strontium-90 (⁹⁰Sr). The highest offsite gamma absorbed dose rate, due to airborne activity, was about 35 μGy/h. Air activity readings around the Windscale and Calder plants were often 5 to 10 times the maximum permissible levels, with occasional peaks of 150 times those levels. A milk ban extended over a radius of approximately 420 km.
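Release figures in TBq and PBq are easier to compare with older literature, which reports activity in curies, once they are converted to a common unit. The sketch below only restates the release estimates given above; the conversion factors are exact by definition, and the function and variable names are illustrative.

```python
# Unit conversions for activity (exact by definition):
# 1 TBq = 1e12 Bq, 1 PBq = 1e15 Bq, 1 Ci = 3.7e10 Bq.
BQ_PER_CI = 3.7e10
BQ_PER_UNIT = {"TBq": 1e12, "PBq": 1e15}

# Windscale release estimates as quoted in the text: (nuclide, amount, unit).
WINDSCALE_RELEASES = [
    ("I-131", 0.74, "PBq"),
    ("Cs-137", 0.22, "PBq"),
    ("Sr-89", 3.0, "TBq"),
    ("Sr-90", 0.33, "TBq"),
]


def to_becquerels(amount: float, unit: str) -> float:
    """Convert an activity expressed in TBq or PBq to Bq."""
    return amount * BQ_PER_UNIT[unit]


def to_curies(activity_bq: float) -> float:
    """Convert an activity in Bq to the older unit, the curie."""
    return activity_bq / BQ_PER_CI


if __name__ == "__main__":
    for nuclide, amount, unit in WINDSCALE_RELEASES:
        bq = to_becquerels(amount, unit)
        print(f"{nuclide}: {bq:.2e} Bq, about {to_curies(bq):,.0f} Ci")
```

On these figures the 0.74 PBq iodine-131 release corresponds to about 20,000 Ci.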

During operations to bring the reactor under control, 14 workers received dose equivalents greater than 30 mSv per calendar quarter, with the maximum dose equivalent at 46 mSv per calendar quarter.

Lessons learned

There were many lessons learned concerning the design and operation of natural uranium reactors. The inadequacies in reactor instrumentation and in reactor operator training also raise points analogous to those of the Three Mile Island accident (see below).

No guidelines existed for short-term permissible exposure to radioiodine in food. The British Medical Research Council performed a prompt and thorough investigation and analysis, and much ingenuity was used in rapidly deriving maximum permissible concentrations for ¹³¹I in food. The Emergency Reference Levels study that resulted from this accident serves as a basis for emergency planning guides now used worldwide (Bryant 1969).

A useful correlation was derived for predicting significant radioiodine contamination in milk: pastures with gamma radiation levels above 0.3 μGy/h yielded milk with radioiodine concentrations above 3.7 MBq/m³.
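The Windscale correlation lends itself to a simple field screening rule: flag pastures whose gamma dose rate exceeds the trigger so that milk from those farms is sampled first. The sketch below assumes that use; the farm names and readings are hypothetical, and a flag is only a prediction from one historical event, not a substitute for measuring the milk.

```python
# Screening rule from the Windscale experience: pasture gamma dose rates
# above 0.3 uGy/h were associated with milk above 3.7 MBq/m3 of radioiodine.
PASTURE_TRIGGER_UGY_PER_H = 0.3
MILK_LEVEL_MBQ_PER_M3 = 3.7


def mbq_per_m3_to_bq_per_litre(value: float) -> float:
    """1 MBq/m3 is 1e6 Bq spread over 1000 L, i.e. 1000 Bq/L."""
    return value * 1e6 / 1000.0


def milk_sampling_priority(pasture_gamma_ugy_per_h: float) -> bool:
    """True if the correlation predicts that milk from this pasture may
    exceed the 3.7 MBq/m3 level, so it should be sampled first."""
    return pasture_gamma_ugy_per_h > PASTURE_TRIGGER_UGY_PER_H


if __name__ == "__main__":
    # Hypothetical survey results: farm name -> pasture gamma dose rate (uGy/h).
    survey = {"farm A": 0.12, "farm B": 0.45, "farm C": 0.31}
    priority = [farm for farm, rate in survey.items() if milk_sampling_priority(rate)]
    print("Sample milk first from:", priority)
    print("Milk level:", mbq_per_m3_to_bq_per_litre(MILK_LEVEL_MBQ_PER_M3), "Bq/L")
```

Expressed per litre, the 3.7 MBq/m³ milk level is 3,700 Bq/L.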

The absorbed dose from inhalation of, or external exposure to, radioiodines is negligible compared with that from drinking milk or eating dairy products. In an emergency, rapid gamma spectroscopy is preferable to slower laboratory procedures.

Fifteen two-person teams performed radiation surveys and obtained samples. Twenty persons handled sample coordination and data reporting. About 150 radiochemists were involved in sample analysis.

Glass wool stack filters are not satisfactory under accident conditions.

Gulf Oil Accelerator Accident of 4 October 1967

Gulf Oil Company technicians were using a 3 MeV Van de Graaff accelerator to activate soil samples on 4 October 1967. An interlock failure on the power key of the accelerator console, combined with the taping of several interlocks on the safety tunnel door and the inside door of the target room, produced serious accidental exposures of three individuals. One received a whole-body absorbed dose of approximately 1 Gy, the second close to 3 Gy and the third approximately 6 Gy, in addition to approximately 60 Gy to the hands and 30 Gy to the feet.

One of the accident victims reported to the medical department complaining of nausea, vomiting and generalized muscular aches. His symptoms were initially misdiagnosed as influenza. When the second patient came in with much the same symptoms, staff concluded that the two men might have received significant radiation exposures, and their film badges confirmed this. Dr. Niel Wald of the University of Pittsburgh Radiological Health Division supervised the dosimetry tests and also acted as coordinating physician in the work-up and treatment of the patients.

Dr. Wald quickly had absolute filter units flown in to the Western Pennsylvania Hospital in Pittsburgh, where the three patients had been admitted. He set up these laminar-flow units with absolute filters to clear the patients’ environment of biological contaminants. These “reverse isolation” units were used for about 16 days for the patient exposed to 1 Gy, and for about a month and a half for the patients exposed to 3 and 6 Gy.

Dr. E. Donnall Thomas of the University of Washington arrived to perform a bone marrow transplant on the 6 Gy patient on the eighth day after exposure, with the patient’s twin brother as the donor. Although this heroic treatment saved the patient’s life, nothing could be done to save his arms and legs, each of which received an absorbed dose of tens of grays.

Lessons learned

If the simple operating procedure of always using a survey meter when entering the exposure room had been followed, this tragic accident would have been avoided.

At least two interlocks had been taped closed for long periods of time prior to this accident. Defeating protective interlocks is intolerable.

Regular maintenance checks should have been made on the key-operated power interlocks for the accelerator.

Timely medical attention saved the life of the most highly exposed person. A complete bone marrow transplant, reverse isolation and quality medical care were all major factors in saving his life.

Reverse isolation filter units can be obtained within hours and set up in any hospital to care for highly exposed patients.

In retrospect, medical authorities involved with these patients would have recommended amputation earlier and at a definitive level within two or three months after the exposure. Earlier amputation decreases the likelihood of infection, gives a shorter period of severe pain, reduces pain medication required for the patient, possibly reduces the patient’s hospital stay, and possibly contributes to earlier rehabilitation. Earlier amputation should, of course, be done while correlating dosimetry information with clinical observations.

The SL–1 Prototype Reactor Accident (Idaho, USA, 3 January 1961)

This was the first (and to date the only) fatal accident in the history of US reactor operations. The SL-1 was a prototype of a small Army Package Power Reactor (APPR) designed to be air-transported to remote areas to produce electrical power. It was used for fuel testing and for reactor crew training, and was operated by Combustion Engineering for the US Army at the remote desert site of the National Reactor Testing Station near Idaho Falls, Idaho. The SL-1 was not a commercial power reactor (AEC 1961; American Nuclear Society 1961).

At the time of the accident, the SL-1 was loaded with 40 fuel elements and 5 control rod blades. It could produce a power level of 3 MW (thermal) and was a boiling water–cooled and –moderated reactor.

The accident killed three military personnel. It was caused by the withdrawal of a single control rod over a distance of more than 1 m, which made the reactor go prompt critical. Why a skilled, licensed reactor operator with extensive refuelling experience withdrew the control rod past its normal stop point is unknown.

One of the three accident victims was still alive when the first responders reached the scene. Highly radioactive fission products covered his body and were embedded in his skin. Dose rates from portions of his skin exceeded 4.4 Gy/h at 15 cm, which hampered rescue and medical treatment.

Lessons learned

No reactor designed since the SL-1 accident can be brought to a “prompt-critical” state with a single control rod.
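A reactor is prompt critical when its reactivity ρ reaches the effective delayed-neutron fraction β_eff, so that the chain reaction grows on prompt neutrons alone; reactivity is therefore often quoted in "dollars" (ρ/β_eff), with $1.00 marking prompt criticality. The sketch below illustrates the single-rod lesson in those units. It assumes β_eff = 0.0065 (a typical value for uranium-235 fuel), and the shutdown reactivity and rod worths are hypothetical; real design criteria also account for core state, temperature and other effects.

```python
BETA_EFF = 0.0065  # assumed effective delayed-neutron fraction (typical of U-235 fuel)


def to_dollars(rho: float, beta_eff: float = BETA_EFF) -> float:
    """Express reactivity rho (delta-k/k) in dollars; $1.00 is prompt critical."""
    return rho / beta_eff


def rods_reaching_prompt_critical(shutdown_rho: float,
                                  rod_worths: dict[str, float],
                                  beta_eff: float = BETA_EFF) -> list[str]:
    """Return the rods whose full withdrawal alone, starting from the shutdown
    reactivity `shutdown_rho`, would take the core to prompt criticality."""
    return [name for name, worth in rod_worths.items()
            if to_dollars(shutdown_rho + worth, beta_eff) >= 1.0]


if __name__ == "__main__":
    # Hypothetical core: shutdown at -0.03 delta-k/k, one high-worth central rod.
    worths = {"central": 0.045, "outer": 0.01}
    print(rods_reaching_prompt_critical(-0.03, worths))
```

With these made-up numbers the central rod alone would add about $2.30 of net positive reactivity and is flagged, while the outer rod is not.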

All reactors must have portable survey meters onsite that have ranges greater than 20 mGy/h. Survey meters of 10 Gy/h maximum range are recommended.

Note: The Three Mile Island accident showed that 100 Gy/h is the required range for both gamma and beta measurements.
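The meter-range lessons can be checked against the SL-1 reading quoted above. The sketch assumes a meter simply pegs at its full-scale value, so an off-scale instrument only shows that the true dose rate is higher; the range labels are illustrative.

```python
# Full-scale ranges discussed in the text, in Gy/h.
METER_RANGES_GY_PER_H = {
    "minimum (20 mGy/h)": 0.020,
    "recommended (10 Gy/h)": 10.0,
    "TMI lesson (100 Gy/h)": 100.0,
}


def reading_on_scale(dose_rate_gy_per_h: float, full_scale_gy_per_h: float) -> bool:
    """True if a meter with this full-scale range can indicate the dose rate.
    An off-scale (pegged) meter only shows that the true rate is higher."""
    return dose_rate_gy_per_h <= full_scale_gy_per_h


if __name__ == "__main__":
    sl1_reading = 4.4  # Gy/h at 15 cm from parts of the SL-1 victim's skin
    for name, full_scale in METER_RANGES_GY_PER_H.items():
        status = "on scale" if reading_on_scale(sl1_reading, full_scale) else "OFF SCALE"
        print(f"{name}: {status}")
```

A meter at the 20 mGy/h floor would have been off scale by a factor of more than 200 at SL-1, whereas the recommended 10 Gy/h range would have given a usable reading.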

Treatment facilities are required where a highly contaminated patient can receive definitive medical treatment with reasonable safeguards for attendant personnel. Since most of these facilities will be in clinics with other ongoing missions, control of airborne and waterborne radioactive contaminants may require special provisions.

X-ray Machines, Industrial and Analytical

Accidental exposures from x-ray systems are numerous and often involve extremely high exposures to small portions of the body. It is not unusual for x-ray diffraction systems to produce absorbed dose rates of 5 Gy/s at 10 cm from the tube focus. At shorter distances, 100 Gy/s rates have often been measured. The beam is usually narrow, but even a few seconds’ exposure can result in severe local injury (Lubenau et al. 1967; Lindell 1968; Haynie and Olsher 1981; ANSI 1977).
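The figures above show how quickly a local dose builds up. The sketch multiplies dose rate by exposure time and scales the 10 cm reference rate with the inverse-square law. The inverse-square scaling from the tube focus is an assumption: it ignores beam collimation, air attenuation and scatter, so it gives an order-of-magnitude estimate only.

```python
def dose_rate_at(rate_ref_gy_per_s: float, d_ref_cm: float, d_cm: float) -> float:
    """Inverse-square estimate of the dose rate at distance d_cm from the tube
    focus, given a reference rate measured at d_ref_cm (approximation only)."""
    return rate_ref_gy_per_s * (d_ref_cm / d_cm) ** 2


def local_dose(rate_gy_per_s: float, seconds: float) -> float:
    """Absorbed dose accumulated in the beam at a constant dose rate."""
    return rate_gy_per_s * seconds


if __name__ == "__main__":
    # 5 Gy/s at 10 cm from the tube focus, as quoted in the text.
    print(dose_rate_at(5.0, 10.0, 2.0), "Gy/s at 2 cm")
    print(local_dose(5.0, 3.0), "Gy from a 3 s exposure at 10 cm")
```

Scaling 5 Gy/s at 10 cm to 2 cm gives 125 Gy/s, the same order as the 100 Gy/s rates reported at short distances, and a three-second exposure at 10 cm already delivers 15 Gy.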

Because these systems are often used in “non-routine” circumstances, they are prone to accidental exposures. X-ray systems commonly used in normal operations appear to be reasonably safe, and equipment failure has not caused severe exposures.

Lessons learned from accidental x-ray exposures

Most accidental exposures occurred during non-routine uses when equipment was partially disassembled or shield covers had been removed.

In most serious exposures, adequate instruction for the staff and maintenance personnel had been lacking.

If simple and fail-safe methods had been used to ensure that x-ray tubes were turned off during repairs and maintenance, many accidental exposures would have been avoided.

Finger or wrist personnel dosimeters should be used for operators and maintenance personnel working with these machines.

If interlocks had been required, many accidental exposures would have been avoided.

Operator error was a contributing cause in most of the accidents. Lack of adequate enclosures or poor shielding design often worsened the situation.
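The interlock lessons amount to a fail-safe permissive: the tube may be energized only when every interlock positively reports that its cover or door is in place, and any open, faulted or missing signal, or a maintenance lockout, keeps it off. The sketch below illustrates that logic; the states, names and lockout flag are hypothetical.

```python
from enum import Enum


class Switch(Enum):
    CLOSED = "closed"  # shield cover or door confirmed in place
    OPEN = "open"
    FAULT = "fault"    # e.g. broken wiring or disagreeing sensors


def tube_power_permitted(interlocks: dict[str, Switch], maintenance_lockout: bool) -> bool:
    """Fail-safe permissive: any open, faulted or missing interlock, or an
    active maintenance lockout, keeps the x-ray tube de-energized."""
    if maintenance_lockout or not interlocks:
        return False
    return all(state is Switch.CLOSED for state in interlocks.values())


if __name__ == "__main__":
    status = {"tube shield": Switch.CLOSED, "sample door": Switch.FAULT}
    print(tube_power_permitted(status, maintenance_lockout=False))
```

Defaulting to "off" for a faulted sensor is the design choice that makes the logic fail-safe. No such logic can detect an interlock that has been physically defeated, for example taped closed, which is why surveys and training remain essential.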

Industrial radiography accidents

From the 1950s through the 1970s, industrial radiography consistently had the highest radiation accident rate of any single activity (IAEA 1969, 1977). National regulatory bodies continue to struggle to reduce this rate through a combination of improved regulations, strict training requirements and ever tougher inspection and enforcement policies (USCFR 1990). These regulatory efforts have generally succeeded, but many accidents associated with industrial radiography still occur. Legislation allowing large monetary fines may be the most effective tool for keeping radiation safety foremost in the minds of industrial radiography management (and therefore also in workers’ minds).

Causes of industrial radiography accidents

Worker training. Industrial radiography probably has lower education and training requirements than any other type of radiation employment. Therefore, existing training requirements must be strictly enforced.

Worker production incentive. For years, the main emphasis for industrial radiographers was on the number of successful radiographs produced per day. This practice can lead to unsafe acts, and also to the occasional non-use of personal dosimeters, so that doses exceeding the dose equivalent limits go undetected.

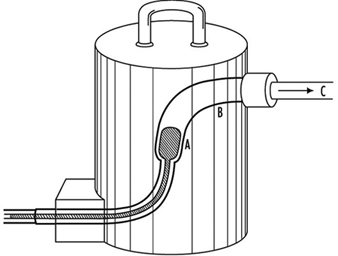

Lack of proper surveys. Thorough surveying of source pigs (storage containers) (figure 1) after every exposure is most important. Not performing these surveys is the single most probable cause of unnecessary exposures, many of which are unrecorded, since industrial radiographers rarely use hand or finger dosimeters (figure 1).

Figure 1. Industrial radiography camera

Equipment problems. Because industrial radiographic cameras are heavily used, their source winding mechanisms can loosen so that the source does not fully retract into its safe storage position (point A in figure 1). There are also many instances of closet-source interlock failures that have caused accidental exposures of personnel.
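Because a source that has not fully retracted looks no different from a stored one, the post-exposure survey is the only reliable check. The sketch below shows one way to express that check: every reading taken around the camera must stay close to a reference reading recorded with the source known to be stored, and a missing survey never counts as a pass. The reference reading and the factor of 2 are hypothetical parameters, not regulatory values.

```python
def source_secured(survey_readings_usv_per_h: list[float],
                   stored_reference_usv_per_h: float,
                   tolerance: float = 2.0) -> bool:
    """Hypothetical post-exposure check: the source is treated as fully
    retracted only if every survey reading around the camera is no higher
    than `tolerance` times the reading recorded with the source stored."""
    if not survey_readings_usv_per_h:
        return False  # no survey performed: never assume the source is safe
    limit = stored_reference_usv_per_h * tolerance
    return max(survey_readings_usv_per_h) <= limit


if __name__ == "__main__":
    # Hypothetical readings (uSv/h) taken around the camera and guide tube.
    print(source_secured([10.0, 12.0, 11.0], stored_reference_usv_per_h=10.0))
    print(source_secured([10.0, 500.0], stored_reference_usv_per_h=10.0))
```

Surveying the whole circumference of the camera, rather than a single point, matters because a partly retracted source produces a localized high reading.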

Design of Emergency Plans

Many excellent guidelines, both general and specific, exist for the design of emergency plans. Some particularly helpful references are given in the suggested readings at the end of this chapter.

Initial drafting of emergency plan and procedures

First, one must assess the entire radioactive material inventory of the facility. Then credible accidents must be analysed to determine the probable maximum source release terms. Next, the plan and its procedures must enable the facility operators to:

- recognize an accident situation

- classify the accident according to severity

- take steps to mitigate the accident

- make timely notifications

- call for help efficiently and quickly

- quantify releases

- keep track of exposures both on- and offsite, and keep emergency exposures ALARA (as low as reasonably achievable)

- recover the facility as quickly as practical

- keep accurate and detailed records.
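When a plan is drafted as a set of numbered procedures, it helps to verify that every capability in the list above is covered by at least one of them. The sketch below performs that cross-check; the procedure IDs are hypothetical, and exact string matching is used only for brevity (an enumeration would be more robust in practice).

```python
REQUIRED_CAPABILITIES = (
    "recognize an accident situation",
    "classify the accident according to severity",
    "take steps to mitigate the accident",
    "make timely notifications",
    "call for help efficiently and quickly",
    "quantify releases",
    "track on- and offsite exposures and keep emergency exposures ALARA",
    "recover the facility as quickly as practical",
    "keep accurate and detailed records",
)


def missing_capabilities(procedures: dict[str, list[str]]) -> list[str]:
    """Return the required capabilities that no procedure addresses.
    `procedures` maps a (hypothetical) procedure ID to the capabilities it covers."""
    covered = {cap for caps in procedures.values() for cap in caps}
    return [cap for cap in REQUIRED_CAPABILITIES if cap not in covered]


if __name__ == "__main__":
    draft_plan = {
        "EP-01": ["recognize an accident situation",
                  "classify the accident according to severity"],
        "EP-02": ["make timely notifications",
                  "call for help efficiently and quickly"],
    }
    for gap in missing_capabilities(draft_plan):
        print("Not yet covered:", gap)
```

Running the check on an early draft makes gaps, such as release quantification or recovery, visible before the plan is exercised.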

Types of accidents associated with nuclear reactors