Children categories

36. Barometric Pressure Increased (2)

36. Barometric Pressure Increased

Chapter Editor: T.J.R. Francis

Table of Contents

Working under Increased Barometric Pressure

Eric Kindwall

Dees F. Gorman

Tables


1. Instructions for compressed-air workers

2. Decompression illness: Revised classification

37. Barometric Pressure Reduced (4)

37. Barometric Pressure Reduced

Chapter Editor: Walter Dümmer

Table of Contents

Figures and Tables

Ventilatory Acclimatization to High Altitude

John T. Reeves and John V. Weil

Physiological Effects of Reduced Barometric Pressure

Kenneth I. Berger and William N. Rom

Health Considerations for Managing Work at High Altitudes

John B. West

Prevention of Occupational Hazards at High Altitudes

Walter Dümmer


38. Biological Hazards (4)

38. Biological Hazards

Chapter Editor: Zuheir Ibrahim Fakhri

Table of Contents

Tables

Workplace Biohazards

Zuheir I. Fakhri

Aquatic Animals

D. Zannini

Terrestrial Venomous Animals

J.A. Rioux and B. Juminer

Clinical Features of Snakebite

David A. Warrell

Tables


1. Occupational settings with biological agents

2. Viruses, bacteria, fungi & plants in the workplace

3. Animals as a source of occupational hazards

39. Disasters, Natural and Technological (12)

39. Disasters, Natural and Technological

Chapter Editor: Pier Alberto Bertazzi

Table of Contents

Tables and Figures

Disasters and Major Accidents

Pier Alberto Bertazzi

ILO Convention concerning the Prevention of Major Industrial Accidents, 1993 (No. 174)

Disaster Preparedness

Peter J. Baxter

Post-Disaster Activities

Benedetto Terracini and Ursula Ackermann-Liebrich

Weather-Related Problems

Jean French

Avalanches: Hazards and Protective Measures

Gustav Poinstingl

Transportation of Hazardous Material: Chemical and Radioactive

Donald M. Campbell

Radiation Accidents

Pierre Verger and Denis Winter

Case Study: What does dose mean?

Occupational Health and Safety Measures in Agricultural Areas Contaminated by Radionuclides: The Chernobyl Experience

Yuri Kundiev, Leonard Dobrovolsky and V.I. Chernyuk

Case Study: The Kader Toy Factory Fire

Casey Cavanaugh Grant

Impacts of Disasters: Lessons from a Medical Perspective

José Luis Zeballos

Tables


1. Definitions of disaster types

2. 25-yr average # victims by type & region-natural trigger

3. 25-yr average # victims by type & region-non-natural trigger

4. 25-yr average # victims by type-natural trigger (1969-1993)

5. 25-yr average # victims by type-non-natural trigger (1969-1993)

6. Natural trigger from 1969 to 1993: Events over 25 years

7. Non-natural trigger from 1969 to 1993: Events over 25 years

8. Natural trigger: Number by global region & type in 1994

9. Non-natural trigger: Number by global region & type in 1994

10. Examples of industrial explosions

11. Examples of major fires

12. Examples of major toxic releases

13. Role of major hazard installations management in hazard control

14. Working methods for hazard assessment

15. EC Directive criteria for major hazard installations

16. Priority chemicals used in identifying major hazard installations

17. Weather-related occupational risks

18. Typical radionuclides, with their radioactive half-lives

19. Comparison of different nuclear accidents

20. Contamination in Ukraine, Byelorussia & Russia after Chernobyl

21. Contamination strontium-90 after the Khyshtym accident (Urals 1957)

22. Radioactive sources that involved the general public

23. Main accidents involving industrial irradiators

24. Oak Ridge (US) radiation accident registry (worldwide, 1944-88)

25. Pattern of occupational exposure to ionizing radiation worldwide

26. Deterministic effects: thresholds for selected organs

27. Patients with acute irradiation syndrome (AIS) after Chernobyl

28. Epidemiological cancer studies of high dose external irradiation

29. Thyroid cancers in children in Belarus, Ukraine & Russia, 1981-94

30. International scale of nuclear incidents

31. Generic protective measures for general population

32. Criteria for contamination zones

33. Major disasters in Latin America & the Caribbean, 1970-93

34. Losses due to six natural disasters

35. Hospitals & hospital beds damaged/ destroyed by 3 major disasters

36. Victims in 2 hospitals collapsed by the 1985 earthquake in Mexico

37. Hospital beds lost resulting from the March 1985 Chilean earthquake

38. Risk factors for earthquake damage to hospital infrastructure


40. Electricity (3)

40. Electricity

Chapter Editor: Dominique Folliot

Table of Contents

Figures and Tables

Electricity—Physiological Effects

Dominique Folliot

Static Electricity

Claude Menguy

Prevention And Standards

Renzo Comini

Tables


1. Estimates of the rate of electrocution-1988

2. Basic relationships in electrostatics-Collection of equations

3. Electron affinities of selected polymers

4. Typical lower flammability limits

5. Specific charge associated with selected industrial operations

6. Examples of equipment sensitive to electrostatic discharges


41. Fire (6)

41. Fire

Chapter Editor: Casey C. Grant

Table of Contents

Figures and Tables

Basic Concepts

Dougal Drysdale

Sources of Fire Hazards

Tamás Bánky

Fire Prevention Measures

Peter F. Johnson

Passive Fire Protection Measures

Yngve Anderberg

Active Fire Protection Measures

Gary Taylor

Organizing for Fire Protection

S. Dheri

Tables


1. Lower & upper flammability limits in air

2. Flashpoints & firepoints of liquid & solid fuels

3. Ignition sources

4. Comparison of concentrations of different gases required for inerting


42. Heat and Cold (12)

42. Heat and Cold

Chapter Editor: Jean-Jacques Vogt

Table of Contents

Figures and Tables

Physiological Responses to the Thermal Environment

W. Larry Kenney

Effects of Heat Stress and Work in the Heat

Bodil Nielsen

Heat Disorders

Tokuo Ogawa

Prevention of Heat Stress

Sarah A. Nunneley

The Physical Basis of Work in Heat

Jacques Malchaire

Assessment of Heat Stress and Heat Stress Indices

Kenneth C. Parsons

Case Study: Heat Indices: Formulae and Definitions

Heat Exchange through Clothing

Wouter A. Lotens

Cold Environments and Cold Work

Ingvar Holmér, Per-Ola Granberg and Goran Dahlstrom

Prevention of Cold Stress in Extreme Outdoor Conditions

Jacques Bittel and Gustave Savourey

Cold Indices and Standards

Ingvar Holmér

Tables


1. Electrolyte concentration in blood plasma & sweat

2. Heat Stress Index & Allowable Exposure Times: calculations

3. Interpretation of Heat Stress Index values

4. Reference values for criteria of thermal stress & strain

5. Model using heart rate to assess heat stress

6. WBGT reference values

7. Working practices for hot environments

8. Calculation of the SWreq index & assessment method: equations

9. Description of terms used in ISO 7933 (1989b)

10. WBGT values for four work phases

11. Basic data for the analytical assessment using ISO 7933

12. Analytical assessment using ISO 7933

13. Air temperatures of various cold occupational environments

14. Duration of uncompensated cold stress & associated reactions

15. Indication of anticipated effects of mild & severe cold exposure

16. Body tissue temperature & human physical performance

17. Human responses to cooling: Indicative reactions to hypothermia

18. Health recommendations for personnel exposed to cold stress

19. Conditioning programmes for workers exposed to cold

20. Prevention & alleviation of cold stress: strategies

21. Strategies & measures related to specific factors & equipment

22. General adaptational mechanisms to cold

23. Number of days when water temperature is below 15 ºC

24. Air temperatures of various cold occupational environments

25. Schematic classification of cold work

26. Classification of levels of metabolic rate

27. Examples of basic insulation values of clothing

28. Classification of thermal resistance to cooling of handwear

29. Classification of contact thermal resistance of handwear

30. Wind Chill Index, temperature & freezing time of exposed flesh

31. Cooling power of wind on exposed flesh


43. Hours of Work (1)

43. Hours of Work

Chapter Editor: Peter Knauth

Table of Contents

Hours of Work

Peter Knauth

Tables


1. Time intervals from beginning shiftwork until three illnesses

2. Shiftwork & incidence of cardiovascular disorders


44. Indoor Air Quality (8)

44. Indoor Air Quality

Chapter Editor: Xavier Guardino Solá

Table of Contents

Figures and Tables

Indoor Air Quality: Introduction

Xavier Guardino Solá

Nature and Sources of Indoor Chemical Contaminants

Derrick Crump

Radon

María José Berenguer

Tobacco Smoke

Dietrich Hoffmann and Ernst L. Wynder

Smoking Regulations

Xavier Guardino Solá

Measuring and Assessing Chemical Pollutants

M. Gracia Rosell Farrás

Biological Contamination

Brian Flannigan

Regulations, Recommendations, Guidelines and Standards

María José Berenguer

Tables


1. Classification of indoor organic pollutants

2. Formaldehyde emission from a variety of materials

3. Ttl. volatile organic comp’ds concs, wall/floor coverings

4. Consumer prods & other sources of volatile organic comp’ds

5. Major types & concentrations in the urban United Kingdom

6. Field measurements of nitrogen oxides & carbon monoxide

7. Toxic & tumorigenic agents in cigarette sidestream smoke

8. Toxic & tumorigenic agents from tobacco smoke

9. Urinary cotinine in non-smokers

10. Methodology for taking samples

11. Detection methods for gases in indoor air

12. Methods used for the analysis of chemical pollutants

13. Lower detection limits for some gases

14. Types of fungus which can cause rhinitis and/or asthma

15. Micro-organisms and extrinsic allergic alveolitis

16. Micro-organisms in nonindustrial indoor air & dust

17. Standards of air quality established by the US EPA

18. WHO guidelines for non-cancer and non-odour annoyance

19. WHO guideline values based on sensory effects or annoyance

20. Reference values for radon of three organizations


45. Indoor Environmental Control (6)

45. Indoor Environmental Control

Chapter Editor: Juan Guasch Farrás

Table of Contents

Figures and Tables

Control of Indoor Environments: General Principles

A. Hernández Calleja

Indoor Air: Methods for Control and Cleaning

E. Adán Liébana and A. Hernández Calleja

Aims and Principles of General and Dilution Ventilation

Emilio Castejón

Ventilation Criteria for Nonindustrial Buildings

A. Hernández Calleja

Heating and Air-Conditioning Systems

F. Ramos Pérez and J. Guasch Farrás

Indoor Air: Ionization

E. Adán Liébana and J. Guasch Farrás

Tables


1. Most common indoor pollutants & their sources

2. Basic requirements-dilution ventilation system

3. Control measures & their effects

4. Adjustments to working environment & effects

5. Effectiveness of filters (ASHRAE standard 52-76)

6. Reagents used as absorbents for contaminants

7. Levels of quality of indoor air

8. Contamination due to the occupants of a building

9. Degree of occupancy of different buildings

10. Contamination due to the building

11. Quality levels of outside air

12. Proposed norms for environmental factors

13. Temperatures of thermal comfort (based on Fanger)

14. Characteristics of ions


46. Lighting (3)

46. Lighting

Chapter Editor: Juan Guasch Farrás

Table of Contents

Figures and Tables

Types of Lamps and Lighting

Richard Forster

Conditions Required for Visual

Fernando Ramos Pérez and Ana Hernández Calleja

General Lighting Conditions

N. Alan Smith

Tables


1. Improved output & wattage of some 1,500 mm fluorescent tube lamps

2. Typical lamp efficacies

3. International Lamp Coding System (ILCOS) for some lamp types

4. Common colours & shapes of incandescent lamps & ILCOS codes

5. Types of high-pressure sodium lamp

6. Colour contrasts

7. Reflection factors of different colours & materials

8. Recommended levels of maintained illuminance for locations/tasks


47. Noise (5)

47. Noise

Chapter Editor: Alice H. Suter

Table of Contents

Figures and Tables

The Nature and Effects of Noise

Alice H. Suter

Noise Measurement and Exposure Evaluation

Eduard I. Denisov and German A. Suvorov

Engineering Noise Control

Dennis P. Driscoll

Hearing Conservation Programmes

Larry H. Royster and Julia Doswell Royster

Standards and Regulations

Alice H. Suter

Tables


1. Permissible exposure limits (PEL) for noise exposure, by nation


48. Radiation: Ionizing (6)

48. Radiation: Ionizing

Chapter Editor: Robert N. Cherry, Jr.

Table of Contents

Introduction

Robert N. Cherry, Jr.

Radiation Biology and Biological Effects

Arthur C. Upton

Sources of Ionizing Radiation

Robert N. Cherry, Jr.

Workplace Design for Radiation Safety

Gordon M. Lodde

Radiation Safety

Robert N. Cherry, Jr.

Planning for and Management of Radiation Accidents

Sydney W. Porter, Jr.

49. Radiation, Non-Ionizing (9)

49. Radiation, Non-Ionizing

Chapter Editor: Bengt Knave

Table of Contents

Tables and Figures

Electric and Magnetic Fields and Health Outcomes

Bengt Knave

The Electromagnetic Spectrum: Basic Physical Characteristics

Kjell Hansson Mild

Ultraviolet Radiation

David H. Sliney

Infrared Radiation

R. Matthes

Light and Infrared Radiation

David H. Sliney

Lasers

David H. Sliney

Radiofrequency Fields and Microwaves

Kjell Hansson Mild

VLF and ELF Electric and Magnetic Fields

Michael H. Repacholi

Static Electric and Magnetic Fields

Martino Grandolfo

Tables


1. Sources and exposures for IR

2. Retinal thermal hazard function

3. Exposure limits for typical lasers

4. Applications of equipment using range >0 to 30 kHz

5. Occupational sources of exposure to magnetic fields

6. Effects of currents passing through the human body

7. Biological effects of various current density ranges

8. Occupational exposure limits-electric/magnetic fields

9. Studies on animals exposed to static electric fields

10. Major technologies and large static magnetic fields

11. ICNIRP recommendations for static magnetic fields


50. Vibration (4)

50. Vibration

Chapter Editor: Michael J. Griffin

Table of Contents

Table and Figures

Vibration

Michael J. Griffin

Whole-body Vibration

Helmut Seidel and Michael J. Griffin

Hand-transmitted Vibration

Massimo Bovenzi

Motion Sickness

Alan J. Benson

Tables


1. Activities with adverse effects of whole-body vibration

2. Preventive measures for whole-body vibration

3. Hand-transmitted vibration exposures

4. Stages, Stockholm Workshop scale, hand-arm vibration syndrome

5. Raynaud’s phenomenon & hand-arm vibration syndrome

6. Threshold limit values for hand-transmitted vibration

7. European Union Council Directive: Hand-transmitted vibration (1994)

8. Vibration magnitudes for finger blanching


51. Violence (1)

51. Violence

Chapter Editor: Leon J. Warshaw

Table of Contents

Violence in the Workplace

Leon J. Warshaw

Tables


1. Highest rates of occupational homicide, US workplaces, 1980-1989

2. Highest rates of occupational homicide US occupations, 1980-1989

3. Risk factors for workplace homicides

4. Guides for programmes to prevent workplace violence

52. Visual Display Units (11)

52. Visual Display Units

Chapter Editor: Diane Berthelette

Table of Contents

Tables and Figures

Overview

Diane Berthelette

Characteristics of Visual Display Workstations

Ahmet Çakir

Ocular and Visual Problems

Paule Rey and Jean-Jacques Meyer

Reproductive Hazards - Experimental Data

Ulf Bergqvist

Reproductive Effects - Human Evidence

Claire Infante-Rivard

Case Study: A Summary of Studies of Reproductive Outcomes

Musculoskeletal Disorders

Gabriele Bammer

Skin Problems

Mats Berg and Sture Lidén

Psychosocial Aspects of VDU Work

Michael J. Smith and Pascale Carayon

Ergonomic Aspects of Human - Computer Interaction

Jean-Marc Robert

Ergonomics Standards

Tom F.M. Stewart

Tables


1. Distribution of computers in various regions

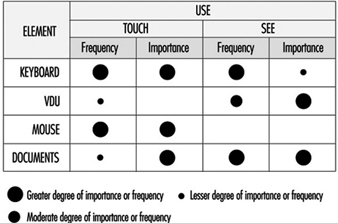

2. Frequency & importance of elements of equipment

3. Prevalence of ocular symptoms

4. Teratological studies with rats or mice

5. Teratological studies with rats or mice

6. VDU use as a factor in adverse pregnancy outcomes

7. Analyses to study causes musculoskeletal problems

8. Factors thought to cause musculoskeletal problems


Motion Sickness

Motion sickness, or kinetosis, is not a pathological condition, but is a normal response to certain motion stimuli with which the individual is unfamiliar and to which he or she is, therefore, unadapted; only those without a functioning vestibular apparatus of the inner ear are truly immune.

Motions producing sickness

There are many different types of provocative motion that induce the motion sickness syndrome. Most are associated with aids to locomotion—in particular, ships, hovercraft, aircraft, automobiles and trains; less commonly, elephants and camels. The complex accelerations generated by fairground amusements, such as swings, roundabouts (merry-go-rounds), roller-coasters and so on, can be highly provocative. In addition, many astronauts/cosmonauts suffer from motion sickness (space-motion sickness) when they first make head movements in the abnormal force environment (weightlessness) of orbital flight. The motion sickness syndrome is also produced by certain moving visual stimuli, without any physical motion of the observer; the external visual world display of fixed-base simulators (simulator sickness) or a large-screen projection of scenes taken from a moving vehicle (Cinerama or IMAX sickness) are examples.

Aetiology

The essential characteristic of stimuli that induce motion sickness is that they generate discordant information from the sensory systems that provide the brain with information about the spatial orientation and motion of the body. The principal feature of this discord is a mismatch between the signals provided, principally, by the eyes and inner ear, and those that the central nervous system “expects” to receive and to find correlated with one another.

Several categories of mismatch can be identified. Most important is the mismatch of signals from the vestibular apparatus (labyrinth) of the inner ear, in which the semicircular canals (the specialized receptors of angular accelerations) and the otolith organs (the specialized receptors of translational accelerations) do not provide concordant information. For example, when a head movement is made in a car or aircraft which is turning, both the semicircular canals and the otoliths are stimulated in an atypical manner and provide erroneous and incompatible information, information that differs substantially from that generated by the same head movement in a stable, 1-G gravity environment. Likewise, low-frequency (below 0.5 Hz) linear accelerations, such as occur aboard ship in rough seas or in an aircraft during flight through turbulent air, also generate conflicting vestibular signals and, hence, are a potent cause of motion sickness.

The mismatch of visual and vestibular information can also be an important contributory factor. The occupant of a moving vehicle who cannot see out is more likely to suffer from motion sickness than one who has a good external visual reference. The passenger below deck or in an aircraft cabin senses motion of the vehicle by vestibular cues, but he or she receives visual information only of his or her relative movement within the vehicle. The absence of an “expected” and concordant signal in a particular sensory modality is also considered to be the essential feature of visually induced motion sickness, because the visual motion cues are not accompanied by the vestibular signals that the individual “expects” to occur when subjected to the motion indicated by the visual display.

Signs and symptoms

On exposure to provocative motion, the signs and symptoms of motion sickness develop in a definite sequence, the time scale being dependent upon the intensity of the motion stimuli and the susceptibility of the individual. There are, however, considerable differences among individuals not only in susceptibility, but also in the order in which particular signs and symptoms develop, or whether they are experienced at all. Typically, the earliest symptom is epigastric discomfort (“stomach awareness”); this is followed by nausea, pallor and sweating, and is likely to be accompanied by a feeling of bodily warmth, increased salivation and eructation (belching). These symptoms commonly develop relatively slowly, but with continuing exposure to the motion, there is a rapid deterioration in well-being, the nausea increases in severity and culminates in vomiting or retching. Vomiting may bring relief, but this is likely to be short-lived unless the motion ceases.

There are other more variable features of the motion sickness syndrome. Alteration of respiratory rhythm with sighing and yawning may be an early symptom, and hyperventilation may occur, particularly in those who are anxious about the cause or consequence of their disability. Headache, tinnitus and dizziness are reported, while in those with severe malaise, apathy and depression are not uncommon, and may be of such severity that personal safety and survival are neglected. A feeling of lethargy and somnolence may be dominant following the cessation of provocative motion, and these may be the only symptoms in situations where adaptation to unfamiliar motion takes place without malaise.

Adaptation

With continued or repeated exposure to a particular provocative motion, most individuals show a decrease in the severity of symptoms; typically after three or four days of continuous exposure (as aboard ship or in a space vehicle) they have adapted to the motion and can carry out their normal duties without disability. In terms of the “mismatch” model, this adaptation or habituation represents the establishment of a new set of “expectations” in the central nervous system. However, on return to a familiar environment, these will no longer be appropriate and symptoms of motion sickness can recur (mal de débarquement) until readaptation occurs. Individuals differ considerably in the rate at which they adapt, the way they retain adaptation and the degree to which they can generalize protective adaptation from one motion environment to another. Unfortunately, a small proportion of the population (probably about 5%) do not adapt, or adapt so slowly that they continue to experience symptoms throughout the period of exposure to provocative motion.

Incidence

The incidence of sickness in a particular motion environment is governed by a number of factors, notably:

- the physical characteristics of the motion (its intensity, frequency and direction of action)

- the duration of exposure

- the intrinsic susceptibility of the individual

- the task being performed

- other environmental factors (e.g., odour).

Not surprisingly, the occurrence of sickness varies widely in different motion environments. For example: nearly all the occupants of life rafts in rough seas will vomit; 60% of student aircrew members suffer from air sickness at some time during training, which in 15% is sufficiently severe to interfere with training; in contrast, less than 0.5% of passengers in civil transport aircraft are affected, although the incidence is higher in small commuter aircraft flying at low altitude in turbulent air.

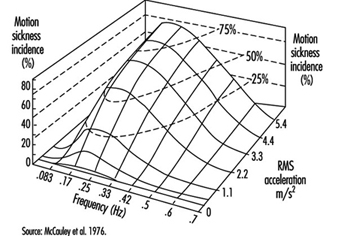

Laboratory and field studies have shown that for vertical translational oscillatory motion (appropriately called heave), oscillation at a frequency of about 0.2 Hz is the most provocative (figure 1). For a given intensity (peak acceleration) of oscillation, the incidence of sickness falls quite rapidly with an increase in frequency above 0.2 Hz; motion at 1 Hz is less than one-tenth as provocative as that at 0.2 Hz. Incidence likewise falls for motion at frequencies below 0.2 Hz, although the relationship between incidence and frequency in this range is not well defined because of a lack of experimental data; certainly, a stable, zero-frequency, 1-G environment is not provocative.

Figure 1. Motion sickness incidence as a function of wave frequency and acceleration for 2 hour exposure to vertical sinusoidal motion

Relationships established between the incidence of symptoms of motion sickness and the frequency, magnitude and duration of heave (z-axis) motion have led to the development of simple formulae that can be used to predict incidence when the physical parameters of the motion are known. The concept, embodied in British Standard 6841 (BSI 1987b) and in ISO Draft International Standard 2631-1, is that the incidence of symptoms is proportional to the Motion Sickness Dose Value (MSDVz). The MSDVz (in m/s$^{1.5}$) is defined as:

$$\mathrm{MSDV}_z = (a^2 t)^{1/2}$$

where $a$ is the root-mean-square (r.m.s.) value of the frequency-weighted acceleration (in m/s$^2$) determined by linear integration over the duration, $t$ (in seconds), of exposure to the motion.
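As a minimal sketch of the definition above, the following Python computes the dose value from sampled acceleration. It assumes the samples have already been passed through the frequency weighting defined in the standards (the weighting itself is not reproduced here) and are evenly spaced in time. The function names `msdv_z` and `msdv_z_from_rms` and the sample values are illustrative, not taken from the standards.

```python
import math


def msdv_z_from_rms(a_rms, duration_s):
    """MSDVz (m/s^1.5) from the r.m.s. frequency-weighted acceleration
    (m/s^2) and the exposure duration (s): (a^2 * t) ** 0.5."""
    if a_rms < 0 or duration_s < 0:
        raise ValueError("acceleration and duration must be non-negative")
    return math.sqrt(a_rms ** 2 * duration_s)


def msdv_z(weighted_accel, dt):
    """MSDVz (m/s^1.5) from evenly spaced samples of frequency-weighted
    z-axis acceleration (m/s^2), taken dt seconds apart.

    Assumption: the samples are already frequency weighted.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    n = len(weighted_accel)
    if n == 0:
        return 0.0
    # r.m.s. value over the whole exposure
    a_rms = math.sqrt(sum(a * a for a in weighted_accel) / n)
    # total exposure duration in seconds
    duration_s = n * dt
    return msdv_z_from_rms(a_rms, duration_s)


# Hypothetical exposure: 0.5 m/s^2 r.m.s. (weighted) for one hour
dose = msdv_z_from_rms(0.5, 3600.0)
print(f"MSDVz = {dose:.1f} m/s^1.5")
```

Working from the r.m.s. value and the duration keeps the code a direct transcription of the formula; for evenly spaced samples it is the same quantity as the square root of the time integral of the squared weighted acceleration.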

The frequency weighting to be applied to the stimulus acceleration is a filter having a centre frequency and attenuation characteristics similar to those depicted in figure 1. The weighting function is defined precisely in the standards.

The percentage of an unadapted adult population (P) who are likely to vomit is given by:

$$P = \tfrac{1}{3}\,\mathrm{MSDV}_z$$

Furthermore, the MSDVz may also be used to predict the level of malaise. On a four-point scale of zero (I felt all right) to three (I felt absolutely dreadful) an “illness rating” (I) is given by:

$$I = 0.02\,\mathrm{MSDV}_z$$
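The two predictive formulae can be illustrated with a short Python sketch. The function names and the example dose of 30 m/s^1.5 (which would correspond, for instance, to a weighted r.m.s. acceleration of 0.5 m/s^2 sustained for one hour) are assumptions chosen for illustration only.

```python
def vomiting_incidence_percent(msdv_z):
    """Predicted percentage of unadapted adults likely to vomit:
    P = (1/3) * MSDVz, with MSDVz in m/s^1.5."""
    if msdv_z < 0:
        raise ValueError("MSDVz must be non-negative")
    return msdv_z / 3.0


def illness_rating(msdv_z):
    """Predicted illness rating on the 0 (all right) to 3 (absolutely
    dreadful) scale: I = 0.02 * MSDVz, with MSDVz in m/s^1.5."""
    if msdv_z < 0:
        raise ValueError("MSDVz must be non-negative")
    return 0.02 * msdv_z


# Hypothetical dose value, for illustration only
example_dose = 30.0
print(f"P = {vomiting_incidence_percent(example_dose):.1f} %")
print(f"I = {illness_rating(example_dose):.2f}")
```

The sketch applies the linear relationships as stated and does not clamp the results, so it should only be used for doses within the range of exposures from which the formulae were derived.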

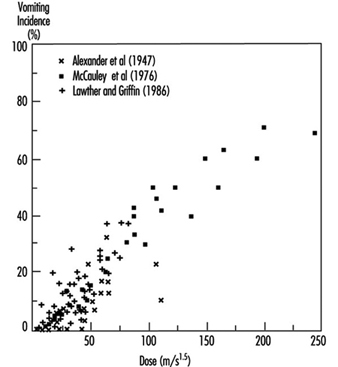

Given the large differences among individuals in their susceptibility to motion sickness, the agreement between MSDVz and the occurrence of vomiting in laboratory experiments and in sea trials (figure 2) is acceptable. It should be noted that the formulae were developed from data acquired on exposures lasting from about 20 minutes to six hours, with vomiting occurring in up to 70% of individuals (mostly seated) exposed to vertical (heave) motion.

Figure 2. Relationship between incidence of vomiting and stimulus dose (MSDVz), calculated by the procedure described in the text. Data from laboratory experiments involving vertical oscillation (x) and sea trials (+)

Knowledge about the effectiveness of translational oscillation acting in other body axes and other than in a vertical direction is fragmentary. There is some evidence from laboratory experiments on small groups of subjects that translational oscillation in a horizontal plane is more provocative, by a factor of about two, than the same intensity and frequency of vertical oscillation for seated subjects, but is less provocative, also by a factor of two, when the subject is supine and the stimulus acts in the longitudinal (z) body axis. Application of formulae and weighting characteristics embodied in standards to the prediction of sickness incidence should, therefore, be made with caution and due concern for the constraints noted above.

The considerable variability between individuals in their response to provocative motion is an important feature of motion sickness. Differences in susceptibility can, in part, be related to constitutional factors. Infants much below the age of about two years are rarely affected, but with maturation, susceptibility increases rapidly to reach a peak between four and ten years. Thereafter, susceptibility falls progressively so that the elderly are less likely to be affected, but are not immune. In any age group, females are more sensitive than males, the incidence data suggesting a ratio of approximately 1.7:1. Certain dimensions of personality, such as neuroticism, introversion and perceptual style have also been shown to be correlated, albeit weakly, with susceptibility. Motion sickness can also be a conditioned response and a manifestation of phobic anxiety.

Preventive measures

Procedures which minimize the provocative stimulus or increase the tolerance are available. These may prevent sickness in a proportion of the population, but none, other than withdrawal from the motion environment, is 100% effective. In the design of a vehicle, attention to factors which raise the frequency and reduce the magnitude of the oscillations (see figure 1) experienced by occupants during normal operation is beneficial. The provision of head support and body restraint to minimize unnecessary head movements is advantageous, and is further aided if the occupant can assume a reclined or supine position. Sickness is less if the occupant can be given a view of the horizon; for those deprived of an external visual reference, closing the eyes reduces visual/vestibular conflict. Involvement in a task, particularly control of the vehicle, is also helpful. These measures can be of immediate benefit, but in the longer term the development of protective adaptation is of the greatest value. This is achieved by continued and repeated exposure to the motion environment, though it can be facilitated by ground-based exercises in which provocative stimuli are generated by making head movements whilst rotating on a spin table (desensitization therapy).

There are several drugs which increase tolerance, though all have side-effects (in particular, sedation), so that they should not be taken by those in primary control of a vehicle or when optimum performance is mandatory. For short-term (less than four hours) prophylaxis, 0.3 to 0.6 mg hyoscine hydrobromide (scopolamine) is recommended; longer acting are the antihistaminics, promethazine hydrochloride (25 mg), meclozine hydrochloride (50 mg), dimenhydrinate (50 mg) and cinnarizine (30 mg). The combination of either hyoscine or promethazine with 25 mg ephedrine sulphate increases prophylactic potency with some reduction of side-effects. Prophylaxis for up to 48 hours can be achieved using a scopolamine patch, which allows the drug to be slowly absorbed through the skin at a controlled rate. Effective concentrations of the drug in the body are not achieved until six to eight hours after application of the patch, so the need for this type of therapy must be anticipated.

Treatment

Those suffering from established motion sickness with vomiting should, when practicable, be placed in a position where the motion stimulus is minimized, and be given an anti–motion sickness drug, preferably promethazine by injection. Should vomiting be prolonged and repeated, intravenous replacement of fluid and electrolytes may be necessary.

Violence in the Workplace

Violence is pervasive in modern society and appears to be escalating. Entirely apart from repression, wars and terrorist activities, the media daily report in banner headlines on the mayhem inflicted by humans upon each other in “civilized” as well as more primitive communities. Whether there has been a real increase or this simply represents more thorough reporting is arguable. After all, violence has been a feature of human interaction since prehistoric ages. Nevertheless, violence has become one of the leading causes of death in modern industrial societies—in some segments of the community it is the leading cause of death—and it is increasingly being recognized as a public health problem.

Inescapably, it finds its way into the workplace. From 1980 to 1989, homicide was the third leading cause of death from injury in North American workplaces, according to data compiled by the National Traumatic Occupational Fatalities Surveillance System (NIOSH 1993a). During this period, occupational homicides accounted for 12% of deaths from injury in the workplace; only motor vehicles and machines accounted for more. By 1993, that figure had risen to 17%, a rate of 0.9 per 100,000 workers, now second only to motor vehicle deaths (Toscano and Windau 1994). For women workers, it remained the leading cause of work-related death, although the rate (0.4 deaths per 100,000) was lower than that for men (1.2 deaths per 100,000) (Jenkins 1995).

These deaths, however, represent only the “tip of the iceberg”. For example, in 1992, about 22,400 American workers were injured seriously enough in non-fatal assaults in the workplace to require days away from work to recuperate (Toscano and Windau 1994). Reliable and complete data are lacking, but it is estimated that for every death there have been many thousands—perhaps, even hundreds of thousands—of instances of violence in the workplace.

In its newsletter, Unison, the large British union of health care and governmental service workers, has labelled violence as “the most threatening risk faced by members at work. It is the risk which is most likely to lead to injury. It can bring unmanageable levels of occupational stress which damages personal esteem and threatens people’s ability to continue on the job” (Unison 1992).

This article will summarize the characteristics of violence in the workplace, the kinds of people involved, its effects on them and their employers, and the steps that may be taken to prevent or control such effects.

Definition of Violence

There is no consensus on the definition of violence. For example, Rosenberg and Mercy (1991) include in the definition both fatal and nonfatal interpersonal violence where physical force or other means is used by one person with the intent of causing harm, injury or death to another. The Panel on the Understanding and Control of Violent Behavior convened by the US National Academy of Sciences adopted the definition of violence as: behaviours by individuals that intentionally threaten, attempt or inflict physical harm on others (Reiss and Roth 1993).

These definitions focus on threatening or causing physical harm. However, they exclude instances in which verbal abuse, harassment or humiliation and other forms of psychological trauma may be the sole harm to the victim and which may be no less devastating. They also exclude sexual harassment, which may be physical but which is usually entirely non-physical. In the national survey of American workers conducted by the Northwestern National Life Insurance Company, the researchers separated violent acts into: harassment (the act of creating a hostile environment through unwelcome words, actions or physical contacts not resulting in physical harm), threats (expressions of an intent to cause physical harm), and physical attacks (aggression resulting in a physical assault with or without the use of a weapon) (Lawless 1993).

In the UK, the Health and Safety Executive’s working definition of workplace violence is: any incident in which an employee is abused, threatened or assaulted by a member of the public in circumstances arising out of the course of his or her employment. Assailants may be patients, clients or co-workers (MSF 1993).

In this article, the term violence will be used in its broadest sense to include all forms of aggressive or abusive behaviour that may cause physical or psychological harm or discomfort to its victims, whether they be intentional targets or innocent bystanders involved only impersonally or incidentally. While workplaces may be targets of terrorist attacks or may become involved in riots and mob violence, such instances will not be discussed.

Prevalence of Violence in the Workplace

Accurate information on the prevalence of violence in the workplace is lacking. Most of the literature focuses on cases that are formally reported: homicides which get tallied in the obligatory death registries, cases that get enmeshed in the criminal justice system, or cases involving time off the job that generate workers’ compensation claims. Yet, for every one of these, there is an untold number of instances in which workers are victims of aggressive, abusive behaviour. For example, according to a survey conducted by the Bureau of Justice Statistics in the US Department of Justice, over half the victimizations sustained at work were not reported to the police. About 40% of the respondents said they did not report the incident because they considered it to be a minor or a personal matter, while another 27% said they did report it to a manager or a company security officer but, apparently, the report was not relayed to the police (Bachman 1994). In addition to the lack of a consensus on a taxonomy of violence, other reasons for under-reporting include:

- Cultural acceptance of violence. There is in many communities a widespread tolerance for violence among or against certain groups (Rosenberg and Mercy 1991). Although frowned upon by many, violence is often rationalized and tolerated as a “normal” response to competition. Violence among minority and ethnic groups is often condoned as a righteous response to discrimination, poverty and lack of access to social or economic equity resulting in low self-esteem and low valuations of human life. As a result, the assault is seen as a consequence of living in a violent society rather than working in an unsafe workplace. Finally, there is the “on-the-job syndrome”, in which workers in certain jobs are expected to put up with verbal abuse, threats and, even, physical attacks (SEIU 1995; Unison 1992).

- Lack of a reporting system. Only a small proportion of organizations have articulated an explicit policy on violence or have designed procedures for reporting and investigating instances of alleged violence in the workplace. Even where such a system has been installed, the trouble of obtaining, completing and filing the required report form is a deterrent to reporting all but the most outrageous incidents.

- Fear of blame or reprisal. Workers may fear being held responsible when they have been attacked by a client or a patient. Fear of reprisal by the assailant is also a potent deterrent to reporting, especially when that person is the worker’s superior and in a position to affect his or her job status.

- Lack of interest on the part of the employer. The employer’s lack of interest in investigating and reacting to prior incidents will certainly discourage reporting. Also, supervisors, concerned that workplace violence might reflect unfavourably on their managerial capabilities, may actually discourage or even block the filing of reports by workers in their units.

To determine the prevalence of violence in the workplace in the absence of reliable data, attempts have been made to extrapolate both from available statistics (e.g., death certificates, crime reports and workers’ compensation systems) and from specially designed surveys. Thus, the US National Crime Victimization Survey estimated that about 1 million American workers (out of a workforce of 110 million) are assaulted at work each year (Bachman 1994). And a 1993 telephone survey of a national sample of 600 American full-time workers (excluding self-employed and military personnel) found that one in four said that he or she had been a victim of workplace violence during the study year: 19% were harassed, 7% were threatened, and 3% were attacked physically. The researchers reported further that 68% of the harassment victims, 43% of the threat victims and 24% of the attack victims had not reported the incident (Lawless 1993).
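To make the survey percentages more concrete, the following Python sketch converts them into approximate respondent counts for the sample of 600 and expresses the National Crime Victimization Survey estimate as a rate per 100,000 workers. It is only a back-of-envelope illustration: the function name `approximate_counts` is hypothetical, the counts are rounded, and the three categories may overlap, so they should not be added together.

```python
SAMPLE_SIZE = 600  # respondents in the 1993 telephone survey

# category: (share of respondents victimized, share of victims not reporting)
survey = {
    "harassed": (0.19, 0.68),
    "threatened": (0.07, 0.43),
    "attacked": (0.03, 0.24),
}


def approximate_counts(sample_size, victim_share, unreported_share):
    """Return (victims, unreported victims), rounded to whole respondents."""
    victims = round(sample_size * victim_share)
    return victims, round(victims * unreported_share)


for category, (victim_share, unreported_share) in survey.items():
    victims, unreported = approximate_counts(
        SAMPLE_SIZE, victim_share, unreported_share
    )
    print(f"{category}: about {victims} victims, about {unreported} unreported")

# About 1 million assaults per year in a workforce of 110 million,
# expressed per 100,000 workers.
print(round(1_000_000 / 110_000_000 * 100_000))
```

On these figures the assault estimate works out to roughly 900 per 100,000 workers per year, which gives a sense of scale next to the homicide rates quoted elsewhere in this article.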

A similar survey of workers in the UK employed by the National Health Service revealed that, during the previous year, 0.5% had required medical treatment following an on-the-job physical assault; 11% had suffered a minor injury requiring only first aid, 4 to 6% had been threatened by persons wielding a deadly weapon, and 17% had received verbal threats. Violence was a special problem for emergency staff in ambulances and accident departments, nurses, and workers involved in the care of psychologically disturbed patients (Health Services Advisory Committee 1987). The risk of health workers being confronted by violence has been labelled a feature of everyday work in primary care and in accident/emergency departments (Shepherd 1994).

Homicide in the Workplace

Although workplace homicides are only a small proportion of all homicides, their substantial contribution to work-related deaths, at least in the United States, their unique features, and the possibility of preventive interventions by employers earn them special attention. For example, while most homicides in the community involve people who know each other, many of them close relatives, and only 13% were reported to have been associated with another felony, these proportions were reversed in the workplace, where more than three-fourths of the homicides were committed in the course of a robbery (NIOSH 1992). Further, while persons aged 65 and older in the general population have the lowest rates of being victims of homicide, this age group has the highest rates of such involvement in workplace homicides (Castillo and Jenkins 1994).

American workplaces with the highest rates of homicide are listed in table 1. Over 50% are accounted for by only two industries: retail trade and services. The latter includes taxi driving, which has nearly 40 times the average workplace homicide rate, followed by liquor/convenience stores and gas stations, prime targets for robberies, and by detective/protective services (Castillo and Jenkins 1994).

Table 1. US workplaces with the highest rates of occupational homicide, 1980-1989

| Workplaces | No. of homicides | Rate¹ |
|---|---|---|
| Taxicab establishments | 287 | 26.9 |
| Liquor stores | 115 | 8.0 |
| Gas stations | 304 | 5.6 |
| Detective/protective services | 152 | 5.0 |
| Justice/public order establishments | 640 | 3.4 |
| Grocery stores | 806 | 3.2 |
| Jewellery stores | 56 | 3.2 |
| Hotels/motels | 153 | 3.2 |
| Eating/drinking places | 754 | 1.5 |

¹ Number per 100,000 workers per year.

Source: NIOSH 1993b.
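The rates in table 1 are expressed as homicides per 100,000 workers per year. As a rough illustration of what that unit means, the Python sketch below computes such a rate and, in the other direction, the worker-years of exposure implied by a published count and rate. The function names are hypothetical, and dividing by ten assumes the count covers the ten calendar years 1980-1989; the implied workforce figure is a derived approximation, not a number reported by NIOSH.

```python
def rate_per_100k(events: int, avg_workers: float, years: float) -> float:
    """Events per 100,000 workers per year."""
    if avg_workers <= 0 or years <= 0:
        raise ValueError("avg_workers and years must be positive")
    return events / (avg_workers * years) * 100_000


def implied_worker_years(events: int, rate: float) -> float:
    """Worker-years of exposure implied by an event count and a rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return events / rate * 100_000


# Table 1 row: taxicab establishments, 287 homicides, rate 26.9.
worker_years = implied_worker_years(287, 26.9)
avg_workers = worker_years / 10  # assumption: ten years, 1980-1989
print(f"implied average workforce: about {avg_workers:,.0f}")
print(f"rate check: {rate_per_100k(287, avg_workers, 10):.1f}")
```

The same two functions can be applied to any row of the table, which makes it easier to see why a small workforce with relatively few deaths can still carry a very high rate.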

Table 2 lists the occupations with the highest rates of workplace homicides. Again, reflecting the likelihood of involvement in attempted felonies, taxi drivers head the list, followed by law-enforcement personnel, hotel clerks and workers in various types of retail establishments. Commenting on similar data from the UK, Drever (1995) noted that most of the occupations with the highest mortality from homicides had high rates of drug dependence (scaffolders, literary and artistic occupations, painters and decorators) or alcohol abuse (cooks and kitchen porters, publicans, bartenders and caterers).

Table 2. US occupations with the highest rates of occupational homicide, 1980-1989

| Occupations | No. of homicides | Rate¹ |
|---|---|---|
| Taxicab drivers/chauffeurs | 289 | 15.1 |
| Law enforcement officers | 520 | 9.3 |
| Hotel clerks | 40 | 5.1 |
| Gas station workers | 164 | 4.5 |
| Security guards | 253 | 3.6 |
| Stock handlers/baggers | 260 | 3.1 |
| Store owners/managers | 1,065 | 2.8 |
| Bartenders | 84 | 2.1 |

¹ Number per 100,000 workers per year.

Source: NIOSH 1993b.

As noted above, the vast majority of work-related homicides occur during the course of a robbery or other crime committed by a person or persons usually not known to the victim. Risk factors associated with such incidents are listed in table 3.

Table 3. Risk factors for workplace homicides

Working alone or in small numbers

Exchange of money with the public

Working late night or early morning hours

Working in high crime areas

Guarding valuable property or possessions

Working in community settings (e.g. taxi drivers and police)

Source: NIOSH 1993b.

About 4% of workplace homicides occur during confrontations with family members or acquaintances who have followed the victim into the workplace. About 21% arise out of a confrontation related to the workplace: about two-thirds of these are perpetrated by workers or former employees with a grudge against a manager or a co-worker, while angry customers or clients account for the rest (Toscano and Windau 1994). In these cases, the target may be the particular manager or worker whose actions provoked the assault or, where there is a grudge against the organization, the target may be the workplace itself, and any employees and visitors who just happen to be in it at the critical moment. Sometimes, the assailant may be emotionally disturbed, as in the case of Joseph T. Wesbecker, an employee on long-term disability leave from his employer in Louisville, Kentucky, because of mental illness, who killed eight co-workers and injured 12 others before taking his own life (Kuzmits 1990).

Causes of Violence

Current understanding of the causes and risk factors for assaultive violence is very rudimentary (Rosenberg and Mercy 1991). Clearly, it is a multifactorial problem in which each incident is shaped by the characteristics of the assailant, the characteristics of the victim(s) and the nature of the interplay between them. Reflecting such complexity, a number of theories of causation have been developed. Biological theories, for example, focus on such factors as gender (most of the assailants are male), age (involvement in violence in the community diminishes with age but, as noted above, this is not so in the workplace), and the influence of hormones such as testosterone, neurotransmitters such as serotonin, and other such biological agents. The psychological approach focuses on personality, holding that violence is engendered by deprivation of love during childhood, and childhood abuse, and is learned from role models, reinforced by rewards and punishments in early life. Sociological theories emphasize as breeders of violence such cultural and subcultural factors as poverty, discrimination and lack of economic and social equity. Finally, interactional theories converge on a sequence of actions and reactions that ultimately escalate into violence (Rosenberg and Mercy 1991).

A number of risk factors have been associated with violence. They include:

Mental illness

The vast majority of people who are violent are not mentally ill, and the vast majority of individuals with mental illness are not violent (American Psychiatric Association 1994). However, mentally disordered individuals are sometimes frightened, irritable, suspicious, excitable, or angry, or a combination of these (Bullard 1994). The resultant behaviour poses a particular risk of violence to the physicians, nurses and staff members involved in their care in ambulances, emergency departments and both inpatient and outpatient psychiatric facilities.

Certain types of mental illness are associated with a greater propensity for violence. Persons with psychopathic personalities tend to have a low threshold for anger and frustration, which often generate violent behaviour (Marks 1992), while individuals with paranoia are suspicious and prone to attack individuals or entire organizations whom they blame when things do not go as they would wish. However, violence may be exhibited by persons with other forms of mental illness. Furthermore, some mentally ill individuals are prone to episodes of acute dementia in which they may inflict violence on themselves as well as on those trying to restrain them.

Alcohol and drug abuse

Alcohol abuse has a strong association with aggressive and violent behaviour. While drunkenness on the part of either assailants or victims, or both, often results in violence, there is disagreement as to whether alcohol is the cause of the violence or merely one of a number of factors involved in its causation (Pernanen 1993). Fagan (1993) emphasized that while alcohol affects neurobiological functions, perception and cognition, it is the immediate setting in which the drinking takes place that channels the disinhibiting responses to alcohol. This was confirmed by a study in Los Angeles County which found that violent incidents were much more frequent in some bars and relatively uncommon in others where just as much drinking was taking place, and concluded that violent behaviour was not related to the amount of alcohol being consumed but, rather, to the kinds of individuals attracted to a particular drinking establishment and the kinds of unwritten rules in effect there (Scribner, MacKinnon and Dwyer 1995).

Much the same may be said for abuse of illicit drugs. Except perhaps for crack cocaine and the amphetamines, drug use is more likely to be associated with sedation and withdrawal than with aggressive, violent behaviour. Most of the violence associated with illegal drugs seems to arise not from the drugs themselves, but from the effort to obtain them or the wherewithal to purchase them, and from involvement in the illegal drug traffic.

Violence in the community

Violence in the community not only spills over into workplaces but is a particular risk factor for workers such as police and firefighters, and for postal workers and other government employees, repair and service personnel, social workers and others whose jobs take them into neighbourhoods in which violence and crime are endemic. Important factors in the frequency of violence, particularly in the United States, are the prevalence of firearms in the hands of the general public and, especially for young people, the amount of violence depicted in films and on television.

Work-Related Factors Associated with Violence

Instances of violence may occur in any and all workplaces. There are, however, certain jobs and work-related circumstances that are particularly associated with a risk of generating or being subjected to violence. They include:

Criminal activities

Perhaps the least complex of episodes of work-related violence are those associated with criminal violence, the major cause of worksite homicides. These fall into two categories: those involved with attempts at robbery or other felonies, and those related to traffic in illicit drugs. Police, security guards and other personnel with law-enforcement responsibilities face a constant risk of attack by felons attempting to enter the workplace and those resisting detection and arrest. Those working alone and field workers whose duties take them into high-crime neighbourhoods are frequent targets of robbery attempts. Health professionals making home visits to such areas are particularly at risk because they often carry drugs and drug paraphernalia such as hypodermic syringes and needles.

Dealing with the public

Workers in government and private community service agencies, banks and other institutions serving the public are frequently confronted by attacks from individuals who have been kept waiting unduly, have been greeted with disinterest and indifference (whether real or perceived), or were thwarted in obtaining the information or services they desired because of complicated bureaucratic procedures or technicalities that made them ineligible. Clerks in retail establishments receiving items being returned, workers staffing airport ticket counters when flights are overbooked, delayed or cancelled, urban bus or trolley drivers and conductors, and others who must deal with customers or clients whose wants cannot immediately be satisfied are often targets for verbal and sometimes even physical abuse. Then, there are also those who must contend with impatient and unruly crowds, such as police officers, security guards, ticket takers and ushers at popular sporting and entertainment events.

Violent attacks on government workers, particularly those in uniform, and on government buildings and offices in which workers and visitors may be indiscriminately injured or killed, may result from resentment and anger at laws and official policies which the perpetrators will not accept.

Work stress

High levels of work stress may precipitate violent behaviour, while violence in the workplace can, in turn, be a potent stressor. The elements of work stress are well known (see chapter Psychosocial and Organizational Factors). Their common denominator is a devaluation of the individual and/or the work he or she performs, resulting in fatigue, frustration and anger directed at managers and co-workers perceived to be inconsiderate, unfair and abusive. Several recent population studies have demonstrated an association between violence and job loss, one of the most potent job-related stressors (Catalano et al. 1993; Yancey et al. 1994).

Interpersonal environment in the workplace

The interpersonal environment in the workplace may be a breeding ground for violence. Discrimination and harassment, forms of violence in themselves as defined in this article, may provoke violent retaliation. For example, MSF, the British union of workers in management, science and finance, calls attention to workplace bullying (defined as persistent offensive, abusive, intimidating, malicious or insulting behaviour, abuse of power or unfair penal sanctions) as a characteristic of the management style in some organizations (MSF 1995).

Sexual harassment has been branded a form of assault on the job (SEIU 1995). It may involve unwelcome touching or patting, physical assault, suggestive remarks or other verbal abuse, staring or leering, requests for sexual favours, compromising invitations, or a work environment made offensive by pornography. It is illegal in the United States, having been declared a form of sexual discrimination under Title VII of the Civil Rights Act of 1964 when the worker feels that his or her job status depends on tolerating the advances or if the harassment creates an intimidating, hostile or offensive workplace environment.

Although women are the usual targets, men have also been sexually harassed, albeit much less frequently. In a 1980 survey of US federal employees, 42% of female respondents and 15% of males said that they had been sexually harassed on the job, and a follow-up survey in 1987 yielded similar results (SEIU 1995). In the United States, extensive media coverage of the harassment of women who had “intruded” into jobs and workplaces traditionally filled by males, and the notoriety given to the involvement of prominent political and public figures in alleged harassment, have resulted in an increase in the number of complaints received by state and federal anti-discrimination agencies and the number of civil lawsuits filed.

Working in health care and social services

In addition to the attempted robberies noted above, health care staff are often targets of violence from anxious and disturbed patients, especially in emergency and outpatient departments, where long waits and impersonal procedures are not uncommon and where anxiety and anger may boil over into verbal or physical assaults. They may also be victims of assault by family members or friends of patients who had unfavourable outcomes which they rightly or wrongly attribute to denials, delays or errors in treatment. In such instances they may attack the particular health worker(s) whom they hold responsible, or the violence may be aimed randomly at any staff member(s) of the medical facility.

Effects of Violence on the Victim

The trauma caused by physical assault varies with the nature of the attack and the weapons employed. Bruises and cuts on the hands and forearms are common when the victim has tried to defend himself or herself. Since the face and head are frequent targets, bruises and fractures of the facial bones are common; these can be traumatic psychologically because the swelling and ecchymoses are so visible and may take weeks to disappear (Mezey and Shepherd 1994).

The psychological effects may be more troublesome than the physical trauma, especially when a health worker has been assaulted by a patient. The victims may experience a loss of composure and self-confidence in their professional competence accompanied by a sense of guilt at having provoked the attack or having failed to detect that it was coming. Unfocused or directed anger may persist at the apparent rejection of their well-intended professional efforts, and there may be a persistent loss of confidence in themselves as well as a lack of trust in their co-workers and supervisors that can interfere with work performance. All this may be accompanied by insomnia, nightmares, diminished or increased appetite, increased consumption of tobacco, alcohol and/or drugs, social withdrawal and absenteeism from the job (Mezey and Shepherd 1994).

Post-traumatic stress disorder (PTSD) is a specific psychological syndrome that may develop after major disasters and instances of violent assault, not only in those directly involved in the incident but also in those who have witnessed it. While usually associated with life-threatening or fatal incidents, PTSD may occur after relatively trivial attacks that are perceived as life-threatening (Foa and Rothbaum 1992). The symptoms include re-experiencing the incident through recurrent and intrusive recollections (“flashbacks”) and nightmares, and persistent feelings of arousal and anxiety, including muscular tension, autonomic hyperactivity, loss of concentration, and exaggerated reactivity. There is often conscious or unconscious avoidance of circumstances that recall the incident. There may be a long period of disability but the symptoms usually respond to supportive psychotherapy. They can often be prevented by a post-incident debriefing conducted as soon as possible after the incident, followed, when needed, by short-term counselling (Foa and Rothbaum 1992).

After the Incident

Interventive measures to be taken immediately after the incident include:

Care of the victim

Appropriate first-aid and medical care should be provided as quickly as possible to all injured individuals. For possible medico-legal purposes (e.g., criminal or civil actions against the assailant) the injuries should be described in detail and, if possible, photographed.

Clean-up of the workplace

Any damage or debris in the workplace should be cleaned up, and any equipment that was involved should be checked to make sure that the safety and cleanliness of the workplace have been fully restored (SEIU 1995).

Post-incident debriefing

As soon as possible, all those involved in or witnessing the incident should participate in a post-incident debriefing or a “trauma-crisis counselling” session conducted by an appropriately qualified staff member or an outside consultant. This will not only provide emotional support and identify those for whom referral for one-on-one counselling may be advisable, but also enable the collection of details of exactly what has happened. Where necessary, the counselling may be supplemented by the formation of a peer support group (CAL/OSHA 1995).

Reporting

A standardized report form should be completed and submitted to the proper individual in the organization and, when appropriate, to the police in the community. A number of sample forms that may be adapted to the needs of a particular organization have been designed and published (Unison 1991; MSF 1993; SEIU 1995). Aggregating and analysing incident report forms will provide epidemiological information that may identify risk factors for violence in the particular workplace and point the way to suitable preventive interventions.
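As one possible illustration of how aggregated report forms might be analysed, the Python sketch below tallies a handful of invented incident reports by department, shift and type of incident. The `IncidentReport` fields and the `tally` helper are hypothetical and are not drawn from any of the published sample forms; a real analysis would follow the organization's own form and would need far more records before any pattern could be trusted.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentReport:
    # All field names are hypothetical; a real form would follow the
    # organization's own reporting template.
    department: str
    shift: str          # e.g. "day", "evening", "night"
    incident_type: str  # e.g. "harassment", "threat", "physical attack"


def tally(reports, field):
    """Count reports by the value of one field, most frequent first."""
    return Counter(getattr(r, field) for r in reports).most_common()


reports = [  # invented example data, for illustration only
    IncidentReport("emergency", "night", "threat"),
    IncidentReport("emergency", "night", "physical attack"),
    IncidentReport("outpatient", "day", "harassment"),
    IncidentReport("emergency", "evening", "threat"),
]

for field in ("department", "shift", "incident_type"):
    print(field, tally(reports, field))
```

Even a simple tally of this kind can suggest where and when incidents cluster, which is the sort of information a risk assessment would then examine more closely.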

Investigating the incident

Each reported incident of alleged violence, however trivial it may seem, should be investigated by a designated, properly trained individual. (Assignment for such investigations may be made by the joint labour/management safety and health committee, where one exists.) The investigation should be aimed at identifying the cause(s) of the incident, the person(s) involved, what, if any, disciplinary measures should be invoked, and what may be done to prevent recurrences. Failure to conduct an impartial and effective investigation is a signal of management’s disinterest and a lack of concern for employees’ health and welfare.

Employer support

Victims and observers of the incident should be assured that they will not be subject to discrimination or any other form of reprisal for reporting it. This is especially important when the alleged assailant is the worker’s superior.

Depending on the regulations extant in the particular jurisdiction, the nature and extent of any injuries, and the duration of any absence from work, the employee may be eligible for workers’ compensation benefits. In such cases, the appropriate claim forms should be filed promptly.

When appropriate, a report should be filed with the local law enforcement agency. When needed, the victim may be provided with legal advice on pressing charges against the assailant, and assistance in dealing with the media.

Union Involvement

A number of unions have been playing a prominent role in dealing with workplace violence, most notably those representing workers in the health care and service industries, such as the Service Employees International Union (SEIU) in the United States, and Management, Science and Finance (MSF) and Unison in the UK. Through the development of guidelines and the publication of fact sheets, bulletins and pamphlets, they have focused on the education of workers, their representatives and their employers about the importance of violence in the workplace, how to deal with it, and how to prevent it. They have acted as advocates for members who have been victims to ensure that their complaints and allegations of violence are given appropriate consideration without threats of reprisal, and that they receive all of the benefits to which they may be entitled. Unions also advocate with employers’ and trade associations and government agencies on behalf of policies, rules and regulations intended to reduce the prevalence of violence in the workplace.

Threats of Violence

All threats of violence should be taken seriously, whether aimed at particular individuals or at the organization as a whole. First, steps must be taken to protect the targeted individual(s). Then, where possible, the assailant should be identified. If that person is not in the workforce, the local law enforcement agencies should be notified. If he or she is in the organization, it may be desirable to consult a qualified mental health professional to guide the handling of the situation and/or deal directly with the assailant.

Preventive Strategies

Preventing violence in the workplace is fundamentally the employer’s responsibility. Ideally, a formal policy and programme will have been developed and implemented before victimization occurs. This is a process that should involve not only the appropriate individuals in human resources/personnel, security, legal affairs, and employee health and safety departments, but also line managers and shop stewards or other employee representatives. A number of guides for such an exercise have been published (see table 4). They are generic and are intended to be tailored to the circumstances of a particular workplace or industry. Their common denominators include:

Table 4. Guides for programmes to prevent workplace violence

| Date | Title | Source |
|---|---|---|
| 1991 | Violence in the Workplace: | Unison Health Care |
| 1993 | CAL/OSHA Guidelines for Security | Division of Occupational Safety and Health |
| 1993 | Prevention of Violence at Work: | MSF Health and Safety Office |
| 1995 | Assault on the Job: We Can Do | Service Employees International Union |
| 1995 | CAL/OSHA: Model Injury and | Division of Occupational Safety and Health |
| 1996 | Guidelines for Preventing Work- | OSHA Publications Office |

Establishing a policy

A policy explicitly outlawing discriminatory and abusive behaviour and the use of violence for dispute resolution, accompanied by specified disciplinary measures for infractions (up to and including dismissal), should be formulated and published.

Risk assessment

An inspection of the workplace, supplemented by analysis of prior incidents and/or information from employee surveys, will enable an expert to assess risk factors for violence and suggest preventive interventions. Examination of the prevailing style of management and supervision and the organization of work may disclose high levels of work stress that may precipitate violence. Study of interactions with clients, customers or patients may reveal features that may generate needless anxiety, frustration and anger, and precipitate violent reactions.

Workplace modifications to reduce crime

Guidance from police or private security experts may suggest changes in work procedures and in the layout and furnishing of the workplace that will make it a less attractive target for robbery attempts. In the United States, the Virginia Department of Criminal Justice has been using Crime Prevention Through Environmental Design (CPTED), a model approach developed by a consortium of the schools of architecture in the state that includes: changes in interior and exterior lighting and landscaping with particular attention to parking areas, stairwells and restrooms; making sales and waiting areas visible from the street; use of drop safes or time-release safes to hold cash; alarm systems, television monitors and other security equipment (Malcan 1993). CPTED has been successfully applied in convenience stores, banks (particularly in relation to automatic teller machines which may be accessed around the clock), schools and universities, and in the Washington, DC, Metro subway system.

In New York City, where robberies and killings of taxi drivers are relatively frequent compared to other large cities, the Taxi and Limousine Commission issued regulations that mandated the insertion of a transparent, bullet-resistant partition between the driver and passengers in the rear seat, a bullet-proof plate in the back of the driver’s seat, and an external distress signal light that could be turned on by the driver while remaining invisible to those inside the cab (NYC/TLC 1994). (There has been a spate of head and facial injuries among rear seat passengers who were not wearing seat belts and were thrown forward against the partition when the cab stopped suddenly.)

Where work involves interaction with customers or patients, employee safety may be enhanced by interposing barriers such as counters, desks or tables, transparent, shatter-proof partitions, and locked doors with shatter-proof windows (CAL/OSHA 1993). Furniture and equipment can be arranged to avoid entrapment of the employee and, where privacy is important, it should not be maintained at the expense of isolating the employee with a potentially aggressive or violent individual in a closed or secluded area.

Security systems

Every workplace should have a well-designed security system. Intrusion of strangers may be reduced by limiting entry to a designated reception area where visitors may have an identity check and receive ID badges indicating the areas to be visited. In some situations, it may be advisable to use metal detectors to identify visitors carrying concealed weapons.

Electronic alarm systems triggered by strategically located “panic buttons” can provide audible and/or visual signals that can alert co-workers to danger and summon help from a nearby security station. Such alarm systems may also be rigged to summon local police. However, they are of little use if guards and co-workers have not been trained to respond promptly and properly. Television monitors can not only provide protective surveillance but also record any incidents as they occur, and may help identify the perpetrator. Needless to say, such electronic systems are of little use unless they are maintained properly and tested at frequent intervals to ensure that they are in working order.

Two-way radios and cellular telephones can provide a measure of security for field personnel and those who are working alone. They also provide a means of reporting their location and, when necessary, summoning medical and other forms of assistance.

Work practice controls

Work practices should be reviewed periodically and modified to minimize the build-up of work stress. This involves attention to work schedules, work load, job content, and monitoring of work performance. Adequate staffing levels should be maintained in high-risk work areas both to discourage violent behaviour and to deal with it when it occurs. Adjustment of staffing levels to cope with peak flows of clients or patients will help to minimize irritating delays and crowding of work areas.

Staff training

Workers and supervisors should be trained to recognize rising tension and anger and in non-violent methods of defusing them. Training involving role-playing exercises will help employees to cope with overly aggressive or abusive individuals without being confrontational. In some situations, training employees in self-defence may be indicated, but there is the danger that this will breed a level of self-confidence that will lead them to delay or entirely neglect calling for available help.

Security guards, staff in psychiatric or penal institutions, and others likely to be involved with physically violent individuals should be trained to subdue and restrain them with minimal risk of injury to others or to themselves (SEIU 1995). However, according to Unison (1991), training can never be a substitute for good work organization and the provision of adequate security.

Employee assistance programmes

Employee assistance programmes (EAPs—also known as member assistance programmes, or MAPs, when provided by a union) can be particularly helpful in crisis situations by providing counselling and support to victims and witnesses of violent incidents, referring them to outside mental health professionals when needed, monitoring their progress and overseeing any protective arrangements intended to facilitate their return to work.

EAPs can also counsel employees who are overburdened by work-related problems or by those arising from life in the family and/or in the community, and whose frustration and anger might culminate in violent behaviour. When they have several such clients from a particular area of the workplace, they can (without breaching the confidentiality of personal information essential to their operation) guide managers in making desirable work modifications that will defuse the potential “powder keg” before violence erupts.

Research

Because of the seriousness and complexity of the problem and the paucity of reliable information, research is needed in the epidemiology, causation, prevention and control of violence in society in general and in the workplace. This requires a multidisciplinary effort involving, in addition to experts in occupational safety and health, mental health professionals, social workers, architects and engineers, experts in management science, lawyers, judges and experts in the criminal justice system, authorities on public policy, and others. Urgently needed are expanded and improved systems for the collection and analysis of the relevant data and the development of a consensus on a taxonomy of violence so that information and ideas can be more easily transposed from one discipline to others.

Conclusion

Violence is endemic in the workplace. Homicides are a major cause of work-related deaths, but their impact and cost are considerably outweighed by the prevalence of near misses, non-fatal physical assaults, threats, harassment, aggressive behaviour and abuse, much of which remains undocumented and unreported. Although most of the homicides and many of the assaults occur in conjunction with criminal activities, workplace violence is not just a criminal justice problem. Nor is it solely a problem for mental health professionals and specialists in addictions, although much of it is associated with mental illness, alcoholism and drug abuse. It requires a coordinated effort by experts in a broad variety of disciplines, led by occupational health and safety professionals, and aimed at developing, validating and implementing a coherent set of strategies for intervention and prevention, keeping in mind that the diversity in workers, jobs and industries dictates an ability to tailor them to the unique characteristics of a particular workforce and the organization that employs it.

Overview

New information technologies are being introduced in all industrial sectors, albeit to varying extents. In some cases, the costs of computerizing production processes may constitute an impediment to innovation, particularly in small and medium-sized companies and in developing countries. Computers make possible the rapid collection, storage, processing and dissemination of large quantities of information. Their utility is further enhanced by their integration into computer networks, which allow resources to be shared (Young 1993).

Computerization exerts significant effects on the nature of employment and on working conditions. Beginning about the mid-1980s, it was recognized that workplace computerization may lead to changes in task structure and work organization, and by extension to work requirements, career planning and stress suffered by production and management personnel. Computerization may exert positive or negative effects on occupational health and safety. In some cases, the introduction of computers has rendered work more interesting and resulted in improvements in the work environment and reductions of workload. In others, however, the result of technological innovation has been an increase in the repetitive nature and intensity of tasks, a reduction of the margin for individual initiative and the isolation of the worker. Furthermore, several companies have been reported to increase the number of work shifts in an attempt to extract the largest possible economic benefit from their financial investment (ILO 1984).